
Spark cluster environment construction

2022-08-08 16:03:00 【Star elder brother play cloud】

1. Install the JDK

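Installation environment: CentOS-7.0.1708. Installation method: self-extracting binary package. Software: jdk-6u45-linux-x64.bin. Download address: http://www.Oracle.com/technetwork/Java/javase/downloads/java-archive-downloads-javase6-419409.html

Note that Spark 2.4.5 runs on Java 8 (Scala 2.12 also needs Java 8 or later), and the spark-env.sh in step 5 points at /usr/local/jdk1.8.0_191. For the Spark cluster, install a JDK 8 package there instead and adjust the JAVA_HOME paths below accordingly.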

Step 1: Make the installer executable

chmod 775 jdk-6u45-linux-x64.bin

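Step 2: Run the JDK installer. It unpacks jdk1.6.0_45 into the current directory, so run it from the directory where the JDK should live.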

./jdk-6u45-linux-x64.bin

Step 3: Configure environment variables

There are two ways to configure the environment variables; choose one:

Method 1: Set JAVA_HOME, PATH and CLASSPATH in /etc/profile (vi /etc/profile).

This setting applies to the shells of all users, so it affects the whole system, including its security.

Add at the end of this file:

export JAVA_HOME=/usr/local/softWare/jdk1.6.0_45

export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

export PATH=$PATH:$JAVA_HOME/bin

Run source /etc/profile to make the configuration take effect immediately in the current shell.

Method 2:

Set the variables in the current user's .bashrc instead, which affects only that user:

vi ~/.bashrc

export JAVA_HOME=/usr/local/softWare/java/jdk1.6.0_45

export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

export PATH=$PATH:$JAVA_HOME/bin

After saving the file, log out with the logout command and log back in (or run source ~/.bashrc) for the change to take effect.
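Both methods come down to appending the same three export lines to a profile file. Repeating the edit leaves duplicate lines. Below is a minimal sketch, assuming bash, of a helper that appends the block only once. The function name append_java_env and the marker comment are hypothetical and not part of any tool.

```shell
#!/usr/bin/env bash
# Sketch: append the JDK exports to a profile file only once.
# append_java_env and the marker line are hypothetical names for illustration.

append_java_env() {
    local profile_file="$1"   # e.g. /etc/profile (Method 1) or ~/.bashrc (Method 2)
    local java_home="$2"      # e.g. /usr/local/softWare/jdk1.6.0_45
    local marker="# >>> java env >>>"

    # Skip if an earlier run already added the block.
    if grep -qF "$marker" "$profile_file" 2>/dev/null; then
        return 0
    fi

    # Only JAVA_HOME is substituted; the other lines are written literally.
    {
        printf '%s\n' "$marker"
        printf 'export JAVA_HOME=%s\n' "$java_home"
        printf '%s\n' 'export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar'
        printf '%s\n' 'export PATH=$PATH:$JAVA_HOME/bin'
    } >> "$profile_file"
}
```

For example, `append_java_env /etc/profile /usr/local/softWare/jdk1.6.0_45 && source /etc/profile` applies Method 1, and running it a second time changes nothing.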

Verify the installation with java -version.
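The java -version command prints the version on stderr. Older JDKs use the form 1.6.0_45 and newer ones use 11.0.2. The sketch below assumes that output format and extracts the major version, then checks it against a minimum, for example 8 for Spark 2.4.5. The helper names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: read the Java major version and compare it with a minimum.
# Assumes output such as: java version "1.6.0_45" or openjdk version "11.0.2".

# Map a version string to its major number: 1.6.0_45 -> 6, 1.8.0_191 -> 8, 11.0.2 -> 11.
java_major_version() {
    local v="$1"
    case "$v" in
        1.*) v="${v#1.}" ;;   # legacy "1.x" numbering used up to Java 8
    esac
    printf '%s\n' "${v%%[._]*}"
}

# Print the quoted version string from `java -version` (stderr merged into the pipe).
installed_java_version() {
    java -version 2>&1 | awk -F'"' '/version/ {print $2; exit}'
}

# Return non-zero if java is missing or older than the given major version.
require_java() {
    local min="$1" major
    major=$(java_major_version "$(installed_java_version)")
    if [ -z "$major" ] || [ "$major" -lt "$min" ]; then
        echo "Java $min+ required, found: ${major:-none}" >&2
        return 1
    fi
}
```

With the jdk1.6.0_45 install above first on the PATH, `require_java 8` reports "found: 6" and fails. It succeeds once a JDK 8 is first on the PATH.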

2. Install Scala

Download address: https://downloads.lightbend.com/scala/2.12.8/scala-2.12.8.tgz

Upload scala-2.12.8.tgz to /usr/local, extract it and remove the archive:

tar -zxvf scala-2.12.8.tgz
rm -rf scala-2.12.8.tgz

Configure the environment variables (vi /etc/profile):

export SCALA_HOME=/usr/local/scala-2.12.8
export PATH=$PATH:$JAVA_HOME/bin:$SCALA_HOME/bin

Copy Scala and the profile to the other nodes:

scp -r scala-2.12.8 192.168.0.109:/usr/local/
scp -r scala-2.12.8 192.168.0.110:/usr/local/
scp /etc/profile 192.168.0.109:/etc/
scp /etc/profile 192.168.0.110:/etc/

Make the environment variables take effect on every node: source /etc/profile

Verify: scala -version
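The copy steps repeat the same pair of scp commands for each node. A small loop keeps the node list in one place. The sketch below uses hypothetical helper names and a DRY_RUN switch for previewing the commands.

```shell
#!/usr/bin/env bash
# Sketch: copy a directory plus /etc/profile to each node.
# run/distribute are hypothetical helpers; DRY_RUN=1 prints commands instead of running them.

run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

# distribute SRC DEST NODE... : scp -r SRC to NODE:DEST, then /etc/profile to NODE:/etc/
distribute() {
    local src="$1" dest="$2"
    shift 2
    local node
    for node in "$@"; do
        run scp -r "$src" "$node:$dest"
        run scp /etc/profile "$node:/etc/"
    done
}
```

From /usr/local, `DRY_RUN=1 distribute scala-2.12.8 /usr/local/ 192.168.0.109 192.168.0.110` prints the four scp commands listed above. Remove DRY_RUN=1 to run them.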

3. Passwordless SSH login: see https://blog.51cto.com/13001751/2487972
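The linked article covers the details. The usual sequence is to generate a key pair on the master once, then install the public key on each node with ssh-copy-id. The minimal sketch below makes these assumptions:

- OpenSSH's ssh-keygen and ssh-copy-id are installed.
- The worker names spark2 and spark3 from step 5 serve as example hosts.
- The function name is hypothetical.
- DRY_RUN=1 prints the commands instead of running them.

```shell
#!/usr/bin/env bash
# Sketch: passwordless SSH from this machine to the given hosts.
# setup_passwordless_ssh is a hypothetical name; DRY_RUN=1 echoes each command.

setup_passwordless_ssh() {
    local key="$HOME/.ssh/id_rsa" host run=""
    [ "${DRY_RUN:-0}" = 1 ] && run=echo

    # Create an RSA key pair with an empty passphrase if none exists yet.
    if [ ! -f "$key" ]; then
        $run mkdir -p "$HOME/.ssh"
        $run chmod 700 "$HOME/.ssh"
        $run ssh-keygen -t rsa -N "" -f "$key"
    fi

    # Append the public key to ~/.ssh/authorized_keys on each host (asks for its password once).
    for host in "$@"; do
        $run ssh-copy-id -i "$key.pub" "$host"
    done
}
```

After `setup_passwordless_ssh spark2 spark3`, running `ssh -o BatchMode=yes spark2 true` should succeed. BatchMode makes ssh fail instead of prompting for a password.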

4. Install Hadoop: see https://blog.51cto.com/13001751/2487972

5. Install Spark

Upload spark-2.4.5-bin-hadoop2.7.tgz to /usr/local and extract it:

cd /usr/local/
tar -zxvf spark-2.4.5-bin-hadoop2.7.tgz
cd /usr/local/spark-2.4.5-bin-hadoop2.7/conf/ #Enter the Spark configuration directory
cp spark-env.sh.template spark-env.sh #Create spark-env.sh from the template
vi spark-env.sh #Add the following configuration

export SPARK_HOME=/usr/local/spark-2.4.5-bin-hadoop2.7
export SCALA_HOME=/usr/local/scala-2.12.8
export JAVA_HOME=/usr/local/jdk1.8.0_191
export HADOOP_HOME=/usr/local/hadoop-2.7.7
export PATH=$PATH:$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$SCALA_HOME/bin
export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop
export YARN_CONF_DIR=$HADOOP_HOME/etc/hadoop
export SPARK_MASTER_HOST=spark1 #replaces the deprecated SPARK_MASTER_IP
export SPARK_LOCAL_DIRS=/usr/local/spark-2.4.5-bin-hadoop2.7
export SPARK_DRIVER_MEMORY=1G
export SPARK_LIBRARY_PATH=.:$JAVA_HOME/lib:$JAVA_HOME/jre/lib:$HADOOP_HOME/lib/native

List the worker nodes in the slaves file (vi slaves), one host name per line:

spark2
spark3

Copy the Spark directory to the worker nodes:

scp -r /usr/local/spark-2.4.5-bin-hadoop2.7 spark2:/usr/local/
scp -r /usr/local/spark-2.4.5-bin-hadoop2.7 spark3:/usr/local/

Start the cluster from the Spark directory on spark1:

cd /usr/local/spark-2.4.5-bin-hadoop2.7
./sbin/start-all.sh

Do not run a bare start-all.sh. If $HADOOP_HOME/sbin is on your PATH (as is typical after the Hadoop setup), that name resolves to Hadoop's start-all.sh, not Spark's.
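The start-all.sh name clash can be checked directly. The sketch below does three things:

- writes conf/slaves;
- reports which start-all.sh a bare name would run;
- starts Spark through its absolute path.

It assumes SPARK_HOME is set in your shell, and the helper names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: write conf/slaves and start Spark via an absolute path,
# so the call cannot pick up Hadoop's start-all.sh from PATH.

# write_slaves CONF_DIR HOST... : one worker host name per line.
write_slaves() {
    local conf_dir="$1"
    shift
    printf '%s\n' "$@" > "$conf_dir/slaves"
}

# Show which start-all.sh the bare command name resolves to.
which_start_all() {
    command -v start-all.sh
}

# Start the Spark master and workers using Spark's own script.
start_spark_cluster() {
    "${SPARK_HOME:?SPARK_HOME is not set}/sbin/start-all.sh"
}
```

On spark1, `which_start_all` printing a path under $HADOOP_HOME/sbin confirms the clash. `SPARK_HOME=/usr/local/spark-2.4.5-bin-hadoop2.7 start_spark_cluster` then starts the master locally and a worker on each host in conf/slaves. Afterwards, jps should list Master on spark1 and Worker on spark2 and spark3.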

边栏推荐

- 18、学习MySQL ALTER命令

- json根据条件存入数据库

- ‘xxxx‘ is declared but its value is never read.Vetur(6133)

- mmdetection最新版食用教程(一):安装并运行demo及开始训练coco

- bzoj2816 [ZJOI2012]网络

- A16z:为什么 NFT 创作者要选择 cc0?

- Thread local storage ThreadLocal

- 【Unity入门计划】Unity实例-C#如何通过封装实现对数据成员的保护

- Jingdong T9 pure hand type 688 pages of god notes, SSM framework integrates Redis to build efficient Internet applications

- [Online interviewer] How to achieve deduplication and idempotency

猜你喜欢

跟我一起来学弹性云服务器ECS【华为云至简致远】

Superset 1.2.0 安装

Jingdong T9 pure hand type 688 pages of god notes, SSM framework integrates Redis to build efficient Internet applications

国产数据库的红利还能“吃”多久?

消除游戏中宝石下落的原理和实现

GPT3中文自动生成小说「谷歌小发猫写作」

pytorch安装过程中出现torch.cuda.isavailable()=False问题

LED显示屏在会议室如何应用

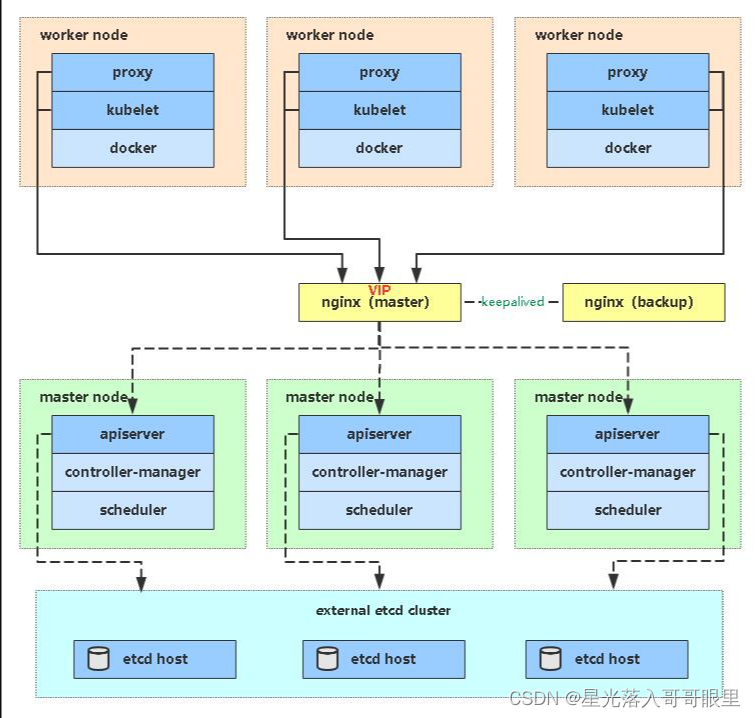

Kubernetes二进制部署高可用集群

sqllabs 1~6通关详解

随机推荐

手机注册股票开户的流程?网上开户安全?

我分析30w条数据后发现,西安新房公摊最低的竟是这里?

Kubernetes二进制部署高可用集群

Metamask插件中-添加网络和切换网络

《流浪方舟》首发重现,点我试玩

pytorch安装过程中出现torch.cuda.isavailable()=False问题

通过jenkins交付微服务到kubernetes

使用pymongo保存数据到MongoDB的工具类

本博客目录及版权申明

使用 ansible-bender 构建容器镜像

Share these new Blender plugins that designers must not miss in 2022

使用 FasterTransformer 和 Triton 推理服务器加速大型 Transformer 模型的推理

在 Fedora 上使用 SSH 端口转发

方程组解的情况与向量组相关性转化【线代碎碎念】

分布式服务治理

C#/VB.NET 将PDF转为PDF/X-1a:2001

成员变量和局部变量的区别?

sql合并连续时间段内,某字段相同的行。

大佬们,这个测试demo只能获取到全量数据,不能获取增量,我的mysql 已经开启了row模式的bi

【MATLAB项目实战】基于Morlet小波变换的滚动轴承故障特征提取研究