
Role, Types, and Design Principles of Neural Network Activation Functions

2022-04-22 06:56:00 【Indignant teasing】

One. Activation functions

The activation function plays a very important role in a neuron: it is what strengthens the network's representational and learning capacity.

1. Properties: an activation function should have the following properties:

(1) It is a continuous, nonlinear function that is differentiable (non-differentiability at a few points is allowed). A differentiable activation function lets the network parameters be learned directly with numerical optimization methods such as gradient descent.

(2) The activation function and its derivative should be as simple as possible, which improves the computational efficiency of the network.

(3) The derivative of the activation function should stay within a suitable range, neither too large nor too small; otherwise the efficiency and stability of training suffer.
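To see why property (3) matters, note that backpropagation multiplies one local derivative per layer. The toy Python sketch below assumes, for simplicity, that weights are ignored and that every layer contributes the same derivative; `sigmoid_grad` and `chained_grad` are hypothetical helper names used only for illustration.

```python
import math


def sigmoid_grad(x: float) -> float:
    """Derivative of the logistic function: sigma(x) * (1 - sigma(x))."""
    s = 1.0 / (1.0 + math.exp(-x))
    return s * (1.0 - s)


def chained_grad(local_grad: float, depth: int) -> float:
    """Gradient factor after `depth` layers that each contribute the same
    local derivative (weights deliberately ignored in this toy model)."""
    return local_grad ** depth


# Logistic derivative at its best point (0.25), a derivative of exactly 1,
# and an overly large derivative of 2, each chained through 20 layers.
for g in (sigmoid_grad(0.0), 1.0, 2.0):
    print(f"local derivative {g}: after 20 layers -> {chained_grad(g, 20):.3e}")
```

Even at its maximum of 0.25, the logistic derivative shrinks the gradient by a factor of about 10^12 over 20 layers, while a derivative of 2 inflates it by about 10^6; a derivative near 1 keeps it stable.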

2. Role: the activation function maps the output of a neuron's linear part into a different range (it can be regarded as a mapping from one domain to another). For example, the logistic function σ(x) = 1/(1 + e^(-x)) "squashes" the range of a linear function from the whole real line into the interval (0, 1), so its output can be used to express a probability.

3. Desirable characteristics: a large derivative and consistent gradients; ideally the first derivative is 1 and the second derivative is 0 almost everywhere, as in the active (positive) region of ReLU.

4. Goal: to let the network solve nonlinear problems.
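Why nonlinearity is essential: without an activation function, stacking linear layers still yields a single linear (affine) map, and no such map can reproduce, for example, the XOR pattern. The Python sketch below uses scalar "layers" and hand-picked weights (all values are illustrative assumptions, not trained parameters) to show both the collapse and a two-layer ReLU network that computes XOR.

```python
def relu(x: float) -> float:
    return x if x > 0 else 0.0


def affine(w: float, b: float):
    """A one-neuron 'layer' without activation: x -> w * x + b."""
    return lambda x: w * x + b


# Two stacked linear layers collapse into one: -3 * (2x - 1) + 0.5 = -6x + 3.5.
layer1, layer2 = affine(2.0, -1.0), affine(-3.0, 0.5)


def two_linear_layers(x: float) -> float:
    return layer2(layer1(x))


def xor_net(a: float, b: float) -> float:
    """Hand-picked weights for a tiny two-layer ReLU network computing XOR on {0, 1}.

    Hidden units: h1 = relu(a + b), h2 = relu(a + b - 1); output: h1 - 2 * h2.
    """
    h1 = relu(a + b)
    h2 = relu(a + b - 1)
    return h1 - 2 * h2


for a, b in ((0, 0), (0, 1), (1, 0), (1, 1)):
    print(a, b, xor_net(a, b))
```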

5. Types:

5.1 Sigmoid (Logistic)

Advantages: the output lies in (0, 1); the function is monotonic and continuous, and its derivative is easy to compute.

Disadvantage: when the input falls outside (-5, 5), the derivative is close to 0, which causes vanishing gradients.

Properties: 1) Its output can be read directly as a probability, which lets neural networks combine more naturally with statistical learning models. 2) It can act as a soft gate, controlling how much of the information output by other neurons is passed on.
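A minimal Python sketch of the logistic function and its derivative, illustrating both the saturation described above and the soft-gate reading. The function names and the overflow-safe branch for negative inputs are illustrative choices, not something taken from the original post.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic function 1 / (1 + exp(-x)), arranged so exp never overflows."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)  # x < 0 here, so exp(x) lies in (0, 1)
    return z / (1.0 + z)


def sigmoid_grad(x: float) -> float:
    """sigma'(x) = sigma(x) * (1 - sigma(x)); its maximum is 0.25 at x = 0."""
    s = sigmoid(x)
    return s * (1.0 - s)


def soft_gate(gate_logit: float, value: float) -> float:
    """Soft gate: let through the fraction sigma(gate_logit) of `value`."""
    return sigmoid(gate_logit) * value


for x in (0.0, 2.0, 5.0, 10.0):
    print(f"x = {x:4.1f}: sigma = {sigmoid(x):.4f}, sigma' = {sigmoid_grad(x):.6f}")
```

The derivative drops from 0.25 at x = 0 to below 0.01 at x = 5, which is the saturation behind the vanishing-gradient problem.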

5.2 Tanh

Advantages: the range is (-1, 1) and the output is zero-centered, so convergence is faster.

Disadvantage: it still suffers from vanishing gradients, because it saturates for large |x|.

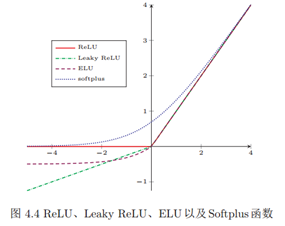
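The Python sketch below illustrates the two tanh properties above: it is a rescaled logistic function, tanh(x) = 2σ(2x) − 1, whose output is zero-centered, and its derivative 1 − tanh²(x) still vanishes for large |x|. The helper names are hypothetical, and `tanh_from_logistic` is only meant to show the identity, not to replace `math.tanh`.

```python
import math


def tanh_grad(x: float) -> float:
    """tanh'(x) = 1 - tanh(x)**2: equal to 1 at x = 0, tending to 0 as |x| grows."""
    t = math.tanh(x)
    return 1.0 - t * t


def tanh_from_logistic(x: float) -> float:
    """tanh(x) = 2 * sigma(2x) - 1, i.e. a logistic function rescaled to (-1, 1).

    For illustration only: math.exp overflows for x below about -355.
    """
    return 2.0 / (1.0 + math.exp(-2.0 * x)) - 1.0


# Zero-centered output: on a grid symmetric around 0, the outputs average to ~0.
xs = [i / 10 for i in range(-30, 31)]
mean_output = sum(math.tanh(x) for x in xs) / len(xs)
print(f"mean tanh output on [-3, 3]: {mean_output:.2e}")
print(f"tanh'(0) = {tanh_grad(0.0)}, tanh'(5) = {tanh_grad(5.0):.2e}")
```

Its derivative peaks at 1 rather than the logistic function's 0.25, one commonly cited reason for faster convergence, yet at x = 5 it has already fallen below 10^-3.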

5.3 ReLU (rectified linear unit): a ramp function, ReLU(x) = max(0, x)

Advantages: computing ReLU requires only addition, multiplication, and comparison operations, so it is computationally more efficient.

Disadvantages: the output is not zero-centered, which introduces a bias shift into the following layer and reduces the efficiency of gradient descent.

(1) Leaky ReLU: when the input x < 0, a small gradient λ is kept. A neuron that is inactive therefore still receives a non-zero gradient to update its parameters, which keeps it from never being activated again.

(2) PReLU: introduces a learnable parameter for the slope on the negative side; different neurons can have different parameters.

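(3) ELU (exponential linear unit): ELU(x) = x for x > 0 and γ(exp(x) − 1) for x ≤ 0, where γ ≥ 0 is a hyperparameter; negative inputs give negative outputs, which pushes mean activations closer to zero.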
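A minimal Python sketch of ReLU and the three variants listed above. The defaults λ = 0.01 for Leaky ReLU and γ = 1 for ELU are assumptions (common choices, not values given in the original), and the PReLU slope is passed in by hand here, whereas in a real network it would be learned by gradient descent.

```python
import math


def relu(x: float) -> float:
    """ReLU(x) = max(0, x): a single comparison, no exponentials."""
    return x if x > 0 else 0.0


def relu_grad(x: float) -> float:
    """1 for x > 0, 0 otherwise (the value at exactly 0 is a convention)."""
    return 1.0 if x > 0 else 0.0


def leaky_relu(x: float, lam: float = 0.01) -> float:
    """Leaky ReLU: slope `lam` for x <= 0 (0.01 is an assumed default)."""
    return x if x > 0 else lam * x


def leaky_relu_grad(x: float, lam: float = 0.01) -> float:
    return 1.0 if x > 0 else lam


def prelu(x: float, slope: float) -> float:
    """PReLU: same form as Leaky ReLU, but `slope` is learned (one per neuron)."""
    return x if x > 0 else slope * x


def elu(x: float, gamma: float = 1.0) -> float:
    """ELU: x for x > 0, gamma * (exp(x) - 1) otherwise; expm1 keeps small x accurate."""
    return x if x > 0 else gamma * math.expm1(x)


# A neuron whose pre-activation is negative: ReLU passes back no gradient at all,
# while Leaky ReLU still passes back a small one.
pre_activation = -2.0
print("ReLU grad:", relu_grad(pre_activation))
print("Leaky ReLU grad:", leaky_relu_grad(pre_activation))
print("ELU output:", elu(pre_activation))
```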

5.4 Softplus

Advantages: one-sided suppression and a wide excitation range.

Disadvantage: it does not produce sparse activations.
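Softplus, softplus(x) = log(1 + e^x), is a smooth approximation of ReLU. The Python sketch below (the overflow-safe rearrangement is an implementation choice, not part of the original post) also shows that its derivative is the logistic function and that it stays strictly positive for negative inputs, hence no sparse activation.

```python
import math


def softplus(x: float) -> float:
    """softplus(x) = log(1 + exp(x)), rearranged so exp never overflows."""
    if x > 0:
        # log(1 + e^x) = x + log(1 + e^-x); log1p stays accurate for tiny arguments.
        return x + math.log1p(math.exp(-x))
    return math.log1p(math.exp(x))


def softplus_grad(x: float) -> float:
    """The derivative of softplus is the logistic function sigma(x)."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


# Unlike ReLU, softplus never outputs exactly 0 for moderate negative inputs,
# which is why it does not give sparse activations.
for x in (-5.0, 0.0, 5.0):
    print(f"x = {x:4.1f}: softplus = {softplus(x):.4f}, ReLU = {max(0.0, x):.4f}")
```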

5.5 Swish: a self-gated activation function

swish(x) = x · σ(βx)

where σ(·) is the logistic function and β is either a learnable parameter or a fixed hyperparameter.

The Swish function can be regarded as a nonlinear interpolation between a linear function and the ReLU function, with the degree controlled by β: when β = 0, swish(x) = x/2, which is linear; as β → ∞, σ(βx) approaches a 0/1 step and Swish approaches ReLU.
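A minimal Python sketch of Swish showing the interpolation controlled by β. The sample inputs and β values are arbitrary, and the overflow-safe logistic helper is an implementation detail chosen here.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic function, arranged so exp never overflows."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def swish(x: float, beta: float = 1.0) -> float:
    """swish(x) = x * sigma(beta * x); sigma acts as a gate computed from x itself."""
    return x * sigmoid(beta * x)


def relu(x: float) -> float:
    return x if x > 0 else 0.0


# beta = 0 gives the linear function x / 2; a large beta approaches ReLU.
for beta in (0.0, 1.0, 100.0):
    outputs = [round(swish(x, beta), 4) for x in (-2.0, -0.5, 0.5, 2.0)]
    print(f"beta = {beta:5.1f}: {outputs}")
```

Note that for β = 1 the function is not monotonic: it dips slightly below zero, with a minimum near x ≈ -1.28, before rising again.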

6. Summary

Points to consider when designing an activation function:

(1) The activation function should be monotonic and continuous over its input domain.

(2) The output should preferably be zero-centered, which speeds up convergence.

(3) The output should preferably not saturate, which helps avoid vanishing gradients.

(4) The function should be easy to differentiate; ideally the first derivative is 1 and the second derivative is 0 almost everywhere.
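Criteria (1) to (3) can be probed numerically for a candidate function. The Python sketch below is a rough illustration only: the grid [-5, 5], the test points x = ±10, and the helper names `probe` and `numeric_grad` are arbitrary choices made here, not a standard procedure.

```python
import math


def sigmoid(x: float) -> float:
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def relu(x: float) -> float:
    return x if x > 0 else 0.0


def numeric_grad(f, x: float, h: float = 1e-5) -> float:
    """Central finite difference; accurate enough for a rough comparison."""
    return (f(x + h) - f(x - h)) / (2.0 * h)


def probe(f) -> dict:
    """Rough numerical check of criteria (1)-(3) on the interval [-5, 5]."""
    xs = [i / 10 for i in range(-50, 51)]
    ys = [f(x) for x in xs]
    return {
        "monotone": all(a <= b for a, b in zip(ys, ys[1:])),  # criterion (1)
        "mean_output": sum(ys) / len(ys),  # criterion (2): ~0 if zero-centered
        "grad_at_-10": numeric_grad(f, -10.0),  # criterion (3): ~0 means saturated
        "grad_at_+10": numeric_grad(f, 10.0),
    }


for name, f in (("sigmoid", sigmoid), ("tanh", math.tanh), ("ReLU", relu)):
    r = probe(f)
    print(f"{name:8s} monotone={r['monotone']}  mean={r['mean_output']:+.3f}  "
          f"grad(-10)={r['grad_at_-10']:.1e}  grad(+10)={r['grad_at_+10']:.1e}")
```

Run on these three functions, it reflects the trade-offs discussed above: the logistic function is neither zero-centered nor free of saturation, tanh is zero-centered but still saturates, and ReLU does not saturate for positive inputs but has zero gradient for negative ones and a positive mean output.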

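Copyright notice

This article is an original work by [Indignant teasing]. Please include a link to the original article when reposting. Thank you.

https://yzsam.com/2022/04/202204220600162619.html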

边栏推荐

- Anaconda配置深度学习环境(yolo为例)

- 好用的流布局

- Lachnospira, the core genus of intestinal bacteria

- 手把手教你腾讯云搭建RUOYI系统

- 将遥感数据集中的超大图像标注切分成指定尺寸保存成COCO数据集-目标检测

- The app enters for the first time and pops up the service agreement and privacy policy

- ACMer中的大神都很喜欢数学(题目方法的研究)

- 【AI视野·今日Robot 机器人论文速览 第二十八期】Wed, 1 Dec 2021

- win10完美安装cuda11.x + pytorch 1.9 (血流成河贴┭┮﹏┭┮)让你的torch.cuda.is_available()变成True!

- 遇到数学公式中不认识的符号怎么办

猜你喜欢

随机推荐

Daily question - find the maximum monotonic increasing number less than the target number

升级版微生物16s测序报告|解读

2021-09-03 爬虫模板(只支持静态页面)

ROS系列(三):ROS架构简介

ROS系列(一):ROS快速安装

Use of Excel IFS function

ROS系列(二):ROS快速体验,以HelloWorld程序为例

将遥感数据集中的超大图像标注切分成指定尺寸保存成COCO数据集-目标检测

ACMer中的大神都很喜欢数学(题目方法的研究)

图片合成视频

使用stream load向doris写数据的案例

Flink的安装部署及WordCount测试

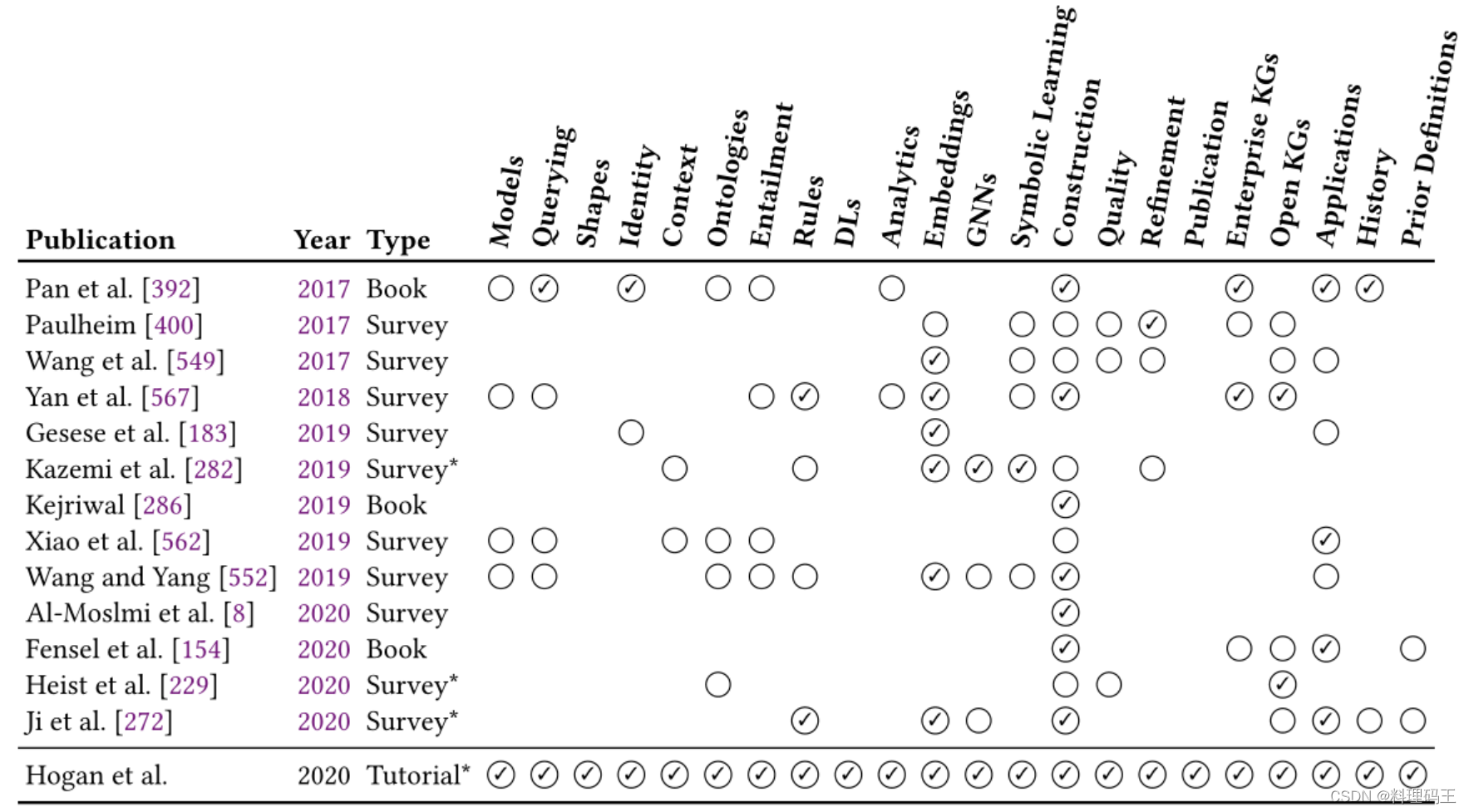

知识图谱综述(二)

现在是用AI给工业视觉检测赋能最好的时代

知识图谱综述(一)

卷积神经网络基础知识

【数学建模】我的数模记忆

【AI视野·今日Sound 声学论文速览 第二期】Fri, 15 Apr 2022

剑就是剑,木剑铜剑没有差别

计数排序(C语言实现)------学习笔记