Crawler example: scraping the ranking of Chinese universities

2022-04-21 14:04:00 【ddy-ddy】

Steps:

1. Use the requests library to fetch the web page

2. Use BeautifulSoup to extract the key data from the HTML and store it in a list

3. Print the data in aligned columns
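Step 2 can be tried on its own, without network access. The following is a minimal sketch, assuming a made-up HTML fragment: `SAMPLE_HTML`, its school names and scores, and the helper name `parse_rows` are all hypothetical, and the layout of the real ranking page may differ. It shows why the type check on each child is needed: `.children` yields the whitespace between tags as well as the `<tr>` tags themselves.

```python
import bs4
from bs4 import BeautifulSoup

# Hypothetical sample markup for illustration; not taken from the real page.
SAMPLE_HTML = """
<table>
  <tbody>
    <tr><td>1</td><td>University A</td><td>Province X</td><td>94.6</td></tr>
    <tr><td>2</td><td>University B</td><td>Province Y</td><td>76.5</td></tr>
  </tbody>
</table>
"""


def parse_rows(html):
    """Return the first four cells of every table row as a list of strings."""
    soup = BeautifulSoup(html, "html.parser")
    rows = []
    for child in soup.find("tbody").children:
        # Whitespace between tags shows up as NavigableString, not Tag.
        if isinstance(child, bs4.element.Tag):
            tds = child("td")  # calling a tag is shorthand for find_all()
            rows.append([td.get_text(strip=True) for td in tds[:4]])
    return rows


rows = parse_rows(SAMPLE_HTML)
for row in rows:
    print(row)
```

Each row comes back as a plain list of strings, which is the shape the printing step expects.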

import requests
import bs4
from bs4 import BeautifulSoup


def getData(url):  # Fetch the page and return its HTML text
    try:
        r = requests.get(url, timeout=30)
        r.raise_for_status()
        r.encoding = r.apparent_encoding  # guess the encoding from the content
        return r.text
    except requests.RequestException:
        print("Crawling failed")
        return ""  # empty string, so the parsing step does not receive None


def settleData(html, datalist):  # Extract the key data from the HTML into datalist
    soup = BeautifulSoup(html, 'html.parser')
    tbody = soup.find('tbody')
    if tbody is None:  # the request failed or the page layout changed
        return
    for tr in tbody.children:  # iterate over the direct children of <tbody>
        if isinstance(tr, bs4.element.Tag):  # skip whitespace text nodes
            tds = tr('td')  # shorthand for tr.find_all('td')
            if len(tds) >= 4:
                # get_text() always returns a str, whereas .string can be None
                datalist.append([td.get_text(strip=True) for td in tds[:4]])


def printData(datalist, num):  # Print the first num rows
    tplt = "{0:{4}^10}\t{1:{4}^10}\t{2:{4}^10}\t{3:^10}"
    # chr(12288) is the full-width space; using it as the fill character
    # keeps columns of Chinese text aligned
    print(tplt.format("Rank", "School name", "Location", "Total score", chr(12288)))
    for u in datalist[:num]:  # slicing avoids an IndexError on short lists
        print(tplt.format(u[0], u[1], u[2], u[3], chr(12288)))


def main():
    uinfo = []
    url = 'http://www.zuihaodaxue.cn/zuihaodaxuepaiming2019.html'
    html = getData(url)
    settleData(html, uinfo)
    printData(uinfo, 30)


def search(string):  # Print the rank and name of the school called string
    uinfo = []
    url = 'http://www.zuihaodaxue.cn/zuihaodaxuepaiming2019.html'
    html = getData(url)
    settleData(html, uinfo)
    for u in uinfo:
        if u[1] == string:
            print(u[0], u[1])


main()
search("Jiangxi Normal University")  # must match the name exactly as it appears on the page

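Running the script prints the first 30 rows of the ranking as an aligned table, followed by the rank and name of the school passed to search.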
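The fill character used in printData deserves a short illustration. Python's format padding counts characters, not display width, and most monospaced terminals draw a Chinese character roughly twice as wide as an ASCII space, so padding Chinese text with ordinary spaces tends to leave columns misaligned. Padding with chr(12288), the full-width space U+3000, keeps every padding character about as wide as the text it surrounds. The sketch below is a standalone example; the helper name `center` and the sample string are assumptions made for illustration and do not appear in the script.

```python
FULLWIDTH_SPACE = chr(12288)  # U+3000, IDEOGRAPHIC SPACE


def center(text, width, fill):
    """Center text in a field of `width` characters, padded with `fill`."""
    # The nested fields {1} and {2} supply the fill character and the width,
    # the same mechanism as {0:{4}^10} in the script's template.
    return "{0:{1}^{2}}".format(text, fill, width)


sample = "清华大学"  # sample text only; any Chinese string behaves the same way
ascii_padded = center(sample, 10, " ")
wide_padded = center(sample, 10, FULLWIDTH_SPACE)

# Both results contain 10 characters, but on screen the first is narrower
# because its padding is made of half-width spaces.
print(repr(ascii_padded), len(ascii_padded))
print(repr(wide_padded), len(wide_padded))
```

The last column of the template (`{3:^10}`) holds the numeric score, so the default ASCII space is enough there.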

Copyright notice

This article was written by [ddy-ddy]. Please include a link to the original when reposting. Thank you.

https://yzsam.com/2022/04/202204211351090516.html

边栏推荐

猜你喜欢

Zabbix5系列-接入Grafana面板 (十七)

Zabbix5 series - nail alarm (XV)

The importance of computing edge in Networkx: edge intermediate number or intermediate centrality edge_ betweenness

求导法则 高阶导数

Shandong University project training raspberry pie promotion plan phase II (III) SSH Remote Connection

让别人连接自己的mysql数据库,共享mysql数据库

Emergency response notes

< 2021SC@SDUSC > Application and practice of software engineering in Shandong University jpress code analysis (6)

C语言选择和循环经典习题

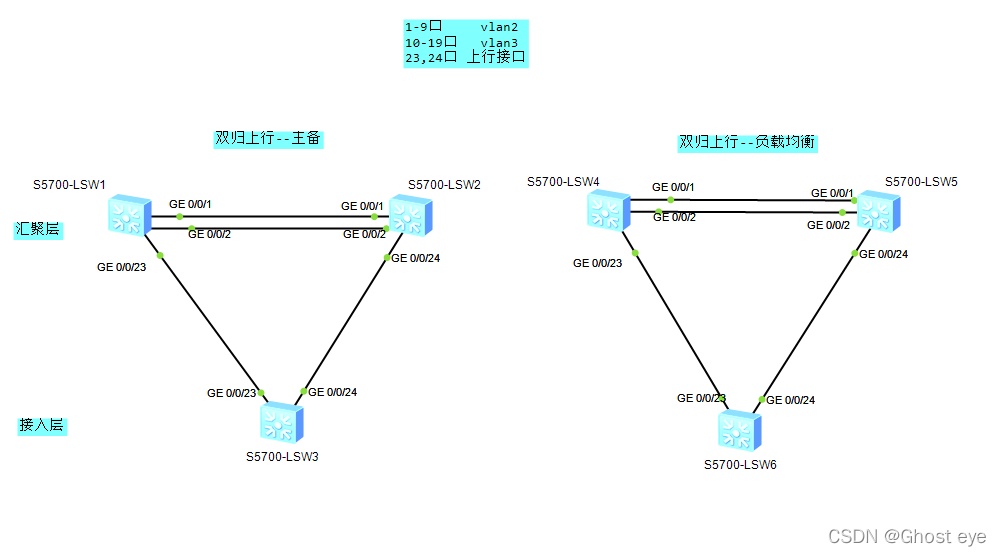

Dual homing uplink - active / standby / load

随机推荐

C语言实现扫雷

redis集群搭建管理入门

多线程之手动实现锁

Impala common commands (continuous updating)

ceph搭建基本使用

MYSQL搭建MHA集群主从竞主

CognitiveComputationalNeuroscienceonlineReadingClub第三季成员招募

微积分之微分

Pits to be avoided when Networkx and pyg calculate degree degree: self loop and multiple edges

Why should sparse adjacency matrix be written in transposed form adj in pytorch geometric_ t

1 ActiveMQ介绍与安装

应急响应笔记

RHCE builds a simple web site

MarkDown格式

基于data文件夹恢复mysql数据库

iscsi

基于word2vec的k-means聚类

Zabbix5 series - monitoring HP server ILO management port (6)

Zabbix5系列-制作拓扑图 (十三)

Ceph维护命令了解