
Handling imbalanced data in Keras with class weight and sample weight

2022-08-09 14:55:00 【yisun123456】

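In machine learning and deep learning, positive and negative samples are often imbalanced, and severely so in scenarios such as advertising and push notifications. The conventional remedies are oversampling and undersampling.

This post introduces two Keras parameters that address the same problem: class_weight and sample_weight.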

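1. class_weight assigns a weight to each class in the training set. A majority class with many samples can be given a low weight, and a minority class a high weight.

2. sample_weight assigns a weight to each individual sample. The idea is the same: samples from the more frequent class get lower weights.

For example: model.fit(class_weight={0: 1., 1: 100.0}, ...). Where the installed Keras version supports it, this can also be written as model.fit(class_weight='auto', ...).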
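The weighting idea above can be sketched without Keras. The snippet below is a minimal illustration, not part of the original post: it derives class weights inversely proportional to class frequency using the common "balanced" heuristic `n_samples / (n_classes * class_count)`, then expands them into one weight per sample. The helper names and the toy labels are assumptions made up for this example.

```python
from collections import Counter


def balanced_class_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n_samples = len(labels)
    n_classes = len(counts)
    return {cls: n_samples / (n_classes * cnt) for cls, cnt in counts.items()}


def to_sample_weights(labels, class_weight):
    """Expand a class -> weight dict into one weight per sample."""
    return [class_weight[label] for label in labels]


# Toy labels (made up): 95 negatives and 5 positives.
labels = [0] * 95 + [1] * 5
class_weight = balanced_class_weights(labels)
sample_weight = to_sample_weights(labels, class_weight)

print(class_weight)

# With a compiled Keras model (hypothetical `model`, `x`, `y`), either call
# would apply the weighting during training:
#   model.fit(x, y, class_weight=class_weight)
#   model.fit(x, y, sample_weight=np.asarray(sample_weight))
```

With this heuristic the minority class receives the larger weight, and the weights sum to the number of samples, so the overall scale of the loss stays close to the unweighted case.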

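Points to note:

1. Using class_weight changes the range of the loss, which may affect training stability. Optimizers whose step size depends on the gradient magnitude (plain SGD, for example) can run into problems, whereas optimizers such as Adam are unaffected. Also, the loss of a model trained with class_weight cannot be compared directly with the loss of a model trained without it.

2. Passing 'auto' is meant to let Keras determine the weights automatically. Note, however, that the fit docstring quoted below documents class_weight only as a dictionary, so check whether your Keras version accepts 'auto' before relying on it.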
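To make the first note concrete, here is a small sketch, assuming the loss is a binary cross-entropy averaged over samples with each per-sample loss multiplied by its class weight. The function name, labels, and predicted probabilities are all made up for illustration; this is not Keras's own implementation.

```python
import math


def mean_weighted_bce(y_true, y_prob, class_weight=None):
    """Mean binary cross-entropy, each sample's loss scaled by its class weight."""
    total = 0.0
    for y, p in zip(y_true, y_prob):
        loss = -(y * math.log(p) + (1 - y) * math.log(1 - p))
        weight = 1.0 if class_weight is None else class_weight[y]
        total += weight * loss
    return total / len(y_true)


y_true = [0, 0, 0, 1]
y_prob = [0.1, 0.2, 0.1, 0.3]  # made-up predicted probabilities of class 1

plain = mean_weighted_bce(y_true, y_prob)
weighted = mean_weighted_bce(y_true, y_prob, {0: 1.0, 1: 100.0})
print(plain, weighted)
```

The predictions are identical in both calls, yet the weighted loss is far larger because the single positive sample counts 100 times. The gradients scale the same way, which is why the two loss values are not comparable and why an optimizer sensitive to gradient magnitude may need a smaller learning rate.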

Below is an excerpt of the code: the signature and docstring of fit.

    def fit(self,
            x=None,
            y=None,
            batch_size=None,
            epochs=1,
            verbose=1,
            callbacks=None,
            validation_split=0.,
            validation_data=None,
            shuffle=True,
            class_weight=None,
            sample_weight=None,
            initial_epoch=0,
            steps_per_epoch=None,
            validation_steps=None,
            validation_freq=1,
            max_queue_size=10,
            workers=1,
            use_multiprocessing=False,
            **kwargs):
        """Trains the model for a fixed number of epochs (iterations on a dataset).

        Arguments:
            x: Input data. It could be:
              - A Numpy array (or array-like), or a list of arrays
                (in case the model has multiple inputs).
              - A TensorFlow tensor, or a list of tensors
                (in case the model has multiple inputs).
              - A dict mapping input names to the corresponding array/tensors,
                if the model has named inputs.
              - A `tf.data` dataset. Should return a tuple
                of either `(inputs, targets)` or
                `(inputs, targets, sample_weights)`.
              - A generator or `keras.utils.Sequence` returning `(inputs, targets)`
                or `(inputs, targets, sample weights)`.
            y: Target data. Like the input data `x`,
              it could be either Numpy array(s) or TensorFlow tensor(s).
              It should be consistent with `x` (you cannot have Numpy inputs and
              tensor targets, or inversely). If `x` is a dataset, generator,
              or `keras.utils.Sequence` instance, `y` should
              not be specified (since targets will be obtained from `x`).
            batch_size: Integer or `None`.
                Number of samples per gradient update.
                If unspecified, `batch_size` will default to 32.
                Do not specify the `batch_size` if your data is in the
                form of symbolic tensors, datasets,
                generators, or `keras.utils.Sequence` instances (since they generate
                batches).
            epochs: Integer. Number of epochs to train the model.
                An epoch is an iteration over the entire `x` and `y`
                data provided.
                Note that in conjunction with `initial_epoch`,
                `epochs` is to be understood as "final epoch".
                The model is not trained for a number of iterations
                given by `epochs`, but merely until the epoch
                of index `epochs` is reached.
            verbose: 0, 1, or 2. Verbosity mode.
                0 = silent, 1 = progress bar, 2 = one line per epoch.
                Note that the progress bar is not particularly useful when
                logged to a file, so verbose=2 is recommended when not running
                interactively (eg, in a production environment).
            callbacks: List of `keras.callbacks.Callback` instances.
                List of callbacks to apply during training.
                See `tf.keras.callbacks`.
            validation_split: Float between 0 and 1.
                Fraction of the training data to be used as validation data.
                The model will set apart this fraction of the training data,
                will not train on it, and will evaluate
                the loss and any model metrics
                on this data at the end of each epoch.
                The validation data is selected from the last samples
                in the `x` and `y` data provided, before shuffling. This argument is
                not supported when `x` is a dataset, generator or
                `keras.utils.Sequence` instance.
            validation_data: Data on which to evaluate
                the loss and any model metrics at the end of each epoch.
                The model will not be trained on this data.
                `validation_data` will override `validation_split`.
                `validation_data` could be:
                  - tuple `(x_val, y_val)` of Numpy arrays or tensors
                  - tuple `(x_val, y_val, val_sample_weights)` of Numpy arrays
                  - dataset
                For the first two cases, `batch_size` must be provided.
                For the last case, `validation_steps` must be provided.
            shuffle: Boolean (whether to shuffle the training data
                before each epoch) or str (for 'batch').
                'batch' is a special option for dealing with the
                limitations of HDF5 data; it shuffles in batch-sized chunks.
                Has no effect when `steps_per_epoch` is not `None`.
            class_weight: Optional dictionary mapping class indices (integers)
                to a weight (float) value, used for weighting the loss function
                (during training only).
                This can be useful to tell the model to
                "pay more attention" to samples from
                an under-represented class.
            sample_weight: Optional Numpy array of weights for
                the training samples, used for weighting the loss function
                (during training only). You can either pass a flat (1D)
                Numpy array with the same length as the input samples
                (1:1 mapping between weights and samples),
                or in the case of temporal data,
                you can pass a 2D array with shape
                `(samples, sequence_length)`,
                to apply a different weight to every timestep of every sample.
                In this case you should make sure to specify
                `sample_weight_mode="temporal"` in `compile()`. This argument is not
                supported when `x` is a dataset, generator, or
                `keras.utils.Sequence` instance, instead provide the sample_weights
                as the third element of `x`.
            initial_epoch: Integer.
                Epoch at which to start training
                (useful for resuming a previous training run).
            steps_per_epoch: Integer or `None`.
                Total number of steps (batches of samples)
                before declaring one epoch finished and starting the
                next epoch. When training with input tensors such as
                TensorFlow data tensors, the default `None` is equal to
                the number of samples in your dataset divided by
                the batch size, or 1 if that cannot be determined. If x is a
                `tf.data` dataset, and 'steps_per_epoch'
                is None, the epoch will run until the input dataset is exhausted.
                This argument is not supported with array inputs.
            validation_steps: Only relevant if `validation_data` is provided and
                is a `tf.data` dataset. Total number of steps (batches of
                samples) to draw before stopping when performing validation
                at the end of every epoch. If validation_data is a `tf.data` dataset
                and 'validation_steps' is None, validation
                will run until the `validation_data` dataset is exhausted.
            validation_freq: Only relevant if validation data is provided. Integer
                or `collections_abc.Container` instance (e.g. list, tuple, etc.).
                If an integer, specifies how many training epochs to run before a
                new validation run is performed, e.g. `validation_freq=2` runs
                validation every 2 epochs. If a Container, specifies the epochs on
                which to run validation, e.g. `validation_freq=[1, 2, 10]` runs
                validation at the end of the 1st, 2nd, and 10th epochs.
            max_queue_size: Integer. Used for generator or `keras.utils.Sequence`
                input only. Maximum size for the generator queue.
                If unspecified, `max_queue_size` will default to 10.
            workers: Integer. Used for generator or `keras.utils.Sequence` input
                only. Maximum number of processes to spin up
                when using process-based threading. If unspecified, `workers`
                will default to 1. If 0, will execute the generator on the main
                thread.
            use_multiprocessing: Boolean. Used for generator or
                `keras.utils.Sequence` input only. If `True`, use process-based
                threading. If unspecified, `use_multiprocessing` will default to
                `False`. Note that because this implementation relies on
                multiprocessing, you should not pass non-picklable arguments to
                the generator as they can't be passed easily to children processes.
            **kwargs: Used for backwards compatibility.

        Returns:
            A `History` object. Its `History.history` attribute is
            a record of training loss values and metrics values
            at successive epochs, as well as validation loss values
            and validation metrics values (if applicable).

        Raises:
            RuntimeError: If the model was never compiled.
            ValueError: In case of mismatch between the provided input data
                and what the model expects.
        """

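The docstring notes that `sample_weight` is not supported when `x` is a dataset, generator, or `keras.utils.Sequence`, and that the weights should instead be supplied as the third element of what `x` yields. A minimal sketch of such a generator follows, assuming integer class labels and NumPy arrays. The function name `weighted_batches` and the toy data are hypothetical, and the generator makes a single pass for brevity, whereas a generator used for several epochs usually needs to keep yielding batches.

```python
import numpy as np


def weighted_batches(x, y, class_weight, batch_size):
    """Yield (inputs, targets, sample_weights) batches in order, one pass over the data."""
    for start in range(0, len(x), batch_size):
        xb = x[start:start + batch_size]
        yb = y[start:start + batch_size]
        # Look up each label's class weight to build the per-sample weights.
        wb = np.array([class_weight[int(label)] for label in yb], dtype="float32")
        yield xb, yb, wb


# Toy data (made up): 6 samples with 3 features, 2 of them positive.
x = np.zeros((6, 3), dtype="float32")
y = np.array([0, 0, 1, 0, 0, 1])

batches = list(weighted_batches(x, y, {0: 1.0, 1: 100.0}, batch_size=4))
for xb, yb, wb in batches:
    print(xb.shape, yb.tolist(), wb.tolist())
```

A generator like this would be passed as `x` to `fit` on a compiled model, with `y`, `sample_weight`, and `batch_size` left unset, as the docstring describes.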
- 自定义指令,实现默认头像和用户上传头像的切换

- Linux安装mysql8.0详细步骤--(快速安装好)

- 量子力学初步

- 对导入的 excel 的时间的处理 将excel表中的时间,转成 标准的时间

- stream去重相同属性对象

- How to List < Map> grouping numerical merge sort

- At the beginning of the C language order 】 【 o least common multiple of three methods

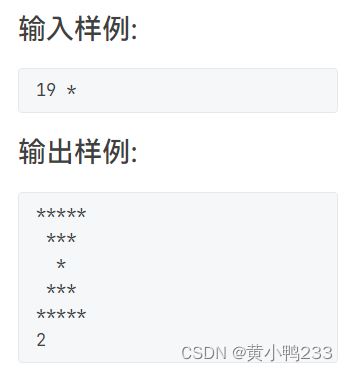

- PAT1027 Printing Hourglass

- Qt control - QTextEdit usage record

- 工作不等于生活,但生活离不开工作 | 2022 年中总结

猜你喜欢

随机推荐

Qt control - QTextEdit usage record

6大论坛,30+技术干货议题,2022首届阿里巴巴开源开放周来了!

Sort method (Hill, Quick, Heap)

Analysis of the common methods and scopes of the three servlet containers

排序方法(希尔、快速、堆)

Server运维:设置.htaccess按IP和UA禁止访问

How do quantitative investors obtain real-time market data?

几何光学简介

WebGL:BabylonJS入门——初探:注入活力

如何防止浏览器指纹关联

关于亚马逊测评你了解多少?

.Net Core动态注入

How to use and execute quantitative programmatic trading?

个人域名备案详细流程(图文并茂)

Mathematica 数据分析(简明)

【小白必看】初始C语言(下)

Stock trading stylized how to understand their own trading system?

如何保证电脑硬盘格式化后数据不能被恢复?

工作不等于生活,但生活离不开工作 | 2022 年中总结

Example of file operations - downloading and merging streaming video files