Apache SeaTunnel 2.1.0: deployment and pitfalls

2022-04-23 13:42:00 【Ruo Xiaoyu】

Introduction

SeaTunnel was originally named Waterdrop; it was renamed SeaTunnel on October 12, 2021.

SeaTunnel is an easy-to-use, high-performance distributed data integration platform that supports real-time synchronization of massive data. It can synchronize tens of billions of records per day stably and efficiently, and it is used in production at nearly 100 companies.

Features

- Easy to use, flexible configuration, low-code development

- Real-time stream processing

- Offline multi-source data analysis

- High performance and massive data processing capability

- Modular, plugin-based design that is easy to extend

- Supports data processing and aggregation with SQL

- Supports Spark Structured Streaming

- Supports Spark 2.x

- We hit a pitfall here: our test Spark environment had already been upgraded to 3.x, but SeaTunnel currently supports only 2.x, so we had to deploy a separate Spark 2.x installation.
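The Spark version constraint above can be checked before deploying. The following is a minimal sketch, not part of SeaTunnel: `is_spark_2x` is a hypothetical helper name, and the version string passed to it is assumed to be read by hand from the output of `spark-submit --version`.

```shell
# Hypothetical helper (not part of SeaTunnel): succeed only when the given
# Spark version string starts with "2.", for example "2.4.8".
is_spark_2x() {
  case "$1" in
    2.*) return 0 ;;
    *)   return 1 ;;
  esac
}

# Example usage; the version value is an assumption for illustration:
# is_spark_2x "2.4.8" && echo "Spark 2.x detected"
```

Checking the version up front is cheaper than finding out at job submission time that the cluster's Spark is 3.x.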

Workflow

Installation

Installation documentation

https://seatunnel.incubator.apache.org/docs/2.1.0/spark/installation

- Environment preparation: install the JDK and Spark

- Download the installation package

- https://www.apache.org/dyn/closer.lua/incubator/seatunnel/2.1.0/apache-seatunnel-incubating-2.1.0-bin.tar.gz

- Extract the package and edit config/seatunnel-env.sh

- Set the required environment variables, for example SPARK_HOME (the directory where Spark was downloaded and extracted)
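The last two steps can be sketched in shell. This is only an illustration under stated assumptions: `set_spark_home` is a hypothetical helper, the env file is assumed to assign `SPARK_HOME` on a line beginning with `SPARK_HOME=`, and the extracted directory name and the Spark path in the usage comment are guesses that may differ on your machine.

```shell
# Hypothetical helper (not part of SeaTunnel): point SPARK_HOME in an env
# file at the given Spark directory, leaving all other lines untouched.
set_spark_home() {
  env_file=$1
  spark_dir=$2
  tmp_file="${env_file}.tmp"
  # Drop any existing SPARK_HOME assignment, then append the new one.
  grep -v '^SPARK_HOME=' "$env_file" > "$tmp_file" || true
  printf 'SPARK_HOME=%s\n' "$spark_dir" >> "$tmp_file"
  # Write back in place so the original file permissions are preserved.
  cat "$tmp_file" > "$env_file"
  rm -f "$tmp_file"
}

# Example usage; directory names and paths are assumptions:
# tar -xzf apache-seatunnel-incubating-2.1.0-bin.tar.gz
# set_spark_home apache-seatunnel-incubating-2.1.0/config/seatunnel-env.sh /opt/spark-2.4.8
```

Editing the file by hand works just as well; the helper only makes the step repeatable.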

1. Test jdbc-to-jdbc

- Create a new config/spark.batch.jdbc.to.jdbc.conf file

    env {
      # seatunnel defined streaming batch duration in seconds
      spark.app.name = "SeaTunnel"
      spark.executor.instances = 1
      spark.executor.cores = 1
      spark.executor.memory = "1g"
    }

    source {
      jdbc {
        driver = "com.mysql.jdbc.Driver"
        url = "jdbc:mysql://0.0.0.0:3306/database?useUnicode=true&characterEncoding=utf8&useSSL=false"
        table = "table_name"
        result_table_name = "result_table_name"
        user = "root"
        password = "password"
      }
    }

    transform {
      # split data by specific delimiter

      # you can also use other filter plugins, such as sql
      # sql {
      #   sql = "select * from accesslog where request_time > 1000"
      # }

      # If you would like to get more information about how to configure seatunnel and see full list of filter plugins,
      # please go to https://seatunnel.apache.org/docs/spark/configuration/transform-plugins/Sql
    }

    sink {
      # choose stdout output plugin to output data to console
      # Console {}

      jdbc {
        # The driver parameter must be set here; otherwise the job fails and no data is written
        driver = "com.mysql.jdbc.Driver",
        saveMode = "update",
        url = "jdbc:mysql://ip:3306/database?useUnicode=true&characterEncoding=utf8&useSSL=false",
        user = "userName",
        password = "***********",
        dbTable = "tableName",
        customUpdateStmt = "INSERT INTO table (column1, column2, created, modified, yn) values(?, ?, now(), now(), 1) ON DUPLICATE KEY UPDATE column1 = IFNULL(VALUES (column1), column1), column2 = IFNULL(VALUES (column2), column2)"
      }
    }

YARN start command

./bin/start-seatunnel-spark.sh --master 'yarn' --deploy-mode client --config ./config/spark.batch.jdbc.to.jdbc.conf

Pitfall: the job fails with the error "please specify [driver] as non-empty". Tracing it down showed that the driver parameter must also be set in the sink configuration.

ERROR Seatunnel:121 - Plugin[org.apache.seatunnel.spark.sink.Jdbc] contains invalid config, error: please specify [driver] as non-empty
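One way to catch this before submitting the job is a small pre-flight check on the config file. The sketch below is an assumption-laden illustration, not a SeaTunnel feature: `sink_has_driver` is a hypothetical helper, it expects `sink {` to open on its own line, and it counts braces naively, so braces inside quoted strings would confuse it.

```shell
# Hypothetical pre-flight check (not part of SeaTunnel): succeed only if the
# top-level sink block of a config file has an uncommented "driver" setting.
sink_has_driver() {
  awk '
    /^[ \t]*#/ { next }                              # skip comment lines
    /^[ \t]*sink[ \t]*[{]/ { in_sink = 1; depth = 0 }
    in_sink {
      if ($0 ~ /^[ \t]*driver[ \t]*=/) found = 1
      # Track nesting so the check stops at the end of the sink block.
      depth += gsub(/[{]/, "{") - gsub(/[}]/, "}")
      if (depth == 0) in_sink = 0
    }
    END { exit(found ? 0 : 1) }
  ' "$1"
}

# Example usage with the config file from this article:
# sink_has_driver ./config/spark.batch.jdbc.to.jdbc.conf || echo "sink is missing driver"
```

A driver set only in the source block does not satisfy the check, which mirrors the failure described above.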

Copyright notice

This article was written by [Ruo Xiaoyu]. Please include a link to the original when reposting. Thank you.

https://yzsam.com/2022/04/202204230602186365.html

边栏推荐

- Common analog keys of ADB shell: keycode

- TERSUS笔记员工信息516-Mysql查询(2个字段的时间段唯一性判断)

- Window function row commonly used for fusion and de duplication_ number

- Opening: identification of double pointer instrument panel

- Oracle calculates the difference between two dates in seconds, minutes, hours and days

- MySQL and PgSQL time related operations

- At the same time, the problems of height collapse and outer margin overlap are solved

- Isparta is a tool that generates webp, GIF and apng from PNG and supports the transformation of webp, GIF and apng

- Explanation of input components in Chapter 16

- Stack protector under armcc / GCC

猜你喜欢

顶级元宇宙游戏Plato Farm,近期动作不断利好频频

![[point cloud series] pointfilter: point cloud filtering via encoder decoder modeling](/img/da/02d1e18400414e045ce469425db644.png)

[point cloud series] pointfilter: point cloud filtering via encoder decoder modeling

Short name of common UI control

交叉碳市场和 Web3 以实现再生变革

Xi'an CSDN signed a contract with Xi'an Siyuan University, opening a new chapter in IT talent training

![[machine learning] Note 4. KNN + cross validation](/img/a1/5afccedf509eda92a0fe5bf9b6cbe9.png)

[machine learning] Note 4. KNN + cross validation

On the bug of JS regular test method

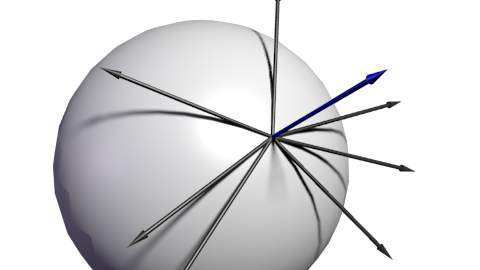

Tangent space

面试官给我挖坑:单台服务器并发TCP连接数到底可以有多少 ?

Zero copy technology

随机推荐

Xi'an CSDN signed a contract with Xi'an Siyuan University, opening a new chapter in IT talent training

联想拯救者Y9000X 2020

SAP ui5 application development tutorial 72 - animation effect setting of SAP ui5 page routing

Solve the problem that Oracle needs to set IP every time in the virtual machine

Exemple de méthode de réalisation de l'action d'usinage à point fixe basée sur l'interruption de déclenchement du compteur à grande vitesse ob40 pendant le voyage de tia Expo

PG library to view the distribution keys of a table in a certain mode

[point cloud series] so net: self organizing network for point cloud analysis

The difference between is and as in Oracle stored procedure

[point cloud series] relationship based point cloud completion

Parameter comparison of several e-book readers

Modification of table fields by Oracle

Solve the problem of Oracle Chinese garbled code

Machine learning -- model optimization

UEFI learning 01-arm aarch64 compilation, armplatformpripeicore (SEC)

[point cloud series] summary of papers related to implicit expression of point cloud

Summary of request and response and their ServletContext

Personal learning related

MySQL and PgSQL time related operations

Opening: identification of double pointer instrument panel

Common types and basic usage of input plug-in of logstash data processing service