
[Point Cloud Series] A Rotation-Invariant Framework for Deep Point Cloud Analysis

2022-04-23 07:20:00 【^_^ Min Fei】


1. Summary

Published in IEEE Transactions on Visualization and Computer Graphics (TVCG), 2021.

Code: https://github.com/nini-lxz/Rotation-Invariant-Point-Cloud-Analysis

2. Motivation

A common problem with existing methods is that they do not guarantee rotation invariance.

This paper sets out to guarantee it.

The idea is to replace the raw 3D Cartesian coordinates at the input with a low-level, rotation-invariant geometric representation. This is somewhat like feeding the network hand-designed rotation-invariant features and leaving the rest of the optimization to learning.

3. Method

3.1 Feature extraction $\mathcal{A}$ in common methods

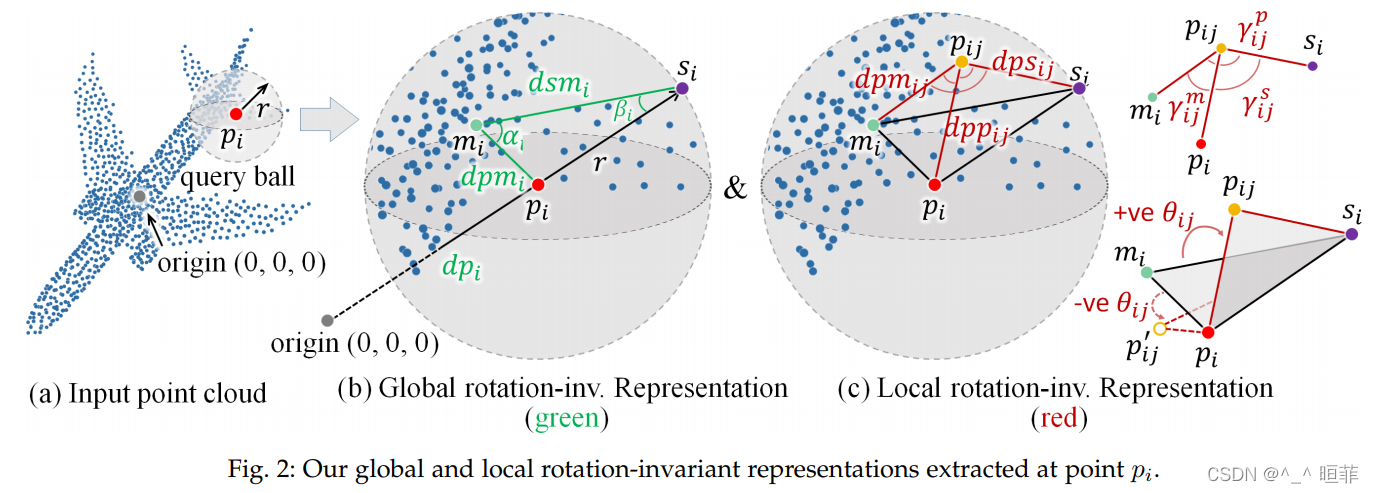

Point features are computed from a global feature $G_i$, local features $L_{ij}$, and a nonlinear function $h_\theta$.

Here $\mathcal{A}$ is a symmetric function, so the result does not depend on the order in which the neighboring points are listed.
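A minimal sketch of this generic scheme, assuming $\mathcal{A}$ is element-wise max pooling (as in PointNet/PointNet++) and $h_\theta$ is a single linear layer with ReLU applied to the concatenation $[G_i, L_{ij}]$. The names `h_theta` and `aggregate` and all dimensions are illustrative, not taken from the paper. The final check shows why a symmetric $\mathcal{A}$ matters: shuffling the neighbors leaves the result unchanged.

```python
import numpy as np


def h_theta(g, l, W, b):
    """Hypothetical h_theta: one linear layer + ReLU on the concatenation [G_i, L_ij]."""
    x = np.concatenate([g, l])
    return np.maximum(W @ x + b, 0.0)


def aggregate(G_i, L_i, W, b):
    """f_i = A({h_theta(G_i, L_ij)}_j) with A = element-wise max, a symmetric function."""
    responses = np.stack([h_theta(G_i, L_ij, W, b) for L_ij in L_i])  # (K, C_out)
    return responses.max(axis=0)                                       # (C_out,)


rng = np.random.default_rng(0)
K = 16
G_i = rng.normal(size=5)        # illustrative: 5 global components
L_i = rng.normal(size=(K, 7))   # illustrative: K neighbors, 7 local components each
W = rng.normal(size=(32, 12))   # 12 = 5 + 7 input channels, 32 output channels
b = np.zeros(32)

f_i = aggregate(G_i, L_i, W, b)
f_shuffled = aggregate(G_i, L_i[rng.permutation(K)], W, b)
print(np.allclose(f_i, f_shuffled))  # True: neighbor order does not matter
```

Replacing `max` with a non-symmetric operation (for example, concatenating the responses in order) would make the feature depend on how the neighbors happen to be indexed.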

3.2 Rotation invariance

A framework is rotation invariant if (1) the network input is rotation invariant (in practice, a rotation-invariant representation is extracted from the input point cloud and used in place of the raw points) and (2) every operation in the network preserves rotation invariance.

Network input design

Four requirements:

- Invariance: however the input point cloud $S$ is rotated, the extracted representation stays the same. Let $\Phi$ be the function that extracts the rotation-invariant representation; it must satisfy

$$\Phi(R\,S) = \Phi(S), \tag{2}$$

where $R$ is an arbitrary rotation in 3D, applied to every point of $S$.

- Informativeness: Eq. (2) is easy to satisfy by using L2 distances or relative angles as input, but such input is too coarse and loses information;

- No ambiguity: different local regions must have distinct rotation-invariant representations;

- Robustness to noise.
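To make Eq. (2), $\Phi(R\,S) = \Phi(S)$, concrete, here is a minimal NumPy check under simple assumptions: rotations are sampled via the QR decomposition of a Gaussian matrix, and $\Phi$ is taken to be the matrix of pairwise L2 distances, exactly the kind of coarse input the informativeness requirement warns about. It satisfies Eq. (2), while the raw coordinates do not. `random_rotation` and `phi_distances` are illustrative names.

```python
import numpy as np


def random_rotation(rng):
    """Random 3x3 rotation matrix (det = +1) from the QR decomposition of a Gaussian matrix."""
    q, r = np.linalg.qr(rng.normal(size=(3, 3)))
    q = q * np.sign(np.diag(r))   # fix column signs so q is Haar-distributed over O(3)
    if np.linalg.det(q) < 0:      # flip one column to land in SO(3)
        q[:, 0] = -q[:, 0]
    return q


def phi_distances(S):
    """A deliberately crude invariant: the N x N matrix of pairwise L2 distances."""
    diff = S[:, None, :] - S[None, :, :]
    return np.linalg.norm(diff, axis=-1)


rng = np.random.default_rng(1)
S = rng.normal(size=(64, 3))   # N x 3 point cloud, one point per row
R = random_rotation(rng)
S_rot = S @ R.T                # rotate every point: p -> R p

print(np.allclose(phi_distances(S_rot), phi_distances(S)))  # True: Eq. (2) holds
print(np.allclose(S_rot, S))                               # False: raw coordinates are not invariant
```

The distance matrix is invariant but throws away information such as chirality (a mirrored cloud has the same distances), which is one reason the paper designs a richer representation.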

Network architecture design

Two considerations:

- The network must not contain any rotation-sensitive operation; for example, it cannot depend on a specified point order.

- The network does not take point coordinates as input, only derived geometric information such as distances and angles.

3.3 Rotation-invariant representation

Preprocessing:

First, normalize the input point cloud $S$ so that it lies inside the unit sphere.
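A minimal sketch of this preprocessing step. The post does not say how the cloud is centered, so centering at the centroid and scaling by the largest point norm is an assumption. Both operations commute with rotation, so rotating then normalizing gives the same result as normalizing then rotating, and the preprocessing does not break invariance.

```python
import numpy as np


def normalize_unit_sphere(S):
    """Center S (N x 3) at its centroid, then scale so the farthest point lies on the unit sphere."""
    centered = S - S.mean(axis=0)
    scale = np.linalg.norm(centered, axis=1).max()
    return centered / scale


rng = np.random.default_rng(2)
S = rng.uniform(-5.0, 5.0, size=(128, 3)) + 10.0   # an off-center, unnormalized cloud
S_n = normalize_unit_sphere(S)
print(np.linalg.norm(S_n, axis=1).max())           # 1.0 up to floating-point rounding

# Normalization commutes with a rotation (here about the z axis):
# rotate-then-normalize equals normalize-then-rotate.
c, s = np.cos(0.7), np.sin(0.7)
R_z = np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])
print(np.allclose(normalize_unit_sphere(S @ R_z.T), S_n @ R_z.T))  # True
```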

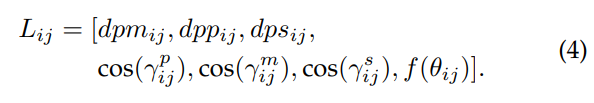

Then, as in PointNet++, a ball query defines the neighbors $\{p_{ij}\}_{j=1}^K$ of each point $p_i$, as shown in Fig. 2(a) and (b).
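A minimal NumPy sketch of a PointNet++-style ball query, assuming the query centers are themselves points of the cloud (so every ball contains at least its own center). Like the PointNet++ reference implementation, it takes the first $K$ points found inside the radius (not the $K$ nearest) and pads short groups by repeating the first one found. `ball_query` is an illustrative name. Because it depends only on distances, the resulting neighbor sets are unchanged by rotation.

```python
import numpy as np


def ball_query(points, centers, radius, k):
    """Return a (num_centers, k) array of neighbor indices for each center.

    Takes the first k points (in index order) whose distance to the center is below
    `radius`; groups with fewer than k hits are padded with the first hit.
    """
    groups = []
    for c in centers:
        dist = np.linalg.norm(points - c, axis=1)
        idx = np.flatnonzero(dist < radius)[:k]
        if idx.size == 0:
            raise ValueError("empty ball: centers are assumed to be points of the cloud")
        pad = np.full(k - idx.size, idx[0])
        groups.append(np.concatenate([idx, pad]))
    return np.stack(groups)


rng = np.random.default_rng(3)
points = rng.uniform(-1.0, 1.0, size=(256, 3))
centers = points[:8]                  # stand-in for the sampled points p_i
idx = ball_query(points, centers, radius=0.3, k=16)
neighbors = points[idx]               # (8, 16, 3): the neighborhoods {p_ij} of each p_i
```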

Computation:

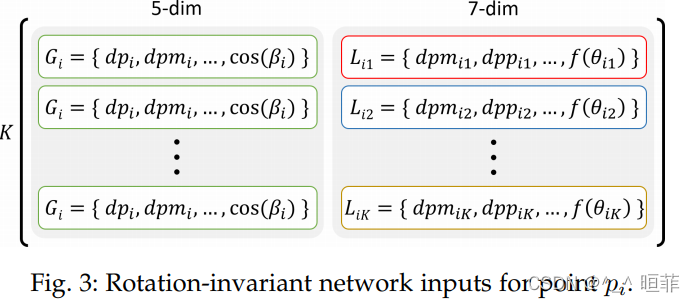

For each point $p_i$, a global feature $G_i$ and local features $\{L_{ij}\}_{j=1}^K$ are extracted; the full feature set is shown in Fig. 3.

Global feature $G_i$: five components, as shown in Fig. 2(a) and (b).

1) $d_{p_i} = \|p_i\|_2$: the distance from $p_i$ to the origin, a simple global rotation-invariant quantity.

2) $d_{p_i m_i}$: the distance between $p_i$ and $m_i$, where $m_i$ is the geometric median of $p_i$'s local region.

3)–5) The last three components, including $d_{s_i m_i}$, are built from the point $s_i$ and the triangle $\triangle p_i m_i s_i$, where $s_i$ is the intersection of the neighborhood ball with the line extended from the origin through $p_i$. In the paper's setup, the ball radius increases with the network level.

Local features $L_{ij}$: seven components, as shown in Fig. 2.
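The five global components can be sketched as below for a single point $p_i$ and its neighbors. Several details are assumptions, not taken from the post: $m_i$ is computed with Weiszfeld's algorithm for the geometric median, $s_i$ is taken as the point where the ray from the origin through $p_i$ leaves the ball of radius $r$ around $p_i$, and components 4) and 5) are assumed to be two interior angles of $\triangle p_i m_i s_i$. The paper's exact definitions may differ, and `global_features` is a hypothetical name. Since every quantity is a distance or an angle between points that rotate together, the final check shows the feature is unchanged by a rotation.

```python
import numpy as np


def geometric_median(X, iters=200, eps=1e-10):
    """Weiszfeld iterations for the point minimizing the sum of distances to the rows of X."""
    y = X.mean(axis=0)
    for _ in range(iters):
        d = np.maximum(np.linalg.norm(X - y, axis=1), eps)  # avoid division by zero
        w = 1.0 / d
        y_new = (w[:, None] * X).sum(axis=0) / w.sum()
        converged = np.linalg.norm(y_new - y) < eps
        y = y_new
        if converged:
            break
    return y


def interior_angle(a, b, c):
    """Angle (radians) at vertex a of the triangle (a, b, c)."""
    u, v = b - a, c - a
    cos_t = u @ v / (np.linalg.norm(u) * np.linalg.norm(v))
    return np.arccos(np.clip(cos_t, -1.0, 1.0))


def global_features(p_i, neighbors, r):
    """Hypothetical 5-component global feature G_i; p_i must not be at the origin."""
    d_p = np.linalg.norm(p_i)               # 1) distance from p_i to the origin
    m_i = geometric_median(neighbors)       # geometric median of the local region
    s_i = p_i + r * p_i / d_p               # assumed: ray origin -> p_i meets the ball boundary
    return np.array([
        d_p,
        np.linalg.norm(p_i - m_i),          # 2) d_{p_i m_i}
        np.linalg.norm(s_i - m_i),          # 3) d_{s_i m_i}
        interior_angle(p_i, m_i, s_i),      # 4) assumed: angle at p_i
        interior_angle(s_i, p_i, m_i),      # 5) assumed: angle at s_i
    ])


rng = np.random.default_rng(4)
p_i = np.array([0.5, 0.2, -0.3])
neighbors = p_i + rng.uniform(-0.1, 0.1, size=(16, 3))
G_i = global_features(p_i, neighbors, r=0.2)

c, s = np.cos(1.1), np.sin(1.1)
R_x = np.array([[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]])  # rotation about the x axis
G_rot = global_features(R_x @ p_i, neighbors @ R_x.T, r=0.2)
print(np.allclose(G_i, G_rot))  # True: G_i is rotation invariant
```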

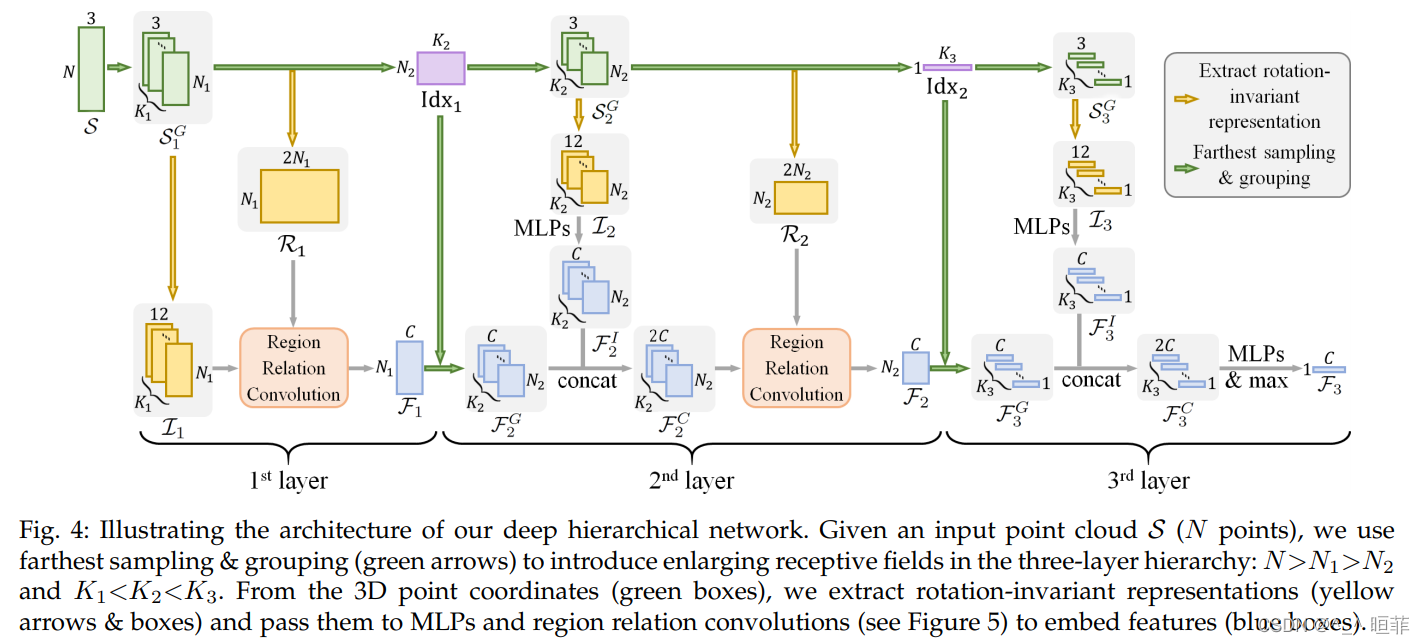

3.4 Overall network framework

The network has three levels. Color legend of the architecture figure:

- Yellow boxes: extracted rotation-invariant features;
- Green boxes: point cloud coordinates;
- Purple boxes: farthest point sampling;
- Blue boxes: features learned by the network.

First level:

1. Sample and group as in PointNet++: farthest point sampling selects a subset of $N_1$ points.

2. A ball query finds $K_1$ neighbors of each sampled point, giving an $N_1 \times K_1 \times 3$ array of grouped points, $S_1^G$.

3. Two computations run in parallel: (1) extract the rotation-invariant features $I_1$ (yellow box); (2) compute the global relation matrix $R_1$ (yellow box).

4. $I_1$ and $R_1$ from step 3 are both fed into the region relation convolution (orange box) to obtain the features $F_1$ (blue box).
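The first two steps of the first level (farthest point sampling, then ball-query grouping into an $N_1 \times K_1 \times 3$ array) can be sketched in NumPy as below. The function names, the fixed starting index, and the sizes $N_1 = 32$, $K_1 = 16$, $r = 0.2$ are illustrative assumptions, not the paper's settings; the grouping follows the PointNet++ convention of taking the first points found within the radius.

```python
import numpy as np


def farthest_point_sampling(points, n_samples, start=0):
    """Greedily pick n_samples indices, each time taking the point farthest from those already chosen."""
    selected = np.empty(n_samples, dtype=int)
    selected[0] = start
    min_dist = np.linalg.norm(points - points[start], axis=1)   # distance to the chosen set
    for i in range(1, n_samples):
        selected[i] = int(np.argmax(min_dist))
        new_dist = np.linalg.norm(points - points[selected[i]], axis=1)
        min_dist = np.minimum(min_dist, new_dist)
    return selected


def group(points, center_idx, radius, k):
    """Ball-query grouping: first k points within `radius` of each center, padded with the first hit."""
    groups = []
    for c in points[center_idx]:
        hits = np.flatnonzero(np.linalg.norm(points - c, axis=1) < radius)[:k]
        groups.append(np.concatenate([hits, np.full(k - hits.size, hits[0])]))
    return points[np.stack(groups)]                              # (n_centers, k, 3)


rng = np.random.default_rng(5)
cloud = rng.normal(size=(1024, 3))
cloud /= np.linalg.norm(cloud, axis=1).max()   # put the cloud inside the unit sphere

N1, K1 = 32, 16
centers = farthest_point_sampling(cloud, N1)
S1_G = group(cloud, centers, radius=0.2, k=K1)
print(S1_G.shape)  # (32, 16, 3)
```

Both steps use only distances, so with the same starting index they pick the same points whether or not the cloud has been rotated (up to ties).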

Second level:

5. Sample and group again, this time into a smaller set of regions, $S_2^G$.

6. The first-level features are concatenated with the sampled points to form the feature $F_2^G$. Here $N_2 < N_1$ and $K_2 > K_1$, which lets the receptive field grow progressively.

7. $F_2^G$ is then concatenated with $F_2^I$ to compensate for information loss; $F_2^I$ is the high-level semantic feature that a multi-layer perceptron (MLP) computes from $I_2$.

8. The concatenation yields $F_2^C$, which, combined with the relation matrix $R_2$, gives the second-level features $F_2$.

Third level:

9. Sample and group again to obtain $S_3^G$.

10. As in the second level, obtain the features $F_3^C$.

11. Apply an MLP followed by max pooling to obtain the final feature $F_3$.

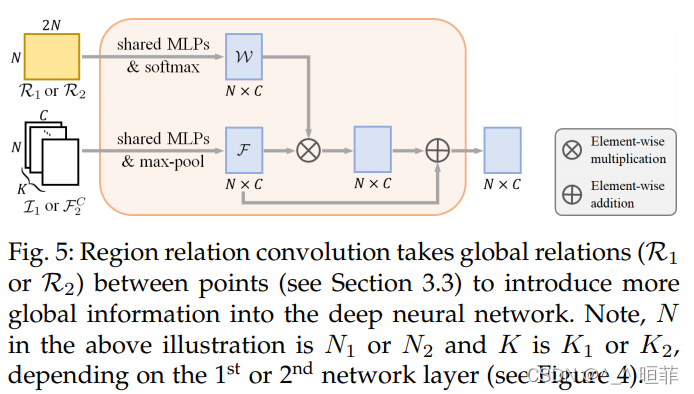

The computation of the region relation convolution is shown in Fig. 5; in essence, it works much like an attention mechanism.
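The post only says that region relation convolution behaves like attention, so the following is a generic attention-style sketch under that reading, not the paper's exact operator: a relation matrix between regions is built from rotation-invariant features by scaled dot products, normalized row-wise with a softmax, and used to mix the region features. `relation_matrix` and `relation_conv` are hypothetical names.

```python
import numpy as np


def relation_matrix(I):
    """Scaled dot-product relation scores between regions; I is (N, C) rotation-invariant features."""
    return I @ I.T / np.sqrt(I.shape[1])


def relation_conv(I, F):
    """Mix region features F (N, C_f) using a row-softmax of the relation matrix built from I."""
    R = relation_matrix(I)
    A = np.exp(R - R.max(axis=1, keepdims=True))   # subtract the row max for numerical stability
    A /= A.sum(axis=1, keepdims=True)              # each row is a probability distribution
    return A @ F                                   # (N, C_f): weighted sum over all regions


rng = np.random.default_rng(6)
I = rng.normal(size=(10, 8))    # 10 regions, 8 invariant channels
F = rng.normal(size=(10, 16))   # 10 regions, 16 feature channels
out = relation_conv(I, F)
```

Because the weights depend only on $I$, the output stays rotation invariant whenever $I$ and $F$ are. The operator is also permutation equivariant: reordering the regions reorders the output rows in the same way.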

4. Experiments

Classification accuracy: the results look good.

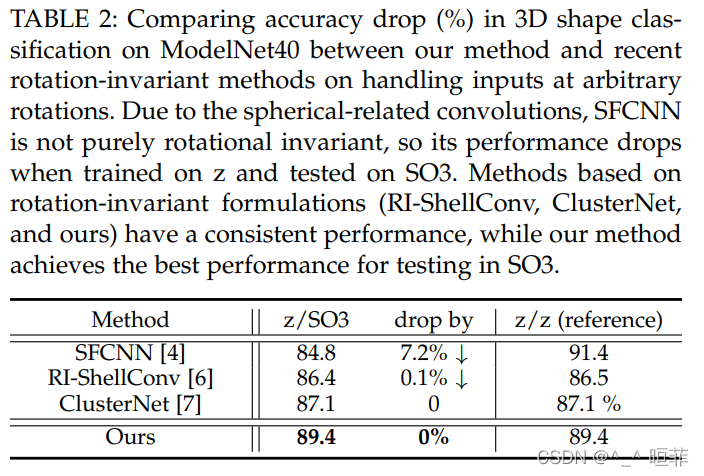

Table 2:

z/SO3: training with z-axis rotation augmentation, testing with arbitrary rotations;

z/z: both training and testing use z-axis rotation augmentation;

SO3/SO3: both training and testing use arbitrary rotations.

The results appear to illustrate the stability of the proposed method across these settings.
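The train/test rotation settings listed above (z/SO3, z/z, SO3/SO3) differ only in which rotations are sampled. A minimal sketch of the samplers, assuming "z" means a uniformly random angle about the vertical axis and "SO3" means a uniformly random 3D rotation (drawn here from a normalized Gaussian quaternion). NR, used in the NR/NR and NR/AR settings, is taken to mean no rotation (the identity). Function names are illustrative.

```python
import numpy as np


def random_z_rotation(rng):
    """Rotation by a uniformly random angle about the z axis."""
    theta = rng.uniform(0.0, 2.0 * np.pi)
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


def random_so3_rotation(rng):
    """Uniformly random rotation: a normalized 4D Gaussian is a uniform unit quaternion (w, x, y, z)."""
    q = rng.normal(size=4)
    w, x, y, z = q / np.linalg.norm(q)
    return np.array([
        [1 - 2 * (y * y + z * z), 2 * (x * y - z * w), 2 * (x * z + y * w)],
        [2 * (x * y + z * w), 1 - 2 * (x * x + z * z), 2 * (y * z - x * w)],
        [2 * (x * z - y * w), 2 * (y * z + x * w), 1 - 2 * (x * x + y * y)],
    ])


def rotate(points, mode, rng):
    """Apply one random rotation to an N x 3 cloud: mode 'z', 'SO3', or 'NR' (no rotation)."""
    if mode == "z":
        R = random_z_rotation(rng)
    elif mode == "SO3":
        R = random_so3_rotation(rng)
    elif mode == "NR":
        R = np.eye(3)
    else:
        raise ValueError(f"unknown mode: {mode}")
    return points @ R.T


rng = np.random.default_rng(7)
cloud = rng.normal(size=(512, 3))
train_cloud = rotate(cloud, "z", rng)    # z/SO3 setting: z-axis augmentation at training time...
test_cloud = rotate(cloud, "SO3", rng)   # ...and arbitrary rotations at test time
```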

Table 3:

NR/NR: no rotation in either training or testing;

NR/AR: training without rotation, testing with arbitrary rotations.

The results are identical in both settings. Why? Because the method is designed for rotation invariance, the extracted features are themselves rotation invariant, so the network stays invariant even when the training set contains no rotated samples. This confirms that the method has the intended property.

Visualization:

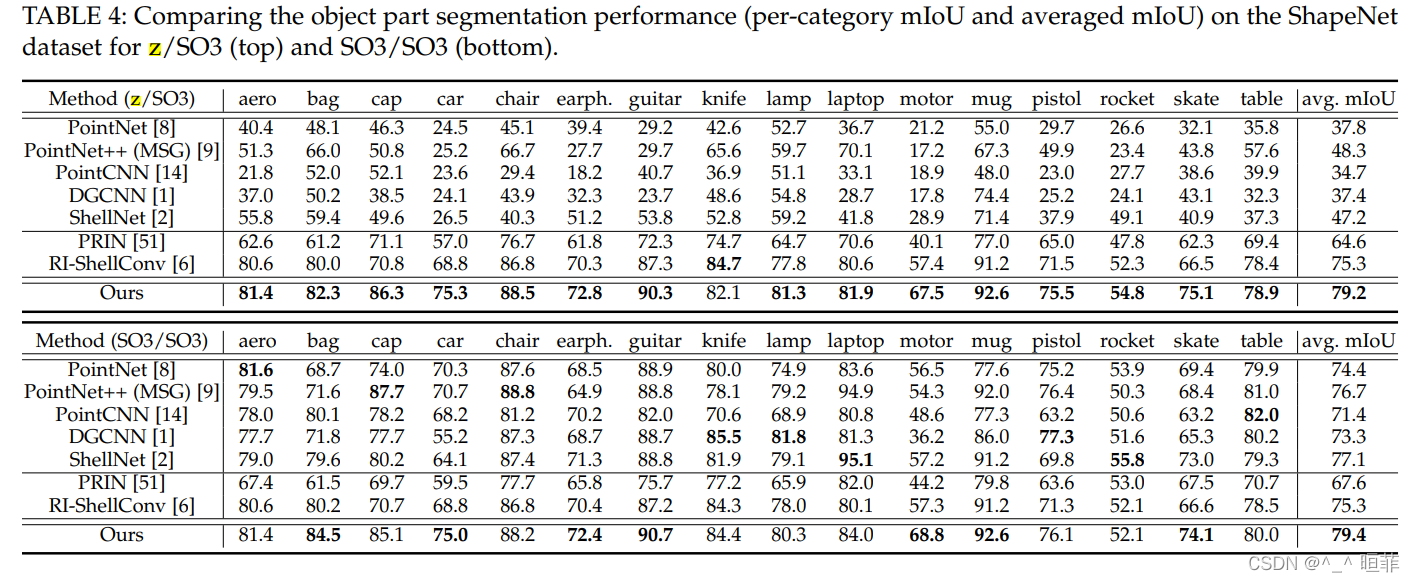

Segmentation results:

Ablation study:

5. Limitations

The method is simple, and its results are not especially strong.

Its handling of noise is, in fact, limited.

It relies on hand-designed invariant features, so it is unclear whether it works for all kinds of objects.

Copyright notice

This article was written by [^_^ Min Fei]. Please include a link to the original when reposting. Thanks.

https://yzsam.com/2022/04/202204230611136406.html

边栏推荐

猜你喜欢

C# EF mysql更新datetime字段报错Modifying a column with the ‘Identity‘ pattern is not supported

Easyui combobox 判断输入项是否存在于下拉列表中

Viewpager2 realizes Gallery effect. After notifydatasetchanged, pagetransformer displays abnormal interface deformation

Project, how to package

1.2 preliminary pytorch neural network

c语言编写一个猜数字游戏编写

Google AdMob advertising learning

【2021年新书推荐】Red Hat RHCSA 8 Cert Guide: EX200

Bottomsheetdialogfragment conflicts with listview recyclerview Scrollview sliding

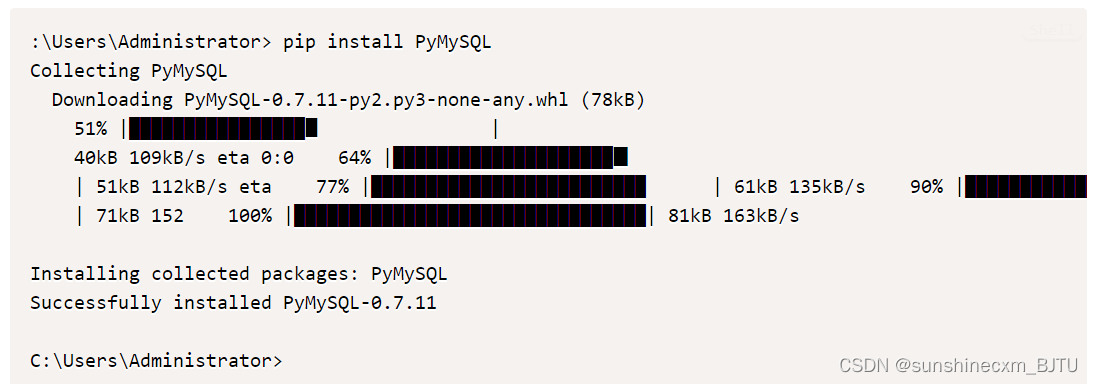

PyMySQL连接数据库

随机推荐

树莓派:双色LED灯实验

adb shell top 命令详解

ArcGIS license server administrator cannot start the workaround

第8章 生成式深度学习

Summary of image classification white box anti attack technology

Using stack to realize queue out and in

DCMTK (dcm4che) works together with dicoogle

[recommendation of new books in 2021] practical IOT hacking

[recommendation for new books in 2021] professional azure SQL managed database administration

[Andorid] 通过JNI实现kernel与app进行spi通讯

利用官方torch版GCN训练并测试cora数据集

Markdown basic grammar notes

【动态规划】不同路径2

ThreadLocal,看我就够了!

Exploration of SendMessage principle of advanced handler

WebView displays a blank due to a certificate problem

SSL/TLS应用示例

读书小记——Activity

Easyui combobox 判断输入项是否存在于下拉列表中

Handler进阶之sendMessage原理探索