tf.keras.layers.Dense function

2022-04-23 02:56:00 【Live up to your youth】

Function prototype

tf.keras.layers.Dense(
    units,
    activation=None,
    use_bias=True,
    kernel_initializer='glorot_uniform',
    bias_initializer='zeros',
    kernel_regularizer=None,
    bias_regularizer=None,
    activity_regularizer=None,
    kernel_constraint=None,
    bias_constraint=None,
    **kwargs
)

Function description

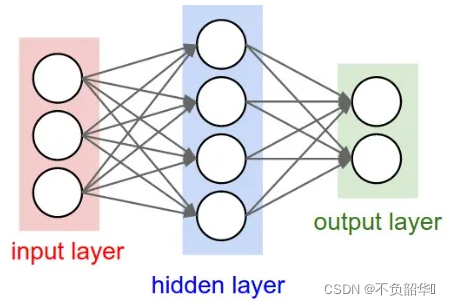

A fully connected layer applies a linear transformation that maps one feature space to another. Within a neural network as a whole, it plays the role of the classifier.

Every node in a fully connected layer is connected to every node in the previous layer, so the layer combines all of the features output by the previous layer. Because it uses all of those local features, it is called fully connected. Fully connected layers are generally used as the last layers of a model, where they map the hidden feature space of a sample to the sample label space in order to classify the sample.

In TensorFlow, a fully connected layer is defined with Dense, which computes output = activation(dot(input, kernel) + bias). Here input is the input data; kernel is the weight matrix, whose initial values are set by the kernel_initializer parameter; dot is the matrix product of the two; bias is the bias vector, whose initial values are set by the bias_initializer parameter; and activation is the activation function.

The use_bias parameter controls whether a bias vector is used; the default is True. With use_bias=False, the layer computes output = activation(dot(input, kernel)).
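The operation described above can be sketched in plain NumPy. This is only an illustration of the formula, not the Keras implementation; the helper names dense_forward and relu, the batch size of 4, and the random weights are assumptions made for the example.

```python
import numpy as np


def relu(x):
    """Element-wise ReLU, used here as the example activation."""
    return np.maximum(x, 0.0)


def dense_forward(inputs, kernel, bias=None, activation=None):
    """Compute activation(dot(inputs, kernel) + bias).

    Passing bias=None mimics use_bias=False; passing activation=None
    mimics activation=None (the output is returned unchanged).
    """
    outputs = inputs @ kernel  # matrix product over the last axis of inputs
    if bias is not None:
        outputs = outputs + bias
    if activation is not None:
        outputs = activation(outputs)
    return outputs


rng = np.random.default_rng(0)
x = rng.normal(size=(4, 32))        # 4 samples with 32 features (assumed sizes)
kernel = rng.normal(size=(32, 16))  # maps 32 features to 16 units
bias = np.zeros(16)                 # same values the 'zeros' initializer would give

with_bias = dense_forward(x, kernel, bias, relu)
without_bias = dense_forward(x, kernel, None, relu)

print(with_bias.shape)                       # (4, 16)
print(np.allclose(with_bias, without_bias))  # True, because the bias is all zeros
```

With a zero bias the two variants agree; they differ only once the bias takes non-zero values, for example after training.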

The first parameter, units, sets the dimensionality of the output space. For example, if the output shape of the previous layer is (None, 32), the output shape after Dense(units=16) is (None, 16); if the output shape of the previous layer is (None, 32, 32), the output shape after Dense(units=16) is (None, 32, 16). More precisely, units sets the size of the last dimension of the output.
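The shape rule above follows from the matrix product acting only on the last axis. A minimal NumPy sketch, assuming a concrete batch size of 8 in place of None and an all-ones kernel purely for illustration:

```python
import numpy as np

units = 16
result_shapes = {}

# A batch size of 8 stands in for the unknown batch dimension (None).
for input_shape in [(8, 32), (8, 32, 32)]:
    x = np.ones(input_shape)
    # The kernel has shape (last input dimension, units).
    kernel = np.ones((input_shape[-1], units))
    y = x @ kernel  # only the last axis is contracted; leading axes are kept
    result_shapes[input_shape] = y.shape
    print(input_shape, "->", y.shape)

# (8, 32) -> (8, 16)
# (8, 32, 32) -> (8, 32, 16)
```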

If a Dense layer is the first layer of a model, you need to provide an input_shape argument that describes the shape of the input tensor.
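Why the input shape matters can be seen from the weights: the kernel cannot be created until the size of the last input dimension is known. The sketch below declares the shape with a tf.keras.Input object placed before the Dense layer rather than passing input_shape to Dense directly. That choice is an assumption made here because, as far as I know, newer Keras releases warn about the input_shape form. The sizes 32 and 16 are also illustrative.

```python
import tensorflow as tf

model = tf.keras.Sequential([
    # Declares that each sample has 32 features; the batch dimension is left as None.
    tf.keras.Input(shape=(32,)),
    tf.keras.layers.Dense(16, activation="relu"),
])

# Because the input shape is known, the Dense weights already exist.
kernel, bias = model.layers[-1].get_weights()
kernel_shape = kernel.shape
bias_shape = bias.shape
output_shape = tuple(model.output_shape)

print(kernel_shape)  # (32, 16)
print(bias_shape)    # (16,)
print(output_shape)  # (None, 16)
```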

Function usage

First example

import tensorflow as tf

model = tf.keras.Sequential([
    # Input layer: the input shape is (None, 32, 64)
    tf.keras.layers.InputLayer(input_shape=(32, 64)),
    # Fully connected layer: the last output dimension is 32, the activation
    # function is relu, and the output shape is (None, 32, 32)
    tf.keras.layers.Dense(32, activation="relu")
])

# Print the layer table shown below
model.summary()

Model: "sequential"
_________________________________________________________________
 Layer (type)                Output Shape              Param #
=================================================================
 dense (Dense)               (None, 32, 32)            2080
=================================================================
Total params: 2,080
Trainable params: 2,080
Non-trainable params: 0
_________________________________________________________________
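The Param # value of 2080 in the summary above can be checked by hand: the kernel has one weight per (input feature, unit) pair, and the bias adds one value per unit. A small sketch, where dense_param_count is a hypothetical helper written for this example and not part of Keras:

```python
def dense_param_count(input_last_dim, units, use_bias=True):
    """Number of trainable parameters in a Dense layer.

    The kernel has shape (input_last_dim, units); the bias, if used,
    has shape (units,).
    """
    kernel_params = input_last_dim * units
    bias_params = units if use_bias else 0
    return kernel_params + bias_params


# Input (None, 32, 64) -> Dense(32): kernel 64 * 32 plus 32 bias values.
print(dense_param_count(64, 32))                  # 2080
# Without a bias, only the kernel remains.
print(dense_param_count(64, 32, use_bias=False))  # 2048
```

Only the last input dimension (64) enters the count; the middle dimension (32) shares the same kernel.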

Second example

import tensorflow as tf

model = tf.keras.Sequential([
    # Input layer: the input shape is (None, 32, 64)
    tf.keras.layers.InputLayer(input_shape=(32, 64)),
    # Fully connected layer: the last output dimension is 32, the activation
    # function is relu, and the output shape is (None, 32, 32)
    tf.keras.layers.Dense(32, activation="relu"),
    # Flatten layer: collapses (None, 32, 32) into (None, 1024)
    tf.keras.layers.Flatten(),
    # Fully connected layer: softmax activation for multi-class
    # classification; the output shape is (None, 4)
    tf.keras.layers.Dense(4, activation="softmax")
])

# Print the layer table shown below
model.summary()

Model: "sequential"
_________________________________________________________________
 Layer (type)                Output Shape              Param #
=================================================================
 dense (Dense)               (None, 32, 32)            2080
 flatten (Flatten)           (None, 1024)              0
 dense_1 (Dense)             (None, 4)                 4100
=================================================================
Total params: 6,180
Trainable params: 6,180
Non-trainable params: 0
_________________________________________________________________

Third example

import tensorflow as tf

model = tf.keras.Sequential([
    # Input: the input shape is (None, 32, 64)
    tf.keras.Input(shape=(32, 64)),
    # Fully connected layer: the last output dimension is 32, the activation
    # function is relu, and the output shape is (None, 32, 32)
    tf.keras.layers.Dense(32, activation="relu"),
    # Flatten layer: collapses (None, 32, 32) into (None, 1024)
    tf.keras.layers.Flatten(),
    # Fully connected layer: relu activation; the output shape is (None, 16)
    tf.keras.layers.Dense(16, activation="relu"),
    # Fully connected layer: sigmoid activation for binary classification;
    # the output shape is (None, 1)
    tf.keras.layers.Dense(1, activation="sigmoid")
])

# Print the layer table shown below
model.summary()

Model: "sequential"
_________________________________________________________________
 Layer (type)                Output Shape              Param #
=================================================================
 dense (Dense)               (None, 32, 32)            2080
 flatten (Flatten)           (None, 1024)              0
 dense_1 (Dense)             (None, 16)                16400
 dense_2 (Dense)             (None, 1)                 17
=================================================================
Total params: 18,497
Trainable params: 18,497
Non-trainable params: 0
_________________________________________________________________

Copyright notice

This article was written by [Live up to your youth]. Please include a link to the original when reposting. Thank you.

https://yzsam.com/2022/04/202204220657127376.html

边栏推荐

- 《信息系統項目管理師總結》第六章 項目人力資源管理

- Win view port occupation command line

- VirtualBox virtual machine (Oracle VM)

- Linux Redis ——Redis HA Sentinel 集群搭建详解 & Redis主从部署

- Reverse a linked list < difficulty coefficient >

- The express project changes the jade template to art template

- Fashion MNIST 数据集分类训练

- JZ35 replication of complex linked list

- Fashion MNIST dataset classification training

- Source code and some understanding of employee management system based on polymorphism

猜你喜欢

Kubernetes - Introduction to actual combat

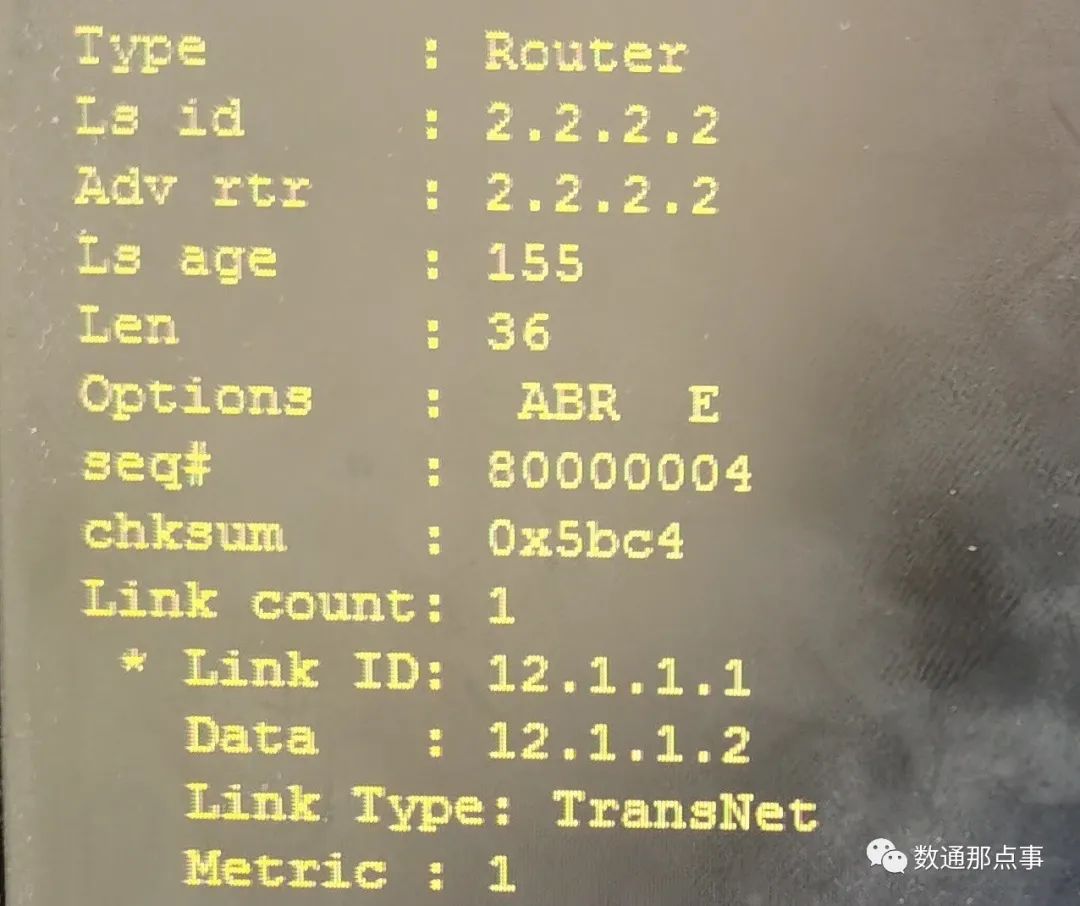

【Hcip】OSPF常用的6种LSA详解

windows MySQL8 zip安装

Error installing Mongo service 'mongodb server' on win10 failed to start

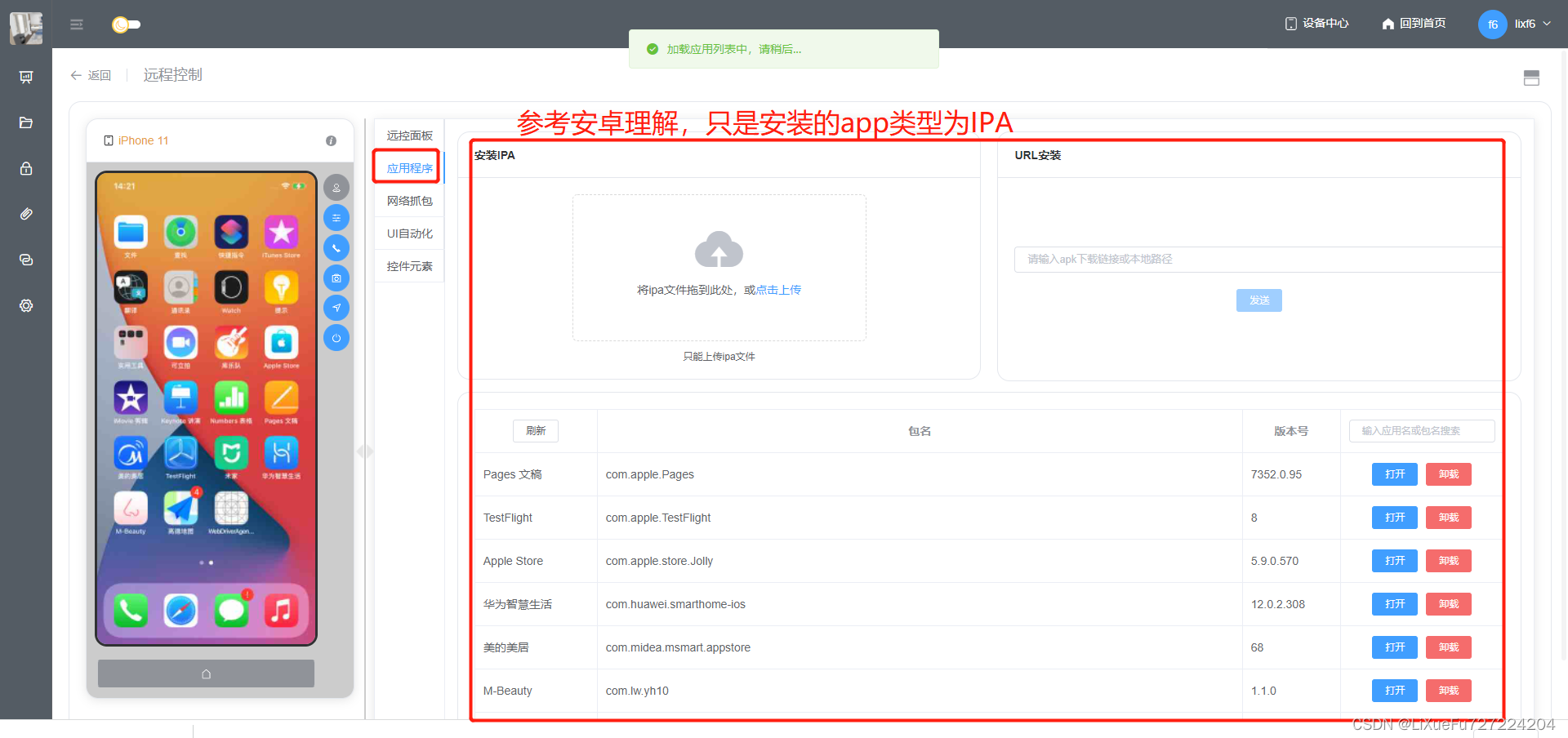

Sonic cloud real machine tutorial

Those years can not do math problems, using pyhon only takes 1 minute?

Modify the content of MySQL + PHP drop-down box

Day 3 of learning rhcsa

![[unity3d] rolling barrage effect in live broadcasting room](/img/61/46a7d6c4bf887fca8f088e7673cf2f.png)

[unity3d] rolling barrage effect in live broadcasting room

First knowledge of C language ~ branch statements

随机推荐

C language 171 Number of recent palindromes

Shell script learning notes -- shell operation on files sed

windows MySQL8 zip安装

Reverse a linked list < difficulty coefficient >

Slave should be able to synchronize with the master in tests/integration/replication-psync.tcl

第46届ICPC亚洲区域赛(昆明) B Blocks(容斥+子集和DP+期望DP)

If MySQL / SQL server judges that the table or temporary table exists, it will be deleted

Leangoo brain map - shared multi person collaborative mind mapping tool

Practical combat of industrial defect detection project (II) -- steel surface defect detection based on deep learning framework yolov5

leangoo脑图-共享式多人协作思维导图工具分享

The input of El input input box is invalid, and error in data(): "referenceerror: El is not defined“

[learn junit5 from official documents] [II] [writingtests] [learning notes]

JZ22 鏈錶中倒數最後k個結點

The problem of removing spaces from strings

MySQL / SQL Server判断表或临时表存在则删除

win查看端口占用 命令行

Day 3 of learning rhcsa

Huashu "deep learning" and code implementation: 01 Linear Algebra: basic concepts + code implementation basic operations

Modification du contenu de la recherche dans la boîte déroulante par PHP + MySQL

Publish to NPM?