Paper notes on image restoration - [RED-Net, NIPS16] Image Restoration Using Very Deep Convolutional Encoder-Decoder Networks with Symmetric Skip Connections

2022-04-23 05:59:00 【umbrellalalalala】

Also published on the Zhihu account of the same name.

I. Architecture

Full title of the paper :Image Restoration Using Very Deep Convolutional Encoder-Decoder Networks with Symmetric Skip Connections

In short, the network is conv plus deconv, with symmetric skip connections. The authors say there is one skip connection every two layers.

According to the authors, the conv layers perform feature extraction: they keep the main components of the objects in the image while eliminating the corruption. The deconv layers then recover the details of the image contents.

Every layer is followed by ReLU. Each layer has 64 feature maps (the authors say more would help, but not by much), and the filter size is 3×3 (following VGG). The input image can be of arbitrary size, and in the experiments the authors set the number of input channels to 1. Note that there is no pooling or unpooling; the authors' reason is that pooling discards essential image detail.
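To make the "one skip connection every two layers" layout concrete, here is a minimal sketch in plain Python. It assumes stride-1, unpadded 3×3 conv and deconv layers and a particular layer numbering; both are illustrative assumptions, and the helper names `layer_sizes` and `symmetric_skip_pairs` are hypothetical, not taken from the paper.

```python
def layer_sizes(input_size, num_conv, kernel=3):
    """Spatial size after each layer of a symmetric conv/deconv stack.

    Assumes stride 1 and no padding (an assumption, not stated in the notes):
    each conv shrinks the map by kernel - 1, each deconv grows it by the same.
    Index 0 is the input image; index k is the output of layer k.
    """
    sizes = [input_size]
    for _ in range(num_conv):
        sizes.append(sizes[-1] - (kernel - 1))
    for _ in range(num_conv):
        sizes.append(sizes[-1] + (kernel - 1))
    return sizes


def symmetric_skip_pairs(num_conv, step=2):
    """Pair the output of conv layer i with the output of its mirrored deconv layer.

    Layers are numbered 1..2*num_conv; a connection is placed every `step` layers.
    The starting layer is a hypothetical choice made for this sketch.
    """
    total = 2 * num_conv
    return [(i, total - i) for i in range(step, num_conv, step)]


sizes = layer_sizes(input_size=50, num_conv=5)
pairs = symmetric_skip_pairs(num_conv=5)
for src, dst in pairs:
    # Mirrored layers have identical spatial size, so their maps can be combined.
    assert sizes[src] == sizes[dst]
print(pairs)  # [(2, 8), (4, 6)]
```

Under these assumptions the mirrored layers always have matching spatial sizes, which is also why dropping pooling and unpooling keeps the pairing simple.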

The paper also gives a second figure describing the model architecture. There is not much more to say about it, so let's go straight to the ideas of the paper.

II. Main contributions

1. Skip connections

The authors say these connections do two main things. The first is to help gradients back-propagate, which is easy to understand.

The second is to carry image details forward to the later layers (pass image details to the top layers).

The benefit is that, on the one hand, performance improves, and on the other hand, the network can be made deeper.
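As a rough illustration of how a skip connection can pass details forward, the sketch below sums a conv feature map into the mirrored deconv feature map element-wise and then applies ReLU. The sum-then-ReLU order and the helper name `skip_merge` are assumptions made for this example; the notes only state that every layer is followed by ReLU and that the connections have element-wise correspondence.

```python
def relu(x):
    """Rectified linear unit for a single value."""
    return x if x > 0.0 else 0.0


def skip_merge(deconv_map, conv_map):
    """Sum two same-sized 2D feature maps element-wise, then apply ReLU."""
    if len(deconv_map) != len(conv_map) or any(
        len(a) != len(b) for a, b in zip(deconv_map, conv_map)
    ):
        raise ValueError("feature maps must have identical shapes")
    return [
        [relu(d + c) for d, c in zip(d_row, c_row)]
        for d_row, c_row in zip(deconv_map, conv_map)
    ]


deconv_map = [[0.5, -2.0], [1.0, 0.0]]   # toy output of a deconv layer
conv_map = [[0.25, 1.0], [-3.0, 0.5]]    # toy output of the mirrored conv layer
merged = skip_merge(deconv_map, conv_map)
print(merged)  # [[0.75, 0.0], [0.0, 0.5]]
```

Because the merge is a plain sum, the gradient reaching the merged map flows unchanged into both branches, which is one way to see why such connections ease back-propagation.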

2. First of its kind

The authors say this is the first method to handle different noise levels with a single model while achieving good accuracy on the denoising task. (Note, however, that this paper is dated 2016.9.1 and DnCNN 2016.8.13. DnCNN can also denoise at different noise levels, but the level must lie within a preset range.)

3. Leading performance

At the time (2016.9.1), it was SOTA on both denoising and super-resolution.

Also note the authors' characterization of DNN methods: purely data driven; no assumption about noise distribution.

III. Some discussion

1. Without skip connections:

Without them, deconv can recover detail in a shallow network, but not in a deep one. So the connections are there to recover detail, and they also help make the network deeper.

2. Difference from highway networks and ResNet:

The network passes information of the conv feature maps to the corresponding deconv layers.

3. Residual learning

What the network learns is the residual, not the direct mapping from the noisy image to the noise-free image.
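A small sketch of what learning the residual means in practice, assuming the residual is defined as clean minus noisy (the sign convention and the helper names `residual_target` and `restore` are assumptions for illustration):

```python
def residual_target(noisy, clean):
    """Residual a network would be trained to predict: clean - noisy."""
    return [c - n for n, c in zip(noisy, clean)]


def restore(noisy, predicted_residual):
    """Recover the estimate of the clean signal by adding the residual back."""
    return [n + r for n, r in zip(noisy, predicted_residual)]


noisy = [0.5, 0.75, 0.25]   # toy corrupted pixels
clean = [0.25, 1.0, 0.25]   # toy ground-truth pixels
target = residual_target(noisy, clean)
print(target)                  # [-0.25, 0.25, 0.0]
print(restore(noisy, target))  # [0.25, 1.0, 0.25]
```

With a perfect residual prediction, adding it to the input reproduces the clean signal exactly; the network only has to model the correction, not the whole image.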

IV. Training, model capabilities, and some comparisons

1. After 100 epochs, the losses rank as follows: 30 layers with connections < 20 layers with connections < 10 layers without connections < 20 layers without connections < 30 layers without connections. "Connections" here means skip connections. Without them, adding layers makes things worse, which demonstrates that skip connections are necessary.

2. The paper also compares against ResNet: at the same block size, RED-Net achieves better PSNR than ResNet. The authors argue that their skip connections have element-wise correspondence, which is very important for pixel-wise prediction problems.

(Block size refers to the span of the connections.)

3. The loss is the Euclidean distance, which training minimizes.
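Assuming the usual mean squared error form over N training pairs, the objective can be written as below. The notation is an assumption made here, since the formula is not reproduced in these notes: X_i is a corrupted input, Y_i its ground truth, and F(·; Θ) the network with parameters Θ.

```latex
L(\Theta) = \frac{1}{N} \sum_{i=1}^{N} \left\| \mathcal{F}(X_i; \Theta) - Y_i \right\|_F^2
```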

4. One common trick is to use a smaller learning rate for the last layer; the authors find it unnecessary here.

5. How the training set is generated:

By convention:

- gray-scale images for denoising

- luminance channel for super-resolution

300 images from BSD are used, with 50×50 patches as ground truth:

- Noisy images: add additive Gaussian noise;

- Low-resolution images: first downsample, then upsample back to the original size

These two kinds of data serve the denoising and super-resolution tasks respectively; the model is trained on them and can handle both tasks. (Note: within the training dataset, the noisy images are generated with different noise levels, and the low-resolution images with different scaling parameters.)
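The data generation described above can be sketched in plain Python as follows. Everything specific here is an assumption for illustration: the random patches stand in for real BSD patches, the noise levels and scales are example values, nearest-neighbour resampling replaces whatever interpolation the paper uses, and the function names are hypothetical.

```python
import random


def add_gaussian_noise(patch, sigma, rng):
    """Return a noisy copy of a 2D patch (additive zero-mean Gaussian noise)."""
    return [[pixel + rng.gauss(0.0, sigma) for pixel in row] for row in patch]


def down_then_up(patch, scale):
    """Downsample by `scale`, then upsample back to the original size.

    Nearest-neighbour resampling keeps the sketch short; the interpolation
    used in the paper is not stated in these notes.
    """
    height, width = len(patch), len(patch[0])
    small = [row[::scale] for row in patch[::scale]]
    return [
        [small[y // scale][x // scale] for x in range(width)]
        for y in range(height)
    ]


def make_training_pairs(patches, noise_levels, scales, seed=0):
    """Build (corrupted, ground-truth) pairs with a random level per patch."""
    rng = random.Random(seed)
    denoise_pairs, sr_pairs = [], []
    for clean in patches:
        sigma = rng.choice(noise_levels)  # a different noise level per patch
        scale = rng.choice(scales)        # a different scaling parameter per patch
        denoise_pairs.append((add_gaussian_noise(clean, sigma, rng), clean))
        sr_pairs.append((down_then_up(clean, scale), clean))
    return denoise_pairs, sr_pairs


# Random 50x50 patches in [0, 255] as stand-ins for gray-scale BSD patches.
patch_rng = random.Random(1)
patches = [
    [[patch_rng.uniform(0, 255) for _ in range(50)] for _ in range(50)]
    for _ in range(4)
]
denoise_pairs, sr_pairs = make_training_pairs(
    patches, noise_levels=[10, 30, 50, 70], scales=[2, 3, 4]
)
print(len(denoise_pairs), len(sr_pairs))  # 4 4
```

Mixing levels inside one training set is what lets a single model cover a range of corruption strengths instead of needing one model per level.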

V. Summary

This paper is quite simple. In summary, the model does two things: extracting primary image content and recovering details. The key is the use of skip connections, which the authors describe as follows:

which helps on recovering clean images and tackles the optimization difficulty caused by gradient vanishing, and thus obtains performance gains when the network goes deeper

That is, the skip connections help overcome the optimization difficulty caused by vanishing gradients, so the network can go deeper and thereby gain performance. As a result, it outperformed the SOTA of the time on denoising and super-resolution.

Copyright notice

This article was written by [umbrellalalalala]. Please include a link to the original when reposting. Thank you.

https://yzsam.com/2022/04/202204230543474274.html

边栏推荐

- delete和truncate

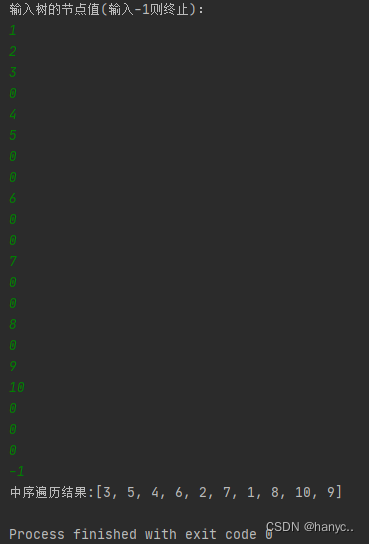

- 关于二叉树的遍历

- Graphic numpy array matrix

- Multithreading and high concurrency (3) -- synchronized principle

- JVM series (3) -- memory allocation and recycling strategy

- 基于thymeleaf实现数据库图片展示到浏览器表格

- 2.devops-sonar安装

- 自動控制(韓敏版)

- The difference between cookie and session

- The official website of UMI yarn create @ umijs / UMI app reports an error: the syntax of file name, directory name or volume label is incorrect

猜你喜欢

创建二叉树

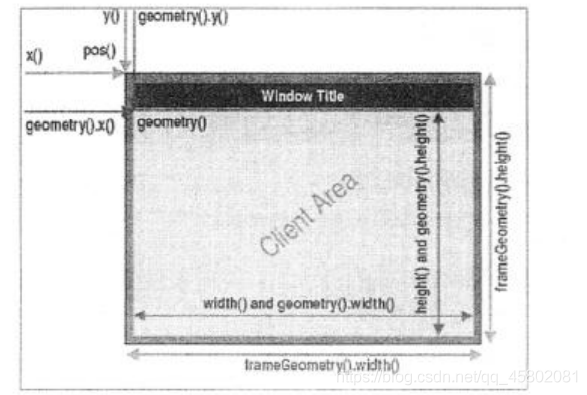

Pyqy5 learning (2): qmainwindow + QWidget + qlabel

关于二叉树的遍历

opensips(1)——安装opensips详细流程

Implementation of displaying database pictures to browser tables based on thymeleaf

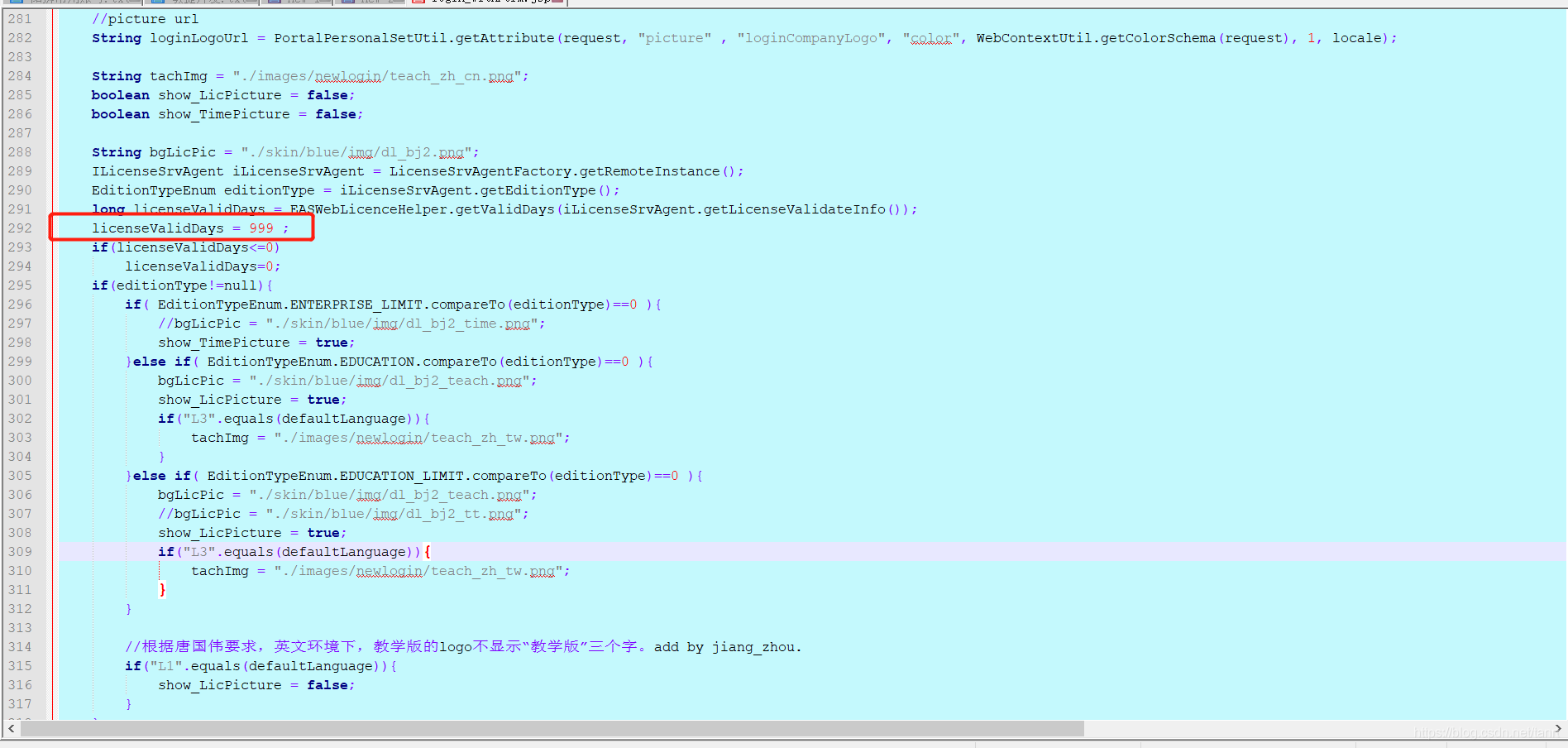

开发环境 EAS登录 license 许可修改

Pytorch Learning record (XIII): Recurrent Neural Network

![去噪论文——[Noise2Void,CVPR19]Noise2Void-Learning Denoising from Single Noisy Images](/img/9d/487c77b5d25d3e37fb629164c804e2.png)

去噪论文——[Noise2Void,CVPR19]Noise2Void-Learning Denoising from Single Noisy Images

Conda 虚拟环境管理(创建、删除、克隆、重命名、导出和导入)

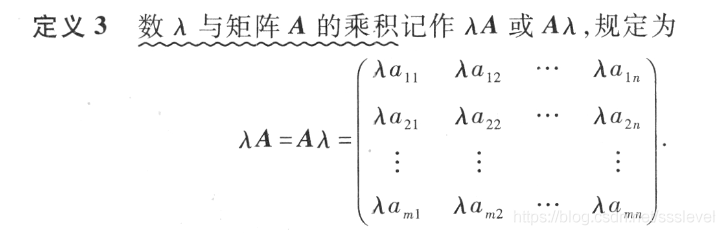

线性代数第二章-矩阵及其运算

随机推荐

治疗TensorFlow后遗症——简单例子记录torch.utils.data.dataset.Dataset重写时的图片维度问题

Complete example demonstration of creating table to page - joint table query

创建企业邮箱账户命令

Pytorch learning record (XII): learning rate attenuation + regularization

线代第四章-向量组的线性相关

PyTorch笔记——实现线性回归完整代码&手动或自动计算梯度代码对比

String notes

You cannot access this shared folder because your organization's security policy prevents unauthenticated guests from accessing it

2.devops-sonar安装

自動控制(韓敏版)

Use Matplotlib. In Jupiter notebook Pyplot server hangs up and crashes

深入源码分析Servlet第一个程序

字符串(String)笔记

图像恢复论文简记——Uformer: A General U-Shaped Transformer for Image Restoration

实操—Nacos安装与配置

创建二叉树

Pytoch -- data loading and processing

JVM series (3) -- memory allocation and recycling strategy

CONDA virtual environment management (create, delete, clone, rename, export and import)

给yarn配置国内镜像加速器