
web crawler error

2022-08-10 02:53:00 【bamboogz99】

When I used the request module in urllib, the call raised an HTTPError, but no error code was shown.

I rewrote the code to display the specific error:

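    # Exception handling
    from urllib import request, error

    try:
        # `request` is imported directly, so call request.urlopen (not urllib.request.urlopen)
        response = request.urlopen('https://movie.douban.com/top250')
    except error.HTTPError as e:
        # Use HTTPError to inspect the reason, status code, and response headers
        print(e.reason, e.code, e.headers, sep='\n')

I visited Douban here, and the request returned error 418. On checking, I found that this is an anti-crawling measure.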

Solution: instead of requesting the page with a bare URL, build the request with the headers option set, so that it carries request headers such as User-Agent.
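The original snippet for this step is missing from the page, so the following is only a minimal sketch of what adding headers could look like with `urllib.request.Request`. The User-Agent string, the 10-second timeout, and the helper names `build_request` and `fetch` are assumptions for illustration, not the author's code.

```python
from urllib import error, request

# Assumed browser-like User-Agent; any current browser string could be used instead.
HEADERS = {
    "User-Agent": (
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) "
        "AppleWebKit/537.36 (KHTML, like Gecko) Chrome/104.0 Safari/537.36"
    )
}


def build_request(url):
    """Return a Request object that carries the headers above (hypothetical helper)."""
    return request.Request(url, headers=HEADERS)


def fetch(url):
    """Fetch url and return the decoded body, or None if the server answers with an HTTP error."""
    try:
        with request.urlopen(build_request(url), timeout=10) as response:
            return response.read().decode("utf-8")
    except error.HTTPError as e:
        # Same diagnostics as before: reason, status code, response headers
        print(e.reason, e.code, e.headers, sep="\n")
        return None
```

Calling `fetch('https://movie.douban.com/top250')` would then send the request with the extra headers. Whether a given User-Agent is accepted depends on the site's current anti-crawling rules.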

The second question is how to read the information on multiple pages. Looking at the page links on Douban shows that they contain page number information, so a for loop can be used to generate the link for each page.
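The code for this step is also missing, so here is a hedged sketch of the loop. It assumes the page is selected through a `start` query parameter that advances by 25 items per page. That URL pattern, the placeholder User-Agent, and the helper names `page_urls` and `fetch_pages` are assumptions and should be checked against the links actually shown on the site.

```python
from urllib import request

BASE_URL = "https://movie.douban.com/top250"
PAGE_SIZE = 25  # assumed number of items per page
HEADERS = {"User-Agent": "Mozilla/5.0"}  # assumed placeholder; use a full browser string in practice


def page_urls(pages):
    """Build one URL per page, assuming a `start` offset query parameter."""
    urls = []
    for page in range(pages):
        urls.append(f"{BASE_URL}?start={page * PAGE_SIZE}")
    return urls


def fetch_pages(pages):
    """Fetch each page in turn and return the decoded bodies as a list."""
    bodies = []
    for url in page_urls(pages):
        req = request.Request(url, headers=HEADERS)
        with request.urlopen(req, timeout=10) as response:
            bodies.append(response.read().decode("utf-8"))
    return bodies
```

Keeping URL generation in `page_urls` separate from the network call makes it possible to check the generated links before sending any requests.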

边栏推荐

- mysql -sql编程

- color socks problem

- Visual low-code system practice based on design draft identification

- Research on Ethernet PHY Chip LAN8720A Chip

- 【每日一题】1413. 逐步求和得到正数的最小值

- Process management and task management

- 算法与语音对话方向面试题库

- 【QT】QT项目:自制Wireshark

- Sikuli's Automated Testing Technology Based on Pattern Recognition

- 卷积神经网络识别验证码

猜你喜欢

随机推荐

数据在内存中的存储

中级xss绕过【xss Game】

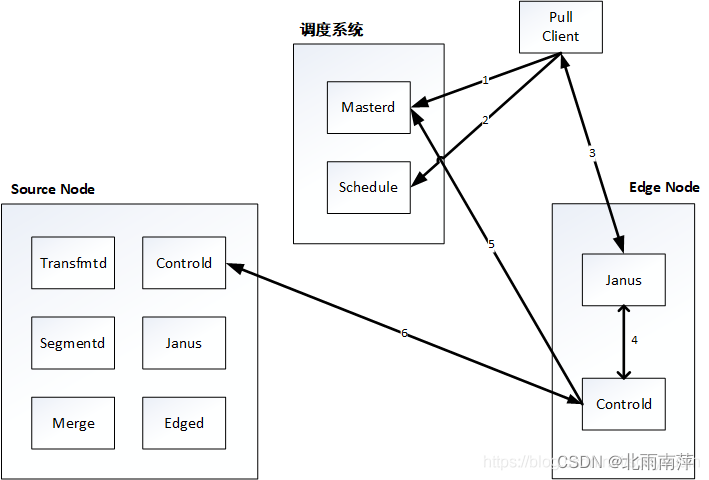

Janus实际生产案例

【引用计数器及学习MRC的理由 Objective-C语言】

grafana9配置邮箱告警

openpose脚部标注问题梳理

idea 删除文件空行

【UNR #6 C】稳健型选手(分治)(主席树)(二分)

《GB39732-2020》PDF download

51单片机驱动HMI串口屏,串口屏的下载方式

微透镜阵列的高级模拟

[网鼎杯 2020 青龙组]AreUSerialz

HCIP——综合交换实验

浏览器中的history详解

【QT】QT项目:自制Wireshark

翻译工具-翻译工具下载批量自动一键翻译免费

type-C 边充电边听歌(OTG) PD芯片方案,LDR6028 PD充电加OTG方案

牛客刷题——剑指offer(第四期)

夏克-哈特曼波前传感器

[QNX Hypervisor 2.2用户手册]10.14 smmu