
XGBoost Series: A Practical XGB Parameter Tuning Guide (with Source Code)

2022-08-09 14:56:00 【wwlsm_zql】

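XGBoost has remained popular in machine learning, even in the deep learning era. For both classification and regression it makes a solid baseline and is often good enough to be the final model. Because it applies to so many kinds of tasks, it exposes a large number of tunable parameters, and on the same task different settings can produce different, sometimes drastically different, results. Finding a usable and effective parameter set for the task at hand is therefore an essential step.

The previous article, XGB Series: XGB Parameter Guide (https://blog.csdn.net/wwlsm_zql/article/details/126192959), introduced all of XGBoost's parameters, which fall into three groups: general parameters, booster parameters, and task parameters. With that many parameters, trying combinations by hand is a huge amount of work, so this article shows how to automate the search with hyperopt and find the best parameters for your own task.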

Install the dependencies

!pip install xgboost scikit-learn hyperopt

Import the basic libraries

# Import the basic packages

import pandas as pd

import numpy as np

import xgboost as xgb

from sklearn.metrics import accuracy_score

from hyperopt import STATUS_OK, Trials, fmin, hp, tpe

from sklearn.model_selection import train_test_split

Load and split the data

df = pd.read_csv("drive/MyDrive/data_daily/Wholesalecustomersdata.csv")

x = df.drop('Channel', axis=1)

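# Copy the column so the relabeling below does not write into a view of df

y = df['Channel'].copy()

# Convert the labels to 0/1: Channel 2 becomes 0, Channel 1 stays 1

y[y == 2] = 0

# Split into training and test sets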

X_train, X_test, y_train, y_test = train_test_split(x, y, test_size = 0.3, random_state = 0)

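Tune the parameters with an optimizer

- Define the parameter space, listing the candidate range for every parameter

- Define the training procedure and the objective to evaluate (the loss function)

- Run the search

- Retrieve the best parameter combination

Initialize the parameter space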

hyperopt provides the following expressions for defining a search space:

hp.choice(label, options): returns one of the options, which should be a list or tuple.

hp.randint(label, upper): returns a random integer in the range [0, upper).

hp.uniform(label, low, high): returns a value drawn uniformly between low and high.

hp.quniform(label, low, high, q): returns round(uniform(low, high) / q) * q, that is, a value rounded to a multiple of q. The result is still a float, which is why the objective function casts it with int().

hp.normal(label, mu, sigma): returns a real value that is normally distributed with mean mu and standard deviation sigma.
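The hp.quniform rule can be reproduced with the standard library alone, which makes it easier to see what kind of value comes back. The sketch below is an illustration, not hyperopt's implementation: quniform_sketch and the fixed seed are made up for this example, and the explicit conversion to float mirrors the fact that hyperopt hands these values back as floats such as 7.0.

```python
import random


def quniform_sketch(low, high, q, rng):
    """Apply the documented rule round(uniform(low, high) / q) * q.

    Hypothetical helper for illustration only; the result is converted
    to float because hyperopt returns quniform values as floats.
    """
    return float(round(rng.uniform(low, high) / q) * q)


rng = random.Random(0)  # fixed seed so the sketch is repeatable

# Same bounds as the max_depth entry in the search space: 3 to 18, step 1
samples = [quniform_sketch(3, 18, 1, rng) for _ in range(5)]
print(samples)
```

Each sample is a whole number stored as a float, so parameters such as max_depth need an int() cast before they are passed to XGBoost.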

space = {'max_depth': hp.quniform('max_depth', 3, 18, 1),

         'gamma': hp.uniform('gamma', 1, 9),

         'reg_alpha': hp.quniform('reg_alpha', 40, 180, 1),

         'reg_lambda': hp.uniform('reg_lambda', 0, 1),

         'colsample_bytree': hp.uniform('colsample_bytree', 0.5, 1),

         'min_child_weight': hp.quniform('min_child_weight', 0, 10, 1),

         'n_estimators': 180,

         'seed': 0

         }

Define the optimization objective

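def objective(space):

    # eval_metric and early_stopping_rounds are constructor arguments in XGBoost 1.6+;

    # passing them to fit() is deprecated

    clf = xgb.XGBClassifier(

        n_estimators=space['n_estimators'], max_depth=int(space['max_depth']), gamma=space['gamma'],

        # reg_lambda and seed are defined in the space, so pass them through as well

        reg_alpha=int(space['reg_alpha']), reg_lambda=space['reg_lambda'],

        min_child_weight=int(space['min_child_weight']),

        # colsample_bytree is a fraction in (0, 1], so it must not be cast to int

        colsample_bytree=space['colsample_bytree'],

        random_state=space['seed'], eval_metric="auc", early_stopping_rounds=10)

    evaluation = [(X_train, y_train), (X_test, y_test)]

    clf.fit(X_train, y_train, eval_set=evaluation, verbose=False)

    # predict() already returns class labels, so no thresholding is needed

    pred = clf.predict(X_test)

    accuracy = accuracy_score(y_test, pred)

    print("SCORE:", accuracy)

    # fmin minimizes the loss, so return the negative accuracy

    return {'loss': -accuracy, 'status': STATUS_OK}

Run the search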

trials = Trials()

best_hyperparams = fmin(fn=objective,

                        space=space,

                        algo=tpe.suggest,

                        max_evals=100,

                        trials=trials)

Print the results

best_hyperparams holds the parameter combination with the lowest loss among all evaluated trials.

trials is an object that stores the information from every evaluation, such as the sampled hyperparameters and the resulting loss.

fmin is the optimization function that minimizes the loss. Here it takes five inputs: fn, space, algo, max_evals, and trials.

The search algorithm is tpe.suggest, hyperopt's Tree-structured Parzen Estimator.
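Because fmin only minimizes, a score that should be maximized, such as accuracy, is returned with its sign flipped. A minimal sketch of that convention follows. The accuracy values and variable names are assumptions made up for illustration, not output from a real run.

```python
# Hypothetical results in the shape the objective returns;
# "ok" stands in for hyperopt's STATUS_OK constant.
results = [
    {"loss": -0.81, "status": "ok"},
    {"loss": -0.89, "status": "ok"},
    {"loss": -0.85, "status": "ok"},
]

# A minimizer keeps the trial with the smallest loss ...
best_trial = min(results, key=lambda r: r["loss"])

# ... which is the trial with the highest accuracy once the sign is flipped back.
best_accuracy = -best_trial["loss"]
print("Best accuracy:", best_accuracy)
```

The same reasoning applies to any metric where larger is better: negate it in the objective, then negate the reported loss again when reading the result.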

print("The best hyperparameters are : ","\n")

print(best_hyperparams)
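The dictionary returned by fmin contains the raw sampled values, so the hp.quniform entries arrive as floats, and to my understanding the fixed entries (n_estimators and seed) are not included. Below is a minimal sketch of turning such a result into arguments for a final model. to_xgb_params is a hypothetical helper, and example_best holds made-up values in the expected shape, not the output of an actual search.

```python
# Parameters that were sampled with hp.quniform and should be whole numbers
INT_PARAMS = ("max_depth", "reg_alpha", "min_child_weight")


def to_xgb_params(best, fixed):
    """Cast whole-number parameters to int and add back the fixed settings.

    Hypothetical helper for illustration; not part of hyperopt or XGBoost.
    """
    params = {k: int(v) if k in INT_PARAMS else float(v) for k, v in best.items()}
    params.update(fixed)
    return params


# Made-up values in the shape fmin returns; not the result of a real run.
example_best = {
    "max_depth": 7.0,
    "gamma": 2.5,
    "reg_alpha": 52.0,
    "reg_lambda": 0.3,
    "colsample_bytree": 0.8,
    "min_child_weight": 4.0,
}

final_params = to_xgb_params(example_best, fixed={"n_estimators": 180, "random_state": 0})
print(final_params)
```

The resulting dictionary can then be unpacked into the model, for example xgb.XGBClassifier(**final_params), and refit on the training data.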

猜你喜欢

利用qrcode组件实现图片转二维码

原子的核型结构及氢原子的波尔理论

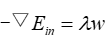

正则化原理的简单分析(L1/L2正则化)

C#轻量级ORM使用 Dapper+Contrib

写在光学之前--振动和波

【超级账本开发者系列】专访——肖慧 : 不忘初心,方得始终

Simple analysis of regularization principle (L1 / L2 regularization)

ASP.Net Core实战——身份认证(JWT鉴权)

It is deeply recognized that the compiler can cause differences in the compilation results

Linux安装mysql8.0详细步骤--(快速安装好)

随机推荐

微信小程序封装api

Simply record offsetof and container_of

小型项目如何使用异步任务管理器实现不同业务间的解耦

爱因斯坦的光子理论

如何防止浏览器指纹关联

排序方法(希尔、快速、堆)

pyspark explode时增加序号

OpenCV简介与搭建使用环境

pyspark dataframe分位数计算

【小白必看】初始C语言(下)

PAT1027 打印沙漏

LNK1123:转换到COFF期间失败:文件无效或损坏

编译器不同,模式不同,对结果的影响

二叉排序树的左旋与右旋

CV复习:BatchNorm

自定义指令,实现默认头像和用户上传头像的切换

Sequelize配置中的timezone测试

英语议论文读写02 Engineering

Qt控件-QTextEdit使用记录

The recycle bin has been showed no problem to empty the icon

https://colab.research.google.com/drive/1dm3Bk0VlEuBed8FMMeoZGWUR8xY84Ho9#scrollTo=ILPR3vXWdAvY
