
Understanding LSTM (Long Short-Term Memory)

2022-04-23 10:03:00 【Code ape chicken】

LSTM (Long Short-Term Memory)

0. Starting from RNNs

A recurrent neural network (RNN) is a kind of neural network for processing sequential data. Compared with an ordinary neural network, it can handle data whose elements change along a sequence. For example, the meaning of a word can differ depending on what was said before it, and RNNs handle this kind of problem well.

1. Ordinary RNN

Let's briefly introduce the ordinary RNN.

Its basic form is shown in the figure below (figures are taken from the NTU lecture slides of Professor Li Hongyi (Hung-yi Lee)):

Here:

- $x$ is the input data of the current state, and $h$ is the input received from the previous node.
- $y$ is the output of the current node, and $h'$ is the output passed to the next node.

From the formula in the figure above, we can see that the output $h'$ depends on both $x$ and $h$.

$y$ is usually computed by feeding $h'$ into a linear layer (mainly for dimension mapping) and then applying $\mathrm{softmax}$ to classify it and obtain the required output.

How exactly $y$ is computed from $h'$ often depends on the specific model.
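As a rough illustration of one RNN step, the sketch below computes $h'$ from $x$ and $h$, then maps $h'$ through a linear layer and softmax to get $y$. It is a minimal sketch under stated assumptions, not the figure's exact formula: it assumes $\tanh$ as the activation, omits bias terms, and uses hypothetical weight names `W_h`, `W_x` and `W_o`. Plain Python lists keep it dependency-free.

```python
import math

def matvec(W, v):
    """Multiply matrix W (a list of rows) by vector v."""
    return [sum(w * a for w, a in zip(row, v)) for row in W]

def softmax(v):
    """Softmax with the max subtracted for numerical stability."""
    m = max(v)
    exps = [math.exp(a - m) for a in v]
    total = sum(exps)
    return [e / total for e in exps]

def rnn_step(x, h, W_h, W_x, W_o):
    """One step (assumed form): h' = tanh(W_h h + W_x x), y = softmax(W_o h')."""
    pre = [a + b for a, b in zip(matvec(W_h, h), matvec(W_x, x))]
    h_new = [math.tanh(p) for p in pre]   # h' depends on both x and h
    y = softmax(matvec(W_o, h_new))       # linear layer (dimension mapping) + softmax
    return h_new, y

# Input size 1, hidden size 2, 3 output classes (hypothetical weights).
h, y = rnn_step([0.2], [0.0, 0.0],
                [[0.5, 0.0], [0.0, 0.5]],
                [[1.0], [-1.0]],
                [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
```

Here `W_o` maps the 2-dimensional hidden state to 3 class scores, which is the "dimension mapping" role of the linear layer mentioned above.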

Feeding in the input as a sequence, we obtain the following (unrolled) form of the RNN.
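Unrolling just means applying the same step to each element in turn and carrying the state forward. The sketch below assumes a scalar (1-dimensional) RNN with hypothetical weights `w_h` and `w_x` and no bias, so the effect of carrying $h$ along is easy to see.

```python
import math

def run_rnn(xs, w_h=0.5, w_x=1.0, h0=0.0):
    """Unroll a scalar RNN over xs, reusing the same weights at every step."""
    h = h0
    hs = []
    for x in xs:
        h = math.tanh(w_h * h + w_x * x)  # new state depends on current x and previous h
        hs.append(h)
    return hs

early = run_rnn([1.0, 0.0, 0.0])  # signal at the start of the sequence
late = run_rnn([0.0, 0.0, 1.0])   # same values, different order
```

Even after the input drops to zero, `early` keeps a decaying trace of the first input, and `early[-1]` differs from `late[-1]`: the order of the sequence matters, which is exactly what a feedforward network ignores.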

2. LSTM

2.1 What is LSTM?

Long short-term memory (LSTM) is a special kind of RNN, designed mainly to mitigate the vanishing and exploding gradient problems that arise when training on long sequences. Simply put, compared with an ordinary RNN, an LSTM performs better on longer sequences.
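To make the vanishing-gradient point concrete, here is a rough numerical sketch (not from the original post). It assumes a scalar tanh RNN $h_t = \tanh(w h_{t-1} + x)$ with hypothetical values $w = 0.9$ and $x = 0.5$, and computes $\partial h_T / \partial h_0$ by the chain rule. For comparison, it takes only the LSTM's direct cell-state path, where (as described in Section 2.3) $c^t$ is a gated copy of $c^{t-1}$ plus new content, holds the forget gate fixed at a hypothetical 0.99, and ignores gradients flowing through $h$ and the gates.

```python
import math

def rnn_grad(w, x, steps):
    """d h_T / d h_0 for the scalar RNN h_t = tanh(w * h_{t-1} + x), starting from h_0 = 0."""
    h, grad = 0.0, 1.0
    for _ in range(steps):
        h = math.tanh(w * h + x)
        grad *= w * (1.0 - h * h)  # d h_t / d h_{t-1} = w * (1 - tanh^2)
    return grad

def cell_path_grad(forget, steps):
    """d c_T / d c_0 along the direct path c_t = forget * c_{t-1} + (new content)."""
    return forget ** steps

rnn_g = rnn_grad(0.9, 0.5, 50)
cell_g = cell_path_grad(0.99, 50)
```

After 50 steps the RNN factor is a product of 50 numbers well below 1 and collapses toward zero, while the cell-path factor $0.99^{50}$ stays around 0.6. This additive, gated path is the intuition behind LSTM handling long sequences better.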

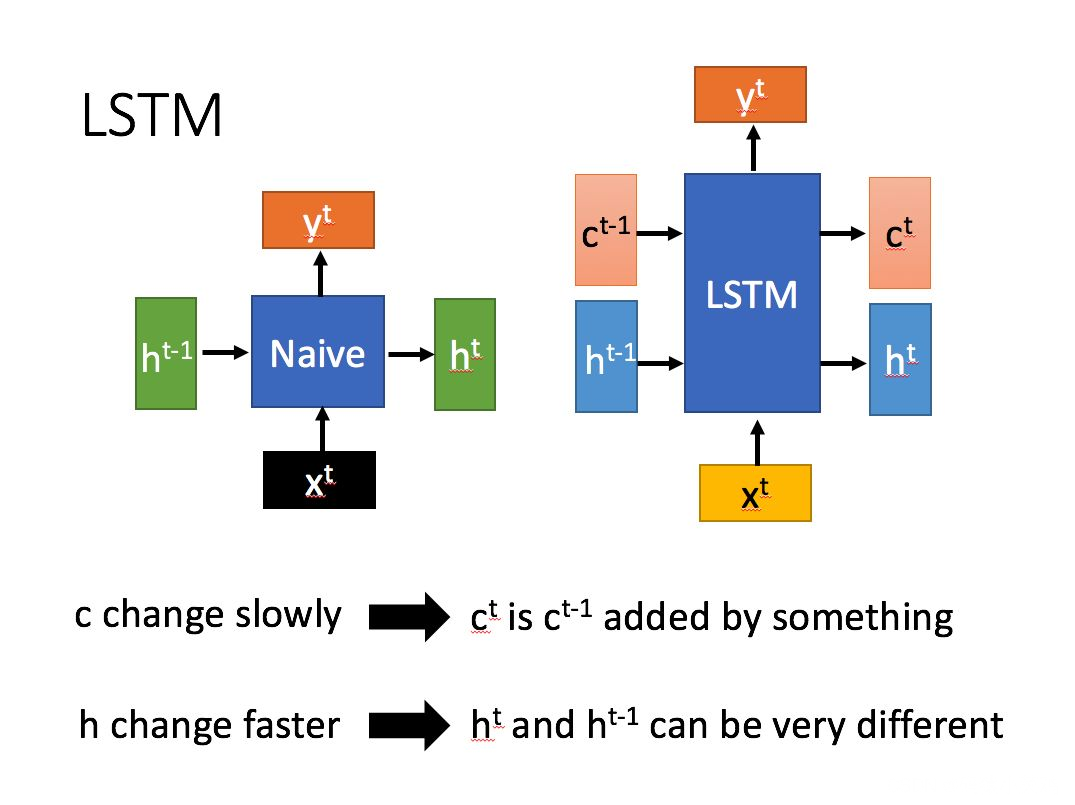

The main differences in inputs and outputs between the LSTM structure (right) and an ordinary RNN are as follows.

Whereas an RNN passes only one state $h^t$, an LSTM passes two states: $c^t$ (cell state) and $h^t$ (hidden state). (Tip: $h^t$ in an RNN corresponds to $c^t$ in an LSTM.)

The transmitted $c^t$ changes slowly: the output $c^t$ is usually the previous state $c^{t-1}$ plus some values.

$h^t$, by contrast, often differs greatly from one node to the next.

2.2 Inside the LSTM

Below we analyze the internal structure of the LSTM in detail.

First, the current input $x^t$ is concatenated with $h^{t-1}$ passed down from the previous state, and the concatenation is used to compute four states.

Here $z^f$, $z^i$ and $z^o$ are obtained by multiplying the concatenated vector by a weight matrix and passing the result through a $\mathrm{sigmoid}$ activation, which maps it to values between 0 and 1 so it can act as a gate. $z$ is obtained by passing the result through a $\tanh$ activation, which maps it to values between -1 and 1 ($\tanh$ is used here because $z$ serves as input data rather than as a gate).
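Written out, the four states look roughly as follows. This assumes a separate weight matrix per state (named $W$, $W^i$, $W^f$, $W^o$ here for illustration) and omits bias terms; $\sigma$ denotes the sigmoid.

```latex
\begin{aligned}
z   &= \tanh\!\left(W   \begin{bmatrix} x^t \\ h^{t-1} \end{bmatrix}\right) \\
z^i &= \sigma\!\left(W^i \begin{bmatrix} x^t \\ h^{t-1} \end{bmatrix}\right) \\
z^f &= \sigma\!\left(W^f \begin{bmatrix} x^t \\ h^{t-1} \end{bmatrix}\right) \\
z^o &= \sigma\!\left(W^o \begin{bmatrix} x^t \\ h^{t-1} \end{bmatrix}\right)
\end{aligned}
```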

Next, let's look at how these four states are used inside the LSTM.

$\odot$ is the Hadamard product, i.e. element-wise multiplication of the corresponding entries of two matrices, so the two operands must have the same shape. $\oplus$ denotes matrix addition.

2.3 LSTM has three main internal stages:
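A small worked example of the two operations on 3-element vectors:

```latex
\begin{bmatrix}1\\2\\3\end{bmatrix} \odot \begin{bmatrix}4\\5\\6\end{bmatrix}
  = \begin{bmatrix}4\\10\\18\end{bmatrix},
\qquad
\begin{bmatrix}1\\2\\3\end{bmatrix} \oplus \begin{bmatrix}4\\5\\6\end{bmatrix}
  = \begin{bmatrix}5\\7\\9\end{bmatrix}
```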

- Forget stage. This stage selectively forgets the input passed in from the previous node. Simply put, it will “forget the unimportant and remember the important”.

Specifically, the computed $z^f$ (f stands for forget) acts as the forget gate, controlling which parts of the previous state $c^{t-1}$ are kept and which are forgotten.

- Selective memory stage. This stage selectively “memorizes” the input of the current step, mainly by choosing what to remember from the input $x^t$: important things are recorded, unimportant ones much less so. The current input content is represented by the previously computed $z$, and the selection gate signal is controlled by $z^i$ (i stands for information).

Adding the results of these two steps gives the $c^t$ passed to the next state, $c^t = z^f \odot c^{t-1} + z^i \odot z$. This is the first formula in the figure above.

- Output stage. This stage determines what is output as the current state. It is controlled mainly by $z^o$, and the $c^t$ obtained in the previous stage is also scaled (passed through a $\tanh$ activation): $h^t = z^o \odot \tanh(c^t)$.

As in an ordinary RNN, the output $y^t$ is usually obtained by transforming $h^t$.
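Putting the three stages together, one LSTM step can be sketched in plain Python as below. This is a minimal illustration, not the original author's code: it assumes one weight matrix per state (the names `W`, `W_i`, `W_f`, `W_o` are hypothetical), omits biases, forms $[x^t, h^{t-1}]$ by list concatenation, and returns only $h^t$ and $c^t$. Computing $y^t$ from $h^t$ is left to the surrounding model, as with an ordinary RNN.

```python
import math

def sigmoid(u):
    return 1.0 / (1.0 + math.exp(-u))

def matvec(W, v):
    """Multiply matrix W (a list of rows) by vector v."""
    return [sum(w * a for w, a in zip(row, v)) for row in W]

def lstm_step(x, h_prev, c_prev, W, W_i, W_f, W_o):
    """One LSTM step: the four states, then forget, selective memory and output stages."""
    xh = x + h_prev                               # concatenate [x^t, h^{t-1}]
    z   = [math.tanh(u) for u in matvec(W, xh)]   # candidate input, in (-1, 1)
    z_i = [sigmoid(u) for u in matvec(W_i, xh)]   # selection (input) gate, in (0, 1)
    z_f = [sigmoid(u) for u in matvec(W_f, xh)]   # forget gate, in (0, 1)
    z_o = [sigmoid(u) for u in matvec(W_o, xh)]   # output gate, in (0, 1)
    # Forget + selective memory: c^t = z^f ⊙ c^{t-1} + z^i ⊙ z
    c = [f * cp + i * zz for f, cp, i, zz in zip(z_f, c_prev, z_i, z)]
    # Output stage: h^t = z^o ⊙ tanh(c^t)
    h = [o * math.tanh(cc) for o, cc in zip(z_o, c)]
    return h, c

# Input size 1, hidden size 2, so every weight matrix is 2 x 3 (all zeros here).
zeros = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
h, c = lstm_step([0.3], [0.0, 0.0], [1.0, -2.0], zeros, zeros, zeros, zeros)
```

With all-zero weights every gate is exactly 0.5 and $z = 0$, so the sketch halves the old cell state and outputs $h^t = 0.5 \tanh(c^t)$; this makes the role of each gate easy to check by hand before plugging in trained weights.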

Reference

Link to the original text: http://iloveeli.top:8090/archives/lstmlongshort-termmemory

Copyright notice

This article was written by [Code ape chicken]. Please include a link to the original when reposting. Thank you.

https://yzsam.com/2022/04/202204230956434692.html
