当前位置:网站首页>Extensive reading of alexnet papers: landmark papers in the field of deep learning CV neurips2012

Extensive reading of alexnet papers: landmark papers in the field of deep learning CV neurips2012

2022-04-21 16:49:00 【zqwlearning】

Alexnet Network is deep learning CV Landmark papers in the field ,LSVRC2012 Champion network ( extensive reading )

List of articles

subject

ImageNet Classification with Deep Convolutional Neural Networks

Using deep convolution neural network ImageNet classification

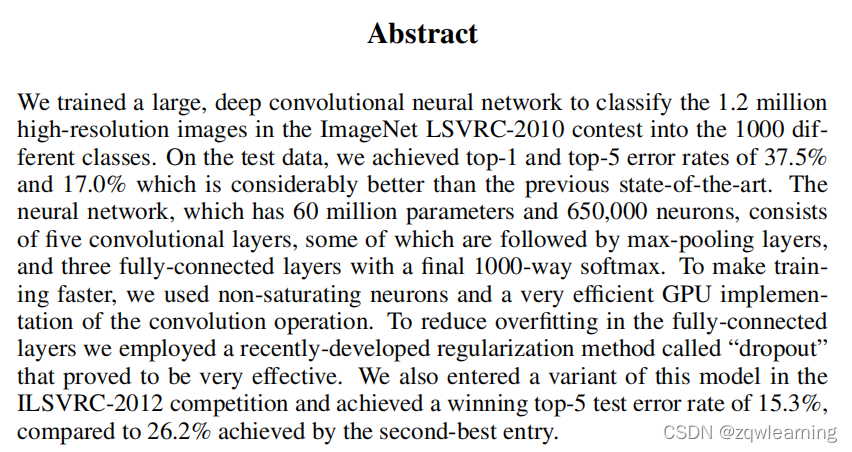

Abstract

We trained a large-scale deep convolution neural network , take ImageNet LSVRC-2010 In the competition 120 Ten thousand high-resolution images are divided into 1000 Different categories . On the test data , We have 37.5% and 17.0% Of top-1 and top-5 Error rate , Much better than the previous state-of-the-art level . The neural network has 6000 Ten thousand parameters and 65 Ten thousand neurons , from 5 It is composed of convolution layers , Some of these layers are followed by the largest collection layer . And three fully connected layers , And finally 1000 road softmax. To make training faster , We used unsaturated neurons and a very effective GPU Implementation of convolution operation . In order to reduce the overfitting of the full connection layer , We use a recently developed technology called "dropout " The regularization method of , It turns out that this method is very effective . We also ILSVRC-2012 A variant of this model was used in the competition , And obtained 15.3% Of top-5 Test error rate , And the second best work reaches 26.2%.

notes :ILSVRC(ImageNet Large Scale Visual Recognition Challenge) It is one of the most sought after and authoritative academic competitions in the field of machine vision in recent years , It represents the highest level in the image field .ImageNet The dataset is ILSVRC The competition uses data sets , Led by Professor Li Feifei of Stanford University , Contains more than 1400 Ten thousand full-size marked pictures .ILSVRC The competition will start from ImageNet Extract some samples from the data set , With 2012 Year as an example , The training set of the game contains 1281167 A picture , The validation set contains 50000 A picture , The test set is 100000 A picture .

ILSVRC The competition involves a variety of tasks , Image classification is just one of them . Mainly adopts top-5 How to evaluate the error rate , That is to say, for each graph, we give 5 Second guess result , as long as 5 If you hit the real category once in a time, you will be classified correctly , Finally, count the error rate of miss . and top-1 The error rate is given one guess at a time

1 introduction

At present, object recognition methods mainly use machine learning methods . To improve their performance , We can collect larger data sets , Learn more powerful models , And use better techniques to prevent over fitting . Until recently , In the order of tens of thousands of images, the data set of labeled images is relatively small ( for example NORB, Caltech-101/256,CIFAR-10/100 ). The simple identification task can be well solved through this scale of data set , Especially when they are enhanced by label protective conversion . for example , Currently in MNIST The best error rate on the number recognition task (<0.3%) Close to human performance . But objects in the real environment show considerable differences , So learn to recognize them , You have to use a larger training set . It is necessary to use a much larger training set . As a matter of fact , The disadvantages of small image data sets have been widely recognized , But only recently has it been possible to collect labeled data sets with millions of images . New large data sets include LabelMe and ImageNet, The former consists of hundreds of thousands of completely segmented images , The latter consists of more than 2.2 Of 10000 categories 1500 High resolution images of 10000 markers .

To learn thousands of objects from millions of images , We need a person with great learning ability / Capacity model . However , The huge complexity of the object recognition task means that this problem cannot even be solved by ImageNet Such a large data set to specify , So our model should also have a lot of prior knowledge to make up for the fact that we don't have all the data . Convolutional neural networks (CNNs) It makes up such a kind of model . Their ability can be controlled by changing their depth and breadth , And they also have a strong influence on the characteristics of the image 、 Mostly correct assumptions ( That is, the stationarity of statistical data and the locality of pixel dependence ). therefore , Compared with standard feedforward neural networks with similar layers ,CNNs The connection and parameters are much less , So they're easier to train . There are far fewer connections and parameters , So they're easier to train , Their theoretical best performance may be just a little worse .

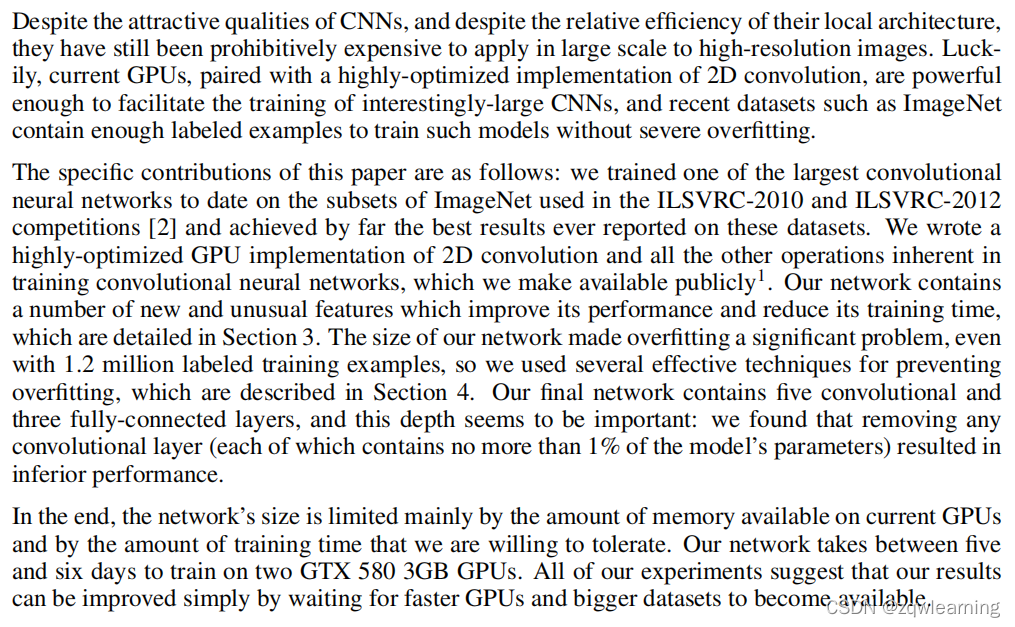

Even though CNNs Have attractive qualities , Although its local structure has considerable efficiency , However, their cost in large-scale application to high-resolution images is still too high . Fortunately, , current GPU Matched with highly optimized two-dimensional convolution implementation , Enough to promote interesting large-scale CNNs Training for , And the latest data set , Such as ImageNet, Include enough tag instances to train such a model , Without serious over fitting .

The contribution of this paper is as follows : We are ILSVRC-2010 and ILSVRC-2012 Used in the game ImageNet One of the largest convolutional neural networks has been trained on subsets , And achieved the best results reported on these data sets so far . We write a highly optimized two-dimensional convolution and train all other operations inherent in convolutional neural networks GPU Realization , We publicly provide this implementation . Our network contains some new and unusual features , These features improve its performance and reduce its training time , These will be in the 3 Section . The size of our network makes over quasi synthesis an important problem , Even if there is 120 10000 tagged training instances , So we use several effective techniques to prevent over fitting , These technologies will be introduced in the 4 Section description . Our final network consists of five convolution layers and three full connection layers , This depth seems to matter : We found that , Remove any accretion layer ( The parameters contained in each convolution layer do not exceed that of the model 1%) Will lead to performance degradation . Last , The scale of the network is mainly limited by the current GPU The amount of memory available on the and the training time we are willing to tolerate . Our network is in two GTX580 3GB GPU It takes five to six days to train . All our experiments show that , Just wait for faster GPU And larger data sets appear , Our results can be improved .

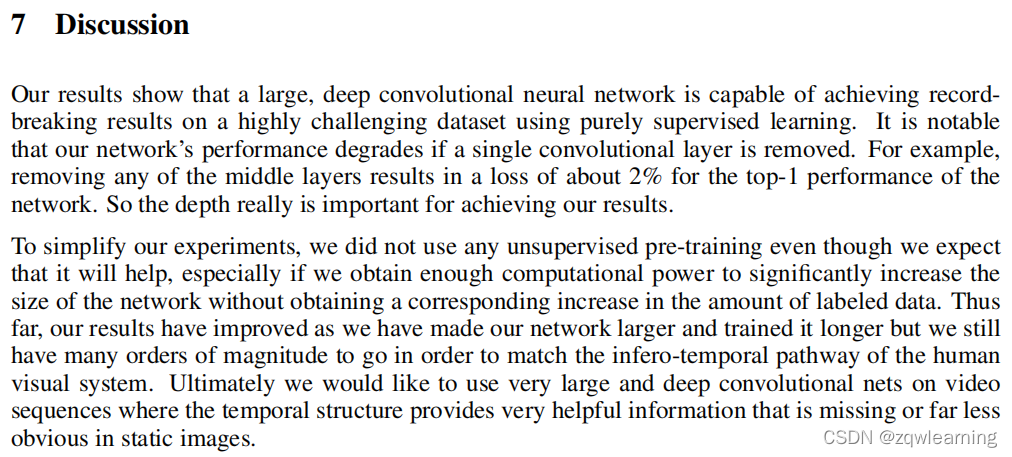

7 Discuss

Our results show that , A large-scale deep convolutional neural network can achieve record breaking results using pure supervised learning on a challenging data set . It is worth noting that , If you remove a convolution , The performance of our network will decline . for example , Remove any intermediate layer , Will make the network top-1 The performance loss is about 2%. therefore , Depth is really important to achieve our results .

To simplify our experiment , We didn't use any unsupervised pre training , Even if we expect it to help , Especially if we get enough computing power to greatly increase the scale of the network , Without obtaining the corresponding increase in the amount of tag data . up to now , With the expansion of our network scale and the extension of training time , Our results have improved , But we have to do with reaching the spatiotemporal reasoning ability of the human visual system (infero-temporal pathway of the human visual system) It's far away . Final , We hope to use a very large deep convolution network on video sequences , Because the time structure provides very useful information , And this information is missing or far from obvious in the still image .

版权声明

本文为[zqwlearning]所创,转载请带上原文链接,感谢

https://yzsam.com/2022/04/202204211639366991.html

边栏推荐

猜你喜欢

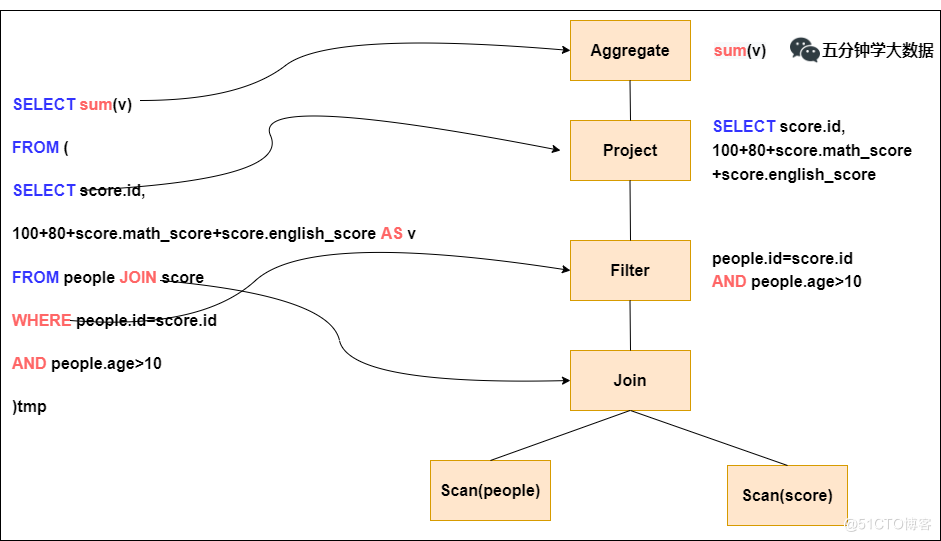

Detailed explanation of spark SQL underlying execution process

信号与系统2022春季作业-第九次作业

Historical evolution, application and security requirements of the Internet of things

C语言程序的环境,编译+链接

IvorySQL亮相于PostgresConf SV 2022 硅谷Postgres大会

机器学习吴恩达课程总结(五)

2018-8-10-使用-Resharper-特性

物联网的历史演进、应用和安全要求

Are you sure you don't want to see it yet? Managing your code base in this way is both convenient and worry free

IOS development interview strategy (KVO, KVC, multithreading, lock, runloop, timer)

随机推荐

ls -al各字段意思

What is the future development trend of mobile processor

高数 | 【多元函数微分学】如何判断二元微分式是否为全微分

30. 构造方法的重载

俄罗斯门户网站 Yandex 开源 YDB 数据库

Program design TIANTI race l2-007 family real estate (it's too against the sky. I always look at the solution of the problem, resulting in forgetting the problem and looking up how to write the collec

OTS parsing error: invalid version tag解决方法

k线图及单个k线图形态分析

力扣55. 跳跃游戏

Summary of Wu Enda's course of machine learning (5)

微软IE本地文件探测漏洞

Start redis process

C语言-细说函数与结构体

C sliding verification code | puzzle verification | slidecaptcha

中国创投,凛冬将至

29. There are 1, 2, 3 and 4 numbers. How many three digits that are different from each other and have no duplicate numbers

Sogou website divulges information

Discussion on next generation security protection

把握住这5点!在职考研也能上岸!

What role can NPU in mobile phone play