当前位置:网站首页>记一次 ERROR scheduler.AsyncEventQueue: Dropping event from queue shared导致OOM

记一次 ERROR scheduler.AsyncEventQueue: Dropping event from queue shared导致OOM

2022-08-09 13:11:00 【南风知我意丿】

文章目录

问题描述

日志:

2022-07-23 01:03:40 ERROR scheduler.AsyncEventQueue: Dropping event from queue shared. This likely means one of the listeners is too slow and cannot keep up with the rate at which tasks are being started by the scheduler.

2022-07-23 01:03:40 WARN scheduler.AsyncEventQueue: Dropped 1 events from shared since the application started.

2022-07-23 01:04:41 WARN scheduler.AsyncEventQueue: Dropped 2335 events from shared since Sat Jul 23 01:03:40 CST 2022.

2022-07-23 01:05:42 WARN scheduler.AsyncEventQueue: Dropped 2252 events from shared since Sat Jul 23 01:04:41 CST 2022.

2022-07-23 01:06:42 WARN scheduler.AsyncEventQueue: Dropped 1658 events from shared since Sat Jul 23 01:05:42 CST 2022.

2022-07-23 01:07:42 WARN scheduler.AsyncEventQueue: Dropped 1405 events from shared since Sat Jul 23 01:06:42 CST 2022.

2022-07-23 01:08:43 WARN scheduler.AsyncEventQueue: Dropped 1651 events from shared since Sat Jul 23 01:07:42 CST 2022.

2022-07-23 01:09:43 WARN scheduler.AsyncEventQueue: Dropped 1983 events from shared since Sat Jul 23 01:08:43 CST 2022.

2022-07-23 01:10:43 WARN scheduler.AsyncEventQueue: Dropped 1680 events from shared since Sat Jul 23 01:09:43 CST 2022.

2022-07-23 01:11:43 WARN scheduler.AsyncEventQueue: Dropped 1643 events from shared since Sat Jul 23 01:10:43 CST 2022.

2022-07-23 01:12:44 WARN scheduler.AsyncEventQueue: Dropped 1959 events from shared since Sat Jul 23 01:11:43 CST 2022.

2022-07-23 01:13:45 WARN scheduler.AsyncEventQueue: Dropped 2315 events from shared since Sat Jul 23 01:12:44 CST 2022.

2022-07-23 01:14:47 WARN scheduler.AsyncEventQueue: Dropped 2473 events from shared since Sat Jul 23 01:13:45 CST 2022.

2022-07-23 01:15:47 WARN scheduler.AsyncEventQueue: Dropped 1962 events from shared since Sat Jul 23 01:14:47 CST 2022.

2022-07-23 01:16:48 WARN scheduler.AsyncEventQueue: Dropped 1645 events from shared since Sat Jul 23 01:15:47 CST 2022.

2022-07-23 01:17:48 WARN scheduler.AsyncEventQueue: Dropped 1885 events from shared since Sat Jul 23 01:16:48 CST 2022.

2022-07-23 01:18:48 WARN scheduler.AsyncEventQueue: Dropped 2391 events from shared since Sat Jul 23 01:17:48 CST 2022.

2022-07-23 01:19:48 WARN scheduler.AsyncEventQueue: Dropped 1501 events from shared since Sat Jul 23 01:18:48 CST 2022.

2022-07-23 01:20:49 WARN scheduler.AsyncEventQueue: Dropped 1733 events from shared since Sat Jul 23 01:19:48 CST 2022.

2022-07-23 01:21:49 WARN scheduler.AsyncEventQueue: Dropped 1867 events from shared since Sat Jul 23 01:20:49 CST 2022.

2022-07-23 01:22:50 WARN scheduler.AsyncEventQueue: Dropped 1561 events from shared since Sat Jul 23 01:21:49 CST 2022.

2022-07-23 01:23:51 WARN scheduler.AsyncEventQueue: Dropped 1364 events from shared since Sat Jul 23 01:22:50 CST 2022.

2022-07-23 01:24:52 WARN scheduler.AsyncEventQueue: Dropped 1579 events from shared since Sat Jul 23 01:23:51 CST 2022.

2022-07-23 01:25:52 WARN scheduler.AsyncEventQueue: Dropped 1847 events from shared since Sat Jul 23 01:24:52 CST 2022.

Exception in thread "streaming-job-executor-0" java.lang.OutOfMemoryError: GC overhead limit exceeded

at org.apache.xbean.asm7.ClassReader.readLabel(ClassReader.java:2447)

at org.apache.xbean.asm7.ClassReader.createDebugLabel(ClassReader.java:2477)

at org.apache.xbean.asm7.ClassReader.readCode(ClassReader.java:1689)

at org.apache.xbean.asm7.ClassReader.readMethod(ClassReader.java:1284)

at org.apache.xbean.asm7.ClassReader.accept(ClassReader.java:688)

at org.apache.xbean.asm7.ClassReader.accept(ClassReader.java:400)

at org.apache.spark.util.ClosureCleaner$.clean(ClosureCleaner.scala:359)

at org.apache.spark.util.ClosureCleaner$.clean(ClosureCleaner.scala:162)

at org.apache.spark.SparkContext.clean(SparkContext.scala:2362)

at org.apache.spark.rdd.RDD.$anonfun$mapPartitions$1(RDD.scala:834)

at org.apache.spark.rdd.RDD$$Lambda$2785/604434085.apply(Unknown Source)

at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:151)

at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:112)

at org.apache.spark.rdd.RDD.withScope(RDD.scala:388)

at org.apache.spark.rdd.RDD.mapPartitions(RDD.scala:833)

at org.apache.spark.sql.Dataset.rdd$lzycompute(Dataset.scala:3200)

at org.apache.spark.sql.Dataset.rdd(Dataset.scala:3198)

at cn.huorong.utils.PhoenixUtil$.jdbcBatchInsert(PhoenixUtil.scala:216)

at cn.huorong.run.SampleTaskSinkHbaseMapping_OfficialService.storePhoenix(SampleTaskSinkHbaseMapping_OfficialService.scala:94)

at cn.huorong.run.SampleTaskSinkHbaseMapping_OfficialService.$anonfun$sink$1(SampleTaskSinkHbaseMapping_OfficialService.scala:74)

at cn.huorong.run.SampleTaskSinkHbaseMapping_OfficialService.$anonfun$sink$1$adapted(SampleTaskSinkHbaseMapping_OfficialService.scala:37)

at cn.huorong.run.SampleTaskSinkHbaseMapping_OfficialService$$Lambda$1277/1357069303.apply(Unknown Source)

at org.apache.spark.streaming.dstream.DStream.$anonfun$foreachRDD$2(DStream.scala:629)

at org.apache.spark.streaming.dstream.DStream.$anonfun$foreachRDD$2$adapted(DStream.scala:629)

at org.apache.spark.streaming.dstream.DStream$$Lambda$1291/1167476357.apply(Unknown Source)

at org.apache.spark.streaming.dstream.ForEachDStream.$anonfun$generateJob$2(ForEachDStream.scala:51)

at org.apache.spark.streaming.dstream.ForEachDStream$$Lambda$1576/1966952151.apply$mcV$sp(Unknown Source)

at scala.runtime.java8.JFunction0$mcV$sp.apply(JFunction0$mcV$sp.java:23)

at org.apache.spark.streaming.dstream.DStream.createRDDWithLocalProperties(DStream.scala:417)

at org.apache.spark.streaming.dstream.ForEachDStream.$anonfun$generateJob$1(ForEachDStream.scala:51)

at org.apache.spark.streaming.dstream.ForEachDStream$$Lambda$1563/607343052.apply$mcV$sp(Unknown Source)

at scala.runtime.java8.JFunction0$mcV$sp.apply(JFunction0$mcV$sp.java:23)

2022-07-23 02:01:34 WARN scheduler.AsyncEventQueue: Dropped 429 events from shared since Sat Jul 23 02:00:29 CST 2022.

Exception in thread "dispatcher-event-loop-0" java.lang.OutOfMemoryError: GC overhead limit exceeded

at scala.runtime.ObjectRef.create(ObjectRef.java:24)

at org.apache.spark.rpc.netty.Inbox.process(Inbox.scala:86)

at org.apache.spark.rpc.netty.MessageLoop.org$apache$spark$rpc$netty$MessageLoop$$receiveLoop(MessageLoop.scala:75)

at org.apache.spark.rpc.netty.MessageLoop$$anon$1.run(MessageLoop.scala:41)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

原因分析:

1.主要原因:

2022-07-23 01:03:40 WARN scheduler.AsyncEventQueue: Dropped 1 events from shared since the application started.

- 所有 Spark 作业、阶段和任务都被推送到事件队列。

- 后端侦听器从该队列读取 Spark UI 事件,并呈现 Spark UI。

- 事件队列(

spark.scheduler.listenerbus.eventqueue.capacity)的默认容量为 10000。

如果推送到事件队列的事件数超过后端侦听器可使用的事件数,则会从队列中删除最旧的事件,并且侦听器永远不会使用它们。

这些事件会丢失,并且不会在 Spark UI 中呈现。

2.源码分析

/** 初始化 event队列大小 LISTENER_BUS_EVENT_QUEUE_PREFIX = "spark.scheduler.listenerbus.eventqueue" LISTENER_BUS_EVENT_QUEUE_CAPACITY = .createWithDefault(10000) **/

private[scheduler] def capacity: Int = {

val queueSize = conf.getInt(s"$LISTENER_BUS_EVENT_QUEUE_PREFIX.$name.capacity",

conf.get(LISTENER_BUS_EVENT_QUEUE_CAPACITY))

assert(queueSize > 0, s"capacity for event queue $name must be greater than 0, " +

s"but $queueSize is configured.")

queueSize //默认10000

}

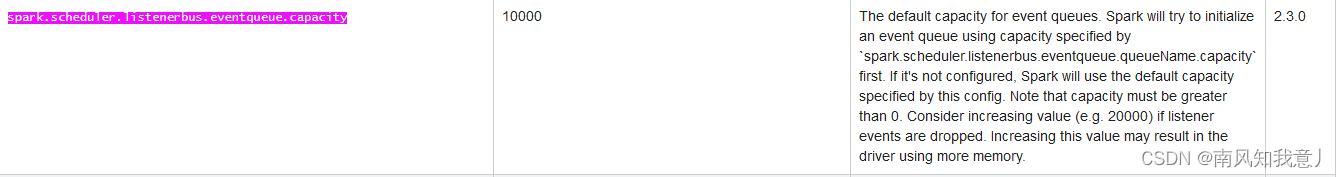

3.Spark官网

解决方案:

1.解决丢event的方法实际上是用Spark提供的参数,静态的让队列在初始化的时候容量变大了,这需要driver的内存大一点

2.将群集级别的群集的 Spark 配置中的 spark.scheduler.listenerbus.eventqueue.capacity 值设置为大于 10000 的值。

3.此值设置应用状态事件队列的容量,其中包含内部应用程序状态侦听器的事件。 增大此值后,事件队列可以容纳更多的事件,但可能会导致驱动程序使用更多内存。

#参考

Spark里Histroy Server丢task,job和Stage问题调研

新发现

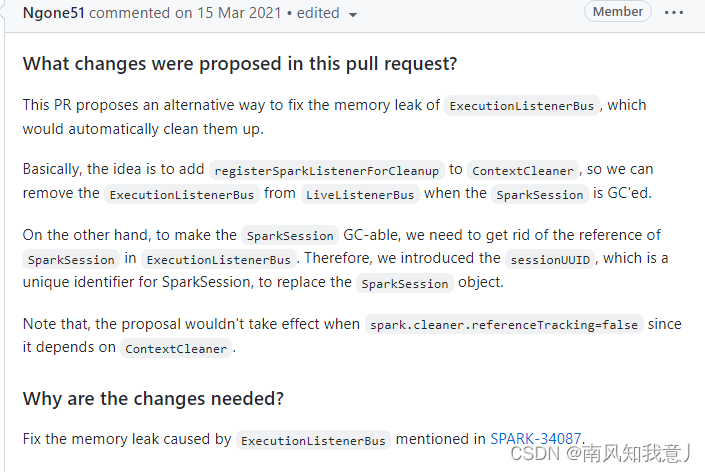

最近,同事找到了git上别人提的PR,发现了问题的本质,下面贴出原链接

https://github.com/apache/spark/pull/31839

原文:

翻译一下:

这个 PR 提出了一种修复 ExectionListenerBus 的内存泄漏的替代方法,这种方法会自动清除这些内存泄漏。

基本上,我们的想法是将 registerSparkListenerForCleanup 添加到 ContextCleaner,

这样当 SparkSession 被 GC‘ ed 时,我们就可以从 LiveListenerBus 中删除 ExectionListenerBus。

另一方面,为了使 SparkSession 能够 GC,我们需要去掉 ExectionListenerBus 中的 SparkSession 引用。

因此,我们引入了 sessionUUID (一个 SparkSession 的唯一标识符)来替换 SparkSession 对象。

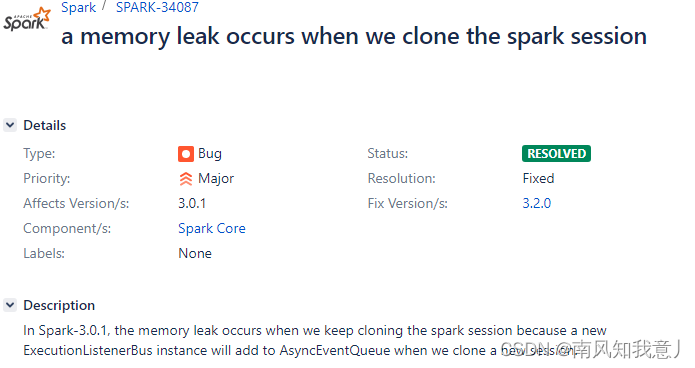

SPARK-34087

分析

从这我们可以看出,这个是spark3.0.1的一个bug,ExecutionListenerBus这个玩意会一直增长,gc后也不会减小,又因为默认队列长度只有1万,增长到1万,会删除旧的,但是有个问题就是删除旧的速度 小于新增加的速度,那队列就会变得很长,一直在内存里面就会导致Driver OOM

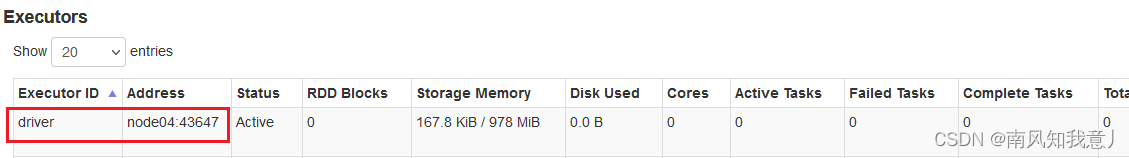

下面记录一下如何查看 ExecutionListenerBus

- 1.找到driver所在节点

- 找到driver所在的AM

[node04 userconf]# jps | grep ApplicationMaster

168299 ApplicationMaster

168441 ApplicationMaster

[node04 userconf]# ps -ef | grep application_1658675121201_0408 | grep 168441

hadoop 168441 168429 24 Jul27 ? 07:53:51 /usr/java/jdk1.8.0_202/bin/java -server -Xmx2048m -Djava.io.tmpdir=/tmp/hadoop-hadoop/nm-local-dir/usercache/hadoop/appcache/application_1658675121201_0408/container_e47_1658675121201_0408_01_000001/tmp -verbose:gc -XX:+PrintGCDetails -XX:+PrintGCDateStamps -XX:+PrintGCTimeStamps -XX:+PrintHeapAtGC -Dspark.yarn.app.container.log.dir=/var/log/udp/2.0.0.0/hadoop/userlogs/application_1658675121201_0408/container_e47_1658675121201_0408_01_000001 org.apache.spark.deploy.yarn.ApplicationMaster --class cn.huorong.SampleTaskScanMapping_Official --jar file:/data/udp/2.0.0.0/dolphinscheduler/exec/process/5856696115520/5888199825472_7/70/4192/spark实时/taskmapping_official_stream-3.0.3.jar --arg -maxR --arg 100 --arg -t --arg hr_task_scan_official --arg -i --arg 3 --arg -g --arg mappingOfficialHbaseOfficial --arg -pn --arg OFFICIAL --arg -ptl --arg SAMPLE_TASK_SCAN,SCAN_SHA1_M_TASK,SCAN_TASK_M_SHA1 --arg -hp --arg p --arg -local --arg false --properties-file /tmp/hadoop-hadoop/nm-local-dir/usercache/hadoop/appcache/application_1658675121201_0408/container_e47_1658675121201_0408_01_000001/__spark_conf__/__spark_conf__.properties --dist-cache-conf /tmp/hadoop-hadoop/nm-local-dir/usercache/hadoop/appcache/application_1658675121201_0408/container_e47_1658675121201_0408_01_000001/__spark_conf__/__spark_dist_cache__.properties

- 3.进入 arthas

//切到普通用户

java -jar arthas-boot.jar --telnet-port 9998 -http-port -1

找到168441 对应坐标

- 4 利用arthas 查看实例数量

//多执行几次 可以看到 实例数量一直在增长

[[email protected]]$ vmtool --action getInstances --className *ExecutionListenerBus --limit 10000 --express 'instances.length'

@Integer[2356]

解决:

spark官方建议,升级到spark 3.0.3即可解决,我们原本是3.0.1,小版本升级,就替换了spark jar包即可,再去监控一下listenerBus数量,就发现数量就会出现波动了。

把那个任务开启,观察了2天,发现一切正常。至此,排查了5天算是解决了。

边栏推荐

猜你喜欢

随机推荐

NC84 完全二叉树结点数

Process/Thread Related in Sandbox - 2

力扣解法汇总1413-逐步求和得到正数的最小值

机器学习web服务化实战:一次吐血的服务化之路 (转载非原创)

[MRCTF2020]套娃-1

NC61 两数之和

面试攻略系列(三)-- 高级开发工程师面试问些啥?

gin's middleware and routing grouping

RobotFramework 之 条件判断

NC53 删除链表的倒数第n个节点

【瑞吉外卖】day05:增、删、改、查分类以及公共字段自动填充

FFmpeg multimedia file processing (the basic concept of ffmpeg processing stream data)

音频基础学习——声音的本质、术语与特性

关于做2D游戏时,Canvas边界显示在Game窗口的问题

Microsoft 10/11 命令行打开系统设置页(WUAP,!WIN32)

The sword refers to the offer, cuts the rope 2

将 .json 格式 转换成 .xml格式

音视频录入的pts和dts问题

vim常用命令

tkiner组件之滚动文本框(scrolledtext )相关操作