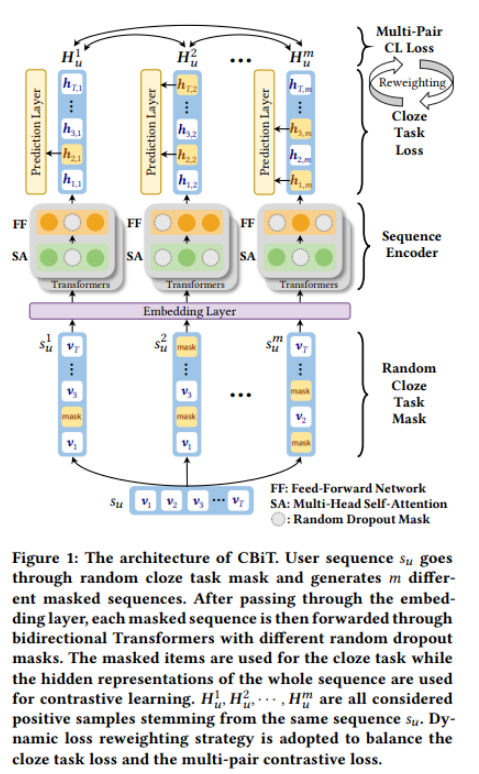

Contrastive learning with a Transformer-based sequence encoder has proven effective for sequential recommendation. It maximizes the agreement between paired sequence augmentations that share similar semantics. However, existing contrastive learning methods for sequential recommendation mainly use a left-to-right unidirectional Transformer as the base encoder, which is suboptimal because user behavior does not necessarily follow a strict left-to-right order. To address this problem, we propose a new framework named Contrastive learning with Bidirectional Transformers for sequential recommendation (CBiT). Specifically, we first apply a sliding window technique to long user sequences in bidirectional Transformers, which allows a more fine-grained division of user sequences. Then we combine the cloze task mask and the dropout mask to generate high-quality positive samples and perform multi-pair contrastive learning, which shows better performance and adaptability than ordinary one-pair contrastive learning. Furthermore, we introduce a new dynamic loss reweighting strategy to balance the cloze task loss and the contrastive loss. Experimental results on three public benchmark datasets show that our model outperforms state-of-the-art models for sequential recommendation.
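To make the first two steps more concrete, here is a minimal sketch in plain Python of how a long user sequence could be cut into sliding windows and how several cloze-masked views of one window could be produced as positive samples. It is an illustration under stated assumptions, not the authors' implementation: the function names, the `MASK` token id, the stride, and the masking probability are all hypothetical, and the dropout mask (which acts inside the encoder) is not modeled here.

```python
import random

MASK = 0  # hypothetical mask token id; real item ids are assumed to start at 1


def sliding_windows(seq, max_len, stride=1):
    """Split a long sequence into overlapping windows of at most max_len items."""
    if len(seq) <= max_len:
        return [list(seq)]
    last_start = len(seq) - max_len
    starts = list(range(0, last_start + 1, stride))
    if starts[-1] != last_start:
        # Make sure the most recent interactions are always covered.
        starts.append(last_start)
    return [list(seq[s:s + max_len]) for s in starts]


def cloze_mask(seq, mask_prob, rng):
    """Replace each item with MASK with probability mask_prob.

    Returns (masked_seq, labels); labels holds the original item at masked
    positions and None elsewhere.
    """
    masked, labels = [], []
    for item in seq:
        if rng.random() < mask_prob:
            masked.append(MASK)
            labels.append(item)
        else:
            masked.append(item)
            labels.append(None)
    return masked, labels


def masked_views(seq, num_views, mask_prob=0.15, seed=0):
    """Draw num_views independent cloze masks of the same sequence.

    Views of the same sequence would be treated as positives of each other
    in multi-pair contrastive learning.
    """
    rng = random.Random(seed)
    return [cloze_mask(seq, mask_prob, rng) for _ in range(num_views)]


if __name__ == "__main__":
    history = [11, 12, 13, 14, 15, 16]
    for window in sliding_windows(history, max_len=4):
        print(window, masked_views(window, num_views=3, mask_prob=0.3))
```

With more than two views per window, every pair of views can serve as a positive pair, which is the intuition behind moving from one-pair to multi-pair contrastive learning.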

Paper link: https://arxiv.org/pdf/2208.03895.pdf
