GBase 8a Database (南大通用) Study Notes 04: Deploying a Distributed Cluster

2022-08-09 22:42:00 【阳光九叶草LXGZXJ】

I. Environment

| Item | Value |
|---|---|
| CPU | Intel Core i5-1035G1 CPU @ 1.00GHz |
| Operating system | CentOS Linux release 7.9.2009 (Core) |
| Memory | 3G |
| Logical cores | 2 |
| Node 1 IP | 192.168.142.10 |
| Node 2 IP | 192.168.142.11 |

II. Cluster Deployment

1. Extract the installation package

[[email protected] pkg]# tar -xvf GBase8a_MPP_Cluster-NoLicense-8.6.2_build43-R33-132743-redhat7-x86_64.tar.bz2

gcinstall/

gcinstall/bundle_data.tar.bz2

gcinstall/InstallTar.py

gcinstall/license.txt

gcinstall/CorosyncConf.py

gcinstall/gbase_data_timezone.sql

gcinstall/CGConfigChecker.py

gcinstall/gcinstall.py

gcinstall/cluster.conf

gcinstall/Restore.py

gcinstall/unInstall.py

gcinstall/fulltext.py

gcinstall/InstallFuns.py

gcinstall/extendCfg.xml

gcinstall/bundle.tar.bz2

gcinstall/BUILDINFO

gcinstall/GetOSType.py

gcinstall/RestoreLocal.py

gcinstall/FileCheck.py

gcinstall/rmt.py

gcinstall/dependRpms

gcinstall/demo.options

gcinstall/rootPwd.json

gcinstall/example.xml

gcinstall/pexpect.py

gcinstall/replace.py

gcinstall/SSHThread.py

gcinstall/loginUserPwd.json

2. Configure demo.options

[[email protected] pkg]# cd gcinstall/

[[email protected] gcinstall]# ll

总用量 93016

-rw-r--r--. 1 gbase gbase 292 12月 17 2021 BUILDINFO

-rw-r--r--. 1 root root 2249884 12月 17 2021 bundle_data.tar.bz2

-rw-r--r--. 1 root root 87478657 12月 17 2021 bundle.tar.bz2

-rw-r--r--. 1 gbase gbase 1951 12月 17 2021 CGConfigChecker.py

-rw-r--r--. 1 gbase gbase 309 12月 17 2021 cluster.conf

-rwxr-xr-x. 1 gbase gbase 4167 12月 17 2021 CorosyncConf.py

-rw-r--r--. 1 gbase gbase 434 12月 17 2021 demo.options

-rw-r--r--. 1 root root 154 12月 17 2021 dependRpms

-rw-r--r--. 1 gbase gbase 684 12月 17 2021 example.xml

-rwxr-xr-x. 1 gbase gbase 419 12月 17 2021 extendCfg.xml

-rw-r--r--. 1 gbase gbase 781 12月 17 2021 FileCheck.py

-rw-r--r--. 1 gbase gbase 2700 12月 17 2021 fulltext.py

-rw-r--r--. 1 gbase gbase 4818440 12月 17 2021 gbase_data_timezone.sql

-rwxr-xr-x. 1 gbase gbase 76282 12月 17 2021 gcinstall.py

-rwxr-xr-x. 1 gbase gbase 3362 12月 17 2021 GetOSType.py

-rw-r--r--. 1 gbase gbase 156505 12月 17 2021 InstallFuns.py

-rw-r--r--. 1 gbase gbase 237364 12月 17 2021 InstallTar.py

-rw-r--r--. 1 gbase gbase 1114 12月 17 2021 license.txt

-rwxr-xr-x. 1 gbase gbase 296 12月 17 2021 loginUserPwd.json

-rwxr-xr-x. 1 gbase gbase 75990 12月 17 2021 pexpect.py

-rwxr-xr-x. 1 gbase gbase 25093 12月 17 2021 replace.py

-rw-r--r--. 1 gbase gbase 1715 12月 17 2021 RestoreLocal.py

-rwxr-xr-x. 1 gbase gbase 6622 12月 17 2021 Restore.py

-rw-r--r--. 1 gbase gbase 7312 12月 17 2021 rmt.py

-rwxr-xr-x. 1 gbase gbase 296 12月 17 2021 rootPwd.json

-rw-r--r--. 1 gbase gbase 2717 12月 17 2021 SSHThread.py

-rwxr-xr-x. 1 gbase gbase 21710 12月 17 2021 unInstall.py

[[email protected] gcinstall]# vim demo.options

[[email protected] gcinstall]# cat demo.options

installPrefix= /opt

coordinateHost = 192.168.142.10,192.168.142.11

#coordinateHostNodeID = 234,235,237

dataHost = 192.168.142.10,192.168.142.11

#existCoordinateHost =

#existDataHost =

loginUser= root

loginUserPwd = 'qwer1234'

#loginUserPwdFile = loginUserPwd.json

dbaUser = gbase

dbaGroup = gbase

dbaPwd = 'gbase'

rootPwd = 'qwer1234'

#rootPwdFile = rootPwd.json

dbRootPwd = ''

#mcastAddr = 226.94.1.39

mcastPort = 5493

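| Parameter | Description |
|---|---|
| installPrefix | Installation directory. |
| coordinateHost | IP list of all coordinator nodes, separated by ",". |
| coordinateHostNodeID | Supports IPv6 addresses. When the cluster nodes use IPv4 addresses, this parameter has no effect and does not need to be set. When the cluster nodes use IPv6 addresses, it must be set manually as a comma-separated list of positive integers, one for each IP in coordinateHost. Example: coordinateHostNodeID = 1,2,3. |
| existCoordinateHost | IP list of all existing coordinator nodes, separated by ",". |
| existDataHost | IP list of all existing data nodes, separated by ",". |
| loginUser | The user that connects to each cluster node over ssh and then switches to root with su to run commands. Required. The default user is root. The group this user belongs to must also be loginUser. |
| loginUserPwd | Use this when loginUser has the same password on every cluster node. |
| loginUserPwdFile | Use this when loginUser has different passwords on different cluster nodes. loginUserPwd and loginUserPwdFile cannot be set together (the installer reports an error); choose one depending on whether the passwords match. Exactly one of them must be set. The default password is 111111. |
| dbaUser | Operating system user for the database administrator. Default: gbase. |
| dbaGroup | Operating system group of the database administrator user. Default: gbase. |
| dbaPwd | Password of the operating system database administrator user. |
| rootPwd | Password of the operating system root user. |
| rootPwdFile | Use this when root has different passwords on different nodes. It cannot be set together with rootPwd (the installer reports an error). The default password is 111111. |
| dbRootPwd | Password of the database root user. Not needed when installing a cluster; it only takes effect during cluster upgrade, expansion, and node replacement. |
| mcastAddr | Multicast address. Default: 226.94.1.39. Multicast can cause network storms, so it is not recommended. If the mcastAddr parameter is removed, the installation uses UDPU mode, which is the recommended choice. |
| mcastPort | Multicast port. Default: 5493. Has no effect when installing in UDPU mode. |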
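
Before running the installer, it can help to sanity-check demo.options against the rules in the table above. The following is a minimal Python 3 sketch, not part of the GBase tooling: `parse_options` and `check_options` are hypothetical helpers, and the parsing rules (one `key = value` per line, a leading `#` means the line is commented out) are assumptions based only on the file shown above.

```python
import ipaddress

# Pairs that the parameter table says must not be set together.
EXCLUSIVE_PAIRS = [("loginUserPwd", "loginUserPwdFile"), ("rootPwd", "rootPwdFile")]


def parse_options(text):
    """Parse 'key = value' lines; lines starting with '#' are skipped (assumption)."""
    options = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        options[key.strip()] = value.strip().strip("'\"")
    return options


def check_options(options):
    """Return a list of problems found; an empty list means none were found."""
    problems = []
    for first, second in EXCLUSIVE_PAIRS:
        if first in options and second in options:
            problems.append(f"{first} and {second} must not both be set")
    if "loginUserPwd" not in options and "loginUserPwdFile" not in options:
        problems.append("one of loginUserPwd or loginUserPwdFile is required")
    for key in ("coordinateHost", "dataHost"):
        for host in filter(None, options.get(key, "").split(",")):
            try:
                ipaddress.ip_address(host.strip())
            except ValueError:
                problems.append(f"{key}: {host.strip()!r} is not a valid IP address")
    return problems


SAMPLE = """
installPrefix= /opt
coordinateHost = 192.168.142.10,192.168.142.11
dataHost = 192.168.142.10,192.168.142.11
loginUser= root
loginUserPwd = 'qwer1234'
#loginUserPwdFile = loginUserPwd.json
rootPwd = 'qwer1234'
"""

if __name__ == "__main__":
    print(check_options(parse_options(SAMPLE)))
```

The check only covers the mutual-exclusion and IP-list rules stated in the table; whatever else gcinstall.py validates is not modelled here.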

3. Install the software

[[email protected] gcinstall]# ./gcinstall.py --silent=demo.options

Output like the following indicates success:

192.168.142.11 Installing gcluster.

192.168.142.10 Install gcluster on host 192.168.142.10 successfully.

192.168.142.11 Install gcluster on host 192.168.142.11 successfully.

192.168.142.10 Install gcluster on host 192.168.142.10 successfully.

Starting all gcluster nodes...

Adding new datanodes to gcware...

InstallCluster Successfully

4. Check the cluster status

[[email protected] gcinstall]# gcadmin

CLUSTER STATE: ACTIVE

CLUSTER MODE: NORMAL

=====================================================================

| GBASE COORDINATOR CLUSTER INFORMATION |

=====================================================================

| NodeName | IpAddress |gcware |gcluster |DataState |

---------------------------------------------------------------------

| coordinator1 | 192.168.142.10 | OPEN | OPEN | 0 |

---------------------------------------------------------------------

| coordinator2 | 192.168.142.11 | OPEN | OPEN | 0 |

---------------------------------------------------------------------

=================================================================

| GBASE DATA CLUSTER INFORMATION |

=================================================================

|NodeName | IpAddress |gnode |syncserver |DataState |

-----------------------------------------------------------------

| node1 | 192.168.142.10 | OPEN | OPEN | 0 |

-----------------------------------------------------------------

| node2 | 192.168.142.11 | OPEN | OPEN | 0 |

-----------------------------------------------------------------
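
When the cluster has more than a couple of nodes, reading this table by eye gets tedious. The sketch below (Python 3) parses text in the layout shown above and lists any node whose row differs from the healthy values seen here. It assumes that `OPEN`, `OPEN`, `0` is the healthy combination because that is what the successful installation printed; `parse_node_rows` and `unhealthy_nodes` are hypothetical helper names, and the text would have to be captured from `gcadmin` separately.

```python
import ipaddress

HEALTHY = ("OPEN", "OPEN", "0")  # values seen in the healthy output above


def parse_node_rows(output):
    """Return (name, ip, state_1, state_2, data_state) tuples for node rows."""
    rows = []
    for line in output.splitlines():
        line = line.strip()
        if not (line.startswith("|") and line.endswith("|")):
            continue
        cells = [cell.strip() for cell in line[1:-1].split("|")]
        if len(cells) != 5:
            continue
        try:
            ipaddress.ip_address(cells[1])
        except ValueError:
            continue  # header row such as "NodeName | IpAddress | ..."
        rows.append(tuple(cells))
    return rows


def unhealthy_nodes(output):
    """Return the rows whose three state columns differ from HEALTHY."""
    return [row for row in parse_node_rows(output) if row[2:] != HEALTHY]


SAMPLE = """
| GBASE COORDINATOR CLUSTER INFORMATION |
| NodeName | IpAddress |gcware |gcluster |DataState |
| coordinator1 | 192.168.142.10 | OPEN | OPEN | 0 |
| coordinator2 | 192.168.142.11 | OPEN | OPEN | 0 |
| GBASE DATA CLUSTER INFORMATION |
|NodeName | IpAddress |gnode |syncserver |DataState |
| node1 | 192.168.142.10 | OPEN | OPEN | 0 |
| node2 | 192.168.142.11 | OPEN | OPEN | 0 |
"""

if __name__ == "__main__":
    print(unhealthy_nodes(SAMPLE))
```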

5. Set the distribution policy

[[email protected] gcinstall]# su - gbase

上一次登录:日 8月 7 20:29:00 CST 2022pts/3 上

[[email protected] ~]$ cd /opt/pkg/gcinstall/

[[email protected] gcinstall]$ vim gcChangeInfo.xml

[[email protected] gcinstall]$ cat gcChangeInfo.xml

<?xml version="1.0" encoding="utf-8"?>

<servers>

<rack>

<node ip="192.168.142.10"/>

<node ip="192.168.142.11"/>

</rack>

</servers>

[[email protected] gcinstall]$ gcadmin distribution gcChangeInfo.xml p 1 d 0

gcadmin generate distribution ...

[warning]: parameter [d num] is 0, the new distribution will has no segment backup

please ensure this is ok, input y or n: y

NOTE: node [192.168.142.10] is coordinator node, it shall be data node too

NOTE: node [192.168.142.11] is coordinator node, it shall be data node too

gcadmin generate distribution successful
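
For larger clusters, writing gcChangeInfo.xml by hand is error-prone. As a rough sketch, the file can be generated with Python 3's standard `xml.etree.ElementTree`. It reproduces only the `servers` / `rack` / `node ip=...` layout shown above; `build_change_info` is a hypothetical helper, and any other elements or attributes that gcadmin may accept are not covered.

```python
import xml.etree.ElementTree as ET


def build_change_info(racks):
    """Build the XML text from a list of racks, each rack being a list of IPs."""
    servers = ET.Element("servers")
    for rack_ips in racks:
        rack = ET.SubElement(servers, "rack")
        for ip in rack_ips:
            ET.SubElement(rack, "node", ip=ip)
    if hasattr(ET, "indent"):  # pretty-printing helper, Python 3.9+
        ET.indent(servers)
    body = ET.tostring(servers, encoding="unicode")
    return '<?xml version="1.0" encoding="utf-8"?>\n' + body + "\n"


if __name__ == "__main__":
    # One rack holding the two nodes used in this article.
    print(build_change_info([["192.168.142.10", "192.168.142.11"]]))
```

Redirecting the printed text into gcChangeInfo.xml gives a file equivalent to the one edited manually in this step.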

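| Parameter | Description |
|---|---|
| p | Number of segments on each node. |
| d | Number of replicas. |
| pattern number | Data distribution pattern: pattern 1 is load-balancing mode, pattern 2 is high-availability mode. The default is pattern 1. |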

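Note:

The number of nodes inside a rack in gcChangeInfo.xml must be greater than the value of parameter p (the number of primary segments stored on each node). Otherwise the following error occurs: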

[[email protected] gcinstall]$ gcadmin distribution gcChangeInfo.xml p 2 d 0

gcadmin generate distribution ...

[warning]: parameter [d num] is 0, the new distribution will has no segment backup

please ensure this is ok, input y or n: y

rack[1] node number:[2] shall be greater than segment number each node:[2]

please modify file [gcChangeInfo.xml] and try again

gcadmin generate distribution failed
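
The rule behind this failure can be checked before calling gcadmin. The Python 3 sketch below counts the nodes in each rack and reports racks that would not satisfy the condition for a given p. The strict "greater than" comparison is inferred from the error message above; gcadmin may apply further checks that are not modelled here, and `racks_too_small` is a hypothetical helper.

```python
import xml.etree.ElementTree as ET


def racks_too_small(xml_data, p):
    """Return (rack_index, node_count) for racks whose node count is not > p."""
    root = ET.fromstring(xml_data)
    failures = []
    for index, rack in enumerate(root.findall("rack"), start=1):
        count = len(rack.findall("node"))
        if count <= p:
            failures.append((index, count))
    return failures


SAMPLE_XML = b"""<?xml version="1.0" encoding="utf-8"?>
<servers>
  <rack>
    <node ip="192.168.142.10"/>
    <node ip="192.168.142.11"/>
  </rack>
</servers>
"""

if __name__ == "__main__":
    for p in (1, 2):
        print(p, racks_too_small(SAMPLE_XML, p))
```

With the two-node rack used in this article, p = 1 passes the check and p = 2 is flagged for rack 1, matching the two gcadmin runs shown above.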

6. Check the cluster distribution

[[email protected] gcinstall]$ gcadmin showdistribution

Distribution ID: 1 | State: new | Total segment num: 2

Primary Segment Node IP Segment ID Duplicate Segment node IP

========================================================================================================================

| 192.168.142.10 | 1 | |

------------------------------------------------------------------------------------------------------------------------

| 192.168.142.11 | 2 | |

========================================================================================================================
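
Because this distribution was created with d 0, the duplicate column is empty for every segment. A small Python 3 sketch can list the segments that have no backup from text in this layout. The column meaning is taken from the header line above (primary node IP, segment ID, duplicate node IP); `segments_without_backup` is a hypothetical helper, and other gcadmin versions may print a different layout.

```python
def segments_without_backup(output):
    """Return [(segment_id, primary_ip)] for rows whose duplicate column is empty."""
    missing = []
    for line in output.splitlines():
        line = line.strip()
        if not (line.startswith("|") and line.endswith("|")):
            continue
        cells = [cell.strip() for cell in line[1:-1].split("|")]
        if len(cells) != 3 or not cells[1].isdigit():
            continue  # not a segment row
        primary_ip, segment_id, duplicate_ip = cells
        if not duplicate_ip:
            missing.append((int(segment_id), primary_ip))
    return missing


SAMPLE = """
Distribution ID: 1 | State: new | Total segment num: 2
Primary Segment Node IP Segment ID Duplicate Segment node IP
| 192.168.142.10 | 1 | |
| 192.168.142.11 | 2 | |
"""

if __name__ == "__main__":
    print(segments_without_backup(SAMPLE))
```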

7. Initialize the node data distribution

[[email protected] gcinstall]$ gccli

GBase client 8.6.2.43-R33.132743. Copyright (c) 2004-2022, GBase. All Rights Reserved.

gbase> initnodedatamap;

Query OK, 0 rows affected, 1 warning (Elapsed: 00:00:01.87)

gbase>

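The installation is now complete. From here on you can work with the database normally; the commands are similar to MySQL.

8. Error log

This is a record of an error from an earlier deployment attempt. I have not found the root cause yet; once I do, I will share the fix. My guess is that the machine was short on resources.

That attempt used a virtual machine with 2 GB of memory and 1 core.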

[[email protected] gcinstall]# ./gcinstall.py --silent=demo.options

*********************************************************************************

Thank you for choosing GBase product!

Please read carefully the following licencing agreement before installing GBase product:

TIANJIN GENERAL DATA TECHNOLOGY CO., LTD. LICENSE AGREEMENT

READ THE TERMS OF THIS AGREEMENT AND ANY PROVIDED SUPPLEMENTAL LICENSETERMS (COLLECTIVELY "AGREEMENT") CAREFULLY BEFORE OPENING THE SOFTWAREMEDIA PACKAGE. BY OPENING THE SOFTWARE

MEDIA PACKAGE, YOU AGREE TO THE TERMS OF THIS AGREEMENT. IF YOU ARE ACCESSING THE SOFTWARE ELECTRONICALLY, INDICATE YOUR ACCEPTANCE OF THESE TERMS. IF YOU DO NOT AGREE TO ALL THESE TERMS, PROMPTLY RETURN THE UNUSED SOFTWARE TO YOUR PLACE OF PURCHASE FOR A REFUND.

1. CHINESE GOVERNMENT RESTRICTED. If Software is being acquired by or on behalf of the Chinese Government , then the Government's rights in Software and accompanying documentatio n will be only as set forth in this Agreement. 2. GOVERNING LAW. Any action related to this Agreement will be governed by Chinese law: "COPYRIGHT LAW OF THE PEOPLE'S REPUBLIC OF CHINA","PATENT LAW OF THE PEOPLE'S REPUBLIC OF CHINA","TRADEMARK LAW OF THE PEOPLE'S REPUBLIC OF CHINA","COMPUTER SOFTWARE PROTECTION REGULATIONS OF THE PEOPLE'S REPUBLIC OF CHINA". No choice of law rules of any jurisdiction will apply."

*********************************************************************************

Do you accept the above licence agreement ([Y,y]/[N,n])? y

*********************************************************************************

Welcome to install GBase products

*********************************************************************************

Environmental Checking on gcluster nodes.

CoordinateHost:

192.168.217.66 192.168.217.67

DataHost:

192.168.217.66 192.168.217.67

Are you sure to install GCluster on these nodes ([Y,y]/[N,n])? [Y,y] or [N,n] : y

192.168.217.67 Start install on host 192.168.217.67

192.168.217.66 Start install on host 192.168.217.66

192.168.217.67 mkdir /opt_prepare on host 192.168.217.67.

192.168.217.66 Start install on host 192.168.217.66

192.168.217.67 Copying InstallFuns.py to host 192.168.217.67:/opt_prepare

192.168.217.66 Start install on host 192.168.217.66

192.168.217.67 Copying RestoreLocal.py to host 192.168.217.67:/opt_prepare

192.168.217.66 Start install on host 192.168.217.66

192.168.217.67 Copying pexpect.py to host 192.168.217.67:/opt_prepare

192.168.217.66 Start install on host 192.168.217.66

192.168.217.67 Copying bundle.tar.bz2 to host 192.168.217.67:/opt_prepare

192.168.217.66 Start install on host 192.168.217.66

192.168.217.67 Copying bundle_data.tar.bz2 to host 192.168.217.67:/opt_prepare

192.168.217.66 mkdir /opt_prepare on host 192.168.217.66.

192.168.217.67 Installing gcluster.

192.168.217.66 mkdir /opt_prepare on host 192.168.217.66.

192.168.217.67 Installing gcluster.

192.168.217.66 mkdir /opt_prepare on host 192.168.217.66.

192.168.217.67 Installing gcluster.

192.168.217.66 mkdir /opt_prepare on host 192.168.217.66.

192.168.217.67 Installing gcluster.

192.168.217.66 mkdir /opt_prepare on host 192.168.217.66.

192.168.217.67 Installing gcluster.

192.168.217.66 mkdir /opt_prepare on host 192.168.217.66.

192.168.217.67 Installing gcluster.

192.168.217.66 Copying InstallTar.py to host 192.168.217.66:/opt_prepare

192.168.217.67 Installing gcluster.

192.168.217.66 Copying InstallTar.py to host 192.168.217.66:/opt_prepare

192.168.217.67 Installing gcluster.

192.168.217.66 Copying InstallTar.py to host 192.168.217.66:/opt_prepare

192.168.217.67 Installing gcluster.

192.168.217.66 Copying InstallTar.py to host 192.168.217.66:/opt_prepare

192.168.217.67 Install gcluster on host 192.168.217.67 successfully.

192.168.217.66 Copying InstallTar.py to host 192.168.217.66:/opt_prepare

192.168.217.67 Install gcluster on host 192.168.217.67 successfully.

192.168.217.66 Copying InstallTar.py to host 192.168.217.66:/opt_prepare

192.168.217.67 Install gcluster on host 192.168.217.67 successfully.

192.168.217.66 Copying InstallFuns.py to host 192.168.217.66:/opt_prepare

192.168.217.67 Install gcluster on host 192.168.217.67 successfully.

192.168.217.66 Copying InstallFuns.py to host 192.168.217.66:/opt_prepare

192.168.217.67 Install gcluster on host 192.168.217.67 successfully.

192.168.217.66 Copying InstallFuns.py to host 192.168.217.66:/opt_prepare

192.168.217.67 Install gcluster on host 192.168.217.67 successfully.

192.168.217.66 Copying InstallFuns.py to host 192.168.217.66:/opt_prepare

192.168.217.67 Install gcluster on host 192.168.217.67 successfully.

192.168.217.66 Copying InstallFuns.py to host 192.168.217.66:/opt_prepare

192.168.217.67 Install gcluster on host 192.168.217.67 successfully.

192.168.217.66 Copying rmt.py to host 192.168.217.66:/opt_prepare

192.168.217.67 Install gcluster on host 192.168.217.67 successfully.

192.168.217.66 Copying rmt.py to host 192.168.217.66:/opt_prepare

192.168.217.67 Install gcluster on host 192.168.217.67 successfully.

192.168.217.66 Copying rmt.py to host 192.168.217.66:/opt_prepare

192.168.217.67 Install gcluster on host 192.168.217.67 successfully.

192.168.217.66 Copying rmt.py to host 192.168.217.66:/opt_prepare

192.168.217.67 Install gcluster on host 192.168.217.67 successfully.

192.168.217.66 Copying rmt.py to host 192.168.217.66:/opt_prepare

192.168.217.67 Install gcluster on host 192.168.217.67 successfully.

192.168.217.66 Copying rmt.py to host 192.168.217.66:/opt_prepare

192.168.217.67 Install gcluster on host 192.168.217.67 successfully.

192.168.217.66 Copying SSHThread.py to host 192.168.217.66:/opt_prepare

192.168.217.67 Install gcluster on host 192.168.217.67 successfully.

192.168.217.66 Copying SSHThread.py to host 192.168.217.66:/opt_prepare

192.168.217.67 Install gcluster on host 192.168.217.67 successfully.

192.168.217.66 Copying SSHThread.py to host 192.168.217.66:/opt_prepare

192.168.217.67 Install gcluster on host 192.168.217.67 successfully.

192.168.217.66 Copying SSHThread.py to host 192.168.217.66:/opt_prepare

192.168.217.67 Install gcluster on host 192.168.217.67 successfully.

192.168.217.66 Copying SSHThread.py to host 192.168.217.66:/opt_prepare

192.168.217.67 Install gcluster on host 192.168.217.67 successfully.

192.168.217.66 Copying RestoreLocal.py to host 192.168.217.66:/opt_prepare

192.168.217.67 Install gcluster on host 192.168.217.67 successfully.

192.168.217.66 Copying RestoreLocal.py to host 192.168.217.66:/opt_prepare

192.168.217.67 Install gcluster on host 192.168.217.67 successfully.

192.168.217.66 Copying RestoreLocal.py to host 192.168.217.66:/opt_prepare

192.168.217.67 Install gcluster on host 192.168.217.67 successfully.

192.168.217.66 Copying RestoreLocal.py to host 192.168.217.66:/opt_prepare

192.168.217.67 Install gcluster on host 192.168.217.67 successfully.

192.168.217.66 Copying RestoreLocal.py to host 192.168.217.66:/opt_prepare

192.168.217.67 Install gcluster on host 192.168.217.67 successfully.

192.168.217.66 Copying RestoreLocal.py to host 192.168.217.66:/opt_prepare

192.168.217.67 Install gcluster on host 192.168.217.67 successfully.

192.168.217.66 Copying pexpect.py to host 192.168.217.66:/opt_prepare

192.168.217.67 Install gcluster on host 192.168.217.67 successfully.

192.168.217.66 Copying pexpect.py to host 192.168.217.66:/opt_prepare

192.168.217.67 Install gcluster on host 192.168.217.67 successfully.

192.168.217.66 Copying pexpect.py to host 192.168.217.66:/opt_prepare

192.168.217.67 Install gcluster on host 192.168.217.67 successfully.

192.168.217.66 Copying pexpect.py to host 192.168.217.66:/opt_prepare

192.168.217.67 Install gcluster on host 192.168.217.67 successfully.

192.168.217.66 Copying pexpect.py to host 192.168.217.66:/opt_prepare

192.168.217.67 Install gcluster on host 192.168.217.67 successfully.

192.168.217.66 Copying BUILDINFO to host 192.168.217.66:/opt_prepare

192.168.217.67 Install gcluster on host 192.168.217.67 successfully.

192.168.217.66 Copying BUILDINFO to host 192.168.217.66:/opt_prepare

192.168.217.67 Install gcluster on host 192.168.217.67 successfully.

192.168.217.66 Copying BUILDINFO to host 192.168.217.66:/opt_prepare

192.168.217.67 Install gcluster on host 192.168.217.67 successfully.

192.168.217.66 Copying BUILDINFO to host 192.168.217.66:/opt_prepare

192.168.217.67 Install gcluster on host 192.168.217.67 successfully.

192.168.217.66 Copying BUILDINFO to host 192.168.217.66:/opt_prepare

192.168.217.67 Install gcluster on host 192.168.217.67 successfully.

192.168.217.66 Copying BUILDINFO to host 192.168.217.66:/opt_prepare

192.168.217.67 Install gcluster on host 192.168.217.67 successfully.

192.168.217.66 Copying bundle.tar.bz2 to host 192.168.217.66:/opt_prepare

192.168.217.67 Install gcluster on host 192.168.217.67 successfully.

192.168.217.66 Copying bundle.tar.bz2 to host 192.168.217.66:/opt_prepare

192.168.217.67 Install gcluster on host 192.168.217.67 successfully.

192.168.217.66 Copying bundle.tar.bz2 to host 192.168.217.66:/opt_prepare

192.168.217.67 Install gcluster on host 192.168.217.67 successfully.

192.168.217.66 Copying bundle.tar.bz2 to host 192.168.217.66:/opt_prepare

192.168.217.67 Install gcluster on host 192.168.217.67 successfully.

192.168.217.66 Copying bundle.tar.bz2 to host 192.168.217.66:/opt_prepare

192.168.217.67 Install gcluster on host 192.168.217.67 successfully.

192.168.217.66 Copying bundle.tar.bz2 to host 192.168.217.66:/opt_prepare

192.168.217.67 Install gcluster on host 192.168.217.67 successfully.

192.168.217.66 Copying bundle_data.tar.bz2 to host 192.168.217.66:/opt_prepare

192.168.217.67 Install gcluster on host 192.168.217.67 successfully.

192.168.217.66 Copying bundle_data.tar.bz2 to host 192.168.217.66:/opt_prepare

192.168.217.67 Install gcluster on host 192.168.217.67 successfully.

192.168.217.66 Copying bundle_data.tar.bz2 to host 192.168.217.66:/opt_prepare

192.168.217.67 Install gcluster on host 192.168.217.67 successfully.

192.168.217.66 Copying bundle_data.tar.bz2 to host 192.168.217.66:/opt_prepare

192.168.217.67 Install gcluster on host 192.168.217.67 successfully.

192.168.217.66 Copying bundle_data.tar.bz2 to host 192.168.217.66:/opt_prepare

192.168.217.67 Install gcluster on host 192.168.217.67 successfully.

192.168.217.66 Installing gcluster.

192.168.217.67 Install gcluster on host 192.168.217.67 successfully.

192.168.217.66 Installing gcluster.

192.168.217.67 Install gcluster on host 192.168.217.67 successfully.

192.168.217.66 Installing gcluster.

192.168.217.67 Install gcluster on host 192.168.217.67 successfully.

192.168.217.66 Installing gcluster.

192.168.217.67 Install gcluster on host 192.168.217.67 successfully.

192.168.217.66 Installing gcluster.

192.168.217.67 Install gcluster on host 192.168.217.67 successfully.

192.168.217.66 Installing gcluster.

192.168.217.67 Install gcluster on host 192.168.217.67 successfully.

192.168.217.66 Installing gcluster.

192.168.217.67 Install gcluster on host 192.168.217.67 successfully.

192.168.217.66 Installing gcluster.

192.168.217.67 Install gcluster on host 192.168.217.67 successfully.

192.168.217.66 Installing gcluster.

192.168.217.67 Install gcluster on host 192.168.217.67 successfully.

192.168.217.66 Install gcluster on host 192.168.217.66 successfully.

192.168.217.67 Install gcluster on host 192.168.217.67 successfully.

192.168.217.66 Install gcluster on host 192.168.217.66 successfully.

Starting all gcluster nodes...

Adding new datanodes to gcware...

Fail to add new datanodes to gcware.

The run ended with an error. The errors recorded in the installation log gcinstall.log did not offer many clues:

2022-08-02 09:45:13,652-root-DEBUG Starting all gcluster nodes...

2022-08-02 09:45:27,111-root-INFO start service successfull on host 192.168.217.67.

2022-08-02 09:45:37,324-root-INFO start service successfull on host 192.168.217.66.

2022-08-02 09:45:37,827-root-DEBUG /bin/chown -R gbase:gbase gcChangeInfo.xml

2022-08-02 09:45:37,830-root-DEBUG Adding new datanodes to gcware...

2022-08-02 09:45:37,830-root-DEBUG /bin/chown -R gbase:gbase dataHosts.xml

2022-08-02 09:45:42,838-root-DEBUG gcadmin addNodes dataHosts.xml

2022-08-02 09:45:42,851-root-ERROR gcadmin add nodes ...

NOTE: node [192.168.217.66] is coordinator node, it shall be data node too

NOTE: node [192.168.217.67] is coordinator node, it shall be data node too

gcadmin add nodes failed

2022-08-02 09:45:42,851-root-ERROR gcadmin addnodes error: [40]->[GC_AIS_ERR_CLUSTER_LOCKED]

2022-08-02 09:45:47,857-root-DEBUG gcadmin addNodes dataHosts.xml

2022-08-02 09:45:47,864-root-ERROR gcadmin add nodes ...

NOTE: node [192.168.217.66] is coordinator node, it shall be data node too

NOTE: node [192.168.217.67] is coordinator node, it shall be data node too

gcadmin add nodes failed

2022-08-02 09:45:47,864-root-ERROR gcadmin addnodes error: [40]->[GC_AIS_ERR_CLUSTER_LOCKED]

2022-08-02 09:45:52,870-root-DEBUG gcadmin addNodes dataHosts.xml

2022-08-02 09:45:52,882-root-ERROR gcadmin add nodes ...
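
The same error repeats on every retry, so a quick tally makes the log easier to read. The Python 3 sketch below counts the `[code]->[NAME]` pairs that appear on ERROR lines. The line format is taken from the excerpt above; `count_error_codes` is a hypothetical helper, and it says nothing about what GC_AIS_ERR_CLUSTER_LOCKED means internally.

```python
import re
from collections import Counter

# Matches e.g. "...-root-ERROR gcadmin addnodes error: [40]->[GC_AIS_ERR_CLUSTER_LOCKED]"
ERROR_CODE = re.compile(r"-ERROR .*\[(\d+)\]->\[(\w+)\]")


def count_error_codes(log_text):
    """Count (code, name) pairs found on ERROR lines of the log text."""
    counts = Counter()
    for line in log_text.splitlines():
        match = ERROR_CODE.search(line)
        if match:
            counts[(int(match.group(1)), match.group(2))] += 1
    return counts


SAMPLE = """\
2022-08-02 09:45:42,838-root-DEBUG gcadmin addNodes dataHosts.xml
2022-08-02 09:45:42,851-root-ERROR gcadmin add nodes ...
2022-08-02 09:45:42,851-root-ERROR gcadmin addnodes error: [40]->[GC_AIS_ERR_CLUSTER_LOCKED]
2022-08-02 09:45:47,864-root-ERROR gcadmin addnodes error: [40]->[GC_AIS_ERR_CLUSTER_LOCKED]
"""

if __name__ == "__main__":
    for (code, name), hits in count_error_codes(SAMPLE).items():
        print(code, name, hits)
```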

Stop the database service on every node.

[[email protected] ~]# service gcware stop

Stopping GCMonit success!

Signaling GCRECOVER (gcrecover) to terminate: [ 确定 ]

Waiting for gcrecover services to unload:... [ 确定 ]

Signaling GCSYNC (gc_sync_server) to terminate: [ 确定 ]

Waiting for gc_sync_server services to unload: [ 确定 ]

Signaling GCLUSTERD to terminate: [ 确定 ]

Waiting for gclusterd services to unload:..................[ 确定 ]..................................

Signaling GCWARE (gcware) to terminate: [ 确定 ]

Waiting for gcware services to unload:.. [ 确定 ]

[[email protected] ~]# ps -ef|grep gbase

root 3743 3618 0 10:00 pts/1 00:00:00 su - gbase

gbase 3744 3743 0 10:00 pts/1 00:00:00 -bash

root 4216 3254 0 10:01 pts/0 00:00:00 grep --color=auto gbase

Run the uninstall script:

[[email protected] gcinstall]# ./unInstall.py --silent=demo.options

Can not get gcluster node info:'NoneType' object has no attribute '__getitem__'

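Looking at the source of unInstall.py, the error is raised at one of two places, line 341 or line 448. It appears to come from the functions that detect whether coordinator and data nodes already exist. I will not dig into it here and will come back to it when I have time.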

    try:
        existCoordinateHost = GetExistCoordinateHost()
        existDataHost = GetExistDataHost(prefix,dbaUser)
    except Exception, err:
        raise Exception("Can not get gcluster node info:%s" % str(err))

InstallFuns.py contains the definitions of GetExistCoordinateHost and GetExistDataHost.

    def GetExistCoordinateHost():
        coordinateHost = []
        coroConf = '/etc/corosync/corosync.conf'
        if(not path.exists('/var/lib/gcware/SCN')):
            return coordinateHost
        [rv, out, err] = ExecCMD("cat %s | grep memberaddr" % coroConf)
        if(not rv):
            lines = out.split('\n')
            for l in lines:
                if(len(l.strip()) > 0):
                    ip = l.split("memberaddr:")[1].strip()
                    if(len(ip) > 0):
                        coordinateHost.append(ip)
        else:
            raise Exception(err)
        coordinateHost = Distinct(coordinateHost)
        return coordinateHost

    def GetExistDataHost(prefix,dbaUser):
        dataNodes = []
        if IsFederalCluster(dbaUser, prefix) != "GBase8a_85":
            dataServerFile = '/var/lib/gcware/DATASERVER'
            dataServerFileBak = '/var/lib/gcware/DATASERVER.bak'
            JA=None
            JB=None
            if os.path.exists(dataServerFile):
                FA = file(dataServerFile)
                JA = json.load(FA)
                FA.close()
            if os.path.exists(dataServerFileBak):
                FB = file(dataServerFileBak)
                JB = json.load(FB)
                FB.close()
            JSON = JA
            if int(JB['epoch']) > int(JA['epoch']):
                JSON = JB
            nodes = JSON['nodes']
            for node in nodes:
                dataNodes.append(node['ipaddr'])
        else:
            dataServerFile = '/var/lib/gcware/CIB.xml'
            logger.debug("Get exist data hosts from %s." % dataServerFile)
            if not os.path.exists(dataServerFile):
                return dataNodes
            f = file(dataServerFile)
            s = f.read()
            f.close()
            root = ElementTree.fromstring(s)
            for el in root.find('nodes').findall('node'):
                ip = el.get('ipaddr')
                dataNodes.append(ip)
        return IPConvToComp(dataNodes)
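
Reading only the code quoted above, one plausible source of the `'NoneType' object has no attribute '__getitem__'` message is the epoch comparison in GetExistDataHost. JA and JB start as None and are assigned only when DATASERVER and DATASERVER.bak exist, yet both are indexed unconditionally, and in Python 2 indexing None raises exactly this TypeError. Since the installation failed while adding data nodes to gcware, one or both files may never have been written, but this has not been confirmed on the failed machines. The following Python 3 sketch shows a None-tolerant version of the selection logic; `load_json_if_exists` and `pick_newest_dataserver` are hypothetical names, not part of the installer.

```python
import json
import os
import tempfile


def load_json_if_exists(path):
    """Return the parsed JSON document, or None when the file is absent."""
    if not os.path.exists(path):
        return None
    with open(path) as handle:
        return json.load(handle)


def pick_newest_dataserver(primary_path, backup_path):
    """Return data-node IPs from the file with the larger epoch.

    Returns [] when neither file exists. On equal epochs the primary
    file wins, as in the quoted code.
    """
    documents = [load_json_if_exists(primary_path), load_json_if_exists(backup_path)]
    candidates = [doc for doc in documents if doc is not None]
    if not candidates:
        return []
    newest = max(candidates, key=lambda doc: int(doc["epoch"]))
    return [node["ipaddr"] for node in newest["nodes"]]


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        primary = os.path.join(tmp, "DATASERVER")
        backup = os.path.join(tmp, "DATASERVER.bak")
        # Neither file exists yet: no exception, just an empty list.
        print(pick_newest_dataserver(primary, backup))
        with open(backup, "w") as handle:
            json.dump({"epoch": "3", "nodes": [{"ipaddr": "192.168.217.66"}]}, handle)
        print(pick_newest_dataserver(primary, backup))
```

The JSON field names `epoch`, `nodes`, and `ipaddr` come from the quoted code; the file contents in the demo are made up for illustration.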

Because the uninstall script failed, clean up manually. This has to be done on every node.

rm -fr /opt/gcluster

rm -fr /opt/gnode

rm -fr /opt/gcware

userdel -r gbase

rm -fr /etc/corosync/corosync.conf

rm -fr /var/lib/gcware

rm -fr /etc/init.d/gbased

rm -fr /etc/init.d/gcrecover

rm -fr /etc/init.d/gclusterd

rm -fr /etc/init.d/gcsync

rm -fr /etc/init.d/gcware
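
Since these commands delete directories recursively, it may be worth previewing what actually exists on a node before removing anything. The Python 3 sketch below walks the same path list with a dry-run default. `clean_paths` is a hypothetical helper; it does not run `userdel -r gbase`, which still has to be done separately, and real deletion requires root privileges.

```python
import os
import shutil
import tempfile

# Paths taken from the manual cleanup commands above.
GBASE_PATHS = [
    "/opt/gcluster", "/opt/gnode", "/opt/gcware",
    "/etc/corosync/corosync.conf", "/var/lib/gcware",
    "/etc/init.d/gbased", "/etc/init.d/gcrecover",
    "/etc/init.d/gclusterd", "/etc/init.d/gcsync", "/etc/init.d/gcware",
]


def clean_paths(paths, dry_run=True):
    """Return the paths that exist; delete them only when dry_run is False."""
    found = []
    for path in paths:
        if not os.path.lexists(path):
            continue
        found.append(path)
        if dry_run:
            continue
        if os.path.isdir(path) and not os.path.islink(path):
            shutil.rmtree(path)
        else:
            os.remove(path)
    return found


if __name__ == "__main__":
    # Demonstrate on a throwaway directory instead of the real paths.
    with tempfile.TemporaryDirectory() as tmp:
        target = os.path.join(tmp, "gcluster")
        os.mkdir(target)
        print(clean_paths([target]))                 # dry run: reported only
        print(clean_paths([target], dry_run=False))  # actually removed
        print(os.path.exists(target))
```

Calling `clean_paths(GBASE_PATHS)` on a node only lists what is present; passing `dry_run=False` performs the removal.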

边栏推荐

- 【JZOF】77按之字形打印二叉树

- String类常用方法

- Gumbel distribution of discrete choice model

- CMake使用记录

- 2022-08-09 mysql/stonedb-子查询性能提升-概论

- Gold Warehouse Database KingbaseGIS User Manual (6.2. Management Functions)

- 联盟链技术应用的难点

- 70. Stair Climbing Advanced Edition

- 2022-08-09 mysql/stonedb-subquery performance improvement-introduction

- 领跑政务云,连续五年中国第一

猜你喜欢

了解什么是架构基本概念和架构本质

【SSL集训DAY2】有趣的数【数位DP】

![[Cloud Native] This article explains how to add Tencent Crane to Kubevela addon](/img/42/384caec048e02f01461292afc931be.jpg)

[Cloud Native] This article explains how to add Tencent Crane to Kubevela addon

经济衰退即将来临前CIO控制成本的七种方法

【集训DAY3】中位数

YGG 经理人杯总决赛已圆满结束,来看看这份文字版总结!

6款跨境电商常用工具汇总

How to match garbled characters regularly?

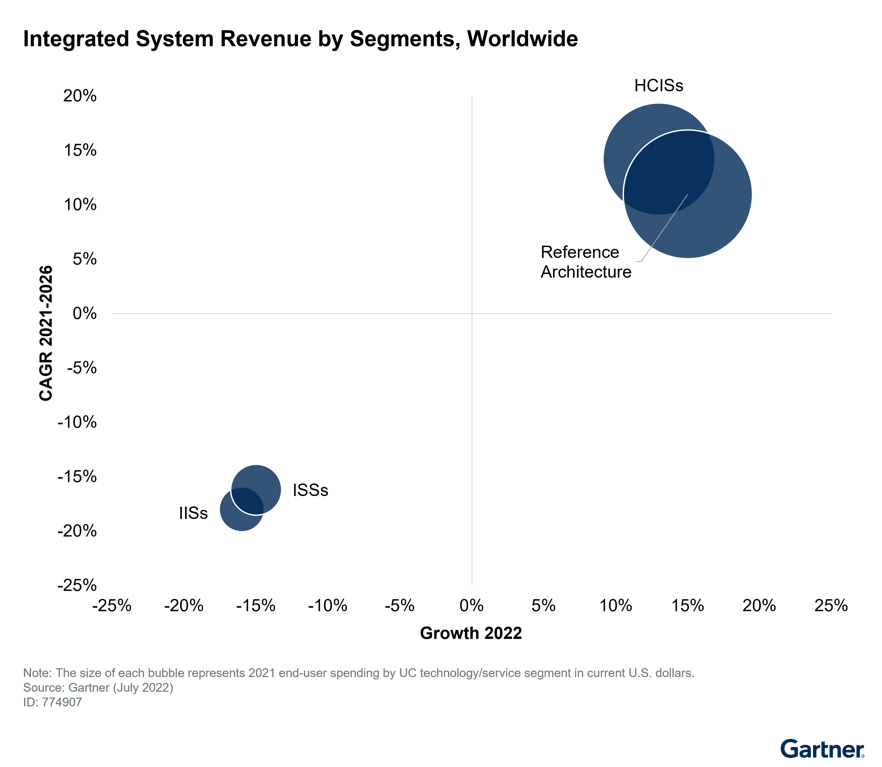

Gartner's global integrated system market data tracking, hyperconverged market growth rate is the first

中国SaaS企业排名,龙头企业Top10梳理

随机推荐

生成NC文件时,报错“未定义机床”

金仓数据库 KingbaseGIS 使用手册(6.3. 几何对象创建函数)

力扣:279.完全平方数

JS基础笔记-关于对象

34. Fabric2.2 证书目录里各文件作用

MVC与MVVM模式的区别

ALV报表总结2022.8.9

【诗歌】爱你就像爱生命

力扣:518. 零钱兑换 II

【云原生】一文讲透Kubevela addon如何添加腾讯Crane

【SSL集训DAY3】控制棋盘【二分图匹配】

直播间搭建,按钮左滑出现删除等操作按钮

领跑政务云,连续五年中国第一

上海一科技公司刷单被罚22万,揭露网络刷单灰色产业链

【JZOF】77 Print binary tree in zigzag

【集训DAY5】快速排序【模拟】【数学】

linux上使用docker安装redis

后台管理实现导入导出

Qt 之 QDateEdit 和 QTimeEdit

SRv6 performance measurement