
3.2 - classification - Logistic regression

2022-08-11 07:51:00 【A big boa constrictor 6666】


1. Function Set

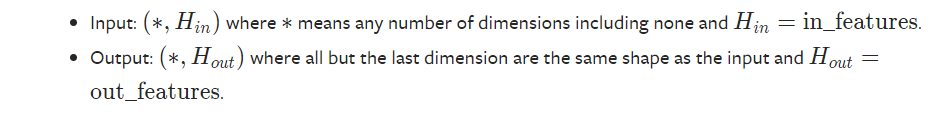

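- Posterior probability: P(x) is the posterior probability that the input x belongs to class 1. The figure illustrates how it is computed: a weighted sum of the inputs plus a bias is passed through the sigmoid function. The whole process is known as logistic regression.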
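As a minimal sketch of this function set, the posterior can be computed as below. The parameter values `w` and `b` and the input are hypothetical, chosen only for illustration.

```python
import math

def sigmoid(z: float) -> float:
    """Logistic function: maps any real z into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def posterior(x, w, b):
    """P(class 1 | x) for a logistic regression model with weights w and bias b."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return sigmoid(z)

# Hypothetical parameters and input, for illustration only.
w = [2.0, -1.0]
b = 0.5
p = posterior([1.0, 3.0], w, b)   # z = 2 - 3 + 0.5 = -0.5
label = "class 1" if p >= 0.5 else "class 2"
```

Each choice of `w` and `b` gives a different function, and the set of all such functions is the function set.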

2. Goodness of a Function

- The best w* and b* are the w and b that maximize the probability of generating the training set. The figure on the right shows a simplified form obtained by an equivalent transformation of the formula.

- The terms underlined in blue are the cross entropy between two Bernoulli distributions. Cross entropy measures how close two distributions p and q are. If p and q are identical, the cross entropy is at its minimum, and because the label distribution here puts all its probability on one class, that minimum is 0.

- For logistic regression, the loss function used to measure the quality of the model is the sum of the cross entropies over the training set. The smaller this value, the better the model fits the training set.
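The loss described above can be sketched as follows. The labels and model outputs are made-up numbers, and the `eps` clamp is an added safeguard against `log(0)` that is not part of the original formula.

```python
import math

def cross_entropy(y_hat: float, p: float, eps: float = 1e-12) -> float:
    """Cross entropy between Bernoulli(y_hat), the label, and Bernoulli(p), the model output."""
    p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
    return -(y_hat * math.log(p) + (1.0 - y_hat) * math.log(1.0 - p))

def total_loss(labels, probs):
    """Sum of the per-example cross entropies over the training set."""
    return sum(cross_entropy(y, p) for y, p in zip(labels, probs))

labels = [1, 1, 0]             # 1 = class 1, 0 = class 2 (made-up data)
good_probs = [0.9, 0.8, 0.1]   # confident and correct
bad_probs = [0.4, 0.3, 0.7]    # mostly wrong

print(total_loss(labels, good_probs), total_loss(labels, bad_probs))
```

The model whose outputs sit closer to the labels gets the smaller loss.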

3. Find the Best Function

- Simplifying the derivative of the loss function gives the result shown in green on the right. The larger the gap between the model's output and the target value, the larger the parameter update.

- The figure on the left shows that logistic regression and linear regression update their parameters in the same way. You only need to adjust the learning rate η.
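A minimal sketch of this update rule, assuming batch gradient descent on the cross-entropy loss. The data, the learning rate, and the step count are made up for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def gradient_step(w, b, xs, ys, eta):
    """One batch gradient-descent step on the cross-entropy loss.

    The gradient with respect to w_i is sum_n -(y_n - f(x_n)) * x_n[i],
    so each update is proportional to the error (y_n - f(x_n)).
    """
    grad_w = [0.0] * len(w)
    grad_b = 0.0
    for x, y in zip(xs, ys):
        f = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
        err = y - f
        for i, xi in enumerate(x):
            grad_w[i] -= err * xi
        grad_b -= err
    new_w = [wi - eta * g for wi, g in zip(w, grad_w)]
    new_b = b - eta * grad_b
    return new_w, new_b

# Made-up, linearly separable 1-D data: class 1 (label 1) has the larger x.
xs = [[0.0], [1.0], [3.0], [4.0]]
ys = [0, 0, 1, 1]
w, b = [0.0], 0.0
for _ in range(2000):
    w, b = gradient_step(w, b, xs, ys, eta=0.1)
preds = [1 if sigmoid(w[0] * x[0] + b) >= 0.5 else 0 for x in xs]
```

Replacing `sigmoid` with the identity function would turn the same loop into the linear regression update.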

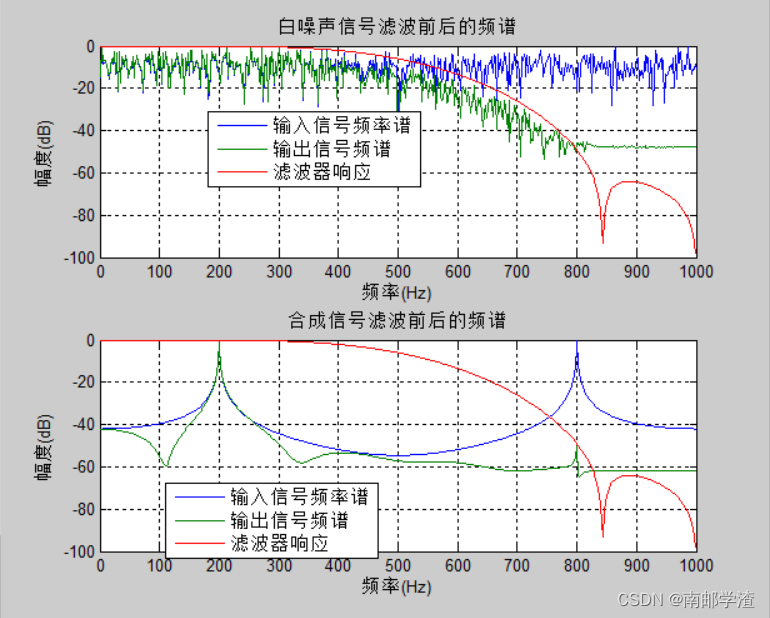

- The figure below clearly shows the drawback of using square error as the loss function for logistic regression: even far from the optimal solution, the gradient is still very small, which makes gradient descent slow to make progress.

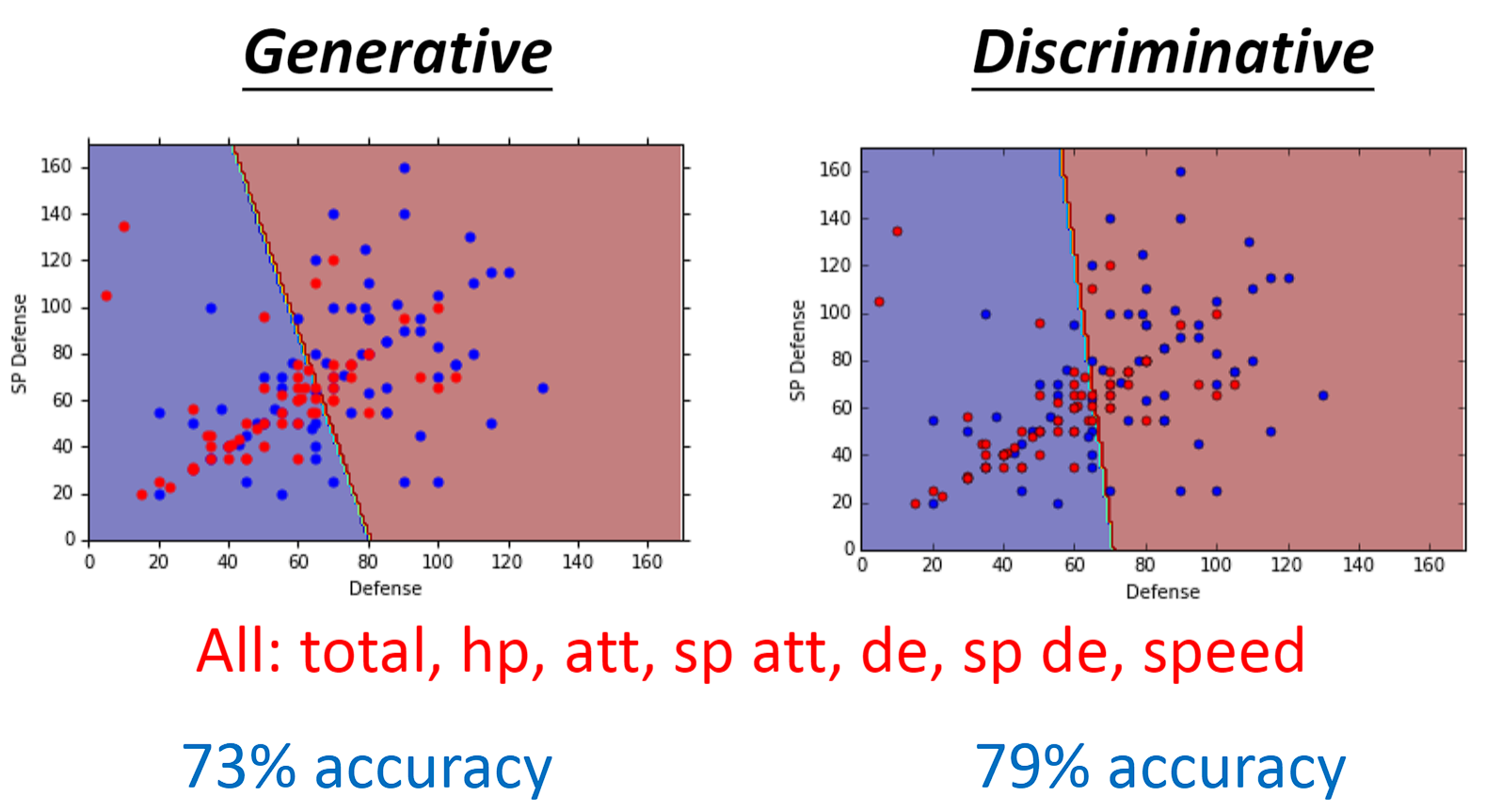
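The effect can be checked numerically with a small sketch. It compares the gradient with respect to the pre-sigmoid value z for the two losses, for a single example with target 1. The z values are arbitrary.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def grad_square_error(z, y):
    """d/dz of (f - y)^2 with f = sigmoid(z)."""
    f = sigmoid(z)
    return 2.0 * (f - y) * f * (1.0 - f)

def grad_cross_entropy(z, y):
    """d/dz of the cross entropy -[y ln f + (1 - y) ln(1 - f)]."""
    return sigmoid(z) - y

y = 1.0
for z in (-10.0, -2.0, 0.0, 2.0, 10.0):
    print(f"z={z:6.1f}  square error grad={grad_square_error(z, y):+.6f}  "
          f"cross entropy grad={grad_cross_entropy(z, y):+.6f}")
```

At z = -10 the output is far from the target, yet the square error gradient is close to zero because of the factor f(1 - f), while the cross entropy gradient is close to -1.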

- Logistic regression is a discriminative method, whereas the probabilistic generative model from the previous chapter is a generative method.

- Both methods look for the best model in the same function set. However, logistic regression finds w and b directly by gradient descent, while the probabilistic generative model first estimates μ1, μ2, and Σ and then derives w and b from them. Because the procedures differ, the resulting models can differ as well.

- In the Pokémon example, logistic regression performed better than the probabilistic generative model.
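The generative route can be sketched as below, assuming Gaussian class distributions with a shared covariance, for which w = Σ⁻¹(μ1 − μ2) and b = −½ μ1ᵀΣ⁻¹μ1 + ½ μ2ᵀΣ⁻¹μ2 + ln(N1/N2). The 2-D data points and all helper names are made up for illustration.

```python
import math

def mean(points):
    n = len(points)
    return [sum(p[0] for p in points) / n, sum(p[1] for p in points) / n]

def covariance(points, mu):
    """Maximum-likelihood 2x2 covariance of the points around mu."""
    n = len(points)
    c = [[0.0, 0.0], [0.0, 0.0]]
    for p in points:
        d = [p[0] - mu[0], p[1] - mu[1]]
        for i in range(2):
            for j in range(2):
                c[i][j] += d[i] * d[j] / n
    return c

def inverse_2x2(m):
    det = m[0][0] * m[1][1] - m[0][1] * m[1][0]
    return [[m[1][1] / det, -m[0][1] / det], [-m[1][0] / det, m[0][0] / det]]

def matvec(m, v):
    return [m[0][0] * v[0] + m[0][1] * v[1], m[1][0] * v[0] + m[1][1] * v[1]]

def dot(a, b):
    return a[0] * b[0] + a[1] * b[1]

def generative_w_b(class1, class2):
    """Estimate mu1, mu2 and a shared Sigma, then derive w and b from them."""
    n1, n2 = len(class1), len(class2)
    mu1, mu2 = mean(class1), mean(class2)
    c1, c2 = covariance(class1, mu1), covariance(class2, mu2)
    # Shared covariance: average of the class covariances weighted by class size.
    shared = [[(n1 * c1[i][j] + n2 * c2[i][j]) / (n1 + n2) for j in range(2)]
              for i in range(2)]
    inv = inverse_2x2(shared)
    w = matvec(inv, [mu1[0] - mu2[0], mu1[1] - mu2[1]])
    b = (-0.5 * dot(mu1, matvec(inv, mu1))
         + 0.5 * dot(mu2, matvec(inv, mu2))
         + math.log(n1 / n2))
    return w, b

# Made-up 2-D data for the two classes.
class1 = [[2.0, 2.5], [3.0, 3.0], [2.5, 3.5], [3.5, 2.0]]
class2 = [[0.0, 0.5], [1.0, 0.0], [0.5, 1.0], [-0.5, 0.0], [0.0, -0.5]]
w, b = generative_w_b(class1, class2)
```

No gradient descent appears here: w and b are fixed entirely by the estimated means and covariance, which is why they generally differ from the values logistic regression would find on the same data.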

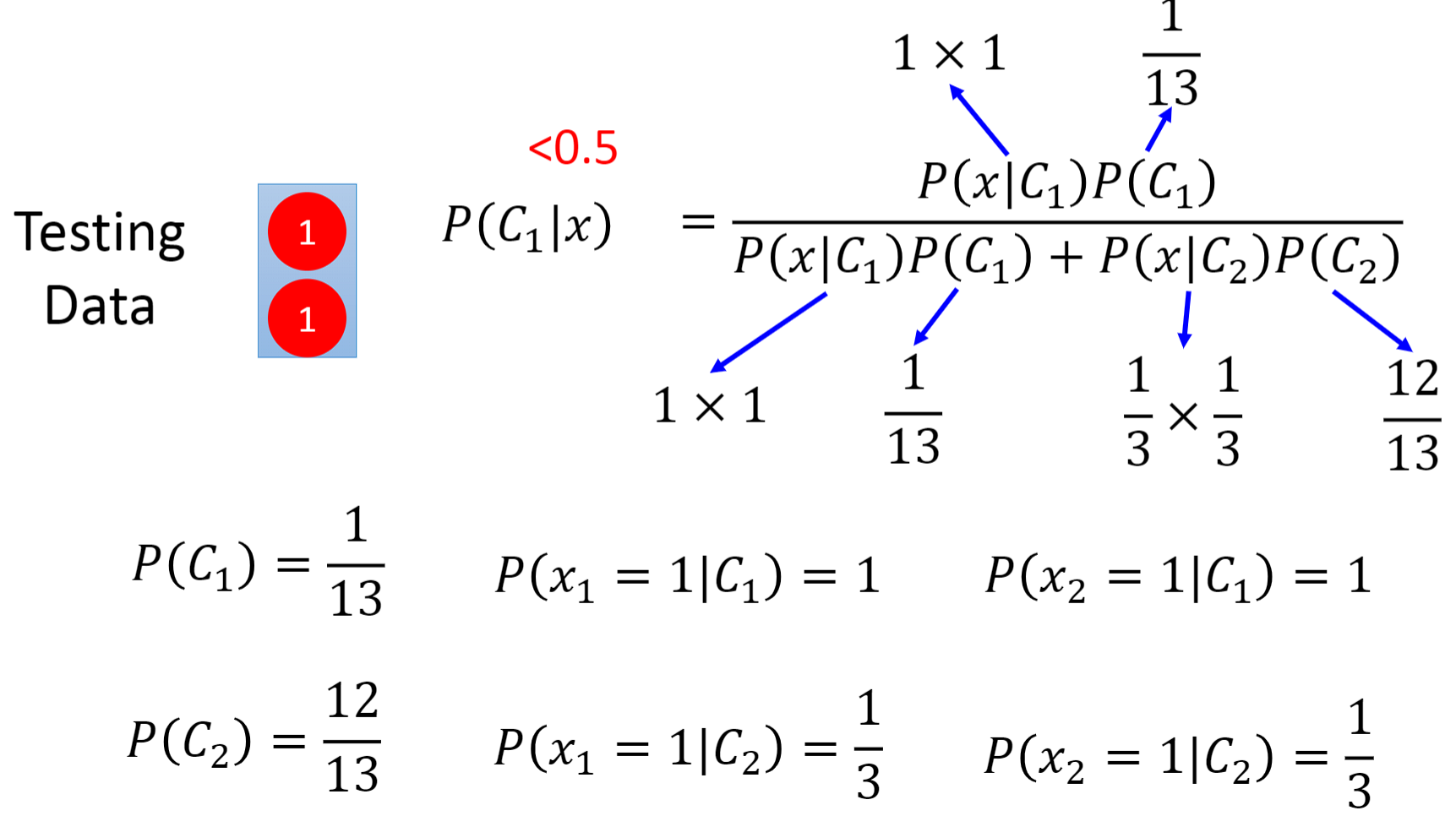

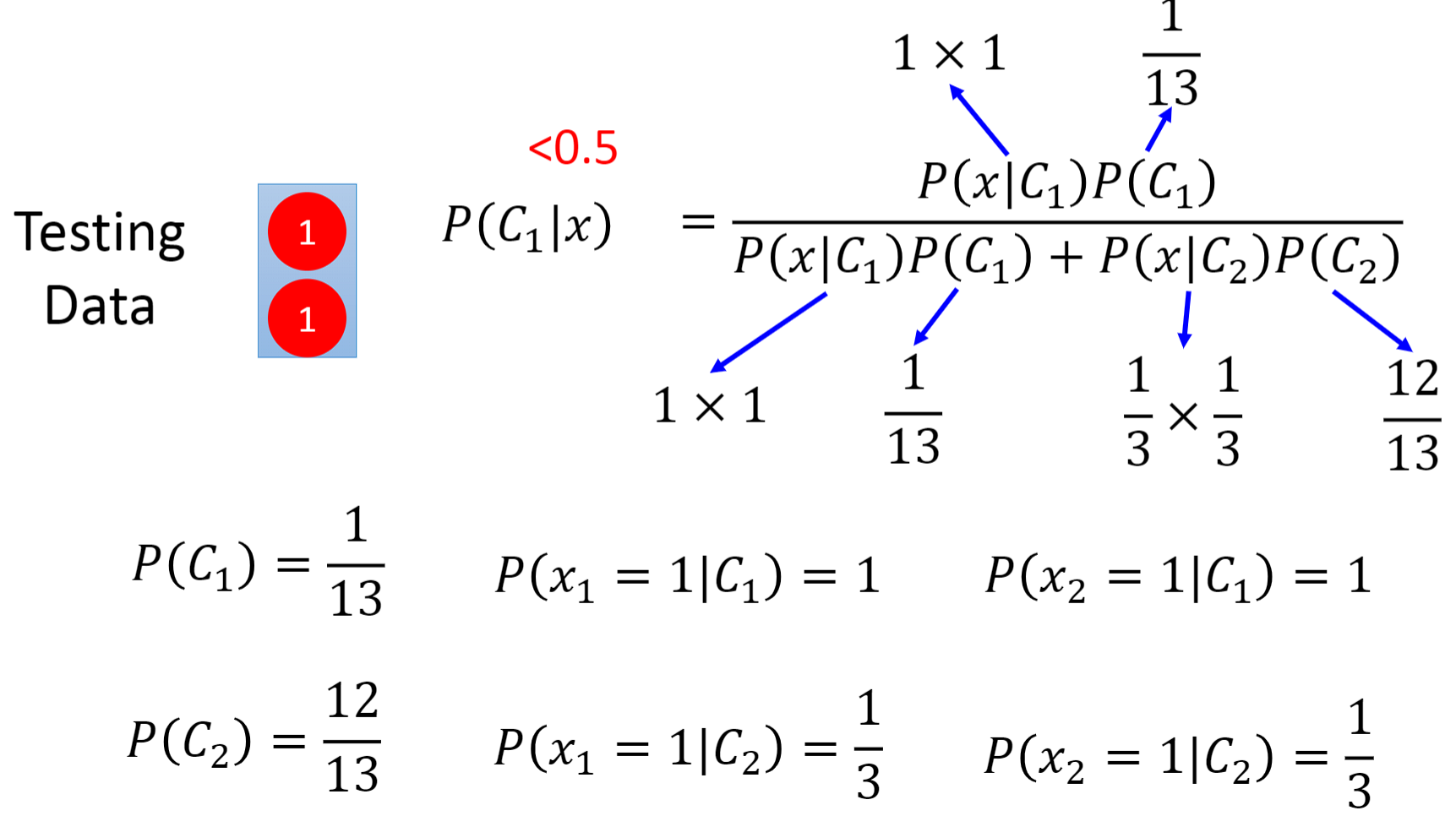

- In the example below, intuition says the test point belongs to class 1. However, the naive Bayes classifier, a probabilistic generative model, concludes that the test point came from class 2. Why?

- The reason is that the naive Bayes classifier never considers correlation between dimensions. It treats the two features of each data point as independent of each other. Probabilistic generative models always make some assumptions, for example that the data comes from a particular probability distribution, so in a sense the model imagines structure that the training data does not show.
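The calculation can be reproduced with a small sketch. The dataset below is an assumption made for illustration (one class 1 example and twelve class 2 examples with two binary features), since the exact points appear only in the figure.

```python
from fractions import Fraction

# Assumed toy data: two binary features per example.
class1 = [(1, 1)]                                     # 1 example
class2 = [(1, 0)] * 4 + [(0, 1)] * 4 + [(0, 0)] * 4   # 12 examples

def feature_prob(data, dim, value):
    """P(x[dim] = value | class), estimated by counting."""
    return Fraction(sum(1 for x in data if x[dim] == value), len(data))

def naive_bayes_posterior_c1(x):
    n1, n2 = len(class1), len(class2)
    prior1, prior2 = Fraction(n1, n1 + n2), Fraction(n2, n1 + n2)
    # Naive Bayes: multiply per-dimension probabilities as if they were independent.
    lik1 = feature_prob(class1, 0, x[0]) * feature_prob(class1, 1, x[1])
    lik2 = feature_prob(class2, 0, x[0]) * feature_prob(class2, 1, x[1])
    return prior1 * lik1 / (prior1 * lik1 + prior2 * lik2)

p = naive_bayes_posterior_c1((1, 1))
print(p)  # 3/7
```

Under this assumed data, class 2 never contains the point (1, 1), yet the independence assumption gives it probability 1/3 × 1/3 under class 2. Combined with the much larger prior of class 2, the posterior for class 1 falls below 0.5.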

4. Advantages of Probabilistic Generative Models

- Logistic regression is more affected by the data because it makes no assumptions about the data distribution, so its error decreases as the amount of data grows.

- Probabilistic generative models are less affected by the data because they carry their own assumptions. Sometimes the model ignores the data and follows its assumptions instead. When the amount of data is small, a probabilistic generative model can therefore outperform logistic regression.

- When the dataset is noisy, for example when some labels are wrong, a probabilistic generative model is less affected by the data, so its assumptions may filter out these bad examples.

- Take speech recognition as an example. It uses a neural network, which is a discriminative method like logistic regression. In fact, however, the whole system is a probabilistic generative model, and the DNN is just one piece of it.

5. Multi-class Classification

- Softmax means strengthening the maximum: the exponential operation applied in the middle amplifies the gaps between the outputs before they are normalized.
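A minimal softmax sketch is shown below. The three input scores are example values, and subtracting the maximum is a standard numerical-stability step that does not change the result.

```python
import math

def softmax(zs):
    """Exponentiate the scores, then normalize so the outputs sum to 1."""
    m = max(zs)                        # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in zs]
    total = sum(exps)
    return [e / total for e in exps]

z = [3.0, 1.0, -3.0]   # example scores for three classes
y = softmax(z)         # roughly [0.88, 0.12, 0.00]
```

The scores 3 and 1 differ by 2, but after the exponential the first output is more than seven times the second, which is the amplification described above.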

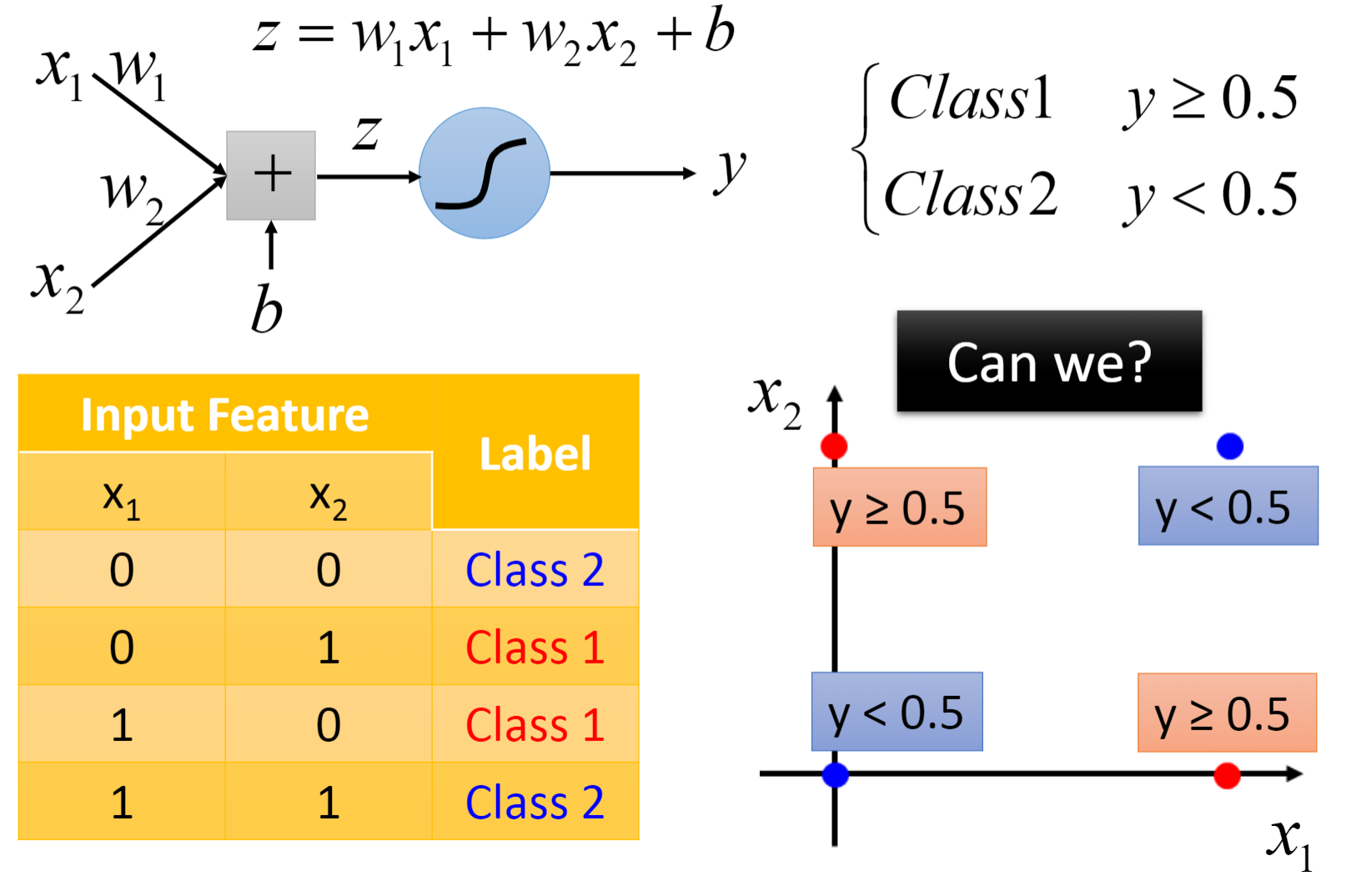

Limitation of Logistic Regression:

The figure above shows a problem that logistic regression cannot solve, because its decision boundary is a straight line. To handle it, we need feature transformation.

- Feature transformation: so that the machine can find the transformation rules on its own, we can cascade several logistic regression units. The figure on the right shows the two stages, feature transformation followed by classification.

- Each box in the middle of the last figure is called a neuron, and the whole network is called a neural network. This approach is also known as deep learning.
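The cascading idea can be sketched as below, assuming a four-point XOR-style problem in which no single line separates the classes. The weights are picked by hand for illustration; in practice they would be learned.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def logistic(x, w, b):
    """A single logistic regression unit (one neuron)."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def cascade(x):
    # Feature transformation: two logistic regressions produce new features.
    h1 = logistic(x, [10.0, -10.0], -5.0)   # close to 1 only for (1, 0)
    h2 = logistic(x, [-10.0, 10.0], -5.0)   # close to 1 only for (0, 1)
    # Classification: a third logistic regression on the transformed features.
    return logistic([h1, h2], [10.0, 10.0], -5.0)

points = [(0, 0), (0, 1), (1, 0), (1, 1)]
labels = [0, 1, 1, 0]     # 1 = class 1, 0 = class 2 (assumed XOR-style labels)
preds = [1 if cascade(p) >= 0.5 else 0 for p in points]
```

In the transformed space (h1, h2), both class 2 points land near (0, 0) and the class 1 points land near (1, 0) and (0, 1), so a single line separates them.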

边栏推荐

- 如何选择专业、安全、高性能的远程控制软件

- 接口测试的基础流程和用例设计方法你知道吗?

- Implementation of FIR filter based on FPGA (5) - FPGA code implementation of parallel structure FIR filter

- 为什么C#中对MySQL不支持中文查询

- 详述MIMIC 的ICU患者检测时间信息表(十六)

- 1081 检查密码 (15 分)

- 关于#sql#的问题:怎么将下面的数据按逗号分隔成多行,以列的形式展示出来

- Test cases are hard?Just have a hand

- 易观分析联合中小银行联盟发布海南数字经济指数,敬请期待!

- 【软件测试】(北京)字节跳动科技有限公司终面HR面试题

猜你喜欢

1091 N-Defensive Number (15 points)

囍楽云任务源码

【Pytorch】nn.Linear,nn.Conv

1071 小赌怡情 (15 分)

prometheus学习4Grafana监控mysql&blackbox了解

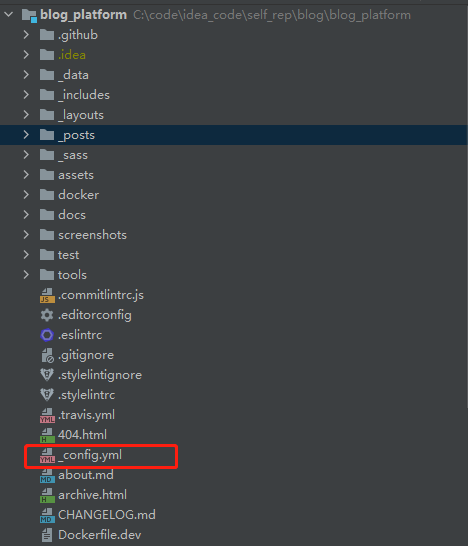

无服务器+域名也能搭建个人博客?真的,而且很快

基于FPGA的FIR滤波器的实现(4)— 串行结构FIR滤波器的FPGA代码实现

1096 big beautiful numbers (15 points)

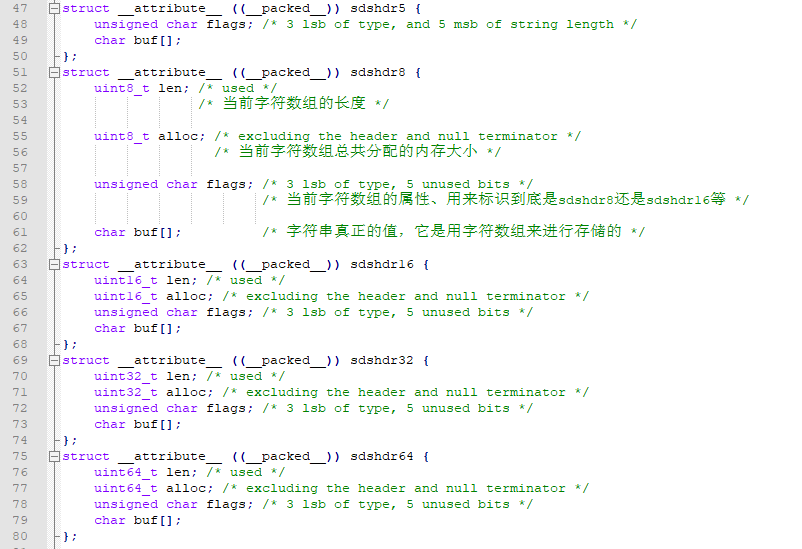

Redis源码:Redis源码怎么查看、Redis源码查看顺序、Redis外部数据结构到Redis内部数据结构查看源码顺序

3.2-分类-Logistic回归

随机推荐

6月各手机银行活跃用户较快增长,创半年新高

3.1-分类-概率生成模型

数仓开发知识总结

关于Android Service服务的面试题

SQL sliding window

基于FPGA的FIR滤波器的实现(4)— 串行结构FIR滤波器的FPGA代码实现

2022-08-10 Group 4 Self-cultivation class study notes (every day)

1002 Write the number (20 points)

线程交替输出(你能想出几种方法)

计算YUV文件的PSNR与SSIM

redis操作

3.2-分类-Logistic回归

Service的两种启动方式与区别

1096 big beautiful numbers (15 points)

TF中的One-hot

1061 True or False (15 points)

Tidb二进制集群搭建

Tensorflow中使用tf.argmax返回张量沿指定维度最大值的索引

matplotlib

Production and optimization of Unity game leaderboards