
[Environment Setup] onnx-tensorrt

2022-08-09 09:03:00 【.云哲.】

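1. Introduction

onnx-tensorrt is a model inference framework: a TensorRT backend for ONNX that parses ONNX models into TensorRT engines and runs inference with them on NVIDIA GPUs.

2. Installation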

2.1 CUDA, cuDNN

2.2 CMake

2.3 protobuf (version >= 3.8.x)

sudo apt-get install autoconf automake libtool curl make g++ unzip

sudo apt-get autoremove libprotobuf-dev protobuf-compiler # uninstall the apt-packaged protobuf first

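git clone https://github.com/google/protobuf.git

cd protobuf

git checkout v3.8.0 # or another 3.x tag >= 3.8; recent releases no longer ship autogen.sh/configure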

git submodule sync

git submodule update --init --recursive

./autogen.sh

./configure

make -j 8

make check

sudo make install

sudo ldconfig # refresh shared library cache.

protoc --version

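# The two exports below are only needed if protobuf was configured with --prefix=/usr/local/protobuf; plain ./configure installs into /usr/local

export PATH=$PATH:/usr/local/protobuf/bin/

export PKG_CONFIG_PATH=/usr/local/protobuf/lib/pkgconfig/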
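Because the apt package and the source build can coexist, it helps to confirm which protoc is actually picked up from PATH and that it meets the 3.8 minimum. The Python sketch below does that. The helper names parse_protoc_version and check_protoc are illustrative, and the sketch assumes protoc prints its version in the usual "libprotoc X.Y[.Z]" form.

```python
import re
import shutil
import subprocess

# Section 2.3 asks for protobuf >= 3.8.x.
MIN_VERSION = (3, 8)


def parse_protoc_version(text):
    """Extract (major, minor, patch) from output such as 'libprotoc 3.8.0'.

    Assumption: `protoc --version` prints 'libprotoc X.Y' or 'libprotoc X.Y.Z'.
    """
    match = re.search(r"libprotoc\s+(\d+)\.(\d+)(?:\.(\d+))?", text)
    if match is None:
        raise ValueError(f"unrecognised protoc output: {text!r}")
    major, minor, patch = match.groups()
    return int(major), int(minor), int(patch or 0)


def check_protoc(min_version=MIN_VERSION):
    """Run the first `protoc` on PATH and return (meets_minimum, description)."""
    exe = shutil.which("protoc")
    if exe is None:
        return False, "protoc not found on PATH"
    result = subprocess.run([exe, "--version"], capture_output=True, text=True)
    version = parse_protoc_version(result.stdout)
    ok = version[:2] >= tuple(min_version)
    return ok, f"{exe} -> {'.'.join(map(str, version))}"


if __name__ == "__main__":
    ok, detail = check_protoc()
    print(("OK: " if ok else "FAIL: ") + detail)
```

Using shutil.which reports the binary that the shell would actually run, which is the one that matters when an older copy is still installed elsewhere.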

2.4 TensorRT (version 7.1.3.4): copy (or symlink) the contents of tensorrt/lib into /usr/lib
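To check that the TensorRT libraries really ended up in /usr/lib, a short script can list the versioned libnvinfer files it finds. This is a sketch under assumptions. It assumes the usual libnvinfer.so.<major>.<minor>.<patch> naming, so for 7.1.3.4 the files are likely to show 7.1.3 rather than all four components. The extra multiarch directory and the helper name find_versions are illustrative.

```python
import glob
import os
import re

# /usr/lib follows section 2.4; the multiarch directory is an extra guess.
SEARCH_DIRS = ["/usr/lib", "/usr/lib/x86_64-linux-gnu"]


def find_versions(lib, search_dirs=SEARCH_DIRS):
    """Map each versioned file such as libnvinfer.so.7.1.3 to a version tuple.

    Assumes the libNAME.so.<numbers> naming scheme; unversioned development
    links (libnvinfer.so) and non-numeric suffixes are skipped.
    """
    pattern = re.compile(re.escape(lib) + r"\.so\.(\d+(?:\.\d+)*)")
    found = {}
    for directory in search_dirs:
        for path in glob.glob(os.path.join(directory, lib + ".so.*")):
            match = pattern.fullmatch(os.path.basename(path))
            if match:
                found[path] = tuple(int(part) for part in match.group(1).split("."))
    return found


if __name__ == "__main__":
    for lib in ("libnvinfer", "libnvinfer_plugin"):
        versions = find_versions(lib)
        if not versions:
            print(f"{lib}: not found in {SEARCH_DIRS}")
        for path, version in sorted(versions.items()):
            print(f"{lib}: {path} ({'.'.join(map(str, version))})")
```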

2.5 onnx-tensorrt

sudo apt-get install libprotobuf-dev protobuf-compiler

sudo apt-get install swig

git clone --branch 7.1 https://github.com/onnx/onnx-tensorrt.git

cd onnx-tensorrt

git submodule sync

git submodule update --init --recursive

mkdir build && cd build

export TENSORRT_ROOT=$HOME/TensorRT # adjust to where the TensorRT package was unpacked

cmake .. -DTENSORRT_ROOT=$TENSORRT_ROOT

make -j 8

sudo make install
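Before running cmake, you may want to confirm that TENSORRT_ROOT points at a real TensorRT tree. The sketch below only looks for two headers, NvInfer.h and NvInferPlugin.h, under include/. That header list and the missing_headers helper are assumptions for illustration. They do not describe what onnx-tensorrt's CMake actually checks.

```python
import os

# Assumption: a TensorRT 7.x install has these headers under include/.
EXPECTED_HEADERS = ("include/NvInfer.h", "include/NvInferPlugin.h")


def missing_headers(root, expected=EXPECTED_HEADERS):
    """Return the expected relative paths that are absent under root."""
    return [rel for rel in expected if not os.path.isfile(os.path.join(root, rel))]


if __name__ == "__main__":
    # The default mirrors the $HOME/TensorRT location used in the notes.
    root = os.environ.get("TENSORRT_ROOT", os.path.expanduser("~/TensorRT"))
    missing = missing_headers(root)
    if missing:
        print(f"TENSORRT_ROOT={root} is missing: {', '.join(missing)}")
    else:
        print(f"TENSORRT_ROOT={root} contains the expected headers")
```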

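Notes (for building the Python bindings):

1. Open setup.py (e.g. with vim) and add INC_DIRS = ["$HOME/TensorRT/include"], writing out the absolute path in place of $HOME, since Python does not expand shell variables inside string literals.

2. In NvOnnxParser.h, add #define TENSORRTAPI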
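Note 1 matters because Python, unlike the shell, leaves "$HOME" untouched inside a string literal. Below is a minimal sketch of expanding the path explicitly in setup.py. It assumes TensorRT lives under ~/TensorRT as in the note. The rest of setup.py, which consumes INC_DIRS, is not shown. os.path.expandvars would work equally well on the "$HOME/..." form.

```python
import os

# Inside a Python string, "$HOME" stays literally "$HOME"; nothing expands it.
literal = "$HOME/TensorRT/include"

# Expand explicitly instead; ~/TensorRT is the location assumed in note 1.
expanded = os.path.expanduser("~/TensorRT/include")

# INC_DIRS is the variable name from note 1; how setup.py uses it is not shown.
INC_DIRS = [expanded]

if __name__ == "__main__":
    print("literal :", literal)
    print("expanded:", INC_DIRS[0])
```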

3. Usage

python setup.py build # run inside your virtual environment

python setup.py install

python

>>> import onnx

>>> import onnx_tensorrt.backend as backend

>>> import numpy as np

>>> model = onnx.load("model.onnx")

>>> engine = backend.prepare(model, device='CUDA:0')

>>> input_data = np.random.random(size=(32, 3, 224, 224)).astype(np.float32) # shape must match the model's input

>>> output_data = engine.run(input_data)[0]

>>> print(output_data)

>>> print(output_data.shape)
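The REPL session hard-codes an input of shape (32, 3, 224, 224), which only works if model.onnx expects exactly that. A small helper can read the shape from the model instead. This is a sketch with stated assumptions. concrete_shape and model_input_dims are hypothetical helper names. The sketch assumes the first graph input is the data tensor and that only the batch dimension is symbolic.

```python
def concrete_shape(dims, batch_size=1):
    """Turn ONNX input dims into a concrete shape for np.random.random.

    Positive ints are kept; symbolic ('N') or unknown (0) entries are
    assumed to be the batch axis and replaced by batch_size.
    """
    return tuple(d if isinstance(d, int) and d > 0 else batch_size for d in dims)


def model_input_dims(path):
    """Read the dims of the model's first graph input (requires the onnx package)."""
    import onnx  # imported lazily so concrete_shape() has no dependencies

    model = onnx.load(path)
    # Assumption: graph.input[0] is the data tensor, not an initializer.
    dims = model.graph.input[0].type.tensor_type.shape.dim
    # Each Dimension holds either dim_value (int) or dim_param (symbolic name).
    return [d.dim_value if d.HasField("dim_value") else d.dim_param for d in dims]


# In the REPL session above, the hard-coded shape could then be replaced by:
#   shape = concrete_shape(model_input_dims("model.onnx"), batch_size=32)
#   input_data = np.random.random(size=shape).astype(np.float32)
```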

边栏推荐

猜你喜欢

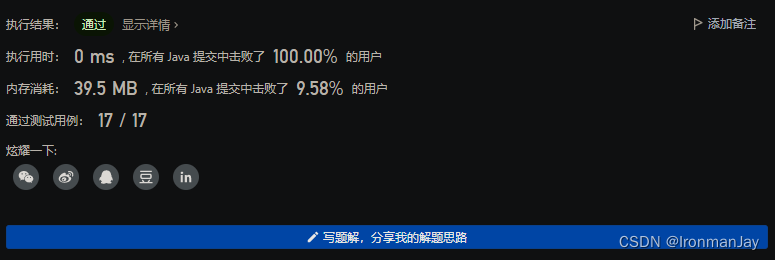

【LeetCode每日一题】——225.用队列实现栈

leetcode 36. 有效的数独(模拟题)

XCTF高校战“疫”网络安全分享赛Misc wp

![[Vulnerability reproduction] CVE-2018-12613 (remote file inclusion)](/img/0b/707eb4266cb5099ca1ef58225642bf.png)

[Vulnerability reproduction] CVE-2018-12613 (remote file inclusion)

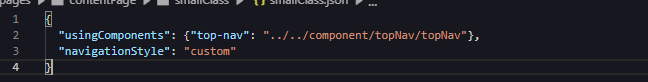

parse <compoN> error: Custom Component‘name should be form of my-component, not myComponent or MyCom

【场景化解决方案】构建门店通讯录,“门店通”实现零售门店标准化运营

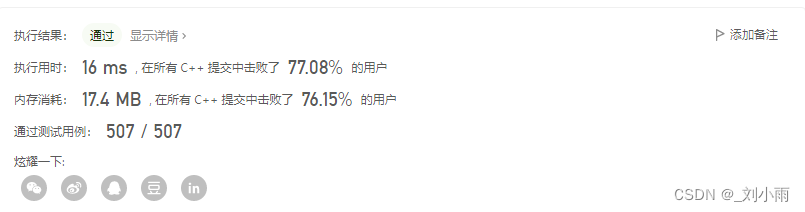

leetcode 34. 在排序数组中查找元素的第一个和最后一个位置(二分经典题)

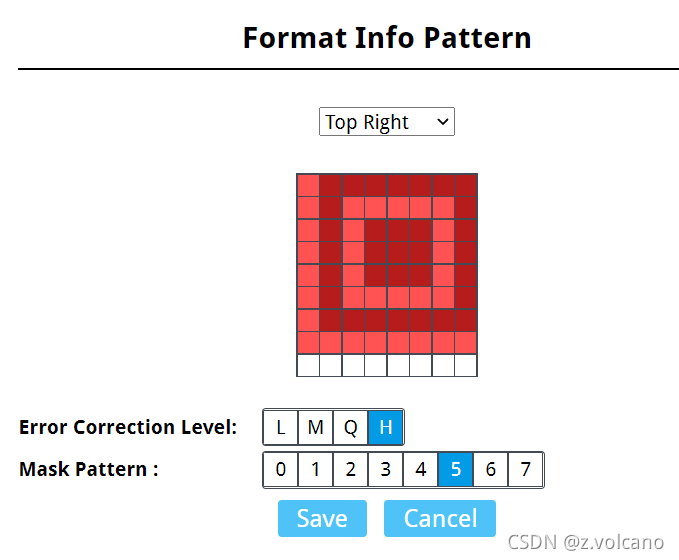

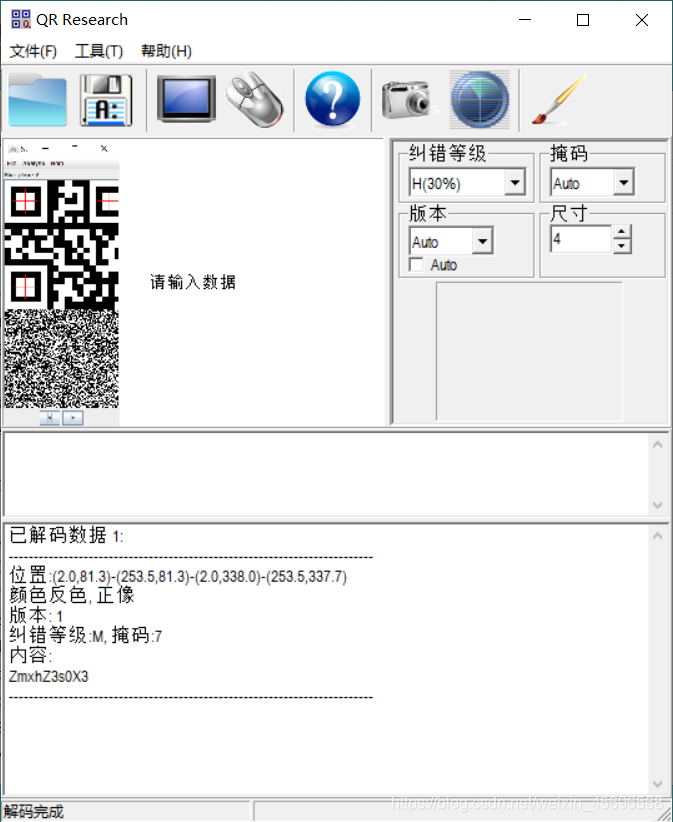

ctf misc 图片题知识点

BUUCTF MISC brush notes (2)

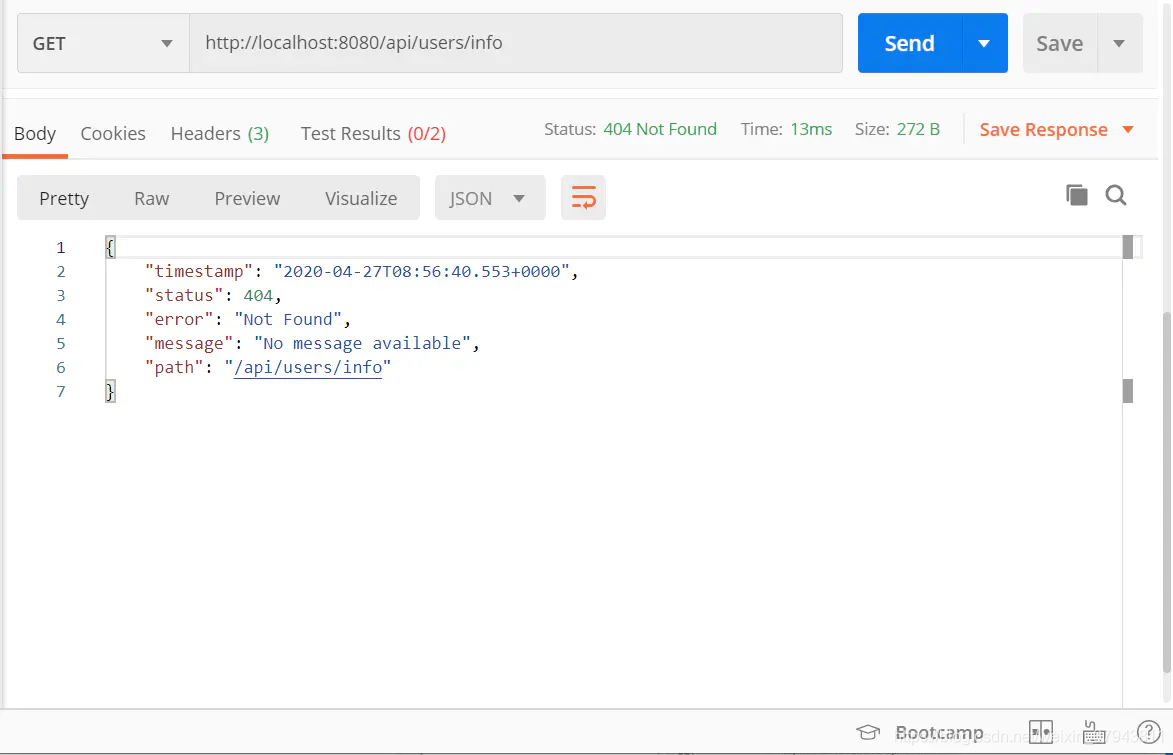

RESTful

随机推荐

内存监控以及优化

canal工作原理及简单案例演示

firefox e.path无效

无符号整数文法和浮点数文法

PoPW token distribution mechanism may ignite the next bull market

【KD】2022 KDD Compressing Deep Graph Neural Networks via Adversarial Knowledge Distillation

长辈相亲

基于 JSch 实现服务的自定义监控解决方案

epoll LT和ET 问题总结

【场景化解决方案】构建设备通讯录,制造业设备上钉实现设备高效管理

gin清晰简化版curd接口例子

ctf misc 图片题知识点

GBJ610-ASEMI超薄整流扁桥GBJ610

H5页面px不对,单位不对等问题

Regular Expressions for Shell Programming

二叉树的遍历(非递归)

【场景化解决方案】钉钉财务审批同步金蝶云星空

parse <compoN> error: Custom Component‘name should be form of my-component, not myComponent or MyCom

腾讯云服务器修改为root登录安装宝塔面板

【愚公系列】2022年08月 Go教学课程 033-结构体方法重写、方法值、方法表达式