
How does Redis solve the problems of cache avalanche, cache breakdown, and cache penetration?

2022-04-23 13:54:00 [ZHY_ERIC]

We often face three kinds of cache anomalies: cache avalanche, cache breakdown, and cache penetration. When any of them occurs, a large number of requests pile up at the database layer. If request concurrency is high, this can bring the database down or make it fail.

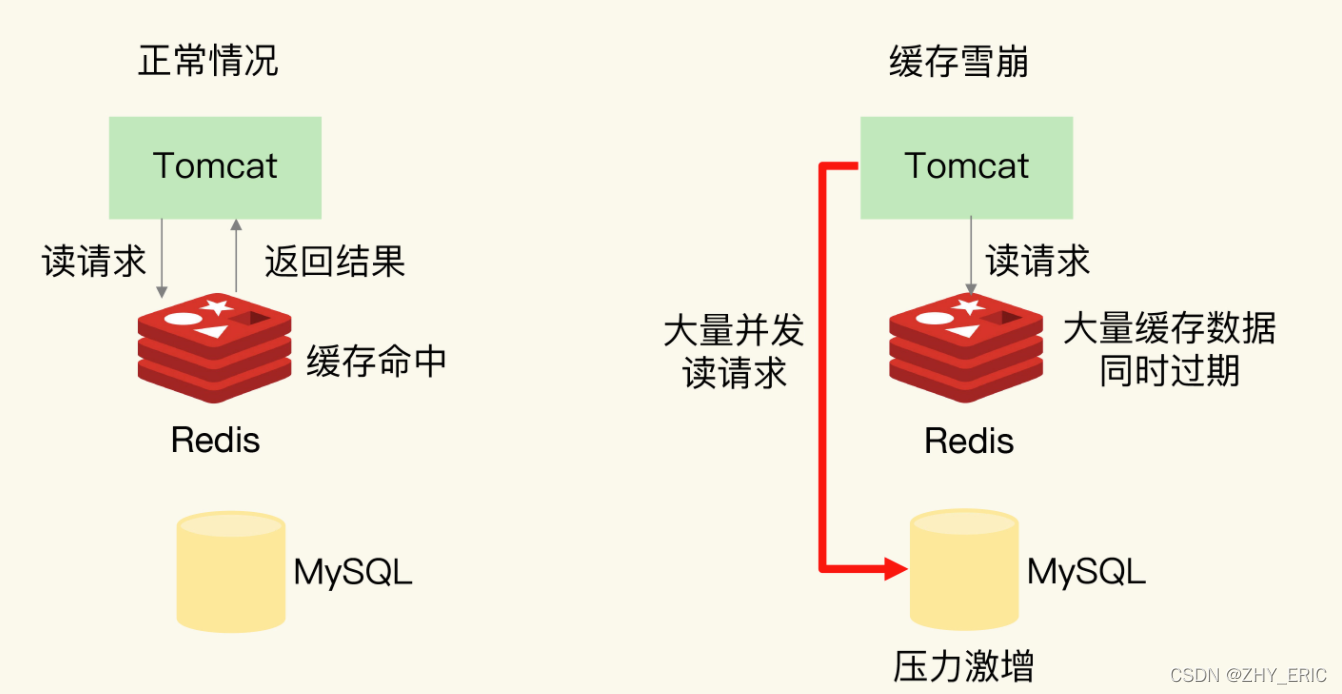

1. Cache Avalanche

A cache avalanche occurs when a large number of application requests cannot be served by the Redis cache. The application then sends all of those requests to the database layer, and the load on the database surges.

Cache avalanches generally have two causes, and each one calls for a different response.

The first cause is that a large amount of cached data expires at the same time, so many requests cannot be served from the cache.

Specifically, suppose data is written to the cache with an expiration time and a large amount of it expires at the same moment. Any later access to that data misses the cache, so the application sends the request to the database and reads the data from there. If the application has many concurrent requests, the database comes under heavy pressure. That pressure in turn affects how the database handles other, normal business requests.

There are two solutions for a cache avalanche caused by a large amount of data expiring at once.

First, avoid giving a large amount of data the same expiration time. The business may really need some data to expire at about the same time. In that case, when you set each key's expiration with the EXPIRE command, add a small random offset to it (for example, a random 1 to 3 minutes). Expiration times then differ from key to key, but not by much. This avoids a mass simultaneous expiration, while the data still expires at roughly the same time and business requirements are still met.
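To make the jitter idea concrete, here is a minimal sketch. The function name `jittered_ttl` and its defaults are hypothetical and chosen to match the 1 to 3 minute example above. The redis-py call in the trailing comment assumes the standard `Redis.set(name, value, ex=...)` signature. The function only computes the TTL; it does not talk to Redis.

```python
import random
from typing import Optional


def jittered_ttl(base_seconds: int, min_jitter: int = 60, max_jitter: int = 180,
                 rng: Optional[random.Random] = None) -> int:
    """Return base_seconds plus a random offset of 1-3 minutes (by default)."""
    rng = rng if rng is not None else random.Random()
    # randint is inclusive on both ends, so the result is in
    # [base_seconds + min_jitter, base_seconds + max_jitter].
    return base_seconds + rng.randint(min_jitter, max_jitter)


# Example: a batch of keys that should all live "about one hour".
ttls = [jittered_ttl(3600) for _ in range(5)]
print(ttls)

# With redis-py this would typically be used as, e.g.:
#   r.set(key, value, ex=jittered_ttl(3600))
# or, for an existing key:
#   r.expire(key, jittered_ttl(3600))
```

All keys still expire within a few minutes of the one-hour mark, so the business requirement of expiring "together" still roughly holds.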

Besides fine-tuning expiration times, we can also use service degradation to deal with a cache avalanche. Service degradation means handling different kinds of data in different ways while a cache avalanche is in progress.

- When the business application accesses non-core data (for example, e-commerce product attributes), temporarily stop querying the cache for it. Instead, return predefined information, a null value, or an error message directly;

- When the business application accesses core data (for example, e-commerce inventory), still allow cache queries, and on a cache miss, continue to read from the database.
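The two rules above can be sketched as a cache-aside reader with a "degraded" switch. Several details here are assumptions for illustration only:

- Treating keys with an `inventory:` prefix as core data is hypothetical.
- A plain dict stands in for Redis.
- `load_from_db` is a placeholder for the real database query.

```python
from typing import Callable, Dict, Optional

# Hypothetical rule: keys with these prefixes count as core data (e.g. inventory).
CORE_PREFIXES = ("inventory:",)


class DegradableReader:
    """Cache-aside reader that can be switched into a degraded mode (a sketch)."""

    def __init__(self, cache: Dict[str, str],
                 load_from_db: Callable[[str], Optional[str]],
                 fallback: Optional[str] = None):
        self.cache = cache              # stands in for the Redis cache
        self.load_from_db = load_from_db
        self.fallback = fallback        # predefined value returned for non-core data
        self.degraded = False           # switched on while an avalanche is happening

    def get(self, key: str) -> Optional[str]:
        if self.degraded and not key.startswith(CORE_PREFIXES):
            # Non-core data: skip both cache and database, return the predefined value.
            return self.fallback
        value = self.cache.get(key)
        if value is None:
            # Core data (or normal mode): fall through to the database on a miss.
            value = self.load_from_db(key)
            if value is not None:
                self.cache[key] = value
        return value
```

While `degraded` is set, only core keys can ever reach `load_from_db`. That is what limits the database load.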

With service degradation in place, only requests for part of the expired data (the core data) reach the database, so the pressure on the database is much lower. The following figure shows how data requests are executed under service degradation.

Mass simultaneous expiration is not the only cause of a cache avalanche. The second cause is that the Redis cache instance goes down and can no longer process requests. A large number of requests then pile up at the database layer all at once, which also causes a cache avalanche.

For this case, the first suggestion is to implement service circuit breaking or request rate limiting in the business system.

Service circuit breaking works as follows. When a cache avalanche occurs, we suspend the business application's access to the cache system's interface. This prevents a cascading avalanche in the database, or even the collapse of the whole system. More specifically, when the business application calls the cache interface, the cache client does not send the request to the Redis cache instance and returns immediately instead. Only after the Redis cache instance has recovered are application requests allowed through to the cache system again.

This keeps the flood of requests caused by cache misses from piling up at the database system, so the database keeps running normally.
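Here is a minimal sketch of the circuit-breaking idea, assuming a simple failure-count policy. The class name, thresholds, and the "one trial call after a timeout" rule are illustrative choices, not a specific library's API. In practice `fn` might be something like a redis-py `Redis.get` bound method, which raises `redis.exceptions.ConnectionError` when the server is unreachable. The caller should treat the returned `fallback` as "cache unavailable", not as a cache miss, so it does not turn around and query the database.

```python
import time
from typing import Any, Callable, Optional


class CacheCircuitBreaker:
    """Toy circuit breaker wrapped around cache calls (a sketch, not a library API)."""

    def __init__(self, failure_threshold: int = 3, reset_timeout: float = 5.0,
                 clock: Callable[[], float] = time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None   # None means the breaker is closed

    def call(self, fn: Callable[..., Any], *args: Any, fallback: Any = None) -> Any:
        if self.opened_at is not None and self.clock() - self.opened_at < self.reset_timeout:
            # Open: do not contact Redis at all, return immediately.
            return fallback
        # Closed, or open long enough that one trial call is allowed (half-open).
        try:
            result = fn(*args)
        except Exception:
            self.failures += 1
            if self.opened_at is not None or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()    # (re)open the breaker
            return fallback
        self.failures = 0
        self.opened_at = None                    # a successful call closes the breaker
        return result
```

The injectable `clock` is only there so the timeout behaviour can be tested without sleeping.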

Circuit breaking keeps the database running, but it suspends access to the entire cache system, which has a broad impact on business applications. To reduce this impact, we can use request rate limiting instead.

Request rate limiting means controlling how many requests per second are admitted at the business system's request entry point, so that too many requests are not sent on to the database.
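The article does not name a specific rate-limiting algorithm. One common choice is a token bucket, sketched below as an assumption. Tokens refill at `rate_per_sec`, up to `capacity`, and each admitted request spends one token. Requests that find the bucket empty are rejected at the entry point before they can reach the cache or database.

```python
import time
from typing import Callable


class TokenBucket:
    """Token-bucket rate limiter (one possible algorithm; a sketch)."""

    def __init__(self, rate_per_sec: float, capacity: int,
                 clock: Callable[[], float] = time.monotonic):
        self.rate = rate_per_sec
        self.capacity = capacity
        self.clock = clock
        self.tokens = float(capacity)   # start full so short bursts are allowed
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False    # over the limit: reject at the entry point
```

In a deployment with several application servers, this state would have to be shared (or the limit split per server). This sketch only covers a single process.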

The second suggestion is to prevent the problem in advance.

Build a highly reliable Redis cache cluster from master and replica nodes. If the Redis master goes down, a replica can be promoted to master and continue to provide caching. This avoids a cache avalanche caused by a cache instance going down.

A cache avalanche occurs when a large amount of data becomes unavailable in the cache at once. Next, let's look at cache breakdown, which occurs when a single piece of hot data expires. Compared with a cache avalanche, cache breakdown involves far less data, and it is handled differently.

2. Cache Breakdown

Cache breakdown means that requests for a very frequently accessed hot key cannot be served from the cache. All the requests for that key are then sent to the back-end database at once. The database load surges, which affects its ability to process other requests. Cache breakdown usually happens when hot data expires, as shown in the figure below:

The solution to the database load surge caused by cache breakdown is fairly direct: do not set an expiration time on hot data that is accessed very frequently. Requests for hot data can then always be served from the cache. Redis's throughput of tens of thousands of operations per second is enough to handle a large volume of concurrent requests.
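In Redis terms, "no expiration time" means writing the key with a plain `SET` and no `EX`/`PX` option, or removing an existing TTL with `PERSIST`. To keep the example runnable without a server, the sketch below uses a tiny in-memory TTL cache as a stand-in for Redis. It stores hot keys without a TTL and other keys with a normal one. The `HOT_KEYS` set and the one-hour default are hypothetical.

```python
import time
from typing import Any, Callable, Dict, Optional, Tuple


class TTLCache:
    """Tiny in-memory stand-in for Redis SET with / without EX (a sketch)."""

    def __init__(self, clock: Callable[[], float] = time.monotonic):
        self.clock = clock
        self._data: Dict[str, Tuple[Any, Optional[float]]] = {}

    def set(self, key: str, value: Any, ttl: Optional[float] = None) -> None:
        # ttl=None behaves like SET without EX: the key never expires.
        expires_at = None if ttl is None else self.clock() + ttl
        self._data[key] = (value, expires_at)

    def get(self, key: str) -> Any:
        entry = self._data.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if expires_at is not None and self.clock() >= expires_at:
            del self._data[key]      # expired: behaves like a cache miss
            return None
        return value


# Hypothetical set of keys known to be hot.
HOT_KEYS = {"product:hot-sku"}


def cache_put(cache: TTLCache, key: str, value: Any, default_ttl: float = 3600) -> None:
    # Hot keys get no expiration, so they cannot suddenly miss; others get a TTL.
    cache.set(key, value, ttl=None if key in HOT_KEYS else default_ttl)
```

The trade-off is that hot keys without a TTL must be updated or deleted explicitly when the underlying data changes.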

3. Cache Penetration

Cache penetration means that the requested data is in neither the Redis cache nor the database. A request first misses the cache, then queries the database and finds that the data is not there either. The application therefore cannot read the data from the database and write it into the cache to serve later requests, so the cache becomes mere decoration. If the application keeps receiving a large number of requests for such data, both the cache and the database come under heavy pressure, as shown in the figure below:

So when does cache penetration occur? Generally, in two situations:

- Business-layer mistakes: the data was deleted by mistake from both the cache and the database, so neither has it;

- Malicious attacks: requests that deliberately target data that is not in the database.

Here are three solutions for avoiding the impact of cache penetration.

The first is to cache a null or default value.

Once cache penetration occurs, we can cache a value in Redis for the data being queried. This is either a null value or a default value agreed on with the business layer (for example, inventory can default to 0). When the application queries the same data again, it reads the null or default value directly from Redis and returns it to the business application. This keeps large numbers of requests away from the database, so the database keeps running normally.
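Here is a minimal sketch of caching misses, assuming a dict in place of Redis and a hypothetical `MISSING` placeholder string to mark "known absent". The optional `default` plays the role of the agreed default value, such as `"0"` for inventory. In a real deployment you would usually also give the placeholder a short expiration time, so that data added to the database later becomes visible. That detail is left out here.

```python
from typing import Callable, Dict, Optional

# Hypothetical placeholder stored in the cache for keys absent from the database.
MISSING = "__null__"


class NullCachingReader:
    """Cache-aside reader that also caches misses (a sketch)."""

    def __init__(self, load_from_db: Callable[[str], Optional[str]],
                 default: Optional[str] = None,
                 cache: Optional[Dict[str, str]] = None):
        self.load_from_db = load_from_db
        self.default = default                  # e.g. "0" for inventory
        self.cache = {} if cache is None else cache

    def get(self, key: str) -> Optional[str]:
        cached = self.cache.get(key)
        if cached is not None:
            # Cache hit, possibly on the placeholder for a known-missing key.
            return self.default if cached == MISSING else cached
        value = self.load_from_db(key)
        if value is None:
            # Remember the miss so later lookups stop at the cache.
            self.cache[key] = MISSING
            return self.default
        self.cache[key] = value
        return value
```

Repeated lookups for the same nonexistent key now cost one database query in total rather than one per request.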

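The second option is to use a Bloom filter to quickly determine whether the data exists. When data is written to the database, it is also added to the Bloom filter. A request first checks the filter, and if the filter says the data does not exist, there is no need to query the database at all. A Bloom filter can return false positives but never false negatives, so no existing data is wrongly rejected. This avoids querying the database just to learn whether data exists and reduces database load.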
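Below is a minimal, self-contained Bloom filter to illustrate the mechanism. It derives `num_hashes` bit positions from SHA-256 with different prefixes, and the sizes are arbitrary. In production one would size the filter from the expected item count and false-positive rate, or use a server-side implementation such as the RedisBloom module's `BF.ADD` / `BF.EXISTS` commands. `guarded_load` is a hypothetical helper showing where the check sits.

```python
import hashlib
from typing import Callable, Iterator, Optional


class BloomFilter:
    """Simple Bloom filter: false positives possible, false negatives not (a sketch)."""

    def __init__(self, num_bits: int = 1024, num_hashes: int = 3):
        self.num_bits = num_bits
        self.num_hashes = num_hashes
        self.bits = bytearray((num_bits + 7) // 8)

    def _positions(self, item: str) -> Iterator[int]:
        # Derive independent-looking positions by salting the hash with the index i.
        for i in range(self.num_hashes):
            digest = hashlib.sha256(f"{i}:{item}".encode()).digest()
            yield int.from_bytes(digest[:8], "big") % self.num_bits

    def add(self, item: str) -> None:
        for pos in self._positions(item):
            self.bits[pos // 8] |= 1 << (pos % 8)

    def might_contain(self, item: str) -> bool:
        return all(self.bits[pos // 8] & (1 << (pos % 8)) for pos in self._positions(item))


def guarded_load(key: str, bloom: BloomFilter,
                 load_from_db: Callable[[str], Optional[str]]) -> Optional[str]:
    if not bloom.might_contain(key):
        return None              # definitely not in the database: skip the query
    return load_from_db(key)     # possibly present (could be a false positive)
```

Every key written to the database must also be passed to `add`. Otherwise the "no false negatives" guarantee no longer holds for that key.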

The last option is to check requests at the front of the request entry point. One cause of cache penetration is a large volume of malicious requests for nonexistent data. An effective countermeasure is therefore to validate the requests the business system receives at the entry point. Malicious requests are filtered out there so they never reach the back-end cache and database, for example requests with unreasonable parameters, illegal parameter values, or nonexistent fields. Requests filtered out this way cannot cause cache penetration.
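The validation rules depend entirely on the business. The sketch below assumes a hypothetical request shape, `{"item_id": "<positive integer>"}`, with an assumed upper bound on IDs. It rejects the three kinds of bad requests mentioned above: unknown fields, illegal values, and unreasonable values.

```python
import re
from typing import Dict

# Hypothetical rules for an item lookup endpoint.
ALLOWED_FIELDS = {"item_id"}
ITEM_ID_PATTERN = re.compile(r"[1-9][0-9]{0,9}")   # positive integer, at most 10 digits
MAX_ITEM_ID = 10_000_000                           # assumed largest valid ID


def is_valid_request(params: Dict[str, object]) -> bool:
    if set(params) - ALLOWED_FIELDS:
        return False                    # request contains fields that do not exist
    raw = params.get("item_id")
    if not isinstance(raw, str) or not ITEM_ID_PATTERN.fullmatch(raw):
        return False                    # missing or illegal parameter value
    return int(raw) <= MAX_ITEM_ID      # out-of-range IDs cannot exist


# Only requests that pass the check go on to the cache and the database.
print(is_valid_request({"item_id": "42"}))
print(is_valid_request({"item_id": "-1"}))
```

Checks like these are cheap and run before any cache or database access, so rejected requests cost almost nothing.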

4. Summary

In terms of causes, cache avalanche and cache breakdown happen mainly because the data is not in the cache. Cache penetration happens because the data is in neither the cache nor the database. With an avalanche or breakdown, once the data is reloaded from the database into the cache, the application can quickly read it from the cache again and database pressure drops accordingly. With cache penetration, however, both the Redis cache and the database stay under continuous request pressure.

Note that circuit breaking, service degradation, and request rate limiting are all “lossy” approaches: they protect the stability of the database and the overall system at some cost to business applications. With service degradation, for example, requests for some data can only receive an error response and cannot be processed normally. With circuit breaking, the entire cache system is suspended, so the impact on the business is even larger. With request rate limiting, the throughput of the whole business system drops and fewer user requests can be processed concurrently, which hurts the user experience.

My advice, therefore, is to favor preventive measures wherever possible:

- For cache avalanche, set data expiration times sensibly and build a highly reliable cache cluster;

- For cache breakdown, do not set an expiration time when caching hot data that is accessed very frequently;

- For cache penetration, detect malicious requests in advance at the entry point, or standardize database deletion operations to avoid accidental deletes.

Copyright notice

This article was written by [ZHY_ ERIC]. Please include a link to the original when reposting. Thank you.

https://yzsam.com/2022/04/202204231350270749.html

边栏推荐

- Express ② (routing)

- Oracle view related

- Oracle defines self incrementing primary keys through triggers and sequences, and sets a scheduled task to insert a piece of data into the target table every second

- Modify the Jupiter notebook style

- freeCodeCamp----arithmetic_ Arranger exercise

- Problems encountered in the project (V) understanding of operating excel interface poi

- Oracle database combines the query result sets of multiple columns into one row

- leetcode--380.O(1) 时间插入、删除和获取随机元素

- 10g database cannot be started when using large memory host

- 19c RAC steps for modifying VIP and scanip - same network segment

猜你喜欢

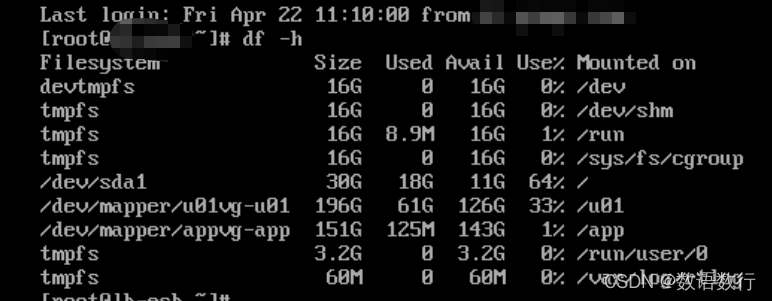

crontab定时任务输出产生大量邮件耗尽文件系统inode问题处理

Special window function rank, deny_ rank, row_ number

Dolphin scheduler configuring dataX pit records

大专的我,闭关苦学 56 天,含泪拿下阿里 offer,五轮面试,六个小时灵魂拷问

Handling of high usage of Oracle undo

记录一个奇怪的bug:缓存组件跳转之后出现组件复制

![Three characteristics of volatile keyword [data visibility, prohibition of instruction rearrangement and no guarantee of operation atomicity]](/img/ec/b1e99e0f6e7d1ef1ce70eb92ba52c6.png)

Three characteristics of volatile keyword [data visibility, prohibition of instruction rearrangement and no guarantee of operation atomicity]

Express ② (routing)

![MySQL [SQL performance analysis + SQL tuning]](/img/71/2ca1a5799a2c7a822158d8b73bd539.png)

MySQL [SQL performance analysis + SQL tuning]

![MySQL [acid + isolation level + redo log + undo log]](/img/52/7e04aeeb881c8c000cc9de82032e97.png)

MySQL [acid + isolation level + redo log + undo log]

随机推荐

Oracle generates millisecond timestamps

Atcoder beginer contest 248c dice sum (generating function)

Decentralized Collaborative Learning Framework for Next POI Recommendation

Static interface method calls are not supported at language level '5'

Ora-16047 of a DG environment: dgid mismatch between destination setting and target database troubleshooting and listening vncr features

【项目】小帽外卖(八)

10g database cannot be started when using large memory host

Window function row commonly used for fusion and de duplication_ number

Detailed explanation and usage of with function in SQL

OSS cloud storage management practice (polite experience)

Storage scheme of video viewing records of users in station B

Oracle modify default temporary tablespace

Test the time required for Oracle library to create an index with 7 million data in a common way

Crontab timing task output generates a large number of mail and runs out of file system inode problem processing

redis如何解决缓存雪崩、缓存击穿和缓存穿透问题

Special window function rank, deny_ rank, row_ number

Oracle view related

神经元与神经网络

函数只执行第一次的执行一次 once函数

Dynamic subset division problem