Yapılacaklar:

- Yolov3 model.py ve detect.py dosyası basitleştirilecek.

- Farklı nms algoritmaları test edilecek.

This work is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License. Skin Lesion detection using YOLO This project deal

🆕 Are you looking for a new YOLOv3 implemented by TF2.0 ? If you hate the fucking tensorflow1.x very much, no worries! I have implemented a new YOLOv

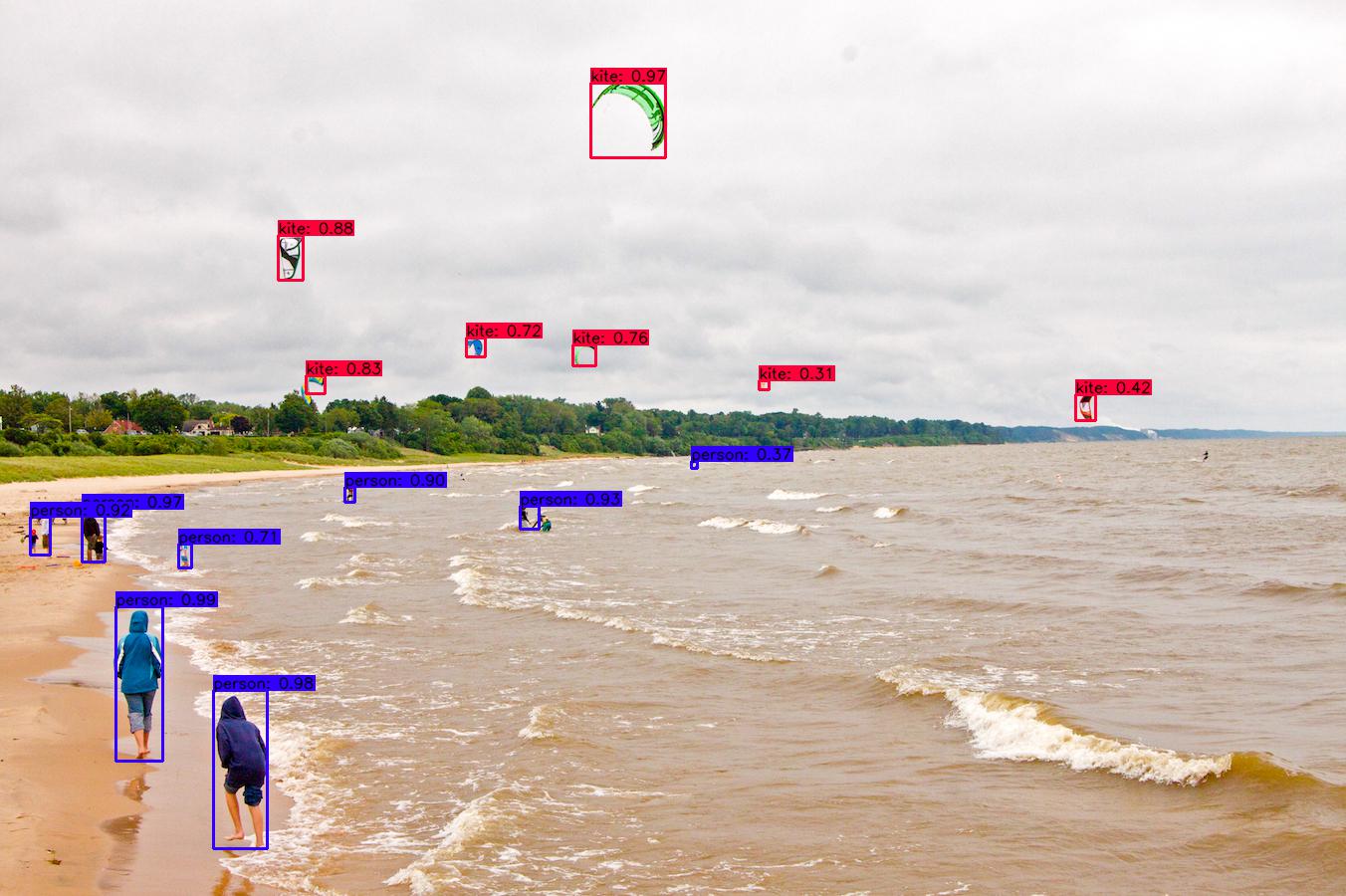

Object Detection with YOLOv3 Bu projede YOLOv3-608 modeli kullanılmıştır. Requirements Python 3.8 OpenCV Numpy Documentation Yolo ile ilgili detaylı b

Electronic-Component-YOLOv3 Introduce This project created to detect, count, and recognize multiple custom object using YOLOv3-Tiny method. The target

YOLOV4:You Only Look Once目标检测模型-修改mobilenet系列主干网络-在Keras当中的实现 2021年2月8日更新: 加入letterbox_image的选项,关闭letterbox_image后网络的map一般可以得到提升。

YOLTv4 builds upon YOLT and SIMRDWN, and updates these frameworks to use the most performant version of YOLO, YOLOv4. YOLTv4 is designed to detect objects in aerial or satellite imagery in arbitrarily large images that far exceed the ~600×600 pixel size typically ingested by deep learning object detection frameworks.

YOLOV4:You Only Look Once目标检测模型在pytorch当中的实现 2021年2月7日更新: 加入letterbox_image的选项,关闭letterbox_image后网络的map得到大幅度提升。 目录 性能情况 Performance 实现的内容 Achievement

Movement classification The goal of this project would be movement classification of people, in other words, walking (normal and fast) and running. Yo

Object tracking implemented with YOLOv4, DeepSort, and TensorFlow. YOLOv4 is a state of the art algorithm that uses deep convolutional neural networks to perform object detections. We can take the output of YOLOv4 feed these object detections into Deep SORT (Simple Online and Realtime Tracking with a Deep Association Metric) in order to create a highly accurate object tracker.

This is model use their own visualization libraries. But the visualization parameters are not enough. That's why the visualization module of the torchyolo library will be added.

bug enhancement| Model | Test Size | APtest | AP50test | AP75test | batch 1 fps | batch 32 average time | | :-- | :-: | :-: | :-: | :-: | :-: | :-: | | YOLOv7 | 640 | 51.4% | 69.7% | 55.9% | 161 fps | 2.8 ms | | YOLOv7-X | 640 | 53.1% | 71.2% | 57.8% | 114 fps | 4.3 ms | | | | | | | | | | YOLOv7-W6 | 1280 | 54.9% | 72.6% | 60.1% | 84 fps | 7.6 ms | | YOLOv7-E6 | 1280 | 56.0% | 73.5% | 61.2% | 56 fps | 12.3 ms | | YOLOv7-D6 | 1280 | 56.6% | 74.0% | 61.8% | 44 fps | 15.0 ms | | YOLOv7-E6E | 1280 | 56.8% | 74.4% | 62.1% | 36 fps | 18.7 ms |

Model | Size | mAPval0.5:0.95 | SpeedT4trt fp16 b1(fps) | SpeedT4trt fp16 b32(fps) | Params(M) | FLOPs(G) -- | -- | -- | -- | -- | -- | -- YOLOv6-N | 640 | 37.5 | 779 | 1187 | 4.7 | 11.4 YOLOv6-S | 640 | 45.0 | 339 | 484 | 18.5 | 45.3 YOLOv6-M | 640 | 50.0 | 175 | 226 | 34.9 | 85.8 YOLOv6-L | 640 | 52.8 | 98 | 116 | 59.6 | 150.7 YOLOv6-N6 | 1280 | 44.9 | 228 | 281 | 10.4 | 49.8 YOLOv6-S6 | 1280 | 50.3 | 98 |108 | 41.4 | 198.0 YOLOv6-M6 | 1280 | 55.2 | 47 | 55 | 79.6 | 379.5 YOLOv6-L6 | 1280 | 57.2 | 26 | 29 | 140.4 | 673.4

| Model | size

(pixels) | mAPval

50-95 | mAPval

50 | Speed

CPU b1

(ms) | Speed

V100 b1

(ms) | Speed

V100 b32

(ms) | params

(M) | FLOPs

@640 (B) |

|------------------------------------------------------------------------------------------------------|-----------------------|----------------------|-------------------|------------------------------|-------------------------------|--------------------------------|--------------------|------------------------|

| YOLOv5n | 640 | 28.0 | 45.7 | 45 | 6.3 | 0.6 | 1.9 | 4.5 |

| YOLOv5s | 640 | 37.4 | 56.8 | 98 | 6.4 | 0.9 | 7.2 | 16.5 |

| YOLOv5m | 640 | 45.4 | 64.1 | 224 | 8.2 | 1.7 | 21.2 | 49.0 |

| YOLOv5l | 640 | 49.0 | 67.3 | 430 | 10.1 | 2.7 | 46.5 | 109.1 |

| YOLOv5x | 640 | 50.7 | 68.9 | 766 | 12.1 | 4.8 | 86.7 | 205.7 |

| | | | | | | | | |

| YOLOv5n6 | 1280 | 36.0 | 54.4 | 153 | 8.1 | 2.1 | 3.2 | 4.6 |

| YOLOv5s6 | 1280 | 44.8 | 63.7 | 385 | 8.2 | 3.6 | 12.6 | 16.8 |

| YOLOv5m6 | 1280 | 51.3 | 69.3 | 887 | 11.1 | 6.8 | 35.7 | 50.0 |

| YOLOv5l6 | 1280 | 53.7 | 71.3 | 1784 | 15.8 | 10.5 | 76.8 | 111.4 |

| YOLOv5x6

+ [TTA] | 1280

1536 | 55.0

55.8 | 72.7

72.7 | 3136

- | 26.2

- | 19.4

- | 140.7

- | 209.8

- |

|Model |size |mAPval

0.5:0.95 |mAPtest

0.5:0.95 | Speed V100

(ms) | Params

(M) |FLOPs

(G)| weights |

| ------ |:---: | :---: | :---: |:---: |:---: | :---: | :----: |

|YOLOX-s |640 |40.5 |40.5 |9.8 |9.0 | 26.8 | github |

|YOLOX-m |640 |46.9 |47.2 |12.3 |25.3 |73.8| github |

|YOLOX-l |640 |49.7 |50.1 |14.5 |54.2| 155.6 | github |

|YOLOX-x |640 |51.1 |51.5 | 17.3 |99.1 |281.9 | github |

|YOLOX-Darknet53 |640 | 47.7 | 48.0 | 11.1 |63.7 | 185.3 | github |

|Model |size |mAPval

0.5:0.95 | Params

(M) |FLOPs

(G)| weights |

| ------ |:---: | :---: |:---: |:---: | :---: |

|YOLOX-Nano |416 |25.8 | 0.91 |1.08 | github |

|YOLOX-Tiny |416 |32.8 | 5.06 |6.45 | github |

Full Changelog: https://github.com/kadirnar/torchyolo/commits/v0.0.1

Source code(tar.gz)FILM: Frame Interpolation for Large Scene Motion Project | Paper | YouTube | Benchmark Scores Tensorflow 2 implementation of our high quality frame in

ADDS-DepthNet This is the official implementation of the paper Self-supervised Monocular Depth Estimation for All Day Images using Domain Separation I

Attention-based CNN-LSTM and XGBoost hybrid model for stock prediction Requirements The code has been tested running under Python 3.7.4, with the foll

SSL_OSC Graph Self-Supervised Learning for Optoelectronic Properties of Organic Semiconductors

An Image is Worth 16x16 Words, What is a Video Worth? paper Official PyTorch Implementation Gilad Sharir, Asaf Noy, Lihi Zelnik-Manor DAMO Academy, Al

STRIVE: Scene Text Replacement In Videos Dataset Types: RoboText SynthText RealWorld videos RoboText : Videos of texts collected using navigation robo

The Empirical Investigation of Representation Learning for Imitation (EIRLI)

NDC PyTorch implementation of Neural Dual Contouring. Citation We are still writing the paper while adding more improvements and applications. If you

Unified Instance and Knowledge Alignment Pretraining for Aspect-based Sentiment Analysis Requirements python 3.7 pytorch-gpu 1.7 numpy 1.19.4 pytorch_

Point Transformer - Pytorch Implementation of the Point Transformer self-attention layer, in Pytorch. The simple circuit above seemed to have allowed

MMDetection_Lite 基于mmdetection 实现一些轻量级检测模型,安装方式和mmdeteciton相同 voc0712 voc 0712训练 voc2007测试 coco预训练 thundernet_voc_shufflenetv2_1.5 input shape mAP 320

jax-variational-diffwave Jax/Flax implementation of Variational-DiffWave. (Zhifeng Kong et al., 2020, Diederik P. Kingma et al., 2021.) DiffWave with

Non-attentive Tacotron - PyTorch Implementation This is Pytorch Implementation of Google's Non-attentive Tacotron, text-to-speech system. There is som

coldcuts coldcuts is an R package that allows you to draw and plot automatically segmentations from 3D voxel arrays. The name is inspired by one of It

Label-Free Model Evaluation with Semi-Structured Dataset Representations Prerequisites This code uses the following libraries Python 3.7 NumPy PyTorch

Beyond Self-attention: External Attention using Two Linear Layers for Visual Tasks paper : https://arxiv.org/abs/2105.02358 Jittor code will come soon

LightningDOT: Pre-training Visual-Semantic Embeddings for Real-Time Image-Text Retrieval This repository contains source code and pre-trained/fine-tun

Kaggle: Cell Instance Segmentation The goal of this challenge is to detect cells in microscope images. with simple view on how many cels have been ann

NHS AI Lab Skunkworks project: Long Stayer Risk Stratification A pilot project for the NHS AI Lab Skunkworks team, Long Stayer Risk Stratification use

Face-Detection-flask-gunicorn-nginx-docker This is a simple implementation of dockerized face-detection restful-API implemented with flask, Nginx, and