当前位置:网站首页>File system read and write performance test practice

File system read and write performance test practice

2022-04-23 16:20:00 【Dinosaur brother Wang Zi】

Preface :

I was going to directly test the read-write performance of disk blocks ( Random, speaking, reading and writing 、 Sequential reading and writing ), However, the environment has not been built well , So we can only go back to the next place , Test the reading and writing performance of the file system ( Random, speaking, reading and writing 、 Sequential reading and writing ).

There is a difference between the two , You can refer to another blog of the writer : Disk performance index monitoring practice

1. Environmental preparation

I installed docker Ubuntu:18.04 edition , All commands are tested in this container ;

Command to install (fio command )

apt-get install -y fio2.fio command

fio Tools are mainstream third parties I/O Testing tools , It offers a wide range of customizable options , It can be used to test bare disks 、 The application of file system in various scenarios I/O performance

You can use man fio Order to see , Here are some of the parameters that support :

filename=/dev/emcpowerb Support file system or bare device ,-filename=/dev/sda2 or -filename=/dev/sdb

direct=1 The test process bypasses the machine's own buffer, Make the test results more realistic

rw=randwread Test random reading I/O

rw=randwrite Test randomly written I/O

rw=randrw Test a random mix of write and read I/O

rw=read Test sequential reading I/O

rw=write The test sequence is written I/O

rw=rw Test the sequence of mixed write and read I/O

bs=4k A single io The block file size of is 4k

bsrange=512-2048 ditto , Determine the size range of data blocks

size=5g This test file size is 5g, Every time 4k Of io To test

numjobs=30 This test thread is 30

runtime=1000 Test time is 1000 second , If you don't write, you'll always 5g File Division 4k Every time I finish writing

ioengine=psync Express I/O engine , It supports synchronization (sync)、 asynchronous (libaio)、 Memory mapping (mmap)、 The Internet (net) And so on I/O engine

rwmixwrite=30 In mixed read-write mode , Write to account for 30%

group_reporting About displaying results , Aggregate information for each process

Besides

lockmem=1g Use only 1g Memory for testing

zero_buffers use 0 Initialize system buffer

nrfiles=8 Number of files generated per process And about the output result parameters :

io= How many M Of IO

bw= Average IO bandwidth

iops=IOPS

runt= Thread run time

slat= Submission delay

clat= Completion delay

lat= response time

bw= bandwidth

cpu= utilization

IO depths=io queue

IO submit= Single IO Submit to submit IO Count

IO complete=Like the above submit number, but for completions instead.

IO issued=The number of read/write requests issued, and how many of them were short.

IO latencies=IO Distribution of completion delay

io= How many... Were executed in total size Of IO

aggrb=group Total bandwidth

minb= Minimum . Average bandwidth .

maxb= Maximum average bandwidth .

mint=group The shortest running time of a thread in .

maxt=group The maximum running time of a thread in the .

ios= all group All in all IO Count .

merge= All in all IO Consolidated number .

ticks=Number of ticks we kept the disk busy.

io_queue= The total time spent in the queue .

util= Disk utilization We will refer to these fields later

3. File system performance test

The author intends to test from four aspects , Namely : Sequential reading 、 random block read 、 Sequential writing 、 Write at random

The test file is /tmp/filetest.txt

3.1 random block read

The author uses the following commands :

fio -name=randread -direct=1 -iodepth=64 -rw=randread -ioengine=libaio -bs=4k -size=1G -numjobs=1 -runtime=1000 -group_reporting -filename=/tmp/filetest.txtaccording to 2 Parameter interpretation in , We are right. /tmp/filetest.txt Random reading , Each read 4K, Total size is 1G

-direct=1 Skip built-in buffer, Avoid affecting the real test results

-ioengine=libaio Use asynchronous I/O To test

-iodepth=64 Send out at the same time I/O The maximum number of requests is 64

The test results are as follows :

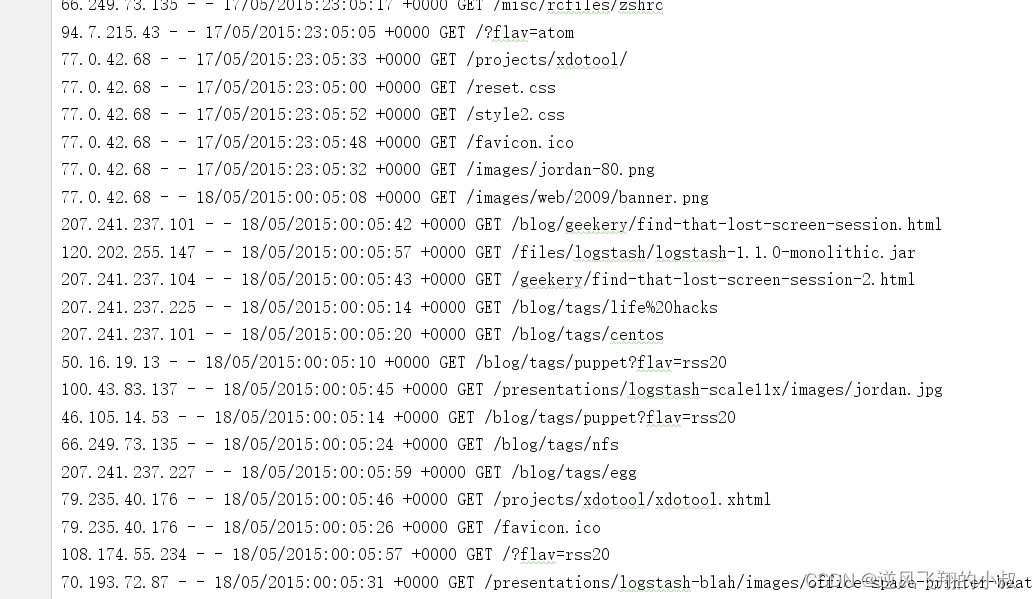

root@7bc18553126f:/tmp# fio -name=randread -direct=1 -iodepth=64 -rw=randread -ioengine=libaio -bs=4k -size=1G -numjobs=1 -runtime=1000 -group_reporting -filename=/tmp/filetest.txt

randread: (g=0): rw=randread, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=libaio, iodepth=64

fio-3.1

Starting 1 process

randread: Laying out IO file (1 file / 1024MiB)

Jobs: 1 (f=1): [r(1)][-.-%][r=372MiB/s,w=0KiB/s][r=95.3k,w=0 IOPS][eta 00m:00s]

randread: (groupid=0, jobs=1): err= 0: pid=836: Sun Apr 3 10:40:41 2022

read: IOPS=92.7k, BW=362MiB/s (380MB/s)(1024MiB/2828msec)

slat (nsec): min=1375, max=900000, avg=9439.98, stdev=18216.37

clat (usec): min=84, max=2436, avg=680.01, stdev=128.14

lat (usec): min=85, max=2439, avg=689.57, stdev=130.14

clat percentiles (usec):

| 1.00th=[ 469], 5.00th=[ 529], 10.00th=[ 562], 20.00th=[ 594],

| 30.00th=[ 619], 40.00th=[ 644], 50.00th=[ 668], 60.00th=[ 693],

| 70.00th=[ 717], 80.00th=[ 742], 90.00th=[ 799], 95.00th=[ 865],

| 99.00th=[ 1156], 99.50th=[ 1385], 99.90th=[ 1844], 99.95th=[ 1991],

| 99.99th=[ 2147]

bw ( KiB/s): min=342171, max=380912, per=99.14%, avg=367594.00, stdev=15369.66, samples=5

iops : min=85542, max=95228, avg=91898.00, stdev=3842.59, samples=5

lat (usec) : 100=0.01%, 250=0.04%, 500=2.25%, 750=78.87%, 1000=17.09%

lat (msec) : 2=1.70%, 4=0.04%

cpu : usr=12.31%, sys=87.23%, ctx=34, majf=0, minf=80

IO depths : 1=0.1%, 2=0.1%, 4=0.1%, 8=0.1%, 16=0.1%, 32=0.1%, >=64=100.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.1%, >=64=0.0%

issued rwt: total=262144,0,0, short=0,0,0, dropped=0,0,0

latency : target=0, window=0, percentile=100.00%, depth=64

Run status group 0 (all jobs):

READ: bw=362MiB/s (380MB/s), 362MiB/s-362MiB/s (380MB/s-380MB/s), io=1024MiB (1074MB), run=2828-2828msecLet's focus on the following outputs :

| throughput | bw | 380M/s |

| IOPS | IOPS | 92.7K |

| response time | lat | min=85, max=2439, avg=689.57, stdev=130.14(usec) |

| Submission delay | slat | min=1375, max=900000, avg=9439.98, stdev=18216.37(nsec) |

| Completion delay | clat | min=84, max=2436, avg=680.01, stdev=128.14(usec) |

In general response time = Submission delay + Completion delay ( Pay attention to the time unit above )

3.2 Sequential reading

Sequential reading is mainly -name=read

root@7bc18553126f:/tmp# fio -name=read -direct=1 -iodepth=64 -rw=read -ioengine=libaio -bs=4k -size=1G -numjobs=1 -runtime=1000 -group_reporting -filename=/tmp/filetest.txt

read: (g=0): rw=read, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=libaio, iodepth=64

fio-3.1

Starting 1 process

Jobs: 1 (f=1)

read: (groupid=0, jobs=1): err= 0: pid=842: Sun Apr 3 10:43:20 2022

read: IOPS=145k, BW=567MiB/s (594MB/s)(1024MiB/1807msec)

slat (nsec): min=1291, max=822125, avg=5795.16, stdev=12100.41

clat (usec): min=100, max=2339, avg=434.78, stdev=122.26

lat (usec): min=104, max=2340, avg=440.68, stdev=123.16

clat percentiles (usec):

| 1.00th=[ 255], 5.00th=[ 302], 10.00th=[ 326], 20.00th=[ 355],

| 30.00th=[ 375], 40.00th=[ 396], 50.00th=[ 416], 60.00th=[ 437],

| 70.00th=[ 461], 80.00th=[ 494], 90.00th=[ 545], 95.00th=[ 611],

| 99.00th=[ 922], 99.50th=[ 1074], 99.90th=[ 1385], 99.95th=[ 1778],

| 99.99th=[ 2212]

bw ( KiB/s): min=553768, max=596884, per=98.92%, avg=574033.33, stdev=21673.95, samples=3

iops : min=138442, max=149221, avg=143508.33, stdev=5418.49, samples=3

lat (usec) : 250=0.81%, 500=80.98%, 750=16.00%, 1000=1.48%

lat (msec) : 2=0.69%, 4=0.04%

cpu : usr=14.62%, sys=83.61%, ctx=130, majf=0, minf=83

IO depths : 1=0.1%, 2=0.1%, 4=0.1%, 8=0.1%, 16=0.1%, 32=0.1%, >=64=100.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.1%, >=64=0.0%

issued rwt: total=262144,0,0, short=0,0,0, dropped=0,0,0

latency : target=0, window=0, percentile=100.00%, depth=64

Run status group 0 (all jobs):

READ: bw=567MiB/s (594MB/s), 567MiB/s-567MiB/s (594MB/s-594MB/s), io=1024MiB (1074MB), run=1807-1807msecFocus on the output :

| throughput | bw | 594M/s |

| IOPS | IOPS | 145K |

| response time | lat | min=104, max=2340, avg=440.68, stdev=123.16(usec) |

| Submission delay | slat | min=1291, max=822125, avg=5795.16, stdev=12100.41(nsec) |

| Completion delay | clat | min=100, max=2339, avg=434.78, stdev=122.26(usec) |

Sequential reading is compared to random reading , Improved performance ( throughput 、IOPS Almost doubled )

3.3 Write at random

root@7bc18553126f:/tmp# fio -name=randwrite -direct=1 -iodepth=64 -rw=randwrite -ioengine=libaio -bs=4k -size=1G -numjobs=1 -runtime=1000 -group_reporting -filename=/tmp/filetest.txt

randwrite: (g=0): rw=randwrite, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=libaio, iodepth=64

fio-3.1

Starting 1 process

Jobs: 1 (f=1): [w(1)][-.-%][r=0KiB/s,w=310MiB/s][r=0,w=79.5k IOPS][eta 00m:00s]

randwrite: (groupid=0, jobs=1): err= 0: pid=839: Sun Apr 3 10:42:20 2022

write: IOPS=82.9k, BW=324MiB/s (339MB/s)(1024MiB/3163msec)

slat (nsec): min=1416, max=561625, avg=10713.06, stdev=23076.82

clat (usec): min=62, max=2612, avg=760.65, stdev=140.25

lat (usec): min=64, max=2614, avg=771.51, stdev=142.09

clat percentiles (usec):

| 1.00th=[ 502], 5.00th=[ 578], 10.00th=[ 619], 20.00th=[ 660],

| 30.00th=[ 693], 40.00th=[ 717], 50.00th=[ 750], 60.00th=[ 775],

| 70.00th=[ 807], 80.00th=[ 840], 90.00th=[ 914], 95.00th=[ 988],

| 99.00th=[ 1254], 99.50th=[ 1385], 99.90th=[ 1713], 99.95th=[ 1942],

| 99.99th=[ 2343]

bw ( KiB/s): min=314504, max=338570, per=99.50%, avg=329858.00, stdev=10161.04, samples=6

iops : min=78626, max=84642, avg=82464.33, stdev=2540.12, samples=6

lat (usec) : 100=0.01%, 250=0.03%, 500=0.94%, 750=50.71%, 1000=43.79%

lat (msec) : 2=4.48%, 4=0.04%

cpu : usr=9.49%, sys=73.66%, ctx=41102, majf=0, minf=19

IO depths : 1=0.1%, 2=0.1%, 4=0.1%, 8=0.1%, 16=0.1%, 32=0.1%, >=64=100.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.1%, >=64=0.0%

issued rwt: total=0,262144,0, short=0,0,0, dropped=0,0,0

latency : target=0, window=0, percentile=100.00%, depth=64

Run status group 0 (all jobs):

WRITE: bw=324MiB/s (339MB/s), 324MiB/s-324MiB/s (339MB/s-339MB/s), io=1024MiB (1074MB), run=3163-3163msecFocus on the output :

| throughput | bw | 339M/s |

| IOPS | IOPS | 82.9K |

| response time | lat | min=64, max=2614, avg=771.51, stdev=142.09(usec) |

| Submission delay | slat | min=1416, max=561625, avg=10713.06, stdev=23076.82(nsec) |

| Completion delay | clat | min=62, max=2612, avg=760.65, stdev=140.25(usec) |

The performance of random reading and writing is basically the same

3.4 Sequential writing

root@7bc18553126f:/tmp# fio -name=write -direct=1 -iodepth=64 -rw=write -ioengine=libaio -bs=4k -size=1G -numjobs=1 -runtime=1000 -group_reporting -filename=/tmp/filetest.txt

write: (g=0): rw=write, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=libaio, iodepth=64

fio-3.1

Starting 1 process

Jobs: 1 (f=1)

write: (groupid=0, jobs=1): err= 0: pid=845: Sun Apr 3 10:44:31 2022

write: IOPS=123k, BW=481MiB/s (504MB/s)(1024MiB/2129msec)

slat (nsec): min=1375, max=1015.5k, avg=6901.34, stdev=13482.94

clat (usec): min=10, max=3391, avg=512.35, stdev=147.78

lat (usec): min=52, max=3392, avg=519.37, stdev=148.93

clat percentiles (usec):

| 1.00th=[ 262], 5.00th=[ 330], 10.00th=[ 363], 20.00th=[ 404],

| 30.00th=[ 437], 40.00th=[ 465], 50.00th=[ 494], 60.00th=[ 523],

| 70.00th=[ 553], 80.00th=[ 594], 90.00th=[ 668], 95.00th=[ 750],

| 99.00th=[ 1057], 99.50th=[ 1139], 99.90th=[ 1450], 99.95th=[ 1680],

| 99.99th=[ 2540]

bw ( KiB/s): min=459872, max=514994, per=99.58%, avg=490430.50, stdev=24701.13, samples=4

iops : min=114968, max=128748, avg=122607.50, stdev=6175.12, samples=4

lat (usec) : 20=0.01%, 50=0.01%, 100=0.01%, 250=0.69%, 500=51.74%

lat (usec) : 750=42.62%, 1000=3.50%

lat (msec) : 2=1.42%, 4=0.02%

cpu : usr=12.22%, sys=63.96%, ctx=31059, majf=0, minf=21

IO depths : 1=0.1%, 2=0.1%, 4=0.1%, 8=0.1%, 16=0.1%, 32=0.1%, >=64=100.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.1%, >=64=0.0%

issued rwt: total=0,262144,0, short=0,0,0, dropped=0,0,0

latency : target=0, window=0, percentile=100.00%, depth=64

Run status group 0 (all jobs):

WRITE: bw=481MiB/s (504MB/s), 481MiB/s-481MiB/s (504MB/s-504MB/s), io=1024MiB (1074MB), run=2129-2129msec

Focus on the output :

| throughput | bw | 504M/s |

| IOPS | IOPS | 123K |

| response time | lat | min=52, max=3392, avg=519.37, stdev=148.93(usec) |

| Submission delay | slat | min=1375, max=1015.5k, avg=6901.34, stdev=13482.94(nsec) |

| Completion delay | clat | min=10, max=3391, avg=512.35, stdev=147.78(usec) |

Relative to random writing , Sequential write throughput 、 Response time and IOPS Have improved (1.5 Double the look )

3.5 Synchronous random read

We 3.1-3.4 All the cases tested in are asynchronous operations , So let's test it with synchronization , The author will test the scenario of synchronous random reading

root@7bc18553126f:/tmp# fio -name=randread -direct=1 -iodepth=64 -rw=randread -ioengine=sync -bs=4k -size=1G -numjobs=1 -runtime=1000 -group_reporting -filename=/tmp/filetest.txt

randread: (g=0): rw=randread, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=sync, iodepth=64

fio-3.1

Starting 1 process

Jobs: 1 (f=1): [r(1)][100.0%][r=89.3MiB/s,w=0KiB/s][r=22.9k,w=0 IOPS][eta 00m:00s]

randread: (groupid=0, jobs=1): err= 0: pid=848: Sun Apr 3 10:48:04 2022

read: IOPS=22.0k, BW=86.0MiB/s (90.2MB/s)(1024MiB/11903msec)

clat (usec): min=28, max=9261, avg=44.04, stdev=35.40

lat (usec): min=28, max=9262, avg=44.13, stdev=35.41

clat percentiles (usec):

| 1.00th=[ 36], 5.00th=[ 37], 10.00th=[ 38], 20.00th=[ 38],

| 30.00th=[ 39], 40.00th=[ 39], 50.00th=[ 43], 60.00th=[ 43],

| 70.00th=[ 44], 80.00th=[ 44], 90.00th=[ 46], 95.00th=[ 61],

| 99.00th=[ 112], 99.50th=[ 127], 99.90th=[ 221], 99.95th=[ 343],

| 99.99th=[ 881]

bw ( KiB/s): min=79304, max=91680, per=99.56%, avg=87701.35, stdev=3585.21, samples=23

iops : min=19826, max=22920, avg=21925.17, stdev=896.39, samples=23

lat (usec) : 50=92.70%, 100=5.92%, 250=1.31%, 500=0.05%, 750=0.01%

lat (usec) : 1000=0.01%

lat (msec) : 2=0.01%, 4=0.01%, 10=0.01%

cpu : usr=6.89%, sys=20.18%, ctx=262035, majf=0, minf=17

IO depths : 1=100.0%, 2=0.0%, 4=0.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

issued rwt: total=262144,0,0, short=0,0,0, dropped=0,0,0

latency : target=0, window=0, percentile=100.00%, depth=64

Run status group 0 (all jobs):

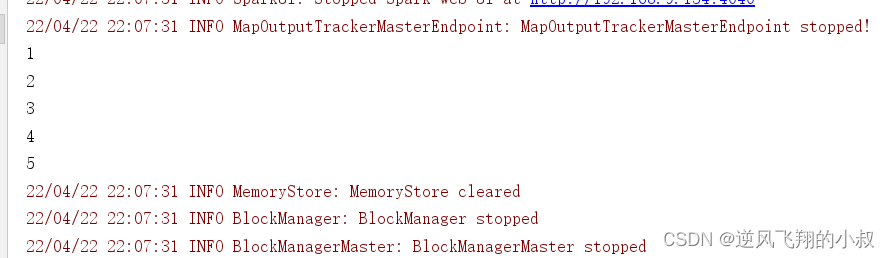

READ: bw=86.0MiB/s (90.2MB/s), 86.0MiB/s-86.0MiB/s (90.2MB/s-90.2MB/s), io=1024MiB (1074MB), run=11903-11903msecFocus on the output :

| Synchronous random read | Asynchronous random read | ||

| throughput | bw | 86M/s | 380M/s |

| IOPS | IOPS | 22K | 92.7K |

| response time | lat | min=28, max=9262, avg=44.13, stdev=35.41(usec) | min=85, max=2439, avg=689.57, stdev=130.14(usec) |

| Submission delay | slat | 0(nsec) | min=1375, max=900000, avg=9439.98, stdev=18216.37(nsec) |

| Completion delay | clat | min=28, max=9261, avg=44.04, stdev=35.40(usec) | min=84, max=2436, avg=680.01, stdev=128.14(usec) |

Synchronous random read , Relative to us 3.1 Asynchronous random read in , The performance is not a little poor

summary :

In the case of the above test results , We can draw two conclusions

1. Sequential reading and writing is better than random reading and writing

2. Asynchronous read-write performance is better than synchronous read-write performance

Reference resources :

IO One of the testing tools fio Detailed explanation - raykuan - Blog Garden

Geek time <<Linux Performance optimization practice >>

版权声明

本文为[Dinosaur brother Wang Zi]所创,转载请带上原文链接,感谢

https://yzsam.com/2022/04/202204231618401228.html

边栏推荐

猜你喜欢

Filter usage of spark operator

Unity shader learning

Countdown 1 day ~ 2022 online conference of cloud disaster tolerance products is about to begin

Best practice of cloud migration in education industry: Haiyun Jiexun uses hypermotion cloud migration products to implement progressive migration for a university in Beijing, with a success rate of 1

Distinct use of spark operator

一文读懂串口及各种电平信号含义

Day (9) of picking up matlab

力扣-746.使用最小花费爬楼梯

GRBL学习(一)

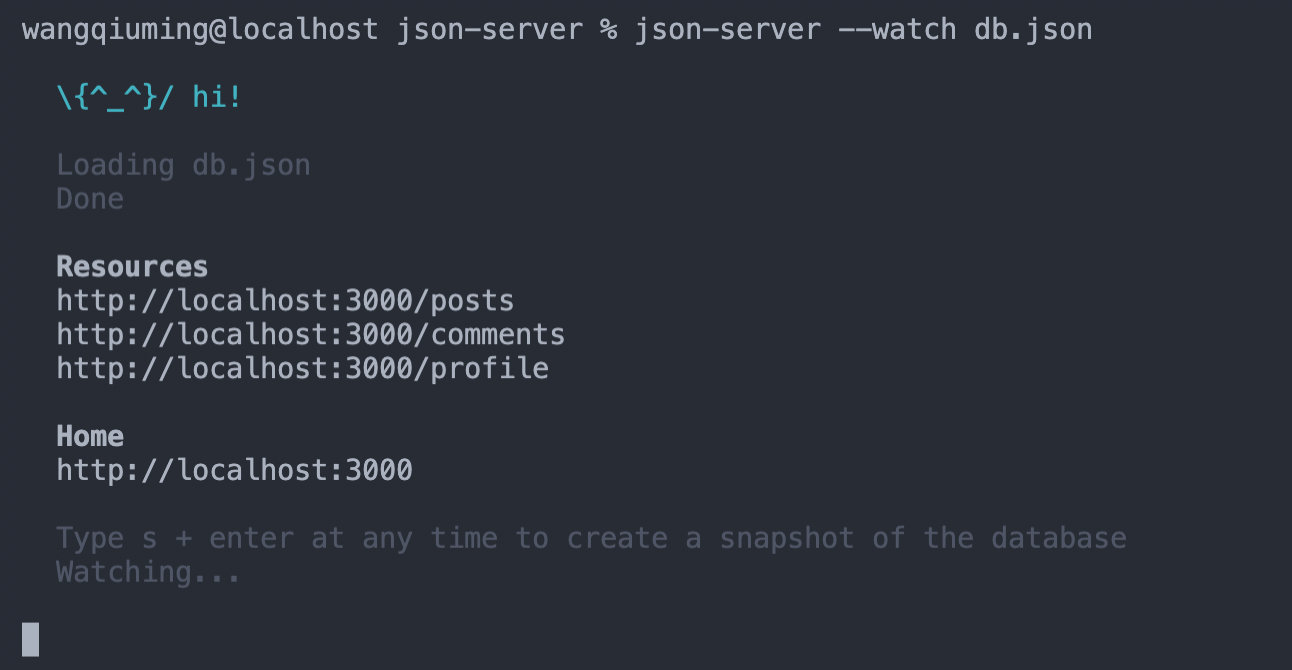

Using JSON server to create server requests locally

随机推荐

How important is the operation and maintenance process? I heard it can save 2 million a year?

各大框架都在使用的Unsafe类,到底有多神奇?

漫画:什么是IaaS、PaaS、SaaS?

Download and install mongodb

Database dbvisualizer Pro reported file error, resulting in data connection failure

The biggest winner is China Telecom. Why do people dislike China Mobile and China Unicom?

5 minutes, turn your excel into an online database, the magic cube net table Excel database

捡起MATLAB的第(6)天

Gartner predicts that the scale of cloud migration will increase significantly; What are the advantages of cloud migration?

js正則判斷域名或者IP的端口路徑是否正確

Day (3) of picking up matlab

What is cloud migration? The four modes of cloud migration are?

Sort by character occurrence frequency 451

04 Lua 运算符

Leetcode-374 guess the size of the number

volatile的含义以及用法

C语言自编字符串处理函数——字符串分割、字符串填充等

Upgrade MySQL 5.1 to 5.610

捡起MATLAB的第(4)天

JSP learning 3