Using the filter operator in Spark

2022-04-23 15:48:00 【Uncle flying against the wind】

Preface

filter can be understood as filtering: intuitively, it selects items from a group of data according to a specified rule. The operator exists in Java and in many other languages, and it makes it easy to pick the data we want out of a collection.

Function signature

def filter(f: T => Boolean): RDD[T]

Function description

filter keeps the elements that satisfy the specified rule and discards those that do not. Filtering does not change the partitioning, but the amount of data left in each partition may become uneven, so in a production environment data skew can occur.
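The point about partitions can be checked with a small sketch. It assumes a local Spark setup like the one used in the cases below; the object name `FilterPartitionSketch`, the input range, and the predicate are illustrative choices, not part of the original article. It prints the partition count and the per-partition element counts before and after filtering.

```scala
import org.apache.spark.{SparkConf, SparkContext}

// Illustrative sketch: names and data are assumptions, not from the article.
object FilterPartitionSketch {
  def main(args: Array[String]): Unit = {
    val conf = new SparkConf().setMaster("local[*]").setAppName("FilterPartitionSketch")
    val sc = new SparkContext(conf)

    // Eight numbers spread over two partitions (numSlices = 2).
    val rdd = sc.makeRDD(1 to 8, 2)
    val filtered = rdd.filter(_ <= 5)

    // filter does not repartition, so the partition count stays the same.
    println(s"partitions before: ${rdd.getNumPartitions}, after: ${filtered.getNumPartitions}")

    // glom() gathers each partition into an array so its size can be inspected.
    println("sizes before: " + rdd.glom().map(_.length).collect().mkString(", "))
    println("sizes after:  " + filtered.glom().map(_.length).collect().mkString(", "))

    sc.stop()
  }
}
```

If the sizes printed after filtering differ noticeably between partitions, that is the uneven distribution described above, on a small scale.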

Case 1: keep only the even numbers in a set of data

import org.apache.spark.rdd.RDD

import org.apache.spark.{SparkConf, SparkContext}

object Filter_Test {

def main(args: Array[String]): Unit = {

val sparkConf = new SparkConf().setMaster("local[*]").setAppName("Operator")

val sc = new SparkContext(sparkConf)

val rdd = sc.makeRDD(List(1,2,3,4,5,6))

val result = rdd.filter(

item => item % 2 ==0

)

result.collect().foreach(println)

sc.stop()

}

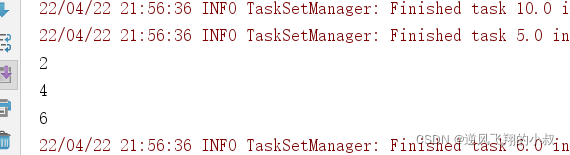

}Run this code , Observe the console output

Case 2 , Filter out from log file 2015 year 5 month 17 The data of

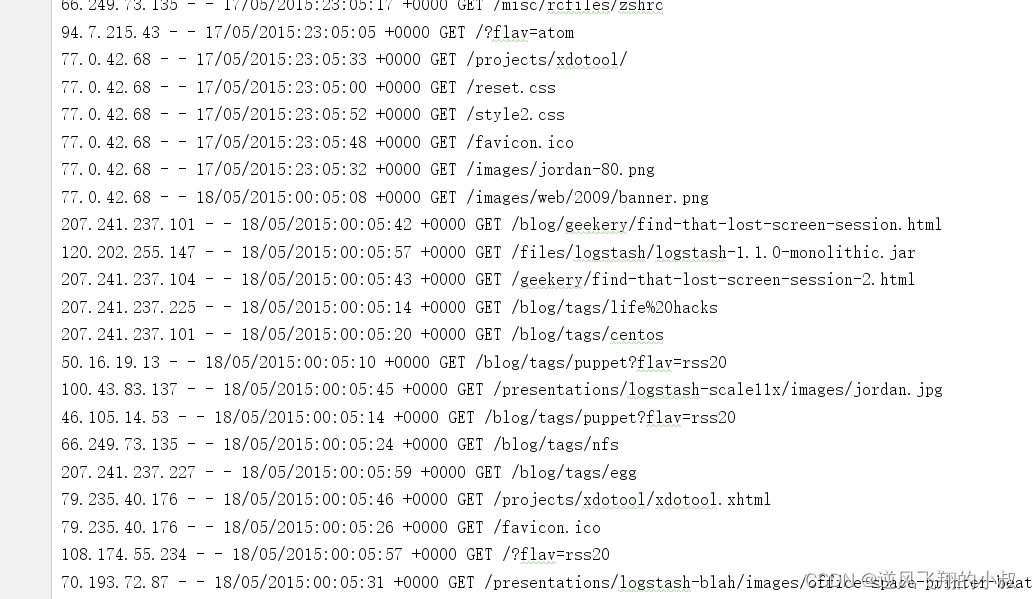

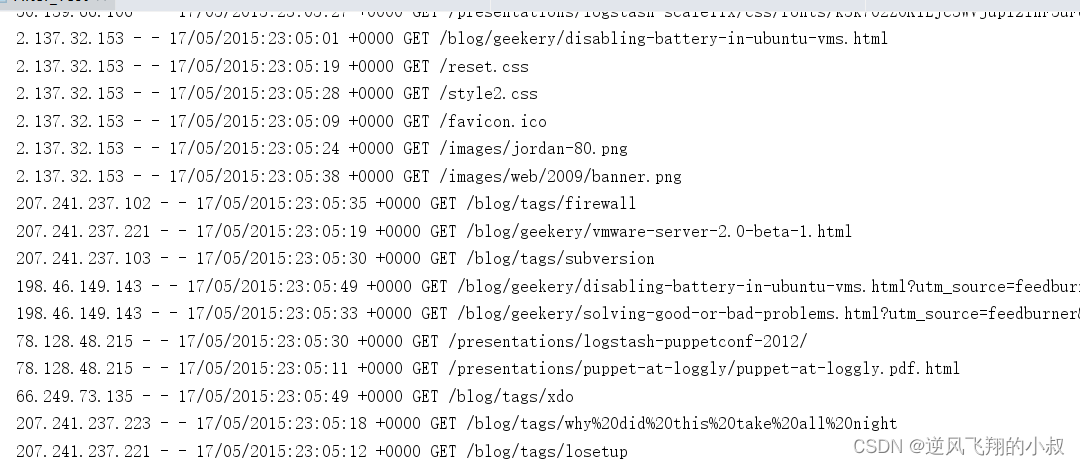

The contents of the log file are as follows :

    import org.apache.spark.rdd.RDD
    import org.apache.spark.{SparkConf, SparkContext}

    object Filter_Test {

      def main(args: Array[String]): Unit = {
        val sparkConf = new SparkConf().setMaster("local[*]").setAppName("Operator")
        val sc = new SparkContext(sparkConf)

        val rdd: RDD[String] = sc.textFile("E:\\code-self\\spi\\datas\\apache.log")

        rdd.filter(
          line => {
            // Split the line on spaces; the timestamp is the fourth field
            val datas = line.split(" ")
            val time = datas(3)
            // Keep the line only if its timestamp falls on the target date
            time.contains("17/05/2015")
          }
        ).collect().foreach(println)

        sc.stop()
      }

    }

Run the above code and observe the console output.
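One thing to watch in Case 2: `datas(3)` throws an exception for any line that has fewer than four space-separated fields. A possible variation, sketched below, moves the rule into a named predicate with a length check. The helper `isFromDate`, the object name `LogDateFilter`, and the sample lines are hypothetical; the samples are made up to match the layout the case assumes (timestamp in the fourth field), and the sketch runs on a plain Scala list so it can be tried without Spark.

```scala
// Illustrative sketch: helper name and sample lines are assumptions, not from the article.
object LogDateFilter {

  // True when the line has a fourth space-separated field that contains the given date.
  def isFromDate(line: String, date: String): Boolean = {
    val fields = line.split(" ")
    fields.length > 3 && fields(3).contains(date)
  }

  def main(args: Array[String]): Unit = {
    // Made-up log lines in the assumed layout, plus one malformed line.
    val lines = List(
      "10.0.0.1 - - 17/05/2015:10:05:03 +0000 GET /index.html",
      "10.0.0.2 - - 18/05/2015:11:00:00 +0000 GET /about.html",
      "malformed line"
    )

    // The same predicate could be passed to an RDD[String]:
    //   rdd.filter(line => isFromDate(line, "17/05/2015"))
    lines.filter(line => isFromDate(line, "17/05/2015")).foreach(println)
  }
}
```

Because the predicate is an ordinary `String => Boolean` function, it matches the `T => Boolean` parameter in the signature of filter and can be tested on its own before being used in a job.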

Copyright notice

This article was written by [Uncle flying against the wind]. Please include a link to the original when reposting. Thank you.

https://yzsam.com/2022/04/202204231544587482.html

边栏推荐

- ICE -- 源码分析

- [self entertainment] construction notes week 2

- Interview questions of a blue team of Beijing Information Protection Network

- Go语言数组,指针,结构体

- 计算某字符出现次数

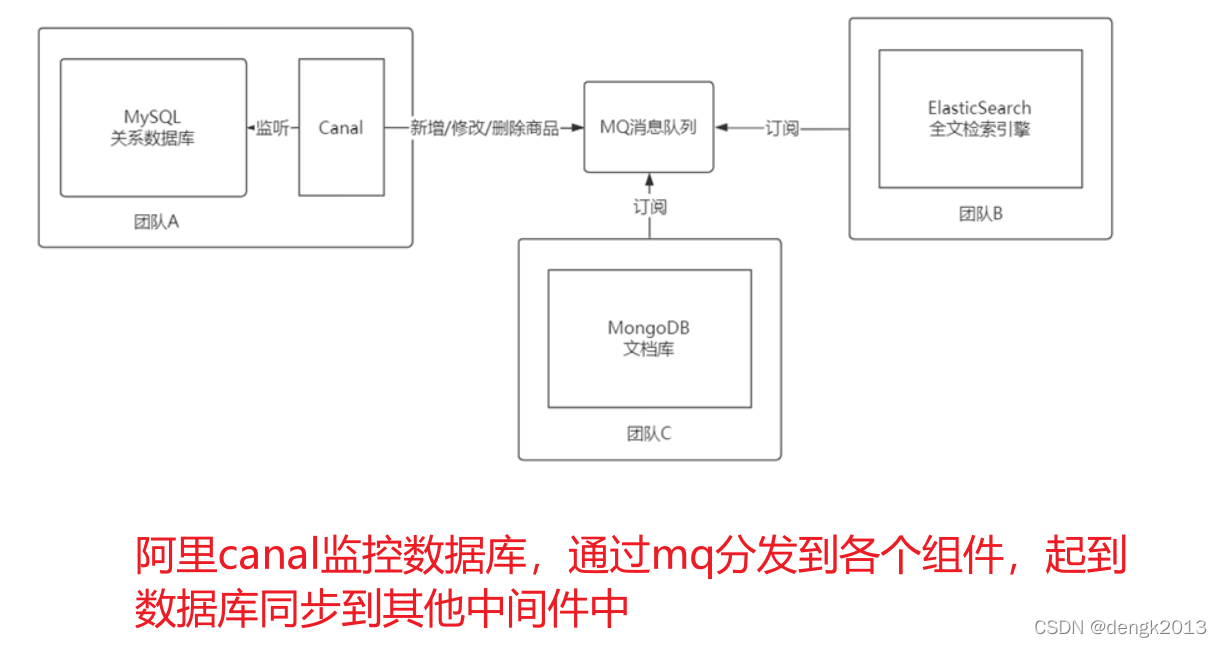

- CAP定理

- 基于 TiDB 的 Apache APISIX 高可用配置中心的最佳实践

- [AI weekly] NVIDIA designs chips with AI; The imperfect transformer needs to overcome the theoretical defect of self attention

- 怎么看基金是不是reits,通过银行购买基金安全吗

- What if the server is poisoned? How does the server prevent virus intrusion?

猜你喜欢

导入地址表分析(根据库文件名求出:导入函数数量、函数序号、函数名称)

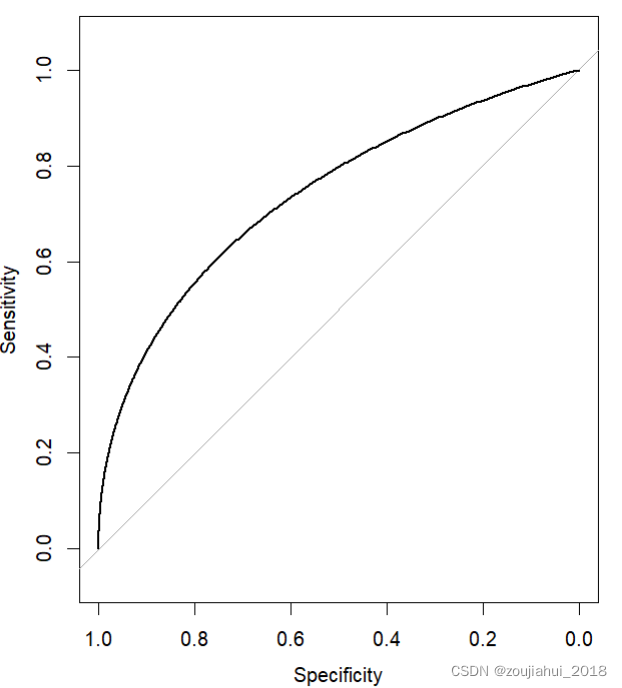

R语言中绘制ROC曲线方法二:pROC包

MySQL Cluster Mode and application scenario

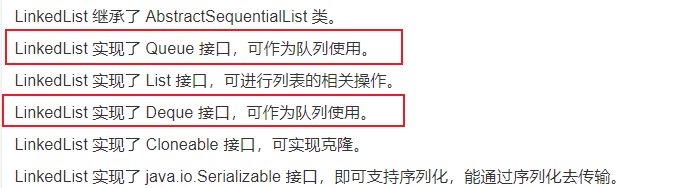

一刷314-剑指 Offer 09. 用两个栈实现队列(e)

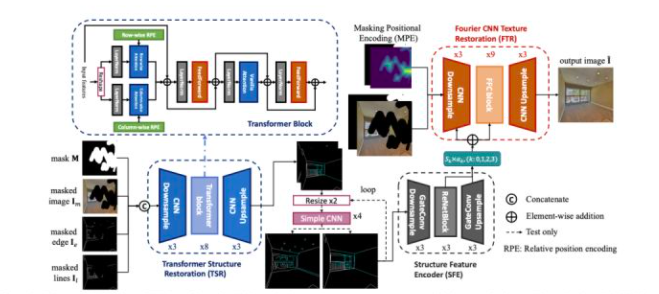

CVPR 2022 quality paper sharing

王启亨谈Web3.0与价值互联网“通证交换”

CAP定理

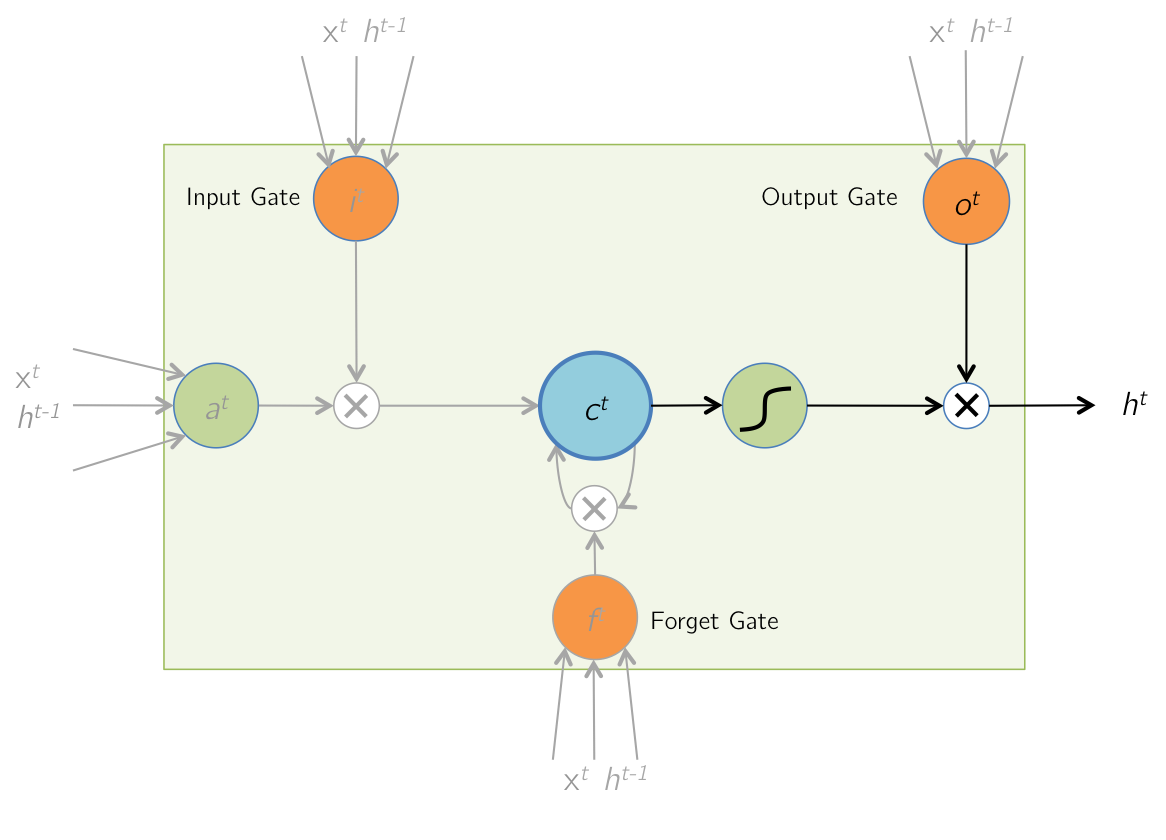

Temporal model: long-term and short-term memory network (LSTM)

What if the server is poisoned? How does the server prevent virus intrusion?

Codejock Suite Pro v20. three

随机推荐

腾讯Offer已拿,这99道算法高频面试题别漏了,80%都败在算法上

pgpool-II 4.3 中文手册 - 入门教程

IronPDF for .NET 2022.4.5455

String sorting

Temporal model: long-term and short-term memory network (LSTM)

负载均衡器

Spark 算子之groupBy使用

Multitimer V2 reconstruction version | an infinitely scalable software timer

MySQL Cluster Mode and application scenario

Basic greedy summary

MetaLife与ESTV建立战略合作伙伴关系并任命其首席执行官Eric Yoon为顾问

实现缺省页面

[open source tool sharing] MCU debugging assistant (oscillograph / modification / log) - linkscope

Control structure (I)

Today's sleep quality record 76 points

Deletes the least frequently occurring character in the string

dlopen/dlsym/dlclose的简单用法

APISIX jwt-auth 插件存在错误响应中泄露信息的风险公告(CVE-2022-29266)

携号转网最大赢家是中国电信,为何人们嫌弃中国移动和中国联通?

基础贪心总结