Yarn online dynamic resource tuning

2022-04-23 13:57:00 【Contestant No. 1 position】

Background

When a production Hadoop cluster runs seriously short of resources, we may add disks, add CPUs, or add nodes. The cluster cannot use this new hardware immediately, though: the Yarn resource allocation has to be modified before the new resources take effect.

Existing environment

Servers: 12 machines; memory 64 GB x 12 = 768 GB; physical CPU cores 16 x 12 = 192; disk 12 TB x 12 = 144 TB

Components: Hadoop-2.7.7, Hive-2.3.4, Presto-220, Dolphinscheduler-1.3.6, Sqoop-1.4.7

Allocation policy

Because we run Hadoop-2.7.7, some defaults are fixed values, for example 8 GB of available memory and 8 available CPU cores, so tuning requires setting more parameters by hand.

Official Yarn parameter reference: https://hadoop.apache.org/docs/r2.7.7/hadoop-yarn/hadoop-yarn-common/yarn-default.xml

Later versions (Hadoop-3.0+) include a mechanism that detects hardware resources automatically. It is enabled with yarn.nodemanager.resource.detect-hardware-capabilities, after which resource allocation is calculated automatically, but it is off by default. The per-node NodeManager settings for available memory (yarn.nodemanager.resource.memory-mb) and CPU cores (yarn.nodemanager.resource.cpu-vcores) also depend on it: both default to -1, which means 8 GB of memory and 8 cores while detection is off. With automatic hardware detection enabled, these settings can be left unconfigured, which simplifies the configuration.

Official Yarn parameter reference (current stable release): https://hadoop.apache.org/docs/stable/hadoop-yarn/hadoop-yarn-common/yarn-default.xml

Another important setting is yarn.nodemanager.vmem-pmem-ratio. It controls how much virtual memory a Container on a NodeManager may use relative to its physical memory allocation. The default is 2.1 times the physical memory.
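As a rough illustration of what the ratio means, the sketch below computes the virtual memory ceiling of a container from its physical allocation. The function name `vmem_limit_mb` is made up for this example, and the 2048 MB container size is only an illustrative input.

```shell
#!/usr/bin/env bash
# Hypothetical helper: virtual memory ceiling (MB) for a container,
# given its physical allocation (MB) and yarn.nodemanager.vmem-pmem-ratio.
vmem_limit_mb() {
  local pmem_mb="$1" ratio="$2"
  # awk does the floating-point multiplication that bash arithmetic cannot.
  awk -v p="$pmem_mb" -v r="$ratio" 'BEGIN { printf "%.1f\n", p * r }'
}

vmem_limit_mb 2048 2.1   # default ratio
vmem_limit_mb 2048 5.2   # ratio configured later in this article
```

With the default ratio a 2048 MB container may use about 4.2 GB of virtual memory; raising the ratio raises that ceiling without changing the physical allocation.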

Modify the configuration

yarn-site.xml

Edit yarn-site.xml: modify a property if it already exists, add it if not. Here we set the available memory of a single node to 30 GB and the available CPU cores to 16.

<property>

<name>yarn.nodemanager.resource.memory-mb</name>

<value>30720</value>

<description>Memory available per node. The default is 8192 MB (8 GB); set to 30 GB here.</description>

</property>

<property>

<name>yarn.scheduler.minimum-allocation-mb</name>

<value>1024</value>

<description>Minimum memory a single task can request. The default is 1024 MB.</description>

</property>

<property>

<name>yarn.scheduler.maximum-allocation-mb</name>

<value>20480</value>

<description>Maximum memory a single task can request. The default is 8192 MB (8 GB); set to 20 GB here.</description>

</property>

<property>

<name>yarn.app.mapreduce.am.resource.mb</name>

<value>2048</value>

<description>The default is 1536. Amount of memory allocated to the AM when MR runs on YARN. The default is usually too small; a larger value such as 2048 or 4096 is recommended.</description>

</property>

<property>

<name>yarn.nodemanager.resource.cpu-vcores</name>

<value>16</value>

<description>The default is 8. Number of virtual cores on each node that YARN may use; usually set to the total number of virtual cores defined for the node.</description>

</property>

<property>

<name>yarn.scheduler.maximum-allocation-vcores</name>

<value>32</value>

<description>The defaults are 1 and 32. The minimum and maximum number of virtual cores the RM can allocate to each container; requests below or above these limits are allocated the minimum or maximum number of cores. The defaults suit most clusters.</description>

</property>

<property>

<name>yarn.scheduler.minimum-allocation-vcores</name>

<value>1</value>

<description>The defaults are 1 and 32. The minimum and maximum number of virtual cores the RM can allocate to each container; requests below or above these limits are allocated the minimum or maximum number of cores. The defaults suit most clusters.</description>

</property>

<property>

<name>yarn.nodemanager.vcores-pcores-ratio</name>

<value>2</value>

<description>Number of virtual CPUs that can be used per physical CPU. The default is 2.</description>

</property>

<property>

<name>yarn.nodemanager.vmem-pmem-ratio</name>

<value>5.2</value>

<description>Virtual memory a container may use relative to its physical memory. The default is 2.1, meaning that for every 1 MB of physical memory used, at most 2.1 MB of virtual memory can be used.</description>

</property>
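A quick consistency check on these numbers can catch typos before the files are distributed. The sketch below is only an illustration: `check_memory_bounds` is a made-up helper, and the cluster totals assume that all 12 servers from the environment section run a NodeManager with identical settings.

```shell
#!/usr/bin/env bash
# Values copied from the yarn-site.xml settings above.
NODE_MEMORY_MB=30720   # yarn.nodemanager.resource.memory-mb
MIN_ALLOC_MB=1024      # yarn.scheduler.minimum-allocation-mb
MAX_ALLOC_MB=20480     # yarn.scheduler.maximum-allocation-mb
NODE_VCORES=16         # yarn.nodemanager.resource.cpu-vcores
NODE_COUNT=12          # assumption: every server runs a NodeManager

# Hypothetical check: succeeds when min <= max <= node memory.
check_memory_bounds() {
  local min="$1" max="$2" node="$3"
  [ "$min" -le "$max" ] && [ "$max" -le "$node" ]
}

if check_memory_bounds "$MIN_ALLOC_MB" "$MAX_ALLOC_MB" "$NODE_MEMORY_MB"; then
  echo "memory bounds OK"
else
  echo "memory bounds inconsistent" >&2
fi

# Total resources YARN could schedule across the cluster.
echo "cluster memory: $(( NODE_MEMORY_MB * NODE_COUNT )) MB"
echo "cluster vcores: $(( NODE_VCORES * NODE_COUNT ))"
```

Under these assumptions YARN would manage 368640 MB (360 GB) of the 768 GB of physical memory, leaving the rest for the operating system and the other components on the same machines.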

mapred-site.xml

Edit mapred-site.xml: modify a property if it already exists, add it if not.

These properties set the memory for a single task. Note that the values must not be greater than yarn.scheduler.maximum-allocation-mb.

<property>

<name>mapreduce.map.memory.mb</name>

<value>2048</value>

<description>The default is 1024. Amount of memory requested from the scheduler for each map task. Individual jobs can also specify it.</description>

</property>

<property>

<name>mapreduce.reduce.memory.mb</name>

<value>2048</value>

<description>The default is 1024. Amount of memory requested from the scheduler for each reduce task. Individual jobs can also specify it.</description>

</property>
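To see what these task sizes imply, the sketch below checks the map task size against the scheduler maximum and estimates how many such containers fit on one node. It counts memory only and ignores CPU limits and the AM container; `containers_per_node` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: containers of a given size that fit on one node
# by memory alone (integer division; CPU and AM overhead are ignored).
containers_per_node() {
  local node_mb="$1" task_mb="$2"
  echo $(( node_mb / task_mb ))
}

NODE_MEMORY_MB=30720   # yarn.nodemanager.resource.memory-mb
MAX_ALLOC_MB=20480     # yarn.scheduler.maximum-allocation-mb
MAP_MEMORY_MB=2048     # mapreduce.map.memory.mb

if [ "$MAP_MEMORY_MB" -gt "$MAX_ALLOC_MB" ]; then
  echo "mapreduce.map.memory.mb exceeds yarn.scheduler.maximum-allocation-mb" >&2
else
  echo "map containers per node: $(containers_per_node "$NODE_MEMORY_MB" "$MAP_MEMORY_MB")"
fi
```

With the values above, each node has room for 15 map containers of 2048 MB.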

Making the changes take effect

Distribution

After modifying the configuration, be sure to distribute it to the other cluster nodes with scp or an xsync distribution tool. Here is an example of distributing to one node:

cd /data/soft/hadoop/hadoop-2.7.7/etc/hadoop

scp -r yarn-site.xml mapred-site.xml data002:`pwd`
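For more than one node, the same copy can be wrapped in a loop. This is a minimal sketch: only data002 appears in this article, so the other host names are placeholders, and the `DRY_RUN` switch is an addition for this example that prints the commands instead of running them.

```shell
#!/usr/bin/env bash
# Assumed values: data003 and data004 are placeholder host names.
CONF_DIR=/data/soft/hadoop/hadoop-2.7.7/etc/hadoop
HOSTS="data002 data003 data004"

# Print (DRY_RUN=1, the default) or run the scp command for each host.
distribute_conf() {
  local host
  for host in $HOSTS; do
    if [ "${DRY_RUN:-1}" = "1" ]; then
      echo "scp $CONF_DIR/yarn-site.xml $CONF_DIR/mapred-site.xml $host:$CONF_DIR/"
    else
      scp "$CONF_DIR/yarn-site.xml" "$CONF_DIR/mapred-site.xml" "$host:$CONF_DIR/"
    fi
  done
}

distribute_conf
```

Running it with `DRY_RUN=0` performs the actual copies, assuming passwordless ssh between the nodes.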

Dynamic restart

To avoid affecting cluster usage, we stop and start only Yarn. Yarn has two main services, NodeManager and ResourceManager, and each has its own stop and start commands:

yarn-daemon.sh stop nodemanager

yarn-daemon.sh start nodemanager

yarn-daemon.sh stop resourcemanager

yarn-daemon.sh start resourcemanager

Run the NodeManager commands above on the cluster nodes one at a time, and run the ResourceManager commands on the node that hosts the ResourceManager. This way the cluster resources can be tuned without taking the whole cluster down.
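The node-by-node restart can be scripted as well. The sketch below assumes passwordless ssh and that yarn-daemon.sh is on the PATH of the remote shell. The host names other than data002, the 30 second pause, and the `DRY_RUN` switch are all assumptions made for this example.

```shell
#!/usr/bin/env bash
# Placeholder host list; only data002 is named in this article.
NM_HOSTS="data002 data003 data004"
# Remote command: stop the NodeManager, then start it again.
RESTART_CMD='yarn-daemon.sh stop nodemanager && yarn-daemon.sh start nodemanager'

# Restart one node at a time so the other nodes keep running containers.
rolling_restart() {
  local host
  for host in $NM_HOSTS; do
    if [ "${DRY_RUN:-1}" = "1" ]; then
      echo "ssh $host '$RESTART_CMD'"
    else
      ssh "$host" "$RESTART_CMD"
      sleep 30   # arbitrary pause before moving on to the next node
    fi
  done
}

rolling_restart
```

Once the nodes are back, `yarn node -list` shows the registered NodeManagers, and `yarn node -status <NodeId>` reports the memory and CPU capacity of a single node, which should now match the new settings.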

Of course, if restarting the nodes one by one is too much trouble, you can also run Yarn's stop and start scripts:

stop-yarn.sh

start-yarn.sh

If there is a standby ResourceManager node, it can be stopped and started separately.

Copyright notice

This article was written by [Contestant No. 1 position]. Please include a link to the original when reposting. Thank you.

https://yzsam.com/2022/04/202204231346425078.html

边栏推荐

- Parameter comparison of several e-book readers

- UNIX final exam summary -- for direct Department

- Special test 05 · double integral [Li Yanfang's whole class]

- SQL learning | complex query

- Ora-600 encountered in Oracle environment [qkacon: fjswrwo]

- Analysis of unused index columns caused by implicit conversion of timestamp

- 【报名】TF54:工程师成长地图与卓越研发组织打造

- 19c environment ora-01035 login error handling

- JMeter pressure test tool

- Two ways to deal with conflicting data in MySQL and PG Libraries

猜你喜欢

Using Baidu Intelligent Cloud face detection interface to achieve photo quality detection

Small case of web login (including verification code login)

Express ② (routage)

Express②(路由)

Dolphin scheduler source package Src tar. GZ decompression problem

![Three characteristics of volatile keyword [data visibility, prohibition of instruction rearrangement and no guarantee of operation atomicity]](/img/ec/b1e99e0f6e7d1ef1ce70eb92ba52c6.png)

Three characteristics of volatile keyword [data visibility, prohibition of instruction rearrangement and no guarantee of operation atomicity]

Solution of discarding evaluate function in surprise Library

Express middleware ③ (custom Middleware)

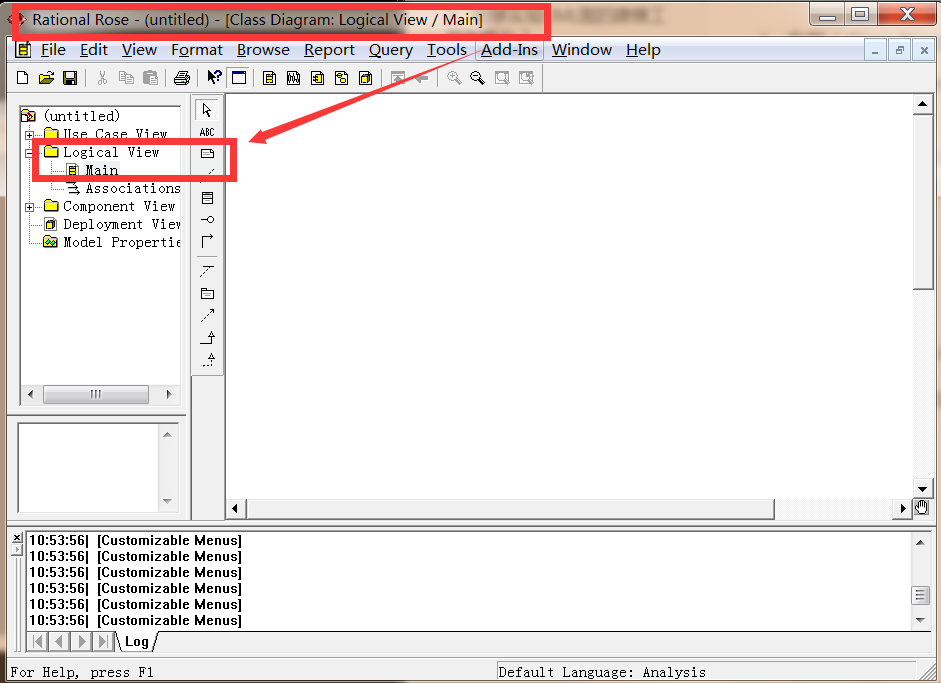

UML Unified Modeling Language

Window analysis function last_ VALUE,FIRST_ VALUE,lag,lead

随机推荐

Information: 2021 / 9 / 29 10:01 - build completed with 1 error and 0 warnings in 11S 30ms error exception handling

Detailed explanation of Oracle tablespace table partition and query method of Oracle table partition

AtomicIntegerArray源码分析与感悟

Business case | how to promote the activity of sports and health app users? It is enough to do these points well

Atcoder beginer contest 248c dice sum (generating function)

SSM project deployed in Alibaba cloud

Search ideas and cases of large amount of Oracle redo log

Generate 32-bit UUID in Oracle

Quartus Prime硬件实验开发(DE2-115板)实验二功能可调综合计时器设计

Force deduction brush question 101 Symmetric binary tree

Android interview theme collection

pycharm Install packages failed

RAC environment alert log error drop transient type: systp2jw0acnaurdgu1sbqmbryw = = troubleshooting

低频量化之明日涨停预测

自动化的艺术

Using Jupiter notebook in virtual environment

JS 力扣刷题 102. 二叉树的层序遍历

UML统一建模语言

解决方案架构师的小锦囊 - 架构图的 5 种类型

ACFs file system creation, expansion, reduction and other configuration steps