
PyTorch 10. Learning rate

2022-04-23 07:28:00 【DCGJ666】


scheduler

scheduler: adjusts the learning rate of the optimizer it is attached to

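```python
class _LRScheduler(object):
    # Simplified sketch of the scheduler base class
    def __init__(self, optimizer, last_epoch=-1):
        self.optimizer = optimizer
        # Record the initial learning rate of every parameter group
        self.base_lrs = [group['lr'] for group in optimizer.param_groups]
        self.last_epoch = last_epoch

    def get_lr(self):
        # Overridden by each subclass; this is the StepLR rule,
        # so self.gamma and self.step_size are defined by that subclass
        return [base_lr * self.gamma ** (self.last_epoch // self.step_size)
                for base_lr in self.base_lrs]

    def step(self, epoch=None):
        if epoch is None:
            epoch = self.last_epoch + 1
        self.last_epoch = epoch
        # Write the newly computed learning rates back into the optimizer
        for param_group, lr in zip(self.optimizer.param_groups, self.get_lr()):
            param_group['lr'] = lr
```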

Main attributes:

optimizer: the associated optimizer

last_epoch: records the epoch count

base_lrs: records the initial learning rate of each parameter group

Main methods:

step(): updates the learning rate for the next epoch

get_lr(): computes the learning rate for the next epoch
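The attributes and methods above come into play in an ordinary training loop. A minimal sketch, assuming PyTorch 1.1 or later, where scheduler.step() is called once per epoch after optimizer.step(); the model, data and hyperparameters are hypothetical placeholders:

```python
import torch
from torch import nn, optim

# Hypothetical toy model and data, only there to drive the optimizer
model = nn.Linear(4, 1)
optimizer = optim.SGD(model.parameters(), lr=0.1)
scheduler = optim.lr_scheduler.StepLR(optimizer, step_size=2, gamma=0.5)

x, y = torch.randn(8, 4), torch.randn(8, 1)
lrs = []
for epoch in range(6):
    # Record the learning rate used during this epoch
    lrs.append(optimizer.param_groups[0]['lr'])
    optimizer.zero_grad()
    loss = nn.functional.mse_loss(model(x), y)
    loss.backward()
    optimizer.step()   # update the weights first
    scheduler.step()   # then advance last_epoch and set the next epoch's lr

print(lrs)
# → [0.1, 0.1, 0.05, 0.05, 0.025, 0.025]
```

Calling scheduler.step() before optimizer.step() makes recent PyTorch versions emit a warning, because the first value of the schedule would be skipped.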

StepLR

lr_scheduler.StepLR(optimizer, step_size, gamma=0.1,last_epoch=-1)

Function: decays the learning rate at fixed intervals

Main parameters:

step_size: number of epochs between two adjustments

gamma: multiplicative decay factor

Update rule: lr = lr * gamma, applied once every step_size epochs
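A small sketch of StepLR, assuming a single dummy parameter stands in for a real model (step_size=3 and gamma=0.1 are arbitrary illustration values). The recorded learning rates follow the closed form base_lr * gamma ** (epoch // step_size):

```python
import torch
from torch import optim

# A single dummy parameter is enough to build an optimizer
optimizer = optim.SGD([torch.nn.Parameter(torch.zeros(1))], lr=0.1)
scheduler = optim.lr_scheduler.StepLR(optimizer, step_size=3, gamma=0.1)

lrs = []
for epoch in range(9):
    lrs.append(optimizer.param_groups[0]['lr'])
    optimizer.step()   # one epoch of training would go here
    scheduler.step()

print([f"{lr:.4f}" for lr in lrs])
# → ['0.1000', '0.1000', '0.1000', '0.0100', '0.0100', '0.0100', '0.0010', '0.0010', '0.0010']
```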

MultiStepLR

lr_scheduler.MultiStepLR(optimizer, milestones, gamma=0.1, last_epoch=-1)

Function: decays the learning rate at user-specified epochs

Main parameters:

milestones: list of epochs at which the learning rate is adjusted, e.g. milestones = [50, 125, 160]

gamma: multiplicative decay factor

Update rule: lr = lr * gamma, applied each time a milestone is reached
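A sketch of MultiStepLR with hypothetical small milestones [2, 5] so the output stays short (the [50, 125, 160] example behaves the same way over more epochs). The closed form is base_lr * gamma ** (number of milestones already reached), which bisect.bisect_right computes:

```python
import bisect
import torch
from torch import optim

milestones = [2, 5]   # hypothetical values chosen for a short run
optimizer = optim.SGD([torch.nn.Parameter(torch.zeros(1))], lr=0.1)
scheduler = optim.lr_scheduler.MultiStepLR(optimizer, milestones=milestones, gamma=0.1)

lrs = []
for epoch in range(7):
    lrs.append(optimizer.param_groups[0]['lr'])
    optimizer.step()   # one epoch of training would go here
    scheduler.step()

# Closed form: base_lr * gamma ** (number of milestones <= epoch)
closed_form = [0.1 * 0.1 ** bisect.bisect_right(milestones, e) for e in range(7)]
print([f"{lr:.4f}" for lr in lrs])
# → ['0.1000', '0.1000', '0.0100', '0.0100', '0.0100', '0.0010', '0.0010']
```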

ExponentialLR

lr_scheduler.ExponentialLR(optimizer, gamma, last_epoch=-1)

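Function: decays the learning rate exponentially

Main parameters:

gamma: base of the exponent (the multiplicative decay factor applied every epoch)

Update rule: lr = lr * gamma at every epoch, so after t epochs lr_t = lr_0 * gamma^t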
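A short sketch of ExponentialLR, assuming a dummy parameter and an arbitrary gamma=0.9; each epoch multiplies the learning rate by gamma, so the values match 0.1 * 0.9 ** t:

```python
import torch
from torch import optim

optimizer = optim.SGD([torch.nn.Parameter(torch.zeros(1))], lr=0.1)
scheduler = optim.lr_scheduler.ExponentialLR(optimizer, gamma=0.9)

lrs = []
for epoch in range(5):
    lrs.append(optimizer.param_groups[0]['lr'])
    optimizer.step()   # one epoch of training would go here
    scheduler.step()

print([f"{lr:.5f}" for lr in lrs])
# → ['0.10000', '0.09000', '0.08100', '0.07290', '0.06561']
```

Because the decay is applied every epoch, gamma is usually chosen close to 1; otherwise the learning rate collapses within a few epochs.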

CosineAnnealingLR

lr_scheduler.CosineAnnealingLR(optimizer, T_max, eta_min=0, last_epoch=-1)

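Function: adjusts the learning rate along a cosine curve

Main parameters:

T_max: maximum number of iterations, i.e. half a cosine period; the learning rate falls from its initial value to eta_min over T_max epochs

eta_min: lower bound of the learning rate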

Update rule (lr_max is the initial learning rate, lr_min is eta_min, and T_cur is the number of epochs elapsed):

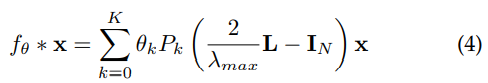

$$lr_t = lr_{min} + \frac{1}{2}\left(lr_{max} - lr_{min}\right)\left(1 + \cos\left(\frac{T_{cur}}{T_{max}}\pi\right)\right)$$
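To check the formula against the scheduler, the sketch below evaluates the closed form by hand and records what CosineAnnealingLR produces over one descent (T_cur = 0 … T_max). The values base_lr=0.1, eta_min=0.001 and T_max=10 are arbitrary assumptions; PyTorch computes the schedule recursively, so the two agree only up to floating-point rounding:

```python
import math
import torch
from torch import optim

base_lr, eta_min, T_max = 0.1, 0.001, 10   # illustration values

def cosine_lr(t_cur):
    # lr_min + 1/2 (lr_max - lr_min) (1 + cos(T_cur / T_max * pi))
    return eta_min + 0.5 * (base_lr - eta_min) * (1 + math.cos(math.pi * t_cur / T_max))

optimizer = optim.SGD([torch.nn.Parameter(torch.zeros(1))], lr=base_lr)
scheduler = optim.lr_scheduler.CosineAnnealingLR(optimizer, T_max=T_max, eta_min=eta_min)

lrs = []
for t in range(T_max + 1):
    lr = optimizer.param_groups[0]['lr']
    lrs.append(lr)
    print(f"T_cur={t:2d}  scheduler={lr:.5f}  formula={cosine_lr(t):.5f}")
    optimizer.step()   # one epoch of training would go here
    scheduler.step()
```

If stepping continues past T_max, the learning rate climbs back toward the initial value, since the cosine has a full period of 2 * T_max.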

ReduceLROnPlateau

lr_scheduler.ReduceLROnPlateau(optimizer, mode='min', factor=0.1, patience=10, verbose=False, threshold=0.0001, threshold_mode='rel',cooldown=0, min_lr=0, eps=1e-08)

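Function: monitors a metric (e.g. the validation loss) and reduces the learning rate once the metric stops improving

Main parameters:

mode: 'min' or 'max'; in 'min' mode the learning rate is reduced when the metric stops decreasing, in 'max' mode when it stops increasing

factor: multiplicative factor applied on each reduction (new_lr = lr * factor)

patience: number of epochs without improvement to tolerate; the learning rate is reduced only after more than patience such epochs in a row

cooldown: number of epochs to wait after a reduction before normal monitoring resumes

verbose: whether to print a message on each update

min_lr: lower bound of the learning rate

eps: minimal decay applied to the learning rate; if the difference between the old and new learning rate is smaller than eps, the update is skipped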
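Unlike the other schedulers, ReduceLROnPlateau needs the monitored value passed to step(). A minimal sketch, assuming a hypothetical sequence of validation losses that improves twice and then plateaus; verbose is left out because it is deprecated in recent PyTorch releases:

```python
import torch
from torch import optim

optimizer = optim.SGD([torch.nn.Parameter(torch.zeros(1))], lr=0.1)
scheduler = optim.lr_scheduler.ReduceLROnPlateau(
    optimizer, mode='min', factor=0.5, patience=2)

# Hypothetical validation losses: improve twice, then stay flat
val_losses = [1.0, 0.9, 0.9, 0.9, 0.9, 0.9, 0.9, 0.9]
lrs = []
for loss in val_losses:
    optimizer.step()        # one epoch of training would go here
    scheduler.step(loss)    # pass the monitored metric
    lrs.append(optimizer.param_groups[0]['lr'])

print(lrs)
# → [0.1, 0.1, 0.1, 0.1, 0.05, 0.05, 0.05, 0.025]
```

With patience=2, the first two non-improving epochs are tolerated and the learning rate is halved on the third; the bad-epoch counter then resets, so the next halving happens three epochs later.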

Reference:

https://zhuanlan.zhihu.com/p/146865009

Copyright notice

This article was written by [DCGJ666]; please include a link to the original when reposting. Thanks.

https://yzsam.com/2022/04/202204230611343971.html

边栏推荐

- 《Multi-modal Visual Tracking:Review and Experimental Comparison》翻译

- CMSIS CM3源码注解

- Machine learning II: logistic regression classification based on Iris data set

- PyTorch 18. torch.backends.cudnn

- AUTOSAR从入门到精通100讲(八十四)-UDS之时间参数总结篇

- PyTorch 20. PyTorch技巧(持续更新)

- 机器学习——PCA与LDA

- Intuitive understanding of torch nn. Unfold

- 主流 RTOS 评估

- STM32多路测温无线传输报警系统设计(工业定时测温/机舱温度定时检测等)

猜你喜欢

【51单片机交通灯仿真】

Raspberry Pie: two color LED lamp experiment

【点云系列】Pointfilter: Point Cloud Filtering via Encoder-Decoder Modeling

Machine learning notes 1: learning ideas

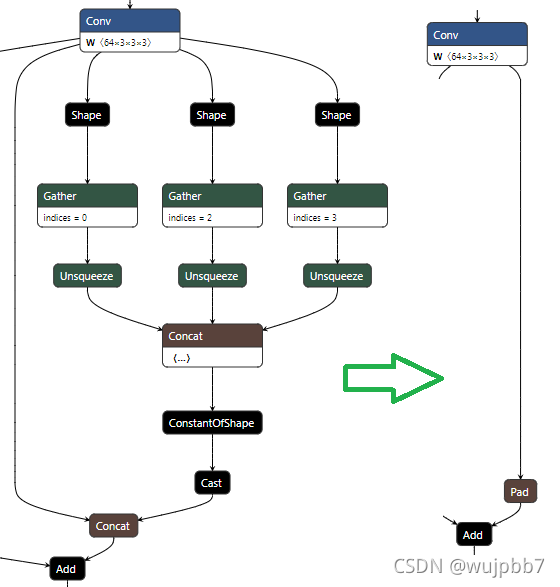

F. The wonderful use of pad

【點雲系列】SG-GAN: Adversarial Self-Attention GCN for Point Cloud Topological Parts Generation

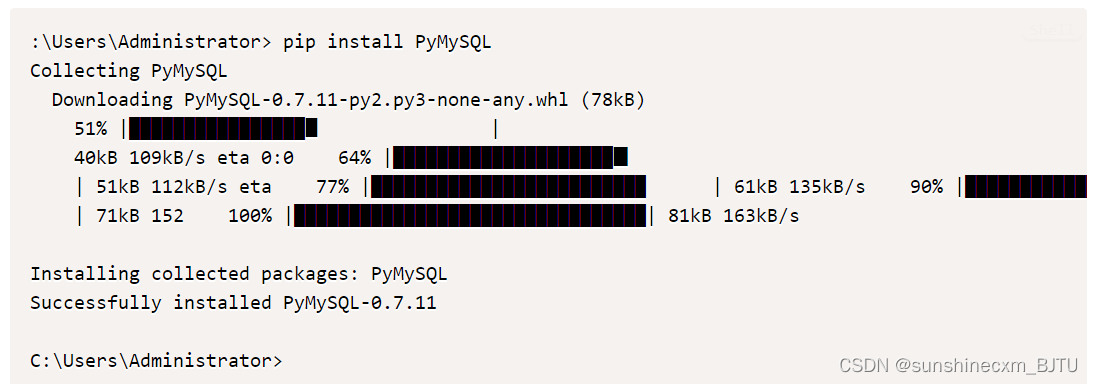

Pymysql connection database

Intuitive understanding of torch nn. Unfold

【期刊会议系列】IEEE系列模板下载指南

【无标题】PID控制TT编码器电机

随机推荐

Write a wechat double open gadget to your girlfriend

Machine learning notes 1: learning ideas

重大安保事件应急通信系统解决方案

【點雲系列】SG-GAN: Adversarial Self-Attention GCN for Point Cloud Topological Parts Generation

[point cloud series] pnp-3d: a plug and play for 3D point clouds

Device Tree 详解

Résolution du système

Systrace 解析

多机多卡训练时的错误

主流 RTOS 评估

《Multi-modal Visual Tracking:Review and Experimental Comparison》翻译

GIS实战应用案例100篇(五十三)-制作三维影像图用以作为城市空间格局分析的底图

Systrace 解析

By onnx checker. check_ Common errors detected by model

EMMC/SD学习小记

imx6ull-qemu 裸机教程1:GPIO,IOMUX,I2C

rearrange 和 einsum 真的优雅吗

PyTorch 20. PyTorch技巧(持续更新)

Wechat applet uses wxml2canvas plug-in to generate some problem records of pictures

【点云系列】FoldingNet:Point Cloud Auto encoder via Deep Grid Deformation