
Hands-on File System Read/Write Performance Testing

2022-04-23 16:18:00 【恐龙弟旺仔】

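Preface:

I originally planned to test the read/write performance of the raw block device directly (random and sequential), but I could not get that environment working, so I settled for the next best thing: testing file system read/write performance (random and sequential).

For the difference between the two, see my other post, 磁盘性能指标监控实战 (Hands-on Disk Performance Metrics Monitoring).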

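1. Environment Setup

I installed a Docker container running Ubuntu 18.04, and all commands below were run inside that container.

Installing fio:

apt-get update && apt-get install -y fio

2. The fio Command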

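fio is a mainstream third-party I/O benchmarking tool. It provides a large number of configurable options and can measure the I/O performance of raw disks and file systems under a wide range of workloads.

Run man fio for the full details. Some of the supported parameters: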

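filename=/dev/emcpowerb: the test target, which can be a file on a file system or a raw device, e.g. -filename=/dev/sda2 or -filename=/dev/sdb

direct=1: bypass the operating system's page cache during the test, so the results reflect the storage itself more accurately

rw=randread: random read I/O

rw=randwrite: random write I/O

rw=randrw: mixed random read and write I/O

rw=read: sequential read I/O

rw=write: sequential write I/O

rw=rw: mixed sequential read and write I/O

bs=4k: each I/O uses a 4 KiB block

bsrange=512-2048: like bs, but specifies a range of block sizes (here 512 to 2048 bytes)

size=5g: the test file is 5 GiB in total, processed in 4 KiB I/Os

numjobs=30: run 30 jobs in parallel (by default fio forks one process per job)

runtime=1000: run for at most 1000 seconds; without it, the job runs until the whole 5 GiB file has been processed in 4 KiB I/Os

ioengine=psync: the I/O engine; fio supports many engines, including synchronous (sync, psync), asynchronous (libaio), memory-mapped (mmap), and network (net) I/O

rwmixwrite=30: in mixed read/write modes, writes account for 30% of the I/O

group_reporting: controls result reporting; aggregates the statistics of all jobs in the group into a single report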

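In addition:

lockmem=1g: pin 1 GiB of memory with mlock(2), which can be used to simulate a machine with less available memory

zero_buffers: fill the I/O buffers with zeros (by default fio uses random data)

nrfiles=8: number of files each job creates

As for the output fields: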

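io: amount of I/O performed (e.g. in MiB)

bw: average I/O bandwidth

iops: I/O operations per second

runt: job run time

slat: submission latency

clat: completion latency

lat: total latency (response time)

bw (the statistics line): bandwidth statistics (min, max, avg) across samples

cpu: CPU utilization

IO depths: distribution of I/O queue depths

IO submit: how many I/Os were submitted in a single submit call

IO complete: like submit above, but for completions

IO issued: the number of read/write requests issued, and how many of them were short

IO latencies: distribution of I/O completion latencies

io: total amount of I/O performed by the group

aggrb: aggregate bandwidth of the group

minb: minimum average bandwidth among the jobs

maxb: maximum average bandwidth among the jobs

mint: shortest run time of any job in the group

maxt: longest run time of any job in the group

ios: number of I/Os performed by all groups

merge: number of I/O merges performed

ticks: number of ticks the disk was kept busy

io_queue: total time spent in the disk queue

util: disk utilization

Some of these names (runt, aggrb, minb, maxb, mint, maxt) come from older fio releases; the fio 3.1 output below reports the run time as run= and the group bandwidth as bw= in the "Run status group" line. We will refer to these fields in the tests below.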
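Instead of reading these fields off the text report, fio can print its results as JSON with --output-format=json. The sketch below pulls the headline metrics out of such a report in Python. It assumes the fio 3.x JSON layout (a jobs list whose read/write objects carry iops, bw in KiB/s, total_ios, and slat_ns/clat_ns/lat_ns objects with a mean); summarize and summarize_job are hypothetical helper names, and the key names are worth checking against the JSON your fio version actually emits.

```python
import json


def summarize_job(job: dict, direction: str) -> dict:
    """Return the headline metrics for one direction ("read" or "write") of a fio job.

    Assumes fio 3.x JSON: bw is in KiB/s and latencies are in nanoseconds.
    """
    d = job[direction]
    return {
        "iops": d["iops"],
        "bw_mib_s": d["bw"] / 1024,               # KiB/s -> MiB/s
        "slat_us": d["slat_ns"]["mean"] / 1000,   # ns -> us
        "clat_us": d["clat_ns"]["mean"] / 1000,
        "lat_us": d["lat_ns"]["mean"] / 1000,
    }


def summarize(fio_json_text: str) -> list:
    """Summarize every job in a report produced by fio --output-format=json."""
    report = json.loads(fio_json_text)
    rows = []
    for job in report["jobs"]:
        for direction in ("read", "write"):
            # Skip the direction the job never exercised (e.g. write in a read test).
            if job[direction]["total_ios"] > 0:
                rows.append((job["jobname"], direction, summarize_job(job, direction)))
    return rows
```

For example, append --output-format=json --output=result.json to one of the commands in section 3, then call summarize(open("result.json").read()).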

3. File System Performance Tests

I tested four scenarios: sequential read, random read, sequential write, and random write.

The test file is /tmp/filetest.txt.
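The four tests share every option except -rw (plus the -name label). A minimal Python sketch, assuming the exact options used in the commands of this section, that builds the four command lines and, only when run=True and fio is on PATH, executes them in turn; fio_command and run_all are hypothetical names, not part of fio.

```python
import shutil
import subprocess

# Options shared by every test in this section (copied from the commands below).
COMMON = ["-direct=1", "-iodepth=64", "-ioengine=libaio", "-bs=4k",
          "-size=1G", "-numjobs=1", "-runtime=1000", "-group_reporting",
          "-filename=/tmp/filetest.txt"]

# Access patterns in the order listed above: seq read, random read, seq write, random write.
MODES = ["read", "randread", "write", "randwrite"]


def fio_command(mode: str) -> list:
    """Build the fio argv for one access pattern; -name is only a job label."""
    return ["fio", f"-name={mode}", f"-rw={mode}"] + COMMON


def run_all(run: bool = False) -> list:
    """Return the four commands, executing them only if asked and fio is installed."""
    commands = [fio_command(m) for m in MODES]
    if run and shutil.which("fio") is not None:
        for cmd in commands:
            subprocess.run(cmd, check=True)  # raises if fio exits with an error
    return commands
```

Calling run_all() with no arguments only returns the commands, which is handy for reviewing them before touching the disk.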

3.1 Random Read

The command I used:

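fio -name=randread -direct=1 -iodepth=64 -rw=randread -ioengine=libaio -bs=4k -size=1G -numjobs=1 -runtime=1000 -group_reporting -filename=/tmp/filetest.txt

Following the parameter descriptions in section 2, this performs random reads on /tmp/filetest.txt, 4 KiB per read, 1 GiB in total. The options that matter most here:

-direct=1: bypass the page cache so it does not distort the results

-ioengine=libaio: use Linux native asynchronous I/O (libaio)

-iodepth=64: keep at most 64 I/O requests in flight at the same time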
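Because -runtime=1000 is only an upper bound, the job ends once the full 1 GiB has been read, so the number of requests is fixed by size / bs. A quick Python check of that arithmetic, assuming fio's default binary units (1G = 1024^3 bytes, which the 1024MiB in the output confirms); dividing by the 92.7k IOPS reported below also recovers the roughly 2.8-second run time.

```python
# Figures from the 3.1 command: -size=1G and -bs=4k, in fio's default binary units.
SIZE_BYTES = 1 * 1024**3   # 1 GiB
BLOCK_BYTES = 4 * 1024     # 4 KiB

total_ios = SIZE_BYTES // BLOCK_BYTES   # number of 4 KiB reads needed to cover 1 GiB
print(total_ios)  # 262144, matching "issued rwt: total=262144" in the output

# Conversely, at the measured IOPS the job needs only a few seconds, far below runtime=1000.
measured_iops = 92.7e3
print(f"{total_ios / measured_iops:.3f} s")  # 2.828 s, close to run=2828msec
```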

The results:

root@7bc18553126f:/tmp# fio -name=randread -direct=1 -iodepth=64 -rw=randread -ioengine=libaio -bs=4k -size=1G -numjobs=1 -runtime=1000 -group_reporting -filename=/tmp/filetest.txt

randread: (g=0): rw=randread, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=libaio, iodepth=64

fio-3.1

Starting 1 process

randread: Laying out IO file (1 file / 1024MiB)

Jobs: 1 (f=1): [r(1)][-.-%][r=372MiB/s,w=0KiB/s][r=95.3k,w=0 IOPS][eta 00m:00s]

randread: (groupid=0, jobs=1): err= 0: pid=836: Sun Apr 3 10:40:41 2022

read: IOPS=92.7k, BW=362MiB/s (380MB/s)(1024MiB/2828msec)

slat (nsec): min=1375, max=900000, avg=9439.98, stdev=18216.37

clat (usec): min=84, max=2436, avg=680.01, stdev=128.14

lat (usec): min=85, max=2439, avg=689.57, stdev=130.14

clat percentiles (usec):

| 1.00th=[ 469], 5.00th=[ 529], 10.00th=[ 562], 20.00th=[ 594],

| 30.00th=[ 619], 40.00th=[ 644], 50.00th=[ 668], 60.00th=[ 693],

| 70.00th=[ 717], 80.00th=[ 742], 90.00th=[ 799], 95.00th=[ 865],

| 99.00th=[ 1156], 99.50th=[ 1385], 99.90th=[ 1844], 99.95th=[ 1991],

| 99.99th=[ 2147]

bw ( KiB/s): min=342171, max=380912, per=99.14%, avg=367594.00, stdev=15369.66, samples=5

iops : min=85542, max=95228, avg=91898.00, stdev=3842.59, samples=5

lat (usec) : 100=0.01%, 250=0.04%, 500=2.25%, 750=78.87%, 1000=17.09%

lat (msec) : 2=1.70%, 4=0.04%

cpu : usr=12.31%, sys=87.23%, ctx=34, majf=0, minf=80

IO depths : 1=0.1%, 2=0.1%, 4=0.1%, 8=0.1%, 16=0.1%, 32=0.1%, >=64=100.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.1%, >=64=0.0%

issued rwt: total=262144,0,0, short=0,0,0, dropped=0,0,0

latency : target=0, window=0, percentile=100.00%, depth=64

Run status group 0 (all jobs):

READ: bw=362MiB/s (380MB/s), 362MiB/s-362MiB/s (380MB/s-380MB/s), io=1024MiB (1074MB), run=2828-2828msec

The key figures to focus on:

| Metric | fio field | Value |
|---|---|---|
| Throughput | bw | 362 MiB/s (380 MB/s) |
| IOPS | IOPS | 92.7K |
| Total latency | lat | min=85, max=2439, avg=689.57, stdev=130.14 (usec) |
| Submission latency | slat | min=1375, max=900000, avg=9439.98, stdev=18216.37 (nsec) |
| Completion latency | clat | min=84, max=2436, avg=680.01, stdev=128.14 (usec) |

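In general, total latency = submission latency + completion latency (lat = slat + clat). Watch the units above: slat is in nanoseconds, while lat and clat are in microseconds.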
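The unit mismatch is easy to trip over, so here is the 3.1 check spelled out in Python, using the averages from the table above. It is only arithmetic on the reported numbers, not something fio itself computes.

```python
# Average latencies from the 3.1 random-read run (note the different units).
slat_avg_ns = 9439.98   # submission latency, nanoseconds
clat_avg_us = 680.01    # completion latency, microseconds
lat_avg_us = 689.57     # total latency reported by fio, microseconds

# Convert slat to microseconds first; adding ns to us directly is the usual mistake.
estimated_lat_us = slat_avg_ns / 1000 + clat_avg_us
print(f"slat + clat = {estimated_lat_us:.2f} us; fio lat = {lat_avg_us:.2f} us")
```

This prints 689.45 us against fio's 689.57 us, so the two agree to within about 0.1 us.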

3.2 Sequential Read

For sequential read, the only real change is -rw=read (-name=read is just the job's label):

root@7bc18553126f:/tmp# fio -name=read -direct=1 -iodepth=64 -rw=read -ioengine=libaio -bs=4k -size=1G -numjobs=1 -runtime=1000 -group_reporting -filename=/tmp/filetest.txt

read: (g=0): rw=read, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=libaio, iodepth=64

fio-3.1

Starting 1 process

Jobs: 1 (f=1)

read: (groupid=0, jobs=1): err= 0: pid=842: Sun Apr 3 10:43:20 2022

read: IOPS=145k, BW=567MiB/s (594MB/s)(1024MiB/1807msec)

slat (nsec): min=1291, max=822125, avg=5795.16, stdev=12100.41

clat (usec): min=100, max=2339, avg=434.78, stdev=122.26

lat (usec): min=104, max=2340, avg=440.68, stdev=123.16

clat percentiles (usec):

| 1.00th=[ 255], 5.00th=[ 302], 10.00th=[ 326], 20.00th=[ 355],

| 30.00th=[ 375], 40.00th=[ 396], 50.00th=[ 416], 60.00th=[ 437],

| 70.00th=[ 461], 80.00th=[ 494], 90.00th=[ 545], 95.00th=[ 611],

| 99.00th=[ 922], 99.50th=[ 1074], 99.90th=[ 1385], 99.95th=[ 1778],

| 99.99th=[ 2212]

bw ( KiB/s): min=553768, max=596884, per=98.92%, avg=574033.33, stdev=21673.95, samples=3

iops : min=138442, max=149221, avg=143508.33, stdev=5418.49, samples=3

lat (usec) : 250=0.81%, 500=80.98%, 750=16.00%, 1000=1.48%

lat (msec) : 2=0.69%, 4=0.04%

cpu : usr=14.62%, sys=83.61%, ctx=130, majf=0, minf=83

IO depths : 1=0.1%, 2=0.1%, 4=0.1%, 8=0.1%, 16=0.1%, 32=0.1%, >=64=100.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.1%, >=64=0.0%

issued rwt: total=262144,0,0, short=0,0,0, dropped=0,0,0

latency : target=0, window=0, percentile=100.00%, depth=64

Run status group 0 (all jobs):

READ: bw=567MiB/s (594MB/s), 567MiB/s-567MiB/s (594MB/s-594MB/s), io=1024MiB (1074MB), run=1807-1807msec

The key figures to focus on:

| Metric | fio field | Value |
|---|---|---|
| Throughput | bw | 567 MiB/s (594 MB/s) |
| IOPS | IOPS | 145K |
| Total latency | lat | min=104, max=2340, avg=440.68, stdev=123.16 (usec) |
| Submission latency | slat | min=1291, max=822125, avg=5795.16, stdev=12100.41 (nsec) |
| Completion latency | clat | min=100, max=2339, avg=434.78, stdev=122.26 (usec) |

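Compared with random read, sequential read performs noticeably better: throughput and IOPS are both about 1.56 times higher (594 MB/s vs 380 MB/s, 145K vs 92.7K IOPS), and average latency drops from about 690 us to about 441 us.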
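At a fixed block size, bandwidth is simply IOPS times block size, which makes it easy to sanity-check the two tables and to see that the sequential-read gain is the same in both metrics. A small Python sketch using the IOPS figures reported in 3.1 and 3.2; bandwidth_mib_s is a hypothetical helper, and 145k is fio's rounded value, so the result lands slightly below the reported 567 MiB/s.

```python
BLOCK_BYTES = 4096  # -bs=4k


def bandwidth_mib_s(iops: float, block_bytes: int = BLOCK_BYTES) -> float:
    """Bandwidth implied by an IOPS figure at a fixed block size, in MiB/s."""
    return iops * block_bytes / 1024**2


rand_read_iops = 92.7e3   # 3.1, fio reported BW=362MiB/s
seq_read_iops = 145e3     # 3.2, fio reported BW=567MiB/s

print(f"random read:     {bandwidth_mib_s(rand_read_iops):.1f} MiB/s")
print(f"sequential read: {bandwidth_mib_s(seq_read_iops):.1f} MiB/s")
print(f"IOPS ratio:      {seq_read_iops / rand_read_iops:.2f}x")
```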

3.3 Random Write

root@7bc18553126f:/tmp# fio -name=randwrite -direct=1 -iodepth=64 -rw=randwrite -ioengine=libaio -bs=4k -size=1G -numjobs=1 -runtime=1000 -group_reporting -filename=/tmp/filetest.txt

randwrite: (g=0): rw=randwrite, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=libaio, iodepth=64

fio-3.1

Starting 1 process

Jobs: 1 (f=1): [w(1)][-.-%][r=0KiB/s,w=310MiB/s][r=0,w=79.5k IOPS][eta 00m:00s]

randwrite: (groupid=0, jobs=1): err= 0: pid=839: Sun Apr 3 10:42:20 2022

write: IOPS=82.9k, BW=324MiB/s (339MB/s)(1024MiB/3163msec)

slat (nsec): min=1416, max=561625, avg=10713.06, stdev=23076.82

clat (usec): min=62, max=2612, avg=760.65, stdev=140.25

lat (usec): min=64, max=2614, avg=771.51, stdev=142.09

clat percentiles (usec):

| 1.00th=[ 502], 5.00th=[ 578], 10.00th=[ 619], 20.00th=[ 660],

| 30.00th=[ 693], 40.00th=[ 717], 50.00th=[ 750], 60.00th=[ 775],

| 70.00th=[ 807], 80.00th=[ 840], 90.00th=[ 914], 95.00th=[ 988],

| 99.00th=[ 1254], 99.50th=[ 1385], 99.90th=[ 1713], 99.95th=[ 1942],

| 99.99th=[ 2343]

bw ( KiB/s): min=314504, max=338570, per=99.50%, avg=329858.00, stdev=10161.04, samples=6

iops : min=78626, max=84642, avg=82464.33, stdev=2540.12, samples=6

lat (usec) : 100=0.01%, 250=0.03%, 500=0.94%, 750=50.71%, 1000=43.79%

lat (msec) : 2=4.48%, 4=0.04%

cpu : usr=9.49%, sys=73.66%, ctx=41102, majf=0, minf=19

IO depths : 1=0.1%, 2=0.1%, 4=0.1%, 8=0.1%, 16=0.1%, 32=0.1%, >=64=100.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.1%, >=64=0.0%

issued rwt: total=0,262144,0, short=0,0,0, dropped=0,0,0

latency : target=0, window=0, percentile=100.00%, depth=64

Run status group 0 (all jobs):

WRITE: bw=324MiB/s (339MB/s), 324MiB/s-324MiB/s (339MB/s-339MB/s), io=1024MiB (1074MB), run=3163-3163msec

The key figures to focus on:

| Metric | fio field | Value |
|---|---|---|
| Throughput | bw | 324 MiB/s (339 MB/s) |
| IOPS | IOPS | 82.9K |
| Total latency | lat | min=64, max=2614, avg=771.51, stdev=142.09 (usec) |
| Submission latency | slat | min=1416, max=561625, avg=10713.06, stdev=23076.82 (nsec) |
| Completion latency | clat | min=62, max=2612, avg=760.65, stdev=140.25 (usec) |

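Random write performs roughly on par with random read: throughput and IOPS are only about 10% lower (339 MB/s vs 380 MB/s, 82.9K vs 92.7K IOPS).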

3.4 Sequential Write

root@7bc18553126f:/tmp# fio -name=write -direct=1 -iodepth=64 -rw=write -ioengine=libaio -bs=4k -size=1G -numjobs=1 -runtime=1000 -group_reporting -filename=/tmp/filetest.txt

write: (g=0): rw=write, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=libaio, iodepth=64

fio-3.1

Starting 1 process

Jobs: 1 (f=1)

write: (groupid=0, jobs=1): err= 0: pid=845: Sun Apr 3 10:44:31 2022

write: IOPS=123k, BW=481MiB/s (504MB/s)(1024MiB/2129msec)

slat (nsec): min=1375, max=1015.5k, avg=6901.34, stdev=13482.94

clat (usec): min=10, max=3391, avg=512.35, stdev=147.78

lat (usec): min=52, max=3392, avg=519.37, stdev=148.93

clat percentiles (usec):

| 1.00th=[ 262], 5.00th=[ 330], 10.00th=[ 363], 20.00th=[ 404],

| 30.00th=[ 437], 40.00th=[ 465], 50.00th=[ 494], 60.00th=[ 523],

| 70.00th=[ 553], 80.00th=[ 594], 90.00th=[ 668], 95.00th=[ 750],

| 99.00th=[ 1057], 99.50th=[ 1139], 99.90th=[ 1450], 99.95th=[ 1680],

| 99.99th=[ 2540]

bw ( KiB/s): min=459872, max=514994, per=99.58%, avg=490430.50, stdev=24701.13, samples=4

iops : min=114968, max=128748, avg=122607.50, stdev=6175.12, samples=4

lat (usec) : 20=0.01%, 50=0.01%, 100=0.01%, 250=0.69%, 500=51.74%

lat (usec) : 750=42.62%, 1000=3.50%

lat (msec) : 2=1.42%, 4=0.02%

cpu : usr=12.22%, sys=63.96%, ctx=31059, majf=0, minf=21

IO depths : 1=0.1%, 2=0.1%, 4=0.1%, 8=0.1%, 16=0.1%, 32=0.1%, >=64=100.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.1%, >=64=0.0%

issued rwt: total=0,262144,0, short=0,0,0, dropped=0,0,0

latency : target=0, window=0, percentile=100.00%, depth=64

Run status group 0 (all jobs):

WRITE: bw=481MiB/s (504MB/s), 481MiB/s-481MiB/s (504MB/s-504MB/s), io=1024MiB (1074MB), run=2129-2129msec

The key figures to focus on:

| Metric | fio field | Value |
|---|---|---|
| Throughput | bw | 481 MiB/s (504 MB/s) |
| IOPS | IOPS | 123K |
| Total latency | lat | min=52, max=3392, avg=519.37, stdev=148.93 (usec) |
| Submission latency | slat | min=1375, max=1015.5k, avg=6901.34, stdev=13482.94 (nsec) |
| Completion latency | clat | min=10, max=3391, avg=512.35, stdev=147.78 (usec) |

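Compared with random write, sequential write delivers about 1.5 times the throughput and IOPS (504 MB/s vs 339 MB/s, 123K vs 82.9K IOPS), and its average latency is about a third lower (519 us vs 772 us).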

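3.5 Synchronous Random Read

All of the tests in 3.1 to 3.4 used asynchronous I/O (-ioengine=libaio). What happens if we switch to synchronous I/O? Below I test synchronous random read, changing only -ioengine to sync: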

root@7bc18553126f:/tmp# fio -name=randread -direct=1 -iodepth=64 -rw=randread -ioengine=sync -bs=4k -size=1G -numjobs=1 -runtime=1000 -group_reporting -filename=/tmp/filetest.txt

randread: (g=0): rw=randread, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=sync, iodepth=64

fio-3.1

Starting 1 process

Jobs: 1 (f=1): [r(1)][100.0%][r=89.3MiB/s,w=0KiB/s][r=22.9k,w=0 IOPS][eta 00m:00s]

randread: (groupid=0, jobs=1): err= 0: pid=848: Sun Apr 3 10:48:04 2022

read: IOPS=22.0k, BW=86.0MiB/s (90.2MB/s)(1024MiB/11903msec)

clat (usec): min=28, max=9261, avg=44.04, stdev=35.40

lat (usec): min=28, max=9262, avg=44.13, stdev=35.41

clat percentiles (usec):

| 1.00th=[ 36], 5.00th=[ 37], 10.00th=[ 38], 20.00th=[ 38],

| 30.00th=[ 39], 40.00th=[ 39], 50.00th=[ 43], 60.00th=[ 43],

| 70.00th=[ 44], 80.00th=[ 44], 90.00th=[ 46], 95.00th=[ 61],

| 99.00th=[ 112], 99.50th=[ 127], 99.90th=[ 221], 99.95th=[ 343],

| 99.99th=[ 881]

bw ( KiB/s): min=79304, max=91680, per=99.56%, avg=87701.35, stdev=3585.21, samples=23

iops : min=19826, max=22920, avg=21925.17, stdev=896.39, samples=23

lat (usec) : 50=92.70%, 100=5.92%, 250=1.31%, 500=0.05%, 750=0.01%

lat (usec) : 1000=0.01%

lat (msec) : 2=0.01%, 4=0.01%, 10=0.01%

cpu : usr=6.89%, sys=20.18%, ctx=262035, majf=0, minf=17

IO depths : 1=100.0%, 2=0.0%, 4=0.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

issued rwt: total=262144,0,0, short=0,0,0, dropped=0,0,0

latency : target=0, window=0, percentile=100.00%, depth=64

Run status group 0 (all jobs):

READ: bw=86.0MiB/s (90.2MB/s), 86.0MiB/s-86.0MiB/s (90.2MB/s-90.2MB/s), io=1024MiB (1074MB), run=11903-11903msec

The key figures to focus on:

| Metric | fio field | Sync random read | Async random read (3.1) |
|---|---|---|---|
| Throughput | bw | 86.0 MiB/s (90.2 MB/s) | 362 MiB/s (380 MB/s) |
| IOPS | IOPS | 22.0K | 92.7K |
| Total latency | lat | min=28, max=9262, avg=44.13, stdev=35.41 (usec) | min=85, max=2439, avg=689.57, stdev=130.14 (usec) |
| Submission latency | slat | not reported for sync I/O | min=1375, max=900000, avg=9439.98, stdev=18216.37 (nsec) |
| Completion latency | clat | min=28, max=9261, avg=44.04, stdev=35.40 (usec) | min=84, max=2436, avg=680.01, stdev=128.14 (usec) |

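Synchronous random read is far slower than the asynchronous random read in 3.1: throughput and IOPS fall to roughly a quarter. Individual requests are actually much faster, though (avg lat 44 us vs 690 us). Synchronous engines ignore -iodepth, so only one I/O is in flight at a time (IO depths: 1=100.0% in the output), whereas libaio kept 64 in flight; the throughput gap mainly comes from that difference in concurrency.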
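Little's law (requests in flight = throughput x latency) makes this concrete. A minimal Python sketch plugging in the measured average latencies; treating the async job as having 64 requests in flight and the sync job as having exactly one is the assumption here, based on the IO depths lines of the two runs. littles_law_iops is a hypothetical helper name.

```python
def littles_law_iops(in_flight: int, avg_latency_us: float) -> float:
    """Little's law: throughput = requests in flight / time per request."""
    return in_flight / (avg_latency_us / 1e6)


# Async libaio run from 3.1: 64 requests in flight, avg lat 689.57 us.
async_iops = littles_law_iops(64, 689.57)
# Sync run from 3.5: one request in flight, avg lat 44.13 us.
sync_iops = littles_law_iops(1, 44.13)

print(f"async: {async_iops:,.0f} IOPS predicted (fio reported 92.7k)")
print(f"sync:  {sync_iops:,.0f} IOPS predicted (fio reported 22.0k)")
```

Both predictions land within a few percent of the measured IOPS, which supports the reading that queue depth, not per-request speed, drives the gap.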

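Summary:

Based on the results above, we can draw two conclusions:

1. Sequential reads and writes outperform random reads and writes (about 1.5 times the throughput and IOPS in these tests).

2. Asynchronous I/O outperforms synchronous I/O in throughput (about 4 times here), mainly because it keeps many requests in flight at once (iodepth=64 vs 1).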
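To put both conclusions in numbers, this Python sketch tabulates the headline figures from sections 3.1 to 3.5 (copied from the outputs above, not re-measured) and prints the ratios behind each conclusion. The RESULTS table and the ratios helper are illustrative names of my own.

```python
# Headline figures copied from sections 3.1-3.5: (throughput in MB/s, IOPS).
RESULTS = {
    "random read (libaio)": (380.0, 92.7e3),
    "sequential read (libaio)": (594.0, 145e3),
    "random write (libaio)": (339.0, 82.9e3),
    "sequential write (libaio)": (504.0, 123e3),
    "random read (sync)": (90.2, 22.0e3),
}


def ratios(a: str, b: str) -> tuple:
    """Throughput and IOPS of run a divided by those of run b."""
    (bw_a, iops_a), (bw_b, iops_b) = RESULTS[a], RESULTS[b]
    return bw_a / bw_b, iops_a / iops_b


COMPARISONS = [
    ("sequential read (libaio)", "random read (libaio)"),    # conclusion 1
    ("sequential write (libaio)", "random write (libaio)"),  # conclusion 1
    ("random read (libaio)", "random read (sync)"),          # conclusion 2
]

for a, b in COMPARISONS:
    bw_ratio, iops_ratio = ratios(a, b)
    print(f"{a} vs {b}: throughput x{bw_ratio:.2f}, IOPS x{iops_ratio:.2f}")
```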

References:

Geek Time (极客时间), 《Linux性能优化实战》 (Linux Performance Optimization in Practice)

Copyright notice

This article was written by [恐龙弟旺仔]. Please include a link to the original when reposting. Thanks.

https://blog.csdn.net/qq_26323323/article/details/124361645

边栏推荐

- Hyperbdr cloud disaster recovery v3 Release of version 3.0 | upgrade of disaster recovery function and optimization of resource group management function

- Solution to the fourth "intelligence Cup" National College Students' IT skills competition (group B of the final)

- Day (5) of picking up matlab

- Hypermotion cloud migration helped China Unicom. Qingyun completed the cloud project of a central enterprise and accelerated the cloud process of the group's core business system

- JSP learning 2

- The first line and the last two lines are frozen when paging

- Matplotlib tutorial 05 --- operating images

- Groupby use of spark operator

- Ice -- source code analysis

- 【Pygame小游戏】10年前风靡全球的手游《愤怒的小鸟》,是如何霸榜的?经典回归......

猜你喜欢

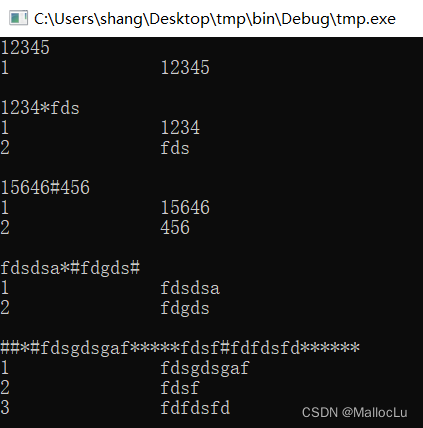

C language self compiled string processing function - string segmentation, string filling, etc

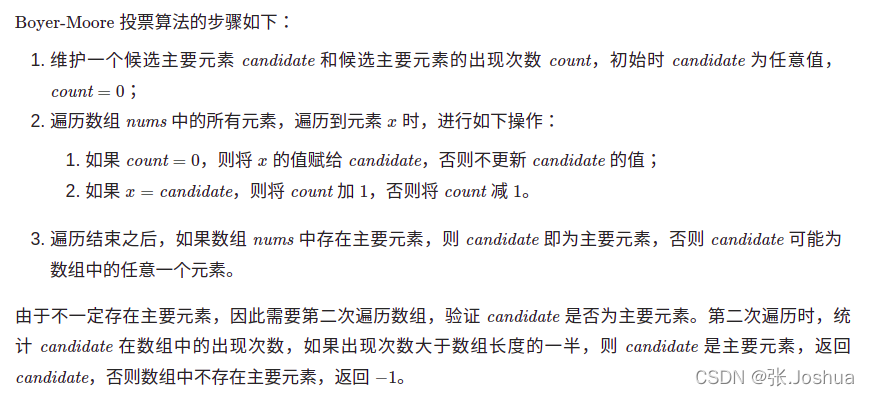

Interview question 17.10 Main elements

volatile的含义以及用法

How important is the operation and maintenance process? I heard it can save 2 million a year?

299. Number guessing game

homwbrew安装、常用命令以及安装路径

捡起MATLAB的第(6)天

Filter usage of spark operator

Meaning and usage of volatile

MySQL - MySQL查询语句的执行过程

随机推荐

Review 2021: how to help customers clear the obstacles in the last mile of going to the cloud?

TIA博图——基本操作

js正则判断域名或者IP的端口路径是否正确

JSP learning 3

运维流程有多重要,听说一年能省下200万?

Countdown 1 day ~ 2022 online conference of cloud disaster tolerance products is about to begin

一文读懂串口及各种电平信号含义

捡起MATLAB的第(6)天

Unity Shader学习

Win11 / 10 home edition disables the edge's private browsing function

Unity shader learning

保姆级Anaconda安装教程

Using JSON server to create server requests locally

PHP 零基础入门笔记(13):数组相关函数

最詳細的背包問題!!!

撿起MATLAB的第(9)天

299. 猜数字游戏

VIM specifies the line comment and reconciliation comment

Postman batch production body information (realize batch modification of data)

C language self compiled string processing function - string segmentation, string filling, etc