
On Distributed Locks

2022-04-23 05:04:00 【canger_】

Why locks are needed

In a standalone program, when multiple threads operate on the same resource concurrently, locks or other synchronization measures are needed to guarantee atomicity. Take a multithreaded increment as an example:

package main

import (

"sync"

)

// Global variables

var counter int

func main() {

var wg sync.WaitGroup

for i := 0; i < 100; i++ {

wg.Add(1)

go func() {

counter++

wg.Done()

}()

}

wg.Wait()

println(counter)

}

Repeated runs give different results:

> go run test.go

98

> go run test.go

99

> go run test.go

100

Clearly the result is unpredictable, because counter++ is not an atomic operation. To get the correct result, the increment has to be protected by a lock:

package main

import (

"sync"

)

// Global variables

var counter int

var mtx sync.Mutex

func main() {

var wg sync.WaitGroup

for i := 0; i < 100; i++ {

wg.Add(1)

go func() {

mtx.Lock()

counter++

mtx.Unlock()

wg.Done()

}()

}

wg.Wait()

println(counter)

}

Results of repeated runs:

> go run test.go

100

> go run test.go

100

> go run test.go

100
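For a plain counter like this one, the standard library's sync/atomic package is another way to make the increment atomic without a mutex. The following is a minimal sketch, not part of the original program; the helper name countWithAtomic is made up for illustration.

```go
package main

import (
	"fmt"
	"sync"
	"sync/atomic"
)

// countWithAtomic (hypothetical helper) starts n goroutines that each
// increment a shared counter once with an atomic add, then returns the total.
func countWithAtomic(n int) int64 {
	var counter int64
	var wg sync.WaitGroup
	for i := 0; i < n; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			atomic.AddInt64(&counter, 1) // read-modify-write as one atomic step
		}()
	}
	wg.Wait()
	return atomic.LoadInt64(&counter)
}

func main() {
	fmt.Println(countWithAtomic(100)) // prints 100
}
```

An atomic add only covers a single variable; as soon as several steps must happen together, a lock is still needed.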

1. Based on Redis setnx

In a distributed scenario we need the same "preemption" logic. How do we get it? We can use the setnx command provided by Redis:

package main

import (

"fmt"

"strconv"

"sync"

"time"

"gopkg.in/redis.v5"

)

var rds = redis.NewFailoverClient(&redis.FailoverOptions{

MasterName: "mymaster",

SentinelAddrs: []string{

"127.0.0.1:26379"},

})

// incrby tries to take the lock with setnx; on success it reads, increments, and writes back the counter

func incrby() error {

lockkey := "count_key"

counterkey := "counter"

succ, err := rds.SetNX(lockkey, 1, time.Second*time.Duration(5)).Result()

if err != nil || !succ {

fmt.Println(err, " lock result:", succ)

return err

}

defer func() {

succ, err := rds.Del(lockkey).Result()

if err == nil && succ > 0 {

fmt.Println("unlock sucess")

} else {

fmt.Println("unlock failed, err=", err)

}

}()

resp, err := rds.Get(counterkey).Result()

if err != nil && err != redis.Nil {

fmt.Println("get count failed, err=", err)

return err

}

var cnt int64

if err == nil {

cnt, err = strconv.ParseInt(resp, 10, 64)

if err != nil {

fmt.Println("parse string failed, s=", resp)

return err

}

}

fmt.Println("curr cnt:", cnt)

cnt++

_, err = rds.Set(counterkey, cnt, 0).Result()

if err != nil {

fmt.Println("set value failed, err=", err)

return err

}

return nil

}

func main() {

var wg sync.WaitGroup

for i := 0; i < 10; i++ {

wg.Add(1)

go func() {

defer wg.Done()

incrby()

}()

}

wg.Wait()

}

Running results:

> go run test.go

curr cnt: 0

<nil> lock result: false

unlock sucess

<nil> lock result: false

curr cnt: 1

<nil> lock result: false

unlock sucess

curr cnt: 2

<nil> lock result: false

unlock sucess

curr cnt: 3

<nil> lock result: false

<nil> lock result: false

unlock sucess

Calling the remote setnx works much like a single-machine trylock: if acquiring the lock fails, the rest of the task logic is not executed.

setnx is well suited to high-concurrency scenarios in which many clients compete for a single resource.

2. Based on ZooKeeper

package main

import (

"fmt"

"sync"

"time"

"github.com/samuel/go-zookeeper/zk"

)

var zkconn *zk.Conn

var count int64

func incrby() {

lock := zk.NewLock(zkconn, "/lock", zk.WorldACL(zk.PermAll))

err := lock.Lock()

if err != nil {

panic(err)

}

count++

lock.Unlock()

}

func main() {

c, _, err := zk.Connect([]string{

"127.0.0.1"}, time.Second)

if err != nil {

fmt.Println("connect zookeeper failed, err=", err)

return

}

zkconn = c

var wg sync.WaitGroup

for i := 0; i < 10; i++ {

wg.Add(1)

go func() {

defer wg.Done()

incrby()

}()

}

wg.Wait()

fmt.Println(" cnt :", count)

}

Running results:

> go run test.go

Connected to 127.0.0.1:2181

authenticated: id=72138376348368897, timeout=4000

re-submitting `0` credentials after reconnect

cnt : 10

The difference between the ZooKeeper-based lock and the Redis-based lock is that Lock blocks until it succeeds, which is similar to the Lock method of sync.Mutex.

The principle is based on ephemeral sequential nodes and the watch API. In this example we use the /lock node. Lock inserts its own entry into the list of child nodes under that node. Whenever the child nodes change, every program watching the node is notified. Each program then checks whether the id of the smallest child node under the node matches its own; if it does, the lock has been acquired.
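The "smallest child wins" rule can be sketched without a ZooKeeper server. The code below is an in-memory illustration only: lockDir, join, holdsLock, and leave are hypothetical names, and the sketch leaves out watches, sessions, and everything else the real zk.Lock handles.

```go
package main

import (
	"fmt"
	"sync"
)

// lockDir is a hypothetical in-memory stand-in for the children of /lock.
type lockDir struct {
	mu       sync.Mutex
	nextSeq  int
	children map[int]bool
}

func newLockDir() *lockDir {
	return &lockDir{children: map[int]bool{}}
}

// join adds a child with the next sequence number, standing in for the
// creation of a sequential node under /lock.
func (d *lockDir) join() int {
	d.mu.Lock()
	defer d.mu.Unlock()
	seq := d.nextSeq
	d.nextSeq++
	d.children[seq] = true
	return seq
}

// holdsLock reports whether seq is present and no smaller child exists.
func (d *lockDir) holdsLock(seq int) bool {
	d.mu.Lock()
	defer d.mu.Unlock()
	if !d.children[seq] {
		return false
	}
	for other := range d.children {
		if other < seq {
			return false
		}
	}
	return true
}

// leave removes the child, which corresponds to releasing the lock.
func (d *lockDir) leave(seq int) {
	d.mu.Lock()
	defer d.mu.Unlock()
	delete(d.children, seq)
}

func main() {
	dir := newLockDir()
	a := dir.join()
	b := dir.join()
	fmt.Println(dir.holdsLock(a), dir.holdsLock(b)) // true false
	dir.leave(a)
	fmt.Println(dir.holdsLock(b)) // true
}
```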

This kind of distributed blocking lock suits distributed task scheduling, but not scenarios where the lock is grabbed frequently and held only briefly. According to Google's Chubby paper, locks based on a strong-consistency protocol are suited to coarse-grained locking, where coarse-grained means that the lock is held for a long time. Before using one, consider whether it fits your own business scenario.

3. Based on etcd

Pulling the etcd package "github.com/zieckey/etcdsync" with go mod runs into two problems:

# First error

/etcd imports

github.com/coreos/etcd/clientv3 tested by

github.com/coreos/etcd/clientv3.test imports

github.com/coreos/etcd/auth imports

github.com/coreos/etcd/mvcc/backend imports

github.com/coreos/bbolt: github.com/coreos/[email protected]: parsing go.mod:

module declares its path as: go.etcd.io/bbolt

but was required as: github.com/coreos/bbolt

# Second error

imports

google.golang.org/grpc/naming: module google.golang.org/grpc@latest found (v1.32.0), but does not contain package google.golang.org/grpc/naming

To fix both, add the following to go.mod:

replace (

github.com/coreos/bbolt v1.3.4 => go.etcd.io/bbolt v1.3.4

go.etcd.io/bbolt v1.3.4 => github.com/coreos/bbolt v1.3.4

google.golang.org/grpc => google.golang.org/grpc v1.26.0

)

package main

import (

"log"

"github.com/zieckey/etcdsync"

)

func main() {

m, err := etcdsync.New("/lock", 10, []string{

"http://127.0.0.1:2379"})

if m == nil || err != nil {

log.Printf("etcdsync.New failed")

return

}

err = m.Lock()

if err != nil {

log.Println("etcdsync.Lock failed, err=", err)

return

}

log.Printf("etcdsync.Lock OK")

log.Printf("Get the lock. Do something here.")

err = m.Unlock()

if err != nil {

log.Println("etcdsync.Unlock failed, err=", err)

} else {

log.Printf("etcdsync.Unlock OK")

}

}

etcd has no sequence nodes like ZooKeeper's, so its lock implementation differs from the ZooKeeper-based one. The Lock flow of etcdsync used in the example above is:

- 1. First check whether there is a value under the /lock path. If there is, the lock has already been taken by someone else.

- 2. If there is no value, write your own value. If the write succeeds, the lock has been acquired and Lock returns. If another node has written the key in the meantime, this attempt fails.

- 3. Watch for events under /lock; the caller is blocked at this point.

- 4. When an event occurs under the /lock path, the current process is woken up. It checks whether the event is a delete event (the holder actively unlocked) or an expiration event (the lock expired). If so, go back to step 1 and run the lock-grabbing flow again.
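The four steps above can be sketched as a retry loop. The code below is an in-memory illustration under stated assumptions, not etcdsync's implementation: memKey, tryWrite, lock, unlock, and countWithLock are hypothetical names, a closed channel stands in for the watch event, and expiration events are not modeled.

```go
package main

import (
	"fmt"
	"sync"
)

// memKey is a hypothetical in-memory stand-in for the /lock key in etcd.
type memKey struct {
	mu      sync.Mutex
	owner   string        // empty means there is no value under the key
	changed chan struct{} // closed and replaced whenever the key is deleted
}

func newMemKey() *memKey {
	return &memKey{changed: make(chan struct{})}
}

// tryWrite covers steps 1 and 2: write owner only if the key has no value.
// On failure it returns the channel to wait on for step 3.
func (k *memKey) tryWrite(owner string) (bool, <-chan struct{}) {
	k.mu.Lock()
	defer k.mu.Unlock()
	if k.owner == "" {
		k.owner = owner
		return true, nil
	}
	return false, k.changed
}

// lock repeats steps 1 to 4 until the write succeeds.
func (k *memKey) lock(owner string) {
	for {
		ok, wait := k.tryWrite(owner)
		if ok {
			return
		}
		<-wait // step 3: block until a delete event, then step 4: retry from step 1
	}
}

// unlock deletes the value and wakes every waiter.
func (k *memKey) unlock() {
	k.mu.Lock()
	defer k.mu.Unlock()
	k.owner = ""
	close(k.changed)
	k.changed = make(chan struct{})
}

// countWithLock increments a counter from n goroutines, each holding the lock.
func countWithLock(n int) int {
	key := newMemKey()
	count := 0
	var wg sync.WaitGroup
	for i := 0; i < n; i++ {
		wg.Add(1)
		go func(id int) {
			defer wg.Done()
			key.lock(fmt.Sprintf("worker-%d", id))
			count++ // only touched while the lock is held
			key.unlock()
		}(i)
	}
	wg.Wait()
	return count
}

func main() {
	fmt.Println(countWithLock(10)) // prints 10
}
```

Because every waiter is woken on each unlock and all of them retry, this style of lock behaves best when contention is low, which matches the coarse-grained use the article recommends for consensus-backed locks.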

How to choose the right lock

Single-machine scale

If the business is still at a scale that a single machine can handle, use whichever single-machine lock fits your needs.

Distributed scale

- Small scale

If the service has become distributed but the business is small and QPS is very low, all of the lock schemes perform about the same. If the company already has a ZooKeeper, etcd, or Redis cluster available, use it rather than introducing a new technology stack.

- Large scale

If lock data must not be lost even under adverse conditions, the simple Redis setnx lock cannot be used.

If the lock data must be highly reliable, the only options are schemes such as etcd or ZooKeeper, which guarantee data reliability through a consensus protocol. The price of that reliability is lower throughput and higher latency, so stress-test at your business's scale to make sure the etcd or ZooKeeper cluster behind the distributed lock can withstand the actual request load.

Copyright notice

This article was written by [canger_]. Please include a link to the original when reposting. Thank you.

https://yzsam.com/2022/04/202204220552063534.html

边栏推荐

- Independent station operation | Facebook marketing artifact - chat robot manychat

- Spell it! Two A-level universities and six B-level universities have abolished master's degree programs in software engineering!

- PHP counts the number of files in the specified folder

- 洛谷P2731骑马修栅栏

- Customize the navigation bar at the top of wechat applet (adaptive wechat capsule button, flex layout)

- Mac 进入mysql终端命令

- Pixel mobile phone brick rescue tutorial

- 深度学习笔记 —— 微调

- Leetcode -- heuristic search

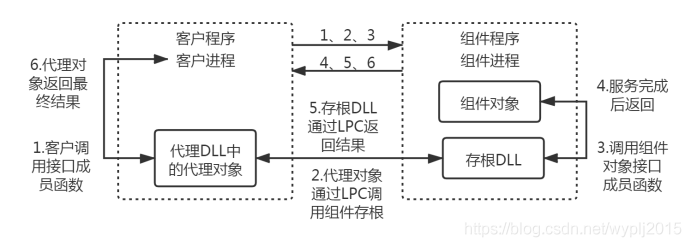

- COM in wine (2) -- basic code analysis

猜你喜欢

Wine (COM) - basic concept

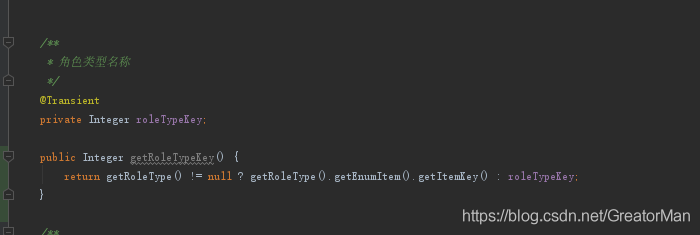

A trinomial expression that causes a null pointer

Sword finger offer: the median in the data stream (priority queue large top heap small top heap leetcode 295)

JS engine loop mechanism: synchronous, asynchronous, event loop

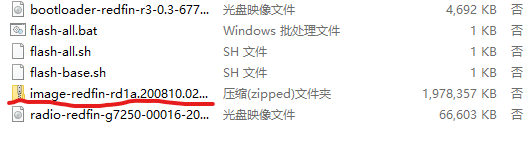

Pixel 5 5g unlocking tutorial (including unlocking BL, installing edxposed and root)

Perfect test of coil in wireless charging system with LCR meter

Live delivery form template - automatically display pictures - automatically associate series products

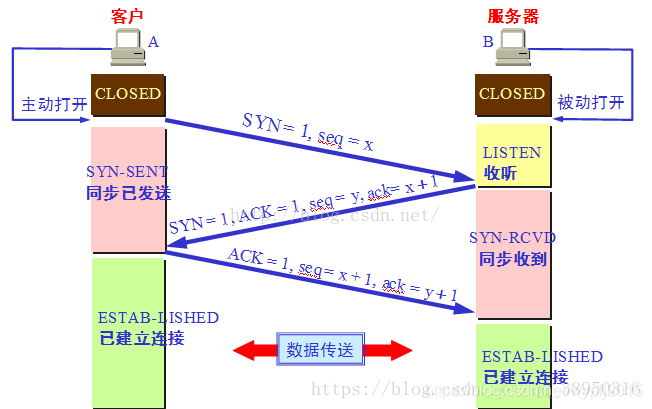

Detailed explanation of the differences between TCP and UDP

MySQL -- execution process and principle of a statement

Restful toolkit of idea plug-in

随机推荐

Restful toolkit of idea plug-in

QPushbutton 槽函数被多次触发

C# List字段排序含有数字和字符

The vscode ipynb file does not have code highlighting and code completion solutions

redis数据类型有哪些

Live delivery form template - automatically display pictures - automatically associate series products

Chapter I overall project management of information system project manager summary

Acid of MySQL transaction

Solve valueerror: argument must be a deny tensor: 0 - got shape [198602], but wanted [198602, 16]

信息学奥赛一本通 1955:【11NOIP普及组】瑞士轮 | OpenJudge 4.1 4363:瑞士轮 | 洛谷 P1309 [NOIP2011 普及组] 瑞士轮

【数据库】MySQL单表查询

[database] MySQL single table query

2022/4/22

Leetcode -- heuristic search

Custom switch control

Progress of innovation training (III)

js 判斷數字字符串中是否含有字符

[WinUI3]编写一个仿Explorer文件管理器

TypeError: ‘Collection‘ object is not callable. If you meant to call the ......

用LCR表完美测试无线充电系统中的线圈