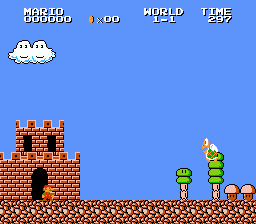

gym-super-mario-bros

An OpenAI Gym environment for Super Mario Bros. & Super Mario Bros. 2 (Lost Levels) on The Nintendo Entertainment System (NES) using the nes-py emulator.

Installation

The preferred installation of gym-super-mario-bros is from pip:

pip install gym-super-mario-bros

Usage

Python

You must import gym_super_mario_bros before trying to make an environment, because gym environments are registered at runtime. By default, gym_super_mario_bros environments use the full NES action space of 256 discrete actions. To constrain this, gym_super_mario_bros.actions provides three action lists (RIGHT_ONLY, SIMPLE_MOVEMENT, and COMPLEX_MOVEMENT) for the nes_py.wrappers.JoypadSpace wrapper. See gym_super_mario_bros/actions.py for a breakdown of the legal actions in each of these three lists.

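from nes_py.wrappers import JoypadSpace
import gym_super_mario_bros
from gym_super_mario_bros.actions import SIMPLE_MOVEMENT

env = gym_super_mario_bros.make('SuperMarioBros-v0')
# Restrict the 256-action NES space to the SIMPLE_MOVEMENT action list.
env = JoypadSpace(env, SIMPLE_MOVEMENT)

done = True
for step in range(5000):
    # Reset at the start and whenever the previous episode has ended.
    if done:
        state = env.reset()
    # Take a uniformly random action from the constrained action space.
    state, reward, done, info = env.step(env.action_space.sample())
    env.render()

env.close()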

NOTE: gym_super_mario_bros.make is just an alias to gym.make for convenience.

NOTE: remove calls to render in training code for a nontrivial speedup.
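As a rough illustration of a render-free loop, the sketch below collects the total reward of one episode. It assumes only that the environment exposes reset and a step method returning (state, reward, done, info), as in the snippet above. The names run_episode and StubEnv are hypothetical and not part of gym_super_mario_bros; StubEnv is a stand-in so the example runs without an emulator.

```python
def run_episode(env, policy, max_steps=5000):
    """Play one episode without rendering; return (total_reward, last_info)."""
    state = env.reset()
    total_reward = 0.0
    info = {}
    for _ in range(max_steps):
        # policy maps the current state to an action; no env.render() call here.
        state, reward, done, info = env.step(policy(state))
        total_reward += reward
        if done:
            break
    return total_reward, info


class StubEnv:
    """Hypothetical stand-in: every step yields reward 1.0, episode ends after 10 steps."""

    def __init__(self):
        self.steps = 0

    def reset(self):
        self.steps = 0
        return 0

    def step(self, action):
        self.steps += 1
        done = self.steps >= 10
        return self.steps, 1.0, done, {"x_pos": self.steps}


total, info = run_episode(StubEnv(), policy=lambda state: 0)
```

With a real environment, the same function could presumably be called with a random policy such as lambda state: env.action_space.sample().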

Command Line

gym_super_mario_bros features a command line interface for playing environments using either the keyboard or uniform random movement.

gym_super_mario_bros -e <the environment ID to play> -m <`human` or `random`>

NOTE: by default, -e is set to SuperMarioBros-v0 and -m is set to human.

Environments

These environments allow 3 attempts (lives) to make it through the 32 stages in the game. The environments only send reward-able gameplay frames to agents; no cut-scenes, loading screens, etc. are sent from the NES emulator to an agent, nor can an agent perform actions during these instances. If a cut-scene cannot be skipped by hacking the NES's RAM, the environment will lock the Python process until the emulator is ready for the next action.

Individual Stages

These environments allow a single attempt (life) to make it through a single stage of the game.

Use the template

SuperMarioBros-<world>-<stage>-v<version>

where:

- <world> is a number in {1, 2, 3, 4, 5, 6, 7, 8} indicating the world
- <stage> is a number in {1, 2, 3, 4} indicating the stage within a world
- <version> is a number in {0, 1, 2, 3} specifying the ROM mode to use
  - 0: standard ROM
  - 1: downsampled ROM
  - 2: pixel ROM
  - 3: rectangle ROM

For example, to play 4-2 on the downsampled ROM, you would use the environment id SuperMarioBros-4-2-v1.
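The template can be filled in programmatically. The helper below is a minimal sketch; stage_env_id is a hypothetical name, not a function provided by gym_super_mario_bros, and it only builds the ID string using the value ranges listed above.

```python
def stage_env_id(world: int, stage: int, version: int = 0) -> str:
    """Build a single-stage environment ID from the template described above."""
    if world not in range(1, 9):
        raise ValueError(f"world must be in 1..8, got {world}")
    if stage not in range(1, 5):
        raise ValueError(f"stage must be in 1..4, got {stage}")
    if version not in range(0, 4):
        raise ValueError(f"version must be in 0..3, got {version}")
    return f"SuperMarioBros-{world}-{stage}-v{version}"


# World 4, stage 2, downsampled ROM (version 1).
env_id = stage_env_id(4, 2, 1)
```

The resulting string can then be passed to gym_super_mario_bros.make.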

Random Stage Selection

The random stage selection environment randomly selects a stage and allows a single attempt to clear it. Upon a death and subsequent call to reset, the environment randomly selects a new stage. This is only available for the standard Super Mario Bros. game, not Lost Levels (at the moment). To use these environments, append RandomStages to the SuperMarioBros id. For example, to use the standard ROM with random stage selection use SuperMarioBrosRandomStages-v0. To seed the random stage selection use the seed method of the env, i.e., env.seed(1), before any calls to reset.
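A seeded random-stage setup might look like the sketch below. The helper names random_stages_id and make_seeded_env are hypothetical. The sketch assumes the ROM versions 0 to 3 apply to the RandomStages ID in the same way as to the other IDs, which the text above only confirms for v0. The package import is deferred so that the ID helper can be used without the emulator installed.

```python
def random_stages_id(version: int = 0) -> str:
    """Return the random-stage environment ID for a ROM mode (assumed to be 0..3)."""
    if version not in range(0, 4):
        raise ValueError(f"version must be in 0..3, got {version}")
    return f"SuperMarioBrosRandomStages-v{version}"


def make_seeded_env(seed: int, version: int = 0):
    """Create a random-stage environment and seed it before any call to reset."""
    # Imported lazily: importing the package registers the environments.
    import gym_super_mario_bros

    env = gym_super_mario_bros.make(random_stages_id(version))
    env.seed(seed)  # must happen before the first reset, per the text above
    return env


default_id = random_stages_id()
```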

Step

This section describes the reward and the info dictionary returned by the step method.

Reward Function

The reward function assumes the objective of the game is to move as far right as possible (increase the agent's x value), as fast as possible, without dying. To model this game, three separate variables compose the reward:

- v: the difference in agent x values between states
  - in this case this is the instantaneous velocity for the given step
  - v = x1 - x0
    - x0 is the x position before the step
    - x1 is the x position after the step
  - moving right ⇔ v > 0
  - moving left ⇔ v < 0
  - not moving ⇔ v = 0
- c: the difference in the game clock between frames
  - the penalty prevents the agent from standing still
  - c = c1 - c0 (the clock counts down, so a tick makes c negative)
    - c0 is the clock reading before the step
    - c1 is the clock reading after the step
  - no clock tick ⇔ c = 0
  - clock tick ⇔ c < 0
- d: a death penalty that penalizes the agent for dying in a state
  - this penalty encourages the agent to avoid death
  - alive ⇔ d = 0
  - dead ⇔ d = -15

r = v + c + d

The reward is clipped into the range (-15, 15).
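The arithmetic above can be sketched in a few lines. This is an illustration of the formula only, not the environment's actual implementation. The name step_reward is hypothetical, the clock term c is passed in already computed (0 when the clock did not tick, negative when it did), and the clipping bounds are assumed to be inclusive.

```python
DEATH_PENALTY = -15
REWARD_MIN, REWARD_MAX = -15, 15


def step_reward(x0: int, x1: int, c: int, dead: bool) -> int:
    """Compute r = v + c + d and clip it, following the description above."""
    v = x1 - x0                       # horizontal progress during the step
    d = DEATH_PENALTY if dead else 0  # death penalty
    r = v + c + d
    # Clip to the documented range (endpoints assumed inclusive).
    return max(REWARD_MIN, min(REWARD_MAX, r))


moving_right = step_reward(x0=40, x1=43, c=0, dead=False)
moving_right_with_tick = step_reward(x0=40, x1=43, c=-1, dead=False)
died_standing_still = step_reward(x0=40, x1=40, c=0, dead=True)
```

In this sketch, moving 3 pixels to the right gives a reward of 3, or 2 if the clock also ticked by one, and dying while standing still gives -15.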

info dictionary

The info dictionary returned by the step method contains the following keys:

| Key | Type | Description |
|---|---|---|
| coins | int | The number of collected coins |
| flag_get | bool | True if Mario reached a flag or ax |
| life | int | The number of lives left, i.e., {3, 2, 1} |
| score | int | The cumulative in-game score |
| stage | int | The current stage, i.e., {1, ..., 4} |
| status | str | Mario's status, i.e., {'small', 'tall', 'fireball'} |
| time | int | The time left on the clock |
| world | int | The current world, i.e., {1, ..., 8} |
| x_pos | int | Mario's x position in the stage (from the left) |
| y_pos | int | Mario's y position in the stage (from the bottom) |
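As an example of consuming these keys, the sketch below formats an info dictionary into a one-line progress summary, which may be handy for logging. The function describe_info is hypothetical, and the values in sample_info are made up for illustration; they are not output captured from the emulator.

```python
def describe_info(info: dict) -> str:
    """Format the info dictionary returned by step as a one-line summary."""
    outcome = "cleared" if info["flag_get"] else "in progress"
    return (
        f"World {info['world']}-{info['stage']} ({outcome}): "
        f"x={info['x_pos']}, y={info['y_pos']}, time={info['time']}, "
        f"lives={info['life']}, coins={info['coins']}, "
        f"score={info['score']}, status={info['status']}"
    )


# Illustrative values only; a real dictionary comes from env.step(...).
sample_info = {
    "coins": 3,
    "flag_get": False,
    "life": 2,
    "score": 1200,
    "stage": 1,
    "status": "small",
    "time": 372,
    "world": 1,
    "x_pos": 594,
    "y_pos": 79,
}

summary = describe_info(sample_info)
```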

Citation

Please cite gym-super-mario-bros if you use it in your research.

@misc{gym-super-mario-bros,

author = {Christian Kauten},

howpublished = {GitHub},

title = {{S}uper {M}ario {B}ros for {O}pen{AI} {G}ym},

URL = {https://github.com/Kautenja/gym-super-mario-bros},

year = {2018},

}