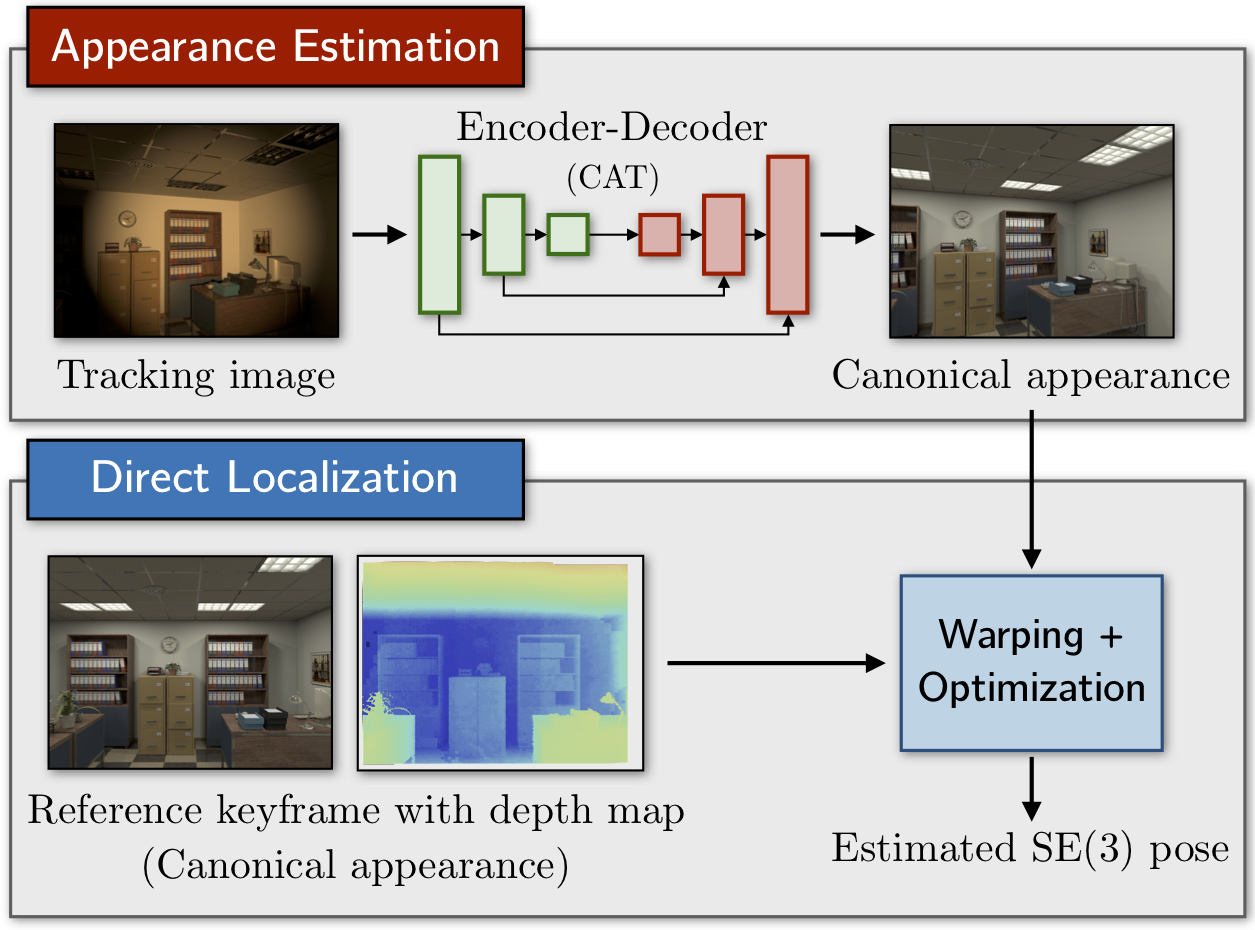

CAT-Net: Learning Canonical Appearance Transformations

Code to accompany our paper "How to Train a CAT: Learning Canonical Appearance Transformations for Direct Visual Localization Under Illumination Change".

Dependencies

- numpy

- matplotlib

- pytorch + torchvision (1.2)

- Pillow

- progress (for progress bars in train/val/test loops)

- tensorboard + tensorboardX (for visualization)

- pyslam + liegroups (optional, for running odometry/localization experiments)

- OpenCV (optional, for running odometry/localization experiments)
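Before training, it can help to confirm which of the packages above are importable. The sketch below is a minimal check and is not part of this repository. It assumes the usual import names for each package (`torch` for PyTorch, `PIL` for Pillow, `cv2` for OpenCV, and so on); those module names are assumptions, and the `REQUIRED`/`OPTIONAL` lists and `missing_modules` helper are hypothetical.

```python
import importlib.util

# Assumed import names for the dependencies listed above (hypothetical lists).
REQUIRED = ["numpy", "matplotlib", "torch", "torchvision", "PIL",
            "progress", "tensorboard", "tensorboardX"]
OPTIONAL = ["pyslam", "liegroups", "cv2"]  # only needed for odometry/localization


def missing_modules(names):
    """Return the subset of top-level module names that cannot be found."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED)
    if missing:
        print("Missing required packages:", ", ".join(missing))
    missing_opt = missing_modules(OPTIONAL)
    if missing_opt:
        print("Missing optional packages:", ", ".join(missing_opt))
```

`find_spec` only locates each module without importing it, so the check is cheap even for large packages such as PyTorch.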

Training the CAT

- Download the ETHL dataset from here or the Virtual KITTI dataset from here

- ETHL only: rename `ethl1` and `ethl2` to `ethl1_static` and `ethl2_static`.

- ETHL only: update the local paths in `tools/make_ethl_real_sync.py` and run `python3 tools/make_ethl_real_sync.py` to generate a synchronized copy of the `real` sequences.

- Update the local paths in `run_cat_ethl.py` (or `run_cat_vkitti.py`) and run `python3 run_cat_ethl.py` (or `python3 run_cat_vkitti.py`) to start training.

- In another terminal, run `tensorboard --port [port] --logdir [path]` to start the visualization server, where `[port]` should be replaced by a numeric value (e.g., 60006) and `[path]` should be replaced by your local results directory.

- Tune in to `localhost:[port]` and watch the action.
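The ETHL-only renaming step above can also be scripted. This is a minimal sketch, not part of the repository: `ETHL_ROOT` is a hypothetical placeholder for wherever you extracted the dataset, and `rename_ethl_static` is a hypothetical helper that skips sequences that are missing or already renamed.

```python
from pathlib import Path

# Hypothetical location of the downloaded ETHL dataset; change to your own path.
ETHL_ROOT = Path("/path/to/ethl")


def rename_ethl_static(root):
    """Rename ethl1 -> ethl1_static and ethl2 -> ethl2_static under root.

    Sequences that are missing, or whose target name already exists, are
    left alone. Returns the list of newly created directory paths.
    """
    renamed = []
    for name in ("ethl1", "ethl2"):
        src = root / name
        dst = root / f"{name}_static"
        if src.is_dir() and not dst.exists():
            src.rename(dst)
            renamed.append(dst)
    return renamed


if __name__ == "__main__":
    for path in rename_ethl_static(ETHL_ROOT):
        print("Renamed to", path)
```

Because the helper is idempotent, running it a second time does nothing, which makes it safe to keep in a setup script.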

Running the localization experiments

- Ensure the pyslam and liegroups packages are installed.

- Update the local paths in `make_localization_data.py` and run `python3 make_localization_data.py [dataset]` to compile the model outputs into a `localization_data` directory.

- Update the local paths in `run_localization_[dataset].py` and run `python3 run_localization_[dataset].py [rgb,cat]` (passing either `rgb` or `cat`) to compute visual odometry (VO) and localization results using either the original RGB images or the CAT-transformed images.

- You can compute localization errors against ground truth using the `compute_localization_errors.py` script, which generates CSV files and several plots. Update the local paths and run `python3 compute_localization_errors.py [dataset]`.
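The localization steps above can be chained in a small driver. This is a sketch, not part of the repository: it assumes it is run from the repository root after the local paths in each script have been updated, and the dataset names `ethl` and `vkitti` are inferred from the training script names rather than documented options. `localization_commands` and `run_all` are hypothetical helpers.

```python
import subprocess

# Assumed dataset names, inferred from the ETHL / Virtual KITTI training scripts.
DATASETS = ("ethl", "vkitti")
# Image types accepted by run_localization_[dataset].py, per the steps above.
MODES = ("rgb", "cat")


def localization_commands(dataset):
    """Build the command sequence for one dataset, in the order listed above."""
    if dataset not in DATASETS:
        raise ValueError(f"unknown dataset: {dataset!r}")
    cmds = [["python3", "make_localization_data.py", dataset]]
    for mode in MODES:
        cmds.append(["python3", f"run_localization_{dataset}.py", mode])
    cmds.append(["python3", "compute_localization_errors.py", dataset])
    return cmds


def run_all(dataset):
    """Run each step in turn, stopping at the first command that fails."""
    for cmd in localization_commands(dataset):
        print("$", " ".join(cmd))
        subprocess.run(cmd, check=True)  # raises CalledProcessError on failure
```

For example, `run_all("vkitti")` would prepare the localization data, run localization with both `rgb` and `cat` inputs, and then compute errors. Building the command lists separately from running them makes it easy to print or inspect the plan before launching long experiments.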

Citation

If you use this code in your research, please cite:

@article{2018_Clement_Learning,
  author = {Lee Clement and Jonathan Kelly},
  journal = {{IEEE} Robotics and Automation Letters},
  link = {https://arxiv.org/abs/1709.03009},
  title = {How to Train a {CAT}: Learning Canonical Appearance Transformations for Direct Visual Localization Under Illumination Change},
  year = {2018}
}