Practical Data Lake Iceberg, Lesson 30: MySQL -> Iceberg, time zone problems across different clients

2022-04-23 11:28:00 【*Spark*】

List of articles

Practical Data Lake Iceberg, Lesson 1: Introduction

Practical Data Lake Iceberg, Lesson 2: Iceberg's underlying data format on Hadoop

Practical Data Lake Iceberg, Lesson 3: Reading data from Kafka into Iceberg with SQL in the SQL client

Practical Data Lake Iceberg, Lesson 4: Reading data from Kafka into Iceberg with SQL in the SQL client (upgraded to Flink 1.12.7)

Practical Data Lake Iceberg, Lesson 5: Hive catalog features

Practical Data Lake Iceberg, Lesson 6: Solving failures when writing from Kafka to Iceberg

Practical Data Lake Iceberg, Lesson 7: Writing to Iceberg in real time

Practical Data Lake Iceberg, Lesson 8: Integrating Hive with Iceberg

Practical Data Lake Iceberg, Lesson 9: Merging small files

Practical Data Lake Iceberg, Lesson 10: Deleting snapshots

Practical Data Lake Iceberg, Lesson 11: Testing the complete partitioned-table workflow (generating data, creating tables, merging, deleting snapshots)

Practical Data Lake Iceberg, Lesson 12: What is a catalog?

Practical Data Lake Iceberg, Lesson 13: Metadata many times larger than the data files

Practical Data Lake Iceberg, Lesson 14: Merging metadata (solving metadata growth over time)

Practical Data Lake Iceberg, Lesson 15: Installing Spark and integrating Iceberg (Jersey package conflict)

Practical Data Lake Iceberg, Lesson 16: Opening the door to understanding Iceberg through Spark 3

Practical Data Lake Iceberg, Lesson 17: Configuring Iceberg on Hadoop 2.7 with Spark 3 on YARN

Practical Data Lake Iceberg, Lesson 18: Startup commands for multiple clients interacting with Iceberg (common commands)

Practical Data Lake Iceberg, Lesson 19: Flink count on Iceberg returns no result

Practical Data Lake Iceberg, Lesson 20: Flink + Iceberg CDC scenario (version problems, test failed)

Practical Data Lake Iceberg, Lesson 21: Flink 1.13.5 + Iceberg 0.131 CDC (INSERT test succeeded, change operations failed)

Practical Data Lake Iceberg, Lesson 22: Flink 1.13.5 + Iceberg 0.131 CDC (CRUD test succeeded)

Practical Data Lake Iceberg, Lesson 23: Restarting Flink SQL from a checkpoint

Practical Data Lake Iceberg, Lesson 24: Detailed analysis of Iceberg metadata

Practical Data Lake Iceberg, Lesson 25: Running Flink SQL in the background and the effect of inserts, deletes, and updates

Practical Data Lake Iceberg, Lesson 26: How to set up checkpoints

Practical Data Lake Iceberg, Lesson 27: Restarting the Flink CDC test program after a failure and resuming from the last checkpoint

Practical Data Lake Iceberg, Lesson 28: Deploying packages that are missing from public repositories to a local repository

Practical Data Lake Iceberg, Lesson 29: How to obtain the Flink jobId elegantly and efficiently

Practical Data Lake Iceberg, Lesson 30: MySQL -> Iceberg, time zone problems across different clients

List of articles

- List of articles

- Preface

- I. Querying Iceberg data from Spark: times are shifted by +8 hours because of the time zone

- II. Querying with Flink SQL: the times are correct

- III. Forcing a time zone onto the source table raises an error

- IV. No time zone on the upstream table, time zone on the downstream table

- Conclusion

Preface

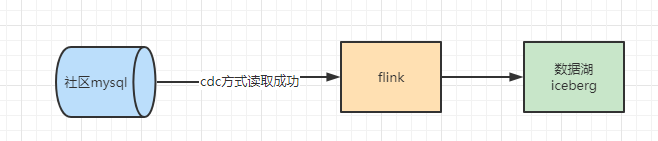

The pipeline is mysql -> flink-sql-cdc -> iceberg. Querying the data from Flink shows the correct times, but querying from spark-sql shows them shifted by +8 hours. This article records the problem.

The final solution: declare the source table's time columns without a time zone, and declare the downstream table's time columns with a local time zone. With that, the problem goes away.

I. Querying Iceberg data from Spark: times are shifted by +8 hours because of the time zone

1. Querying an Iceberg table that has timestamp columns from Spark SQL raises a time zone error

java.lang.IllegalArgumentException: Cannot handle timestamp without timezone fields in Spark. Spark does not natively support this type but if you would like to handle all timestamps as timestamp with timezone set 'spark.sql.iceberg.handle-timestamp-without-timezone' to true. This will not change the underlying values stored but will change their displayed values in Spark. For more information please see https://docs.databricks.com/spark/latest/dataframes-datasets/dates-timestamps.html#ansi-sql-and-spark-sql-timestamps

at org.apache.iceberg.relocated.com.google.common.base.Preconditions.checkArgument(Preconditions.java:142)

at org.apache.iceberg.spark.source.SparkBatchScan.readSchema(SparkBatchScan.java:127)

at org.apache.spark.sql.execution.datasources.v2.PushDownUtils$.pruneColumns(PushDownUtils.scala:136)

at org.apache.spark.sql.execution.datasources.v2.V2ScanRelationPushDown$$anonfun$applyColumnPruning$1.applyOrElse(V2ScanRelationPushDown.scala:191)

at org.apache.spark.sql.execution.datasources.v2.V2ScanRelationPushDown$$anonfun$applyColumnPruning$1.applyOrElse(V2ScanRelationPushDown.scala:184)

at org.apache.spark.sql.catalyst.trees.TreeNode.$anonfun$transformDownWithPruning$1(TreeNode.scala:481)

at org.apache.spark.sql.catalyst.trees.CurrentOrigin$.withOrigin(TreeNode.scala:82)

at org.apache.spark.sql.catalyst.trees.TreeNode.transformDownWithPruning(TreeNode.scala:481)

at org.apache.spark.sql.catalyst.plans.logical.LogicalPlan.org$apache$spark$sql$catalyst$plans$logical$AnalysisHelper$$super$transformDownWithPruning(LogicalPlan.scala:30)

at org.apache.spark.sql.catalyst.plans.logical.AnalysisHelper.transformDownWithPruning(AnalysisHelper.scala:267)

at org.apache.spark.sql.catalyst.plans.logical.AnalysisHelper.transformDownWithPruning$(AnalysisHelper.scala:263)

at org.apache.spark.sql.catalyst.plans.logical.LogicalPlan.transformDownWithPruning(LogicalPlan.scala:30)

at org.apache.spark.sql.catalyst.plans.logical.LogicalPlan.transformDownWithPruning(LogicalPlan.scala:30)

at org.apache.spark.sql.catalyst.trees.TreeNode.transformDown(TreeNode.scala:457)

at org.apache.spark.sql.catalyst.trees.TreeNode.transform(TreeNode.scala:425)

at org.apache.spark.sql.execution.datasources.v2.V2ScanRelationPushDown$.applyColumnPruning(V2ScanRelationPushDown.scala:184)

at org.apache.spark.sql.execution.datasources.v2.V2ScanRelationPushDown$.apply(V2ScanRelationPushDown.scala:39)

at org.apache.spark.sql.execution.datasources.v2.V2ScanRelationPushDown$.apply(V2ScanRelationPushDown.scala:35)

at org.apache.spark.sql.catalyst.rules.RuleExecutor.$anonfun$execute$2(RuleExecutor.scala:211)

at scala.collection.LinearSeqOptimized.foldLeft(LinearSeqOptimized.scala:126)

at scala.collection.LinearSeqOptimized.foldLeft$(LinearSeqOptimized.scala:122)

at scala.collection.immutable.List.foldLeft(List.scala:91)

at org.apache.spark.sql.catalyst.rules.RuleExecutor.$anonfun$execute$1(RuleExecutor.scala:208)

at org.apache.spark.sql.catalyst.rules.RuleExecutor.$anonfun$execute$1$adapted(RuleExecutor.scala:200)

at scala.collection.immutable.List.foreach(List.scala:431)

at org.apache.spark.sql.catalyst.rules.RuleExecutor.execute(RuleExecutor.scala:200)

at org.apache.spark.sql.catalyst.rules.RuleExecutor.$anonfun$executeAndTrack$1(RuleExecutor.scala:179)

at org.apache.spark.sql.catalyst.QueryPlanningTracker$.withTracker(QueryPlanningTracker.scala:88)

at org.apache.spark.sql.catalyst.rules.RuleExecutor.executeAndTrack(RuleExecutor.scala:179)

at org.apache.spark.sql.execution.QueryExecution.$anonfun$optimizedPlan$1(QueryExecution.scala:138)

at org.apache.spark.sql.catalyst.QueryPlanningTracker.measurePhase(QueryPlanningTracker.scala:111)

at org.apache.spark.sql.execution.QueryExecution.$anonfun$executePhase$1(QueryExecution.scala:196)

at org.apache.spark.sql.SparkSession.withActive(SparkSession.scala:775)

at org.apache.spark.sql.execution.QueryExecution.executePhase(QueryExecution.scala:196)

at org.apache.spark.sql.execution.QueryExecution.optimizedPlan$lzycompute(QueryExecution.scala:134)

at org.apache.spark.sql.execution.QueryExecution.optimizedPlan(QueryExecution.scala:130)

at org.apache.spark.sql.execution.QueryExecution.assertOptimized(QueryExecution.scala:148)

at org.apache.spark.sql.execution.QueryExecution.$anonfun$executedPlan$1(QueryExecution.scala:166)

at org.apache.spark.sql.execution.QueryExecution.withCteMap(QueryExecution.scala:73)

at org.apache.spark.sql.execution.QueryExecution.executedPlan$lzycompute(QueryExecution.scala:163)

at org.apache.spark.sql.execution.QueryExecution.executedPlan(QueryExecution.scala:163)

at org.apache.spark.sql.execution.SQLExecution$.$anonfun$withNewExecutionId$5(SQLExecution.scala:101)

at org.apache.spark.sql.execution.SQLExecution$.withSQLConfPropagated(SQLExecution.scala:163)

at org.apache.spark.sql.execution.SQLExecution$.$anonfun$withNewExecutionId$1(SQLExecution.scala:90)

at org.apache.spark.sql.SparkSession.withActive(SparkSession.scala:775)

at org.apache.spark.sql.execution.SQLExecution$.withNewExecutionId(SQLExecution.scala:64)

at org.apache.spark.sql.hive.thriftserver.SparkSQLDriver.run(SparkSQLDriver.scala:69)

at org.apache.spark.sql.hive.thriftserver.SparkSQLCLIDriver.processCmd(SparkSQLCLIDriver.scala:384)

at org.apache.spark.sql.hive.thriftserver.SparkSQLCLIDriver.$anonfun$processLine$1(SparkSQLCLIDriver.scala:504)

at org.apache.spark.sql.hive.thriftserver.SparkSQLCLIDriver.$anonfun$processLine$1$adapted(SparkSQLCLIDriver.scala:498)

at scala.collection.Iterator.foreach(Iterator.scala:943)

at scala.collection.Iterator.foreach$(Iterator.scala:943)

at scala.collection.AbstractIterator.foreach(Iterator.scala:1431)

at scala.collection.IterableLike.foreach(IterableLike.scala:74)

at scala.collection.IterableLike.foreach$(IterableLike.scala:73)

at scala.collection.AbstractIterable.foreach(Iterable.scala:56)

at org.apache.spark.sql.hive.thriftserver.SparkSQLCLIDriver.processLine(SparkSQLCLIDriver.scala:498)

at org.apache.spark.sql.hive.thriftserver.SparkSQLCLIDriver$.main(SparkSQLCLIDriver.scala:287)

at org.apache.spark.sql.hive.thriftserver.SparkSQLCLIDriver.main(SparkSQLCLIDriver.scala)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.spark.deploy.JavaMainApplication.start(SparkApplication.scala:52)

at org.apache.spark.deploy.SparkSubmit.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:955)

at org.apache.spark.deploy.SparkSubmit.doRunMain$1(SparkSubmit.scala:180)

at org.apache.spark.deploy.SparkSubmit.submit(SparkSubmit.scala:203)

at org.apache.spark.deploy.SparkSubmit.doSubmit(SparkSubmit.scala:90)

at org.apache.spark.deploy.SparkSubmit$$anon$2.doSubmit(SparkSubmit.scala:1043)

at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:1052)

at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)

2. Following the hint in the error message, enable handling of timestamps without time zone

set spark.sql.iceberg.handle-timestamp-without-timezone=true;

spark-sql (default)> set `spark.sql.iceberg.handle-timestamp-without-timezone`=true;

key value

spark.sql.iceberg.handle-timestamp-without-timezone true

Time taken: 0.016 seconds, Fetched 1 row(s)

spark-sql (default)> select * from stock_basic2_iceberg_sink;

i ts_code symbol name area industry list_date actural_controller update_time update_timestamp

0 000001.SZ 000001 Ping An Bank Shenzhen Bank 19910403 NULL 2022-04-14 03:53:24 2022-04-21 00:58:59

1 000002.SZ 000002 vanke A Shenzhen National real estate 19910129 NULL 2022-04-14 03:53:31 2022-04-21 00:59:06

4 000006.SZ 000006 Shenzhenye A Shenzhen Regional property 19920427 State owned assets supervision and Administration Commission of Shenzhen Municipal People's government NULL NULL

2 000004.SZ 000004 Guohua Wangan Shenzhen Software services 19910114 Li Yingtong 2022-04-14 03:53:34 2022-04-21 00:59:11

3 000005.SZ 000005 ST Star source Shenzhen Environmental protection 19901210 Zheng lielie , Ding Peng 2022-04-14 03:53:40 2022-04-22 00:59:15

Time taken: 1.856 seconds, Fetched 5 row(s)

The times in the data lake clearly differ from those in MySQL: Spark shows the Iceberg times 8 hours later.

Conclusion: simply treating the columns as timestamps with time zone is not enough.
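The 8-hour shift can be reproduced outside Spark. The following is a minimal sketch, not Spark's actual code path: it assumes the stored zone-less value is read as a UTC instant and then rendered in the session time zone, and that the session time zone here is Asia/Shanghai. The class name, the method name, and the MySQL value `2022-04-13 19:53:24` (obtained by subtracting 8 hours from the first row above) are illustrative assumptions.

```java
import java.time.LocalDateTime;
import java.time.ZoneId;
import java.time.ZoneOffset;
import java.time.format.DateTimeFormatter;

// Hypothetical demo class; it is not part of Spark or Iceberg.
class TimestampShiftDemo {
    private static final DateTimeFormatter FMT =
            DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss");

    // Read a zone-less wall-clock value as a UTC instant, then render it in the session zone.
    static String renderInSessionZone(String storedWallClock, ZoneId sessionZone) {
        LocalDateTime stored = LocalDateTime.parse(storedWallClock, FMT);
        return stored.atOffset(ZoneOffset.UTC)
                .atZoneSameInstant(sessionZone)
                .format(FMT);
    }

    public static void main(String[] args) {
        // Assumed MySQL value: the Spark output above minus 8 hours.
        String stored = "2022-04-13 19:53:24";
        System.out.println(renderInSessionZone(stored, ZoneId.of("UTC")));           // 2022-04-13 19:53:24
        System.out.println(renderInSessionZone(stored, ZoneId.of("Asia/Shanghai"))); // 2022-04-14 03:53:24
    }
}
```

Under these assumptions the stored value is unchanged, which matches the error message's statement that only the displayed values change.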

3. Changing the local time zone

With spark.sql.iceberg.handle-timestamp-without-timezone=true still in effect, I then set the local time zone:

SET `table.local-time-zone` = 'Asia/Shanghai';

It had no effect on the query results.

Setting the Shanghai time zone:

spark-sql (default)> SET `table.local-time-zone` = 'Asia/Shanghai';

key value

table.local-time-zone 'Asia/Shanghai'

Time taken: 0.014 seconds, Fetched 1 row(s)

spark-sql (default)> select * from stock_basic2_iceberg_sink;

i ts_code symbol name area industry list_date actural_controller update_time update_timestamp

0 000001.SZ 000001 Ping An Bank Shenzhen Bank 19910403 NULL 2022-04-14 03:53:24 2022-04-21 00:58:59

1 000002.SZ 000002 vanke A Shenzhen National real estate 19910129 NULL 2022-04-14 03:53:31 2022-04-21 00:59:06

4 000006.SZ 000006 Shenzhenye A Shenzhen Regional property 19920427 State owned assets supervision and Administration Commission of Shenzhen Municipal People's government NULL NULL

2 000004.SZ 000004 Guohua Wangan Shenzhen Software services 19910114 Li Yingtong 2022-04-14 03:53:34 2022-04-21 00:59:11

3 000005.SZ 000005 ST Star source Shenzhen Environmental protection 19901210 Zheng lielie , Ding Peng 2022-04-14 03:53:40 2022-04-22 00:59:15

Time taken: 0.187 seconds, Fetched 5 row(s)

Setting the UTC time zone:

spark-sql (default)> SET `table.local-time-zone` = 'UTC';

key value

table.local-time-zone 'UTC'

Time taken: 0.015 seconds, Fetched 1 row(s)

spark-sql (default)> select * from stock_basic2_iceberg_sink;

i ts_code symbol name area industry list_date actural_controller update_time update_timestamp

0 000001.SZ 000001 Ping An Bank Shenzhen Bank 19910403 NULL 2022-04-14 03:53:24 2022-04-21 00:58:59

1 000002.SZ 000002 vanke A Shenzhen National real estate 19910129 NULL 2022-04-14 03:53:31 2022-04-21 00:59:06

4 000006.SZ 000006 Shenzhenye A Shenzhen Regional property 19920427 State owned assets supervision and Administration Commission of Shenzhen Municipal People's government NULL NULL

2 000004.SZ 000004 Guohua Wangan Shenzhen Software services 19910114 Li Yingtong 2022-04-14 03:53:34 2022-04-21 00:59:11

3 000005.SZ 000005 ST Star source Shenzhen Environmental protection 19901210 Zheng lielie , Ding Peng 2022-04-14 03:53:40 2022-04-22 00:59:15

Time taken: 0.136 seconds, Fetched 5 row(s)

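Setting the time zone had no effect in either case. Note that `table.local-time-zone` is a Flink SQL option; Spark accepts the SET and stores the key but does not use it, which would explain the unchanged output.

Whether spark.sql.session.timeZone changes the result remains to be tested.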

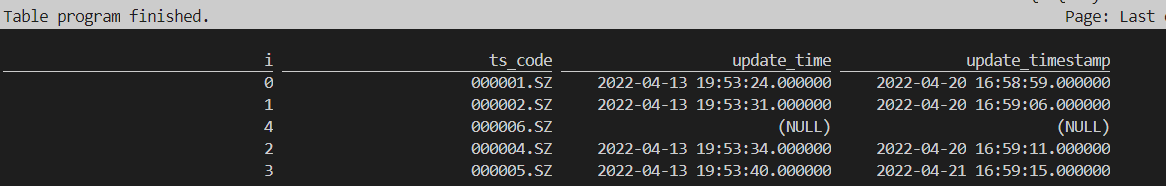

II. Querying with Flink SQL: the times are correct

Conclusion: the time values match those on the MySQL side:

1. Flink SQL query results:

Flink SQL> select i,ts_code,update_time,update_timestamp from stock_basic2_iceberg_sink;

2. MySQL query results:

Conclusion: the time values match those on the MySQL side.

III. Forcing a time zone onto the source table raises an error

Change TIMESTAMP to TIMESTAMP_LTZ in the source table:

// Flink SQL DDL for the MySQL CDC source, with both time columns declared as TIMESTAMP_LTZ.

String createSql = "CREATE TABLE stock_basic_source(\n" +

" `i` INT NOT NULL,\n" +

" `ts_code` CHAR(10) NOT NULL,\n" +

" `symbol` CHAR(10) NOT NULL,\n" +

" `name` char(10) NOT NULL,\n" +

" `area` CHAR(20) NOT NULL,\n" +

" `industry` CHAR(20) NOT NULL,\n" +

" `list_date` CHAR(10) NOT NULL,\n" +

" `actural_controller` CHAR(100),\n" +

" `update_time` TIMESTAMP_LTZ,\n" +

" `update_timestamp` TIMESTAMP_LTZ,\n" +

" PRIMARY KEY(i) NOT ENFORCED\n" +

") WITH (\n" +

" 'connector' = 'mysql-cdc',\n" +

" 'hostname' = 'hadoop103',\n" +

" 'port' = '3306',\n" +

" 'username' = 'xxxxx',\n" +

" 'password' = 'XXXx',\n" +

" 'database-name' = 'xxzh_stock',\n" +

" 'table-name' = 'stock_basic2'\n" +

")" ;

Running it raises an error:

Caused by: java.lang.IllegalArgumentException: Unable to convert to TimestampData from unexpected value '1649879611000' of type java.lang.Long

at com.ververica.cdc.debezium.table.RowDataDebeziumDeserializeSchema$12.convert(RowDataDebeziumDeserializeSchema.java:504)

at com.ververica.cdc.debezium.table.RowDataDebeziumDeserializeSchema$17.convert(RowDataDebeziumDeserializeSchema.java:641)

at com.ververica.cdc.debezium.table.RowDataDebeziumDeserializeSchema.convertField(RowDataDebeziumDeserializeSchema.java:626)

at com.ververica.cdc.debezium.table.RowDataDebeziumDeserializeSchema.access$000(RowDataDebeziumDeserializeSchema.java:63)

at com.ververica.cdc.debezium.table.RowDataDebeziumDeserializeSchema$16.convert(RowDataDebeziumDeserializeSchema.java:611)

at com.ververica.cdc.debezium.table.RowDataDebeziumDeserializeSchema$17.convert(RowDataDebeziumDeserializeSchema.java:641)

at com.ververica.cdc.debezium.table.RowDataDebeziumDeserializeSchema.extractAfterRow(RowDataDebeziumDeserializeSchema.java:146)

at com.ververica.cdc.debezium.table.RowDataDebeziumDeserializeSchema.deserialize(RowDataDebeziumDeserializeSchema.java:121)

at com.ververica.cdc.connectors.mysql.source.reader.MySqlRecordEmitter.emitElement(MySqlRecordEmitter.java:118)

at com.ververica.cdc.connectors.mysql.source.reader.MySqlRecordEmitter.emitRecord(MySqlRecordEmitter.java:100)

at com.ververica.cdc.connectors.mysql.source.reader.MySqlRecordEmitter.emitRecord(MySqlRecordEmitter.java:54)

at org.apache.flink.connector.base.source.reader.SourceReaderBase.pollNext(SourceReaderBase.java:128)

at org.apache.flink.streaming.api.operators.SourceOperator.emitNext(SourceOperator.java:294)

at org.apache.flink.streaming.runtime.io.StreamTaskSourceInput.emitNext(StreamTaskSourceInput.java:69)

at org.apache.flink.streaming.runtime.io.StreamOneInputProcessor.processInput(StreamOneInputProcessor.java:66)

at org.apache.flink.streaming.runtime.tasks.StreamTask.processInput(StreamTask.java:423)

at org.apache.flink.streaming.runtime.tasks.mailbox.MailboxProcessor.runMailboxLoop(MailboxProcessor.java:204)

at org.apache.flink.streaming.runtime.tasks.StreamTask.runMailboxLoop(StreamTask.java:684)

at org.apache.flink.streaming.runtime.tasks.StreamTask.executeInvoke(StreamTask.java:639)

at org.apache.flink.streaming.runtime.tasks.StreamTask.runWithCleanUpOnFail(StreamTask.java:650)

at org.apache.flink.streaming.runtime.tasks.StreamTask.invoke(StreamTask.java:623)

at org.apache.flink.runtime.taskmanager.Task.doRun(Task.java:779)

at org.apache.flink.runtime.taskmanager.Task.run(Task.java:566)

at java.lang.Thread.run(Thread.java:748)

22/04/21 10:49:30 INFO akka.AkkaRpcService: Stopping Akka RPC service.
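The Long in the exception message looks like an epoch-milliseconds value. As a minimal sketch, assuming the CDC connector hands over a zone-less MySQL datetime as epoch milliseconds read as UTC (an assumption about the connector, not something the trace itself confirms), the value can be decoded with plain `java.time`. The class and method names are hypothetical.

```java
import java.time.Instant;
import java.time.LocalDateTime;
import java.time.ZoneOffset;

// Hypothetical helper class; it is not part of the CDC connector.
class DebeziumEpochDecode {
    // Decode epoch milliseconds into a zone-less wall-clock time by reading them as UTC.
    static LocalDateTime toWallClock(long epochMillis) {
        return LocalDateTime.ofInstant(Instant.ofEpochMilli(epochMillis), ZoneOffset.UTC);
    }

    public static void main(String[] args) {
        // Value copied from the exception message above.
        System.out.println(toWallClock(1649879611000L)); // 2022-04-13T19:53:31
    }
}
```

The decoded value, 2022-04-13 19:53:31, is exactly 8 hours earlier than the 2022-04-14 03:53:31 that spark-sql displayed for row 1. That is consistent with the source column carrying a wall-clock time with no time zone, which suits a TIMESTAMP declaration rather than TIMESTAMP_LTZ.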

Can the time zone be added to the downstream table instead?

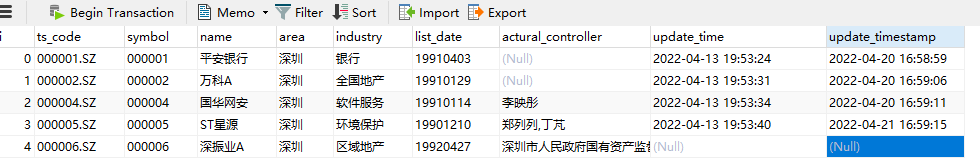

IV. No time zone on the upstream table, time zone on the downstream table

In the downstream table, the columns that correspond to MySQL's datetime and timestamp are changed from TIMESTAMP to TIMESTAMP_LTZ.

LTZ stands for LOCAL TIME ZONE.

MySQL table structure:

Source table (MySQL):

// Flink SQL DDL for the MySQL CDC source; the time columns stay TIMESTAMP (no time zone).

String createSql = "CREATE TABLE stock_basic_source(\n" +

" `i` INT NOT NULL,\n" +

" `ts_code` CHAR(10) NOT NULL,\n" +

" `symbol` CHAR(10) NOT NULL,\n" +

" `name` char(10) NOT NULL,\n" +

" `area` CHAR(20) NOT NULL,\n" +

" `industry` CHAR(20) NOT NULL,\n" +

" `list_date` CHAR(10) NOT NULL,\n" +

" `actural_controller` CHAR(100),\n" +

" `update_time` TIMESTAMP,\n" +

" `update_timestamp` TIMESTAMP,\n" +

" PRIMARY KEY(i) NOT ENFORCED\n" +

") WITH (\n" +

" 'connector' = 'mysql-cdc',\n" +

" 'hostname' = 'hadoop103',\n" +

" 'port' = '3306',\n" +

" 'username' = 'XX',\n" +

" 'password' = 'XX',\n" +

" 'database-name' = 'xxzh_stock',\n" +

" 'table-name' = 'stock_basic2'\n" +

")" ;

Downstream table:

// Flink SQL DDL for the Iceberg sink; the time columns are declared as TIMESTAMP_LTZ.

String createSQl = "CREATE TABLE if not exists stock_basic2_iceberg_sink(\n" +

" `i` INT NOT NULL,\n" +

" `ts_code` CHAR(10) NOT NULL,\n" +

" `symbol` CHAR(10) NOT NULL,\n" +

" `name` char(10) NOT NULL,\n" +

" `area` CHAR(20) NOT NULL,\n" +

" `industry` CHAR(20) NOT NULL,\n" +

" `list_date` CHAR(10) NOT NULL,\n" +

" `actural_controller` CHAR(100) ,\n" +

" `update_time` TIMESTAMP_LTZ,\n" +

" `update_timestamp` TIMESTAMP_LTZ,\n" +

" PRIMARY KEY(i) NOT ENFORCED\n" +

") with(\n" +

" 'write.metadata.delete-after-commit.enabled'='true',\n" +

" 'write.metadata.previous-versions-max'='5',\n" +

" 'format-version'='2'\n" +

")";

After testing, this works.

spark-sql query results:

flink-sql query results:

Conclusion

For date and time columns: declare them without a time zone (TIMESTAMP) in the source table, and with a local time zone (TIMESTAMP_LTZ) in the downstream table.

Copyright notice

This article was written by [*Spark*]. Please include a link to the original when reposting. Thank you.

https://yzsam.com/2022/04/202204231117528960.html
