DolphinScheduler: pitfalls when scheduling Spark tasks

2022-04-23 13:42:00 【Ruo Xiaoyu】

1. Deploying the worker that schedules Spark

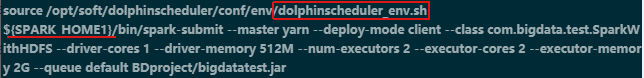

I tested DolphinScheduler in cluster mode: two machines run the master, two machines run the worker, and Hadoop and Spark are deployed on other machines. I was confused about how to set the Spark environment path in the dolphinscheduler_env.sh file. The first problem in the test run was that the spark-submit file could not be found:

command: line 5: /bin/spark-submit: No such file or directory

The log shows the scheduling process clearly: DS looks for the spark-submit script under the SPARK_HOME1 configured in dolphinscheduler_env.sh. Because Spark is installed on a different server, that path does not exist on the worker.
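The `/bin/spark-submit` in the error is consistent with the command being built as `${SPARK_HOME1}/bin/spark-submit` while SPARK_HOME1 is empty on the worker. That reading is an assumption drawn from the error text, not something the log states. A minimal sketch for checking this on a worker; the function names are hypothetical helpers, not part of DS:

```shell
# Hypothetical helper: show the path that results from the current SPARK_HOME1.
# With SPARK_HOME1 empty or unset this prints /bin/spark-submit.
spark_submit_path() {
  printf '%s/bin/spark-submit\n' "${SPARK_HOME1:-}"
}

# Hypothetical helper: report whether that path is an executable file here.
check_spark_submit() {
  path="$(spark_submit_path)"
  if [ -f "$path" ] && [ -x "$path" ]; then
    echo "ok: $path"
  else
    echo "missing or not executable: $path"
  fi
}
```

Running `check_spark_submit` as the DS user on each worker, after sourcing dolphinscheduler_env.sh, shows which nodes can actually reach Spark.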

So I thought of two solutions:

1. Copy the Spark installation package onto the worker. This may also require Hadoop YARN and other configuration.

2. Deploy an additional DolphinScheduler worker on the machine where the Spark client is installed. Then only DS's own configuration needs attention.

I chose option two.

Besides copying the worker node's installation files to the Spark client machine, follow the relevant installation steps:

- Create the same user as on the other nodes, for example dolphinscheduler.

- Give the dolphinscheduler user ownership of the DS installation directory.

- Update the /etc/hosts file on every node.

- Set up passwordless SSH login.

- Update the dolphinscheduler/conf/common.properties file on every DS node.

- Create the directories named in the configuration file, such as /tmp/dolphinscheduler, and grant permissions on them.

- Reconfigure the dolphinscheduler_env.sh file on the worker node and add the SPARK_HOME path.

- Restart the cluster.
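The local steps above can be sketched as a short plan. To stay safe, the function below only prints the commands rather than running them. The paths /opt/soft/dolphinscheduler, /opt/soft/spark and /tmp/dolphinscheduler appear elsewhere in this post; the location conf/env/dolphinscheduler_env.sh, the host name `ds-master1`, and the function name are assumptions for illustration and may differ in your installation.

```shell
# Values taken from this post where possible; adjust to your cluster.
DS_USER="dolphinscheduler"
DS_HOME="/opt/soft/dolphinscheduler"
DATA_DIR="/tmp/dolphinscheduler"
SPARK_DIR="/opt/soft/spark"
PEER_HOST="ds-master1"   # hypothetical host name of another DS node

# Print (do not execute) the commands for preparing the new worker.
worker_setup_plan() {
  cat <<EOF
useradd ${DS_USER}
chown -R ${DS_USER}:${DS_USER} ${DS_HOME}
ssh-copy-id ${DS_USER}@${PEER_HOST}
mkdir -p ${DATA_DIR}
chown -R ${DS_USER}:${DS_USER} ${DATA_DIR}
echo 'export SPARK_HOME1=${SPARK_DIR}' >> ${DS_HOME}/conf/env/dolphinscheduler_env.sh
EOF
}
```

Calling `worker_setup_plan` lists the commands for review. Editing /etc/hosts and common.properties and restarting the cluster are left out because they depend on the cluster layout.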

2. spark-submit execute permission problem

My Spark test task also operates on HDFS during submission and execution, so the tenant running it is bigdata, which has HDFS permissions.

Running Spark failed with:

/opt/soft/spark/bin/spark-submit: Permission denied

At first I thought I had chosen the wrong tenant. But bigdata is deployed together with Hadoop, and the bigdata user also has Spark permissions, so the user was clearly not the problem. That left the execute permission on spark-submit, so I granted users execute permission:

- chmod 755 spark
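Before and after changing the mode, it can help to confirm whether the file is actually executable. "Permission denied" can also come from a parent directory that the user cannot traverse. A minimal sketch; `can_execute` is a hypothetical helper name:

```shell
# Hypothetical helper: succeed only if the path is a regular file
# that the current user is allowed to execute.
can_execute() {
  [ -f "$1" ] && [ -x "$1" ]
}

# Hypothetical helper: print a one-line verdict for a path.
report_execute() {
  if can_execute "$1"; then
    echo "executable: $1"
  else
    echo "not executable: $1"
  fi
}
```

For example, `report_execute /opt/soft/spark/bin/spark-submit`, run as the tenant user (bigdata in this post), shows whether the fix took effect for that user.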

3. The Spark task clearly succeeded, but the DS UI still shows failure

While it ran, I found that my Spark task had written the processed file to the HDFS directory, as my task logic intended. The log shows that the task succeeded, but it contains one error:

[ERROR] 2021-11-15 16:16:26.012 - [taskAppId=TASK-3-43-72]:[418] - yarn applications: application_1636962361310_0001 , query status failed, exception:{

}

java.lang.Exception: yarn application url generation failed

at org.apache.dolphinscheduler.common.utils.HadoopUtils.getApplicationUrl(HadoopUtils.java:208)

at org.apache.dolphinscheduler.common.utils.HadoopUtils.getApplicationStatus(HadoopUtils.java:418)

at org.apache.dolphinscheduler.server.worker.task.AbstractCommandExecutor.isSuccessOfYarnState(AbstractCommandExecutor.java:404)

The error shows that DS queries the application's state at a YARN address and displays the execution result once it has that state. It could not get the state, so the next step was to see where it looks and whether that address can be configured.

I checked the source code and found the method HadoopUtils.getApplicationUrl.

Its appaddress is read from the yarn.application.status.address configuration parameter.

I found the default configuration in the source code. The comment says the default value can be kept in HA mode, but my YARN is not installed on ds1, so here the value needs to be changed to my own YARN address.

Set this parameter in the /opt/soft/dolphinscheduler/conf/common.properties file on the worker node that schedules Spark:

# if resourcemanager HA is enabled or not use resourcemanager, please keep the default value; If resourcemanager is single, you only need to replace ds1 to actual resourcemanager hostname

yarn.application.status.address=http://ds1:%s/ws/v1/cluster/apps/%s
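To see which URL this template produces, the two `%s` placeholders can be filled by hand. The sketch below assumes the first placeholder is the ResourceManager web port (8088 is YARN's usual default) and the second is the application id. That reading comes from the shape of the URL, not from the DS source, and `yarn_app_url` is a hypothetical helper:

```shell
# Template copied from common.properties; replace ds1 with your
# actual ResourceManager host name.
YARN_STATUS_TEMPLATE='http://ds1:%s/ws/v1/cluster/apps/%s'

# Hypothetical helper: fill in the port ($1) and application id ($2).
yarn_app_url() {
  printf "${YARN_STATUS_TEMPLATE}\n" "$1" "$2"
}
```

Fetching the result of `yarn_app_url 8088 application_1636962361310_0001` with `curl -s` from the worker should return JSON from the YARN REST API, with the application's `state` and `finalStatus` under the `app` key. If the request fails from the worker, DS has no way to read the status either.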

Copyright notice

This article was written by [Ruo Xiaoyu]. Please include a link to the original when reposting. Thanks.

https://yzsam.com/2022/04/202204230602186775.html