
Using OES Textures + GLSurfaceView + JNI to Implement OpenGL ES-Based Picture Processing for a Player

2022-04-23 19:14:00 【cjzcjl】

Preface:

When Android's SurfaceView + SurfaceTexture + Surface combination is used for video playback or camera preview, you only get the original picture. Many situations, however, require further processing of the picture, such as adjusting the proportions of the red, green, and blue primaries, adding filters, or reducing noise. A GLSurfaceView combined with GPU-based real-time processing in the GL Shading Language (GLSL) meets this need quickly and power-efficiently. The result looks like this:

Android: using GLSurfaceView + an OpenGL fragment shader to adjust the white balance, contrast, and brightness of the MediaPlayer picture during playback

I. Prerequisites:

0. What is an OES texture?

2. What is a BufferQueue?

//todo

3. What is a Surface?

//todo

4. What is a SurfaceTexture?

Purpose: an OES texture is a relatively easy way to get frames from the Android camera or the picture a player is playing. Once the OES texture is fed into the fragment shader, all kinds of image processing can be done while sampling it, such as noise reduction, skin smoothing, and filters. This makes it a common entry point for complex real-time image processing on Android. If the image-processing and OpenGL code is written in C++ with the NDK, it becomes cross-platform: it runs on Android and can be reused on other platforms.

In fact, Android's GLES30, GLES20, and similar classes are themselves only JNI wrappers around the native GL functions. Once the GLSurfaceView has been set up successfully, its EGLContext exists and is current on the GLSurfaceView's render thread. From then on, calling GLES20 and the like, or calling the C GL functions directly through JNI, is essentially equivalent. The only conditions are that the call is made in the same process, on that thread, with the same EGLContext current.
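As a concrete example of processing done "while sampling", a 3×3 convolution reads each pixel's neighbours and combines them with a kernel. The sketch below is a CPU reference of that per-pixel computation, not the author's shader (the RenderProgramConvolution shader is not shown in this article). It uses the kernel from the RENDER_CONVOLUTION case of makeRender later in the article. The `Image` struct, the `convolve3x3` name, and the clamp-to-edge border handling (chosen to mirror GL_CLAMP_TO_EDGE) are assumptions of this sketch.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical single-channel image, values in [0, 1], stored row by row.
struct Image {
    int width;
    int height;
    std::vector<float> pixels;

    float at(int x, int y) const {
        // Clamp coordinates to the border, like GL_CLAMP_TO_EDGE sampling.
        x = std::max(0, std::min(x, width - 1));
        y = std::max(0, std::min(y, height - 1));
        return pixels[static_cast<std::size_t>(y) * width + x];
    }
};

// Applies a row-major 3x3 kernel to every pixel. The result is clamped to [0, 1],
// as a fragment shader output is when written to an 8-bit framebuffer.
Image convolve3x3(const Image& src, const float kernel[9]) {
    assert(src.width > 0 && src.height > 0);
    Image dst{src.width, src.height, std::vector<float>(src.pixels.size())};
    for (int y = 0; y < src.height; ++y) {
        for (int x = 0; x < src.width; ++x) {
            float sum = 0.0f;
            for (int ky = -1; ky <= 1; ++ky) {
                for (int kx = -1; kx <= 1; ++kx) {
                    sum += kernel[(ky + 1) * 3 + (kx + 1)] * src.at(x + kx, y + ky);
                }
            }
            dst.pixels[static_cast<std::size_t>(y) * src.width + x] = std::max(0.0f, std::min(sum, 1.0f));
        }
    }
    return dst;
}

// Kernel from the RENDER_CONVOLUTION case: the weights sum to 1 (8 * 1 - 7).
const float kEdgeKernel[9] = {
    1.0f, 1.0f, 1.0f,
    1.0f, -7.0f, 1.0f,
    1.0f, 1.0f, 1.0f,
};
```

With this kernel each output equals `center + 8 * (neighbourMean - center)`. A flat 0.5 patch therefore stays 0.5, while an isolated bright pixel on black turns black and its neighbours turn white.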

5. How frames get from the player into the OES texture:

//todo

6. Why use JNI:

GL syntax is basically the same on every platform, so OpenGL processing logic written once in C/C++ can be used across platforms. The GLES20/GLES30 classes, on the other hand, are Android's own JNI bridge and cannot be used directly on other platforms such as the PC. That is why I think it is reasonable to write the GL code natively and call it through JNI.

II. Implementation:

0. Creating the GLSurfaceView and its renderer, GLSurfaceView.Renderer:

First create the GLSurfaceView. GLSurfaceView creates the EGLContext for you. After that, you can freely use the GL API for all kinds of drawing on its render thread. A GLSurfaceView must also be told how to render, so we implement a GLSurfaceView.Renderer and set it as the renderer of our GLSurfaceView instance. The Renderer has to implement the following methods:

onSurfaceCreated: called after the GLSurfaceView's surface has been created, which makes it the place for initialization. Here, for example, I create an OES texture (OES_TEXTURE), wrap its texture name in a SurfaceTexture, and wrap that in turn in a Surface. That Surface then serves as the bridge through which MediaPlayer or the camera API writes data, so camera or player frames are written into the OES texture. OpenGL can use the same texture name as a texture (the shader has to declare the external-image extension). The fragment shader can then apply all kinds of operations to the picture, such as noise reduction, convolution-based image processing, filters, and color adjustment. I also create a MediaPlayer instance here for the experiment.

onSurfaceChanged: called when the size of the GLSurfaceView changes. Use the width and height passed in to update the GL viewport size and similar state.

onDrawFrame: called on the GL thread that owns the EGLContext every time a frame needs to be drawn, and this is where the drawing code goes. All drawing must happen on the GL thread, so this callback is the starting point of every drawing operation. Issuing GL drawing calls from any other thread is not allowed: the code compiles without complaint, but it will not do what you expect. In addition, SurfaceTexture only pulls a new frame from its BufferQueue when updateTexImage is called, so the per-frame draw callback is the natural place for that call.
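Because GL calls are only valid on the GL thread, parameter changes coming from other threads (UI events, player callbacks) are usually handed over to the render thread instead of being executed directly. On the Java side, `GLSurfaceView.queueEvent(Runnable)` does exactly that. Below is a minimal C++ sketch of the same pattern on the native side: any thread may post work, and the render callback drains the queue at the start of each frame. `GLTaskQueue` and its methods are hypothetical names, not part of the article's code.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <mutex>
#include <utility>
#include <vector>

// Hypothetical queue of work that must run on the thread that owns the EGLContext.
class GLTaskQueue {
public:
    // Safe to call from any thread (UI thread, player callbacks, ...).
    void post(std::function<void()> task) {
        std::lock_guard<std::mutex> lock(mMutex);
        mTasks.push_back(std::move(task));
    }

    // Call only from the GL thread, e.g. at the start of the per-frame draw function.
    // Returns the number of tasks that were executed.
    std::size_t drain() {
        std::vector<std::function<void()>> pending;
        {
            std::lock_guard<std::mutex> lock(mMutex);
            pending.swap(mTasks); // take the tasks out so they run without holding the lock
        }
        for (auto& task : pending) {
            task(); // any GL calls made here run on the GL thread
        }
        return pending.size();
    }

private:
    std::mutex mMutex;
    std::vector<std::function<void()>> mTasks;
};
```

For example, a JNI setter could post a lambda that updates a renderer and return immediately. The next frame then applies the change on the render thread, which also avoids data races with the drawing code.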

The complete GLSurfaceView code is as follows:

```java
package com.opengldecoder.jnibridge;

import android.content.Context;
import android.graphics.Bitmap;
import android.graphics.SurfaceTexture;
import android.media.MediaPlayer;
import android.opengl.GLES11Ext;
import android.opengl.GLES30;
import android.opengl.GLSurfaceView;
import android.util.AttributeSet;
import android.util.Log;
import android.view.MotionEvent;
import android.view.Surface;

import java.nio.ByteBuffer;

import javax.microedition.khronos.egl.EGLConfig;
import javax.microedition.khronos.opengles.GL10;

public class NativeGLSurfaceView extends GLSurfaceView {

    private Bitmap mTestBmp;
    private Renderer mRenderer;

    /** Native pointers of the layer and the renderers (Long.MIN_VALUE means "not created yet") **/
    private long mLayer = Long.MIN_VALUE;
    private long mRenderOES = Long.MIN_VALUE;
    private long mRenderNoiseReduction = Long.MIN_VALUE;
    private long mRenderConvolutionDemo = Long.MIN_VALUE;
    private long mRenderLut = Long.MIN_VALUE;
    private long mRenderDeBackground = Long.MIN_VALUE;

    // Surface through which Android writes the picture data
    private Surface mDataInputSurface = null;
    // Texture that receives the Android picture data
    private int[] mDataInputTexturesPointer = null;
    private SurfaceTexture mInputDataSurfaceTexture;
    private Player mDemoPlayer;
    private float mdx, mdy;
    private float mPrevX, mPrevY;

    public NativeGLSurfaceView(Context context) {
        super(context);
        init(); // without this, a view created from code would have no renderer
    }

    public NativeGLSurfaceView(Context context, AttributeSet attrs) {
        super(context, attrs);
        init();
        Log.i("cjztest", "NativeGLSurfaceView222");
    }

    private void init() {
        this.setEGLContextClientVersion(3); // OpenGL ES 3.0 requires this parameter to be 3
        mRenderer = new Renderer(); // create the Renderer object
        this.setRenderer(mRenderer); // set it as this view's renderer
        this.setRenderMode(GLSurfaceView.RENDERMODE_CONTINUOUSLY);
    }

    public Surface getSurface() {
        // String concatenation instead of toString() avoids a NullPointerException before the Surface exists
        Log.i("cjztest", "GLRenderer.getSurface:" + mDataInputSurface);
        return mDataInputSurface;
    }

    /** Brightness adjustment **/
    public void setRenderBrightness(float brightness) {
        if (mRenderOES != Long.MIN_VALUE) {
            JniBridge.setBrightness(mRenderOES, brightness);
        }
    }

    /** Contrast adjustment **/
    public void setRenderContrast(float contrast) {
        if (mRenderOES != Long.MIN_VALUE) {
            JniBridge.setContrast(mRenderOES, contrast);
        }
    }

    /** White balance adjustment **/
    public void setRenderWhiteBalance(float rWeight, float gWeight, float bWeight) {
        if (mRenderOES != Long.MIN_VALUE) {
            JniBridge.setWhiteBalance(mRenderOES, rWeight, gWeight, bWeight);
        }
    }

    /** Noise reduction renderer on/off **/
    public void setRenderNoiseReductionOnOff(boolean sw) {
        if (mLayer != Long.MIN_VALUE && mRenderNoiseReduction != Long.MIN_VALUE) {
            if (sw) {
                JniBridge.addRenderToLayer(mLayer, mRenderNoiseReduction);
            } else {
                JniBridge.removeRenderForLayer(mLayer, mRenderNoiseReduction);
            }
        }
    }

    /** Filter (LUT) on/off **/
    public void setRenderLutOnOff(boolean sw) {
        if (mLayer != Long.MIN_VALUE && mRenderLut != Long.MIN_VALUE) {
            if (sw) {
                JniBridge.addRenderToLayer(mLayer, mRenderLut);
            } else {
                JniBridge.removeRenderForLayer(mLayer, mRenderLut);
            }
        }
    }

    /** Background removal on/off **/
    public void setRenderDeBackgroundOnOff(boolean sw) {
        if (mLayer != Long.MIN_VALUE && mRenderDeBackground != Long.MIN_VALUE) {
            if (sw) {
                JniBridge.addRenderToLayer(mLayer, mRenderDeBackground);
            } else {
                JniBridge.removeRenderForLayer(mLayer, mRenderDeBackground);
            }
        }
    }

    /** Scale width and height **/
    public void setScale(float sx, float sy) {
        if (mLayer != Long.MIN_VALUE) {
            JniBridge.layerScale(mLayer, sx, sy);
        }
    }

    /** Move **/
    public void setTrans(float x, float y) {
        if (mLayer != Long.MIN_VALUE) {
            JniBridge.layerTranslate(mLayer, x, y);
        }
    }

    /** Rotate **/
    public void setRotate(float angle) {
        if (mLayer != Long.MIN_VALUE) {
            JniBridge.layerRotate(mLayer, angle);
        }
    }

    /** Load a filter (LUT) **/
    public void setLut(Bitmap lutBMP) {
        if (mLayer != Long.MIN_VALUE && mRenderLut != Long.MIN_VALUE) {
            final byte[] b = new byte[lutBMP.getByteCount()];
            ByteBuffer bb = ByteBuffer.wrap(b);
            lutBMP.copyPixelsToBuffer(bb);
            final int w = lutBMP.getWidth();
            final int h = lutBMP.getHeight();
            final long lutRender = mRenderLut;
            // renderLutTextureLoad presumably uploads the LUT as a GL texture, so run it on the GL thread
            queueEvent(new Runnable() {
                @Override
                public void run() {
                    JniBridge.renderLutTextureLoad(lutRender, b, w, h, w);
                }
            });
            Log.i("cjztest", "lut pixels size:" + lutBMP.getByteCount());
        }
    }

    @Override
    public boolean onTouchEvent(MotionEvent event) {
        switch (event.getAction()) {
            case MotionEvent.ACTION_DOWN:
                mPrevX = event.getX();
                mPrevY = event.getY();
                break;
            case MotionEvent.ACTION_MOVE:
                // Drag distance relative to the view size; screen Y grows downward, GL Y grows upward
                mdx += (event.getX() - mPrevX) / getWidth();
                mdy -= (event.getY() - mPrevY) / getHeight();
                setTrans(mdx, mdy);
                mPrevX = event.getX();
                mPrevY = event.getY();
                break;
        }
        return true;
    }

    private class Renderer implements GLSurfaceView.Renderer {
        private int mWidth;
        private int mHeight;
        // Written on the main thread by onVideoSizeChanged, read on the GL thread
        private volatile int mVideoWidth;
        private volatile int mVideoHeight;
        private boolean mIsFirstFrame = true;

        @Override
        public void onSurfaceCreated(GL10 gl, EGLConfig config) {
            Log.i("cjztest", "NativeGlSurfaceView.onSurfaceCreated");
            mWidth = 0;
            mHeight = 0;
            mVideoWidth = 0;
            mVideoHeight = 0;
            mIsFirstFrame = true;
            // Create an OES texture and its supporting objects
            if (mDataInputSurface == null) {
                // Create the OES texture
                mDataInputTexturesPointer = new int[1];
                GLES30.glGenTextures(1, mDataInputTexturesPointer, 0);
                GLES30.glBindTexture(GLES11Ext.GL_TEXTURE_EXTERNAL_OES, mDataInputTexturesPointer[0]);
                // Minification/magnification filters and edge (wrap) behavior
                GLES30.glTexParameterf(GLES11Ext.GL_TEXTURE_EXTERNAL_OES,
                        GLES30.GL_TEXTURE_MIN_FILTER, GLES30.GL_LINEAR);
                GLES30.glTexParameterf(GLES11Ext.GL_TEXTURE_EXTERNAL_OES,
                        GLES30.GL_TEXTURE_MAG_FILTER, GLES30.GL_LINEAR);
                GLES30.glTexParameteri(GLES11Ext.GL_TEXTURE_EXTERNAL_OES,
                        GLES30.GL_TEXTURE_WRAP_S, GLES30.GL_CLAMP_TO_EDGE);
                GLES30.glTexParameteri(GLES11Ext.GL_TEXTURE_EXTERNAL_OES,
                        GLES30.GL_TEXTURE_WRAP_T, GLES30.GL_CLAMP_TO_EDGE);
                // Wrap the texture in a SurfaceTexture, then in a Surface that producers can write to
                mInputDataSurfaceTexture = new SurfaceTexture(mDataInputTexturesPointer[0]);
                mDataInputSurface = new Surface(mInputDataSurfaceTexture);
            }
            // Create a demo player
            if (mDemoPlayer == null) {
                mDemoPlayer = new Player(getContext(), getSurface(), new MediaPlayer.OnVideoSizeChangedListener() {
                    @Override
                    public void onVideoSizeChanged(MediaPlayer mp, int width, int height) {
                        /** Record the size of the OES layer content **/
                        if ((width != mVideoWidth || height != mVideoHeight) && width > 0 && height > 0) {
                            Log.i("cjztest", String.format("onVideoSizeChanged: w:%d, h:%d", width, height));
                            mVideoWidth = width;
                            mVideoHeight = height;
                        }
                    }
                });
            }
        }

        @Override
        public void onSurfaceChanged(GL10 gl, int width, int height) {
            if ((width != mWidth || height != mHeight) && width > 0 && height > 0) {
                this.mWidth = width;
                this.mHeight = height;
                Log.i("cjztest", String.format("NativeGlSurfaceView.onSurfaceChanged:width:%d, height:%d", mWidth, mHeight));
                JniBridge.nativeGLInit(width, height);
                mIsFirstFrame = true;
            }
        }

        @Override
        public void onDrawFrame(GL10 gl) {
            // GL operations cannot be done asynchronously from other threads, so the layer is created here on the
            // first frame (another GLSurfaceView callback would also work, but it must wait for onVideoSizeChanged)
            if (mIsFirstFrame) {
                if (mVideoWidth > 0 && mVideoHeight > 0) {
                    // Remove the previously used layer
                    if (mLayer != Long.MIN_VALUE) {
                        JniBridge.removeLayer(mLayer);
                    }
                    // Create a layer. This use case has no array data, only the OES texture, so dataPointer is 0.
                    // Arguments: texture, texture width/height, data address (if any), data width/height, pixel format
                    mLayer = JniBridge.addFullContainerLayer(mDataInputTexturesPointer[0], new int[]{mVideoWidth, mVideoHeight}, 0, new int[]{0, 0}, GLES30.GL_RGBA);
                    // Create the OES texture renderer
                    mRenderOES = JniBridge.makeRender(JniBridge.RENDER_PROGRAM_KIND.RENDER_OES_TEXTURE.ordinal());
                    // mRenderConvolutionDemo = JniBridge.makeRender(JniBridge.RENDER_PROGRAM_KIND.RENDER_CONVOLUTION.ordinal()); // convolution image-processing demo
                    mRenderNoiseReduction = JniBridge.makeRender(JniBridge.RENDER_PROGRAM_KIND.NOISE_REDUCTION.ordinal()); // noise reduction renderer
                    mRenderLut = JniBridge.makeRender(JniBridge.RENDER_PROGRAM_KIND.RENDER_LUT.ordinal()); // LUT (filter) renderer
                    mRenderDeBackground = JniBridge.makeRender(JniBridge.RENDER_PROGRAM_KIND.DE_BACKGROUND.ordinal()); // background removal renderer
                    JniBridge.addRenderToLayer(mLayer, mRenderOES);
                    JniBridge.addRenderToLayer(mLayer, mRenderNoiseReduction);
                    mIsFirstFrame = false;
                }
            }
            // Pull the newest frame from the SurfaceTexture's BufferQueue into the OES texture
            mInputDataSurfaceTexture.updateTexImage();
            JniBridge.renderLayer(0, mWidth, mHeight);
        }
    }
}
```

The code that creates the MediaPlayer instance is as follows:

```java
package com.opengldecoder.jnibridge;

import android.content.Context;
import android.content.res.AssetFileDescriptor;
import android.media.AudioManager;
import android.media.MediaPlayer;
import android.view.Surface;

import java.io.IOException;

public class Player {

    private MediaPlayer mMediaPlayer;

    public Player(Context context, Surface surface, MediaPlayer.OnVideoSizeChangedListener sizeChangedListener) {
        initMediaPlayer(context, surface, sizeChangedListener);
    }

    private void initMediaPlayer(Context context, Surface surface, MediaPlayer.OnVideoSizeChangedListener sizeChangedListener) {
        mMediaPlayer = new MediaPlayer();
        // try-with-resources closes the AssetFileDescriptor once setDataSource() has returned
        try (AssetFileDescriptor afd = context.getAssets().openFd("car_race.mp4")) {
            mMediaPlayer.setDataSource(afd.getFileDescriptor(), afd.getStartOffset(), afd.getLength());
            // String path = "http://192.168.1.254:8192";
            // mMediaPlayer.setDataSource(path);
            // mMediaPlayer.setDataSource(TextureViewMediaActivity.videoPath);
        } catch (IOException e) {
            e.printStackTrace();
            return; // without a data source, prepareAsync() would throw IllegalStateException
        }
        mMediaPlayer.setAudioStreamType(AudioManager.STREAM_MUSIC);
        mMediaPlayer.setLooping(true);
        // Register the listeners before prepareAsync() so no callback can be missed
        mMediaPlayer.setOnVideoSizeChangedListener(sizeChangedListener);
        mMediaPlayer.setOnPreparedListener(new MediaPlayer.OnPreparedListener() {
            @Override
            public void onPrepared(MediaPlayer mediaPlayer) {
                mediaPlayer.start();
            }
        });
        // Decoded frames are written to this Surface, i.e. into the OES texture
        mMediaPlayer.setSurface(surface);
        mMediaPlayer.prepareAsync();
    }
}
```

1. Rendering and processing OES_TEXTURE in JNI:

The JNI code and the GLSurfaceView run in the same process and use the same EGLContext. Texture names are therefore shared and refer to the same objects. So in onDrawFrame I pass the previously created OES texture down to a JNI method, and the C code can use it directly:

```java
// Arguments: texture, texture width/height, data address (if any), data width/height, pixel format
mLayer = JniBridge.addFullContainerLayer(mDataInputTexturesPointer[0], new int[]{mVideoWidth, mVideoHeight}, 0, new int[]{0, 0}, GLES30.GL_RGBA);
```
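addFullContainerLayer returns the address of a native layer object as a Java `long`. The Java side keeps it in `mLayer` and passes it back on later calls; makeRender does the same for renderers with `(jlong) resultProgram`. Below is a minimal, self-contained sketch of that round trip. It assumes `jlong` is a 64-bit signed integer (as in the NDK's `<jni.h>`) and uses `std::int64_t` in its place so it compiles without JNI headers. `NativeLayer`, `toHandle`, and `fromHandle` are hypothetical names.

```cpp
#include <cassert>
#include <cstdint>

// Stand-in for jlong, a 64-bit signed integer in the NDK's <jni.h>.
using Handle = std::int64_t;

static_assert(sizeof(void*) <= sizeof(Handle), "a native pointer must fit into a Java long");

// Hypothetical native object whose address is handed to Java.
struct NativeLayer {
    int textureId;
    int width;
    int height;
};

// Pointer -> number, as `return (jlong) resultProgram;` does in makeRender.
Handle toHandle(NativeLayer* layer) {
    return static_cast<Handle>(reinterpret_cast<std::intptr_t>(layer));
}

// Number -> pointer, as a native function receiving `long layerPointer` from Java would do.
NativeLayer* fromHandle(Handle handle) {
    return reinterpret_cast<NativeLayer*>(static_cast<std::intptr_t>(handle));
}
```

Note that a null pointer becomes 0, not `Long.MIN_VALUE`. Long.MIN_VALUE is the "not created" marker the Java code checks, so a native function that fails and returns `nullptr` would look like a valid handle on the Java side.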

This is the JNI bridge class I wrote:

```java
package com.opengldecoder.jnibridge;

import android.graphics.Bitmap;
import android.view.Surface;

public class JniBridge {

    static {
        System.loadLibrary("opengl_decoder");
    }

    /** Renderer types. TODO: this duplicates the native enum because Java needs its own copy to call it;
     *  keep the order identical, since ordinal() values are passed to native code. **/
    public enum RENDER_PROGRAM_KIND {
        RENDER_OES_TEXTURE, // OES texture rendering
        RENDER_YUV,         // YUV data or texture rendering
        RENDER_CONVOLUTION, // convolution processing
        NOISE_REDUCTION,    // noise reduction
        RENDER_LUT,         // filter (LUT) renderer
        DE_BACKGROUND,      // background removal
    }

    public static native void nativeGLInit(int viewPortWidth, int viewPortHeight);

    // public static native void drawRGBABitmap(Bitmap bmp, int bmpW, int bmpH);

    public static native void drawToSurface(Surface surface, int color);

    public static native void drawBuffer();

    public static native long addFullContainerLayer(int texturePointer, int[] textureWidthAndHeight, long dataPointer,
                                                    int[] dataWidthAndHeight,
                                                    int dataPixelFormat);

    public static native void removeLayer(long layerPointer);

    /** Create a renderer
     * @param renderProgramKind renderer type, see RENDER_PROGRAM_KIND **/
    public static native long makeRender(int renderProgramKind);

    public static native void addRenderToLayer(long layerPointer, long renderPointer);

    public static native void removeRenderForLayer(long layerPointer, long renderPointer);

    public static native void setRenderAlpha(long renderPointer, float alpha);

    /** Renderer brightness adjustment **/
    public static native void setBrightness(long renderPointer, float brightness);

    /** Renderer contrast adjustment **/
    public static native void setContrast(long renderPointer, float contrast);

    /** White balance adjustment **/
    public static native void setWhiteBalance(long renderPointer, float redWeight, float greenWeight, float blueWeight);

    public static native void renderLayer(int fboPointer, int fboWidth, int fboHeight);

    public static native void layerScale(long layerPointer, float scaleX, float scaleY);

    public static native void layerTranslate(long layerPointer, float dx, float dy);

    public static native void layerRotate(long layerPointer, float angle);

    /****************************************** Settings specific to particular renderers *************************************************/

    public static native void renderLutTextureLoad(long lutRenderPointer, byte[] lutPixels, int w, int h, int unitLen);
}
```
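The native layer implementation behind addRenderToLayer, removeRenderForLayer, and renderLayer is not shown in this article. One plausible design, sketched below as an assumption, keeps an ordered list of renderers per layer and feeds each renderer the output of the previous one. The first renderer samples the input texture (here the OES texture), and intermediate results ping-pong between two offscreen framebuffers. Only the last renderer draws to the target (FBO 0 is the window). GL calls are replaced by a recorded list of steps so the sketch runs anywhere; `Layer`, `RenderStep`, and the FBO/texture ids are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// One recorded draw: which texture a renderer sampled and which framebuffer it drew into.
struct RenderStep {
    std::string renderer;
    int inputTexture;
    int outputFbo;
};

// Hypothetical layer holding an ordered chain of renderers (by name here, by pointer in real code).
class Layer {
public:
    Layer(int inputTexture, int fboA, int texA, int fboB, int texB)
        : mInputTexture(inputTexture), mFbo{fboA, fboB}, mFboTexture{texA, texB} {}

    // Like addRenderToLayer: append once, ignore duplicates.
    void addRender(const std::string& name) {
        if (std::find(mRenders.begin(), mRenders.end(), name) == mRenders.end()) {
            mRenders.push_back(name);
        }
    }

    // Like removeRenderForLayer.
    void removeRender(const std::string& name) {
        mRenders.erase(std::remove(mRenders.begin(), mRenders.end(), name), mRenders.end());
    }

    // Like renderLayer: the last renderer draws into targetFbo, earlier ones into offscreen FBOs.
    std::vector<RenderStep> render(int targetFbo) const {
        std::vector<RenderStep> steps;
        int input = mInputTexture;
        for (std::size_t i = 0; i < mRenders.size(); ++i) {
            bool last = (i + 1 == mRenders.size());
            int slot = static_cast<int>(i % 2); // alternate between the two offscreen buffers
            int output = last ? targetFbo : mFbo[slot];
            steps.push_back({mRenders[i], input, output});
            input = mFboTexture[slot]; // the next renderer samples what this one produced
        }
        return steps;
    }

private:
    int mInputTexture;
    int mFbo[2];
    int mFboTexture[2];
    std::vector<std::string> mRenders;
};
```

Two offscreen buffers are needed because a renderer cannot sample the texture it is currently drawing into. Alternating between them lets any number of renderers (OES, noise reduction, LUT, ...) be chained.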

In this demo I experimented with several renderers. The input is an OES texture, however, so the OES renderer comes first:

```java
mRenderOES = JniBridge.makeRender(JniBridge.RENDER_PROGRAM_KIND.RENDER_OES_TEXTURE.ordinal()); // create the OES texture renderer
JniBridge.addRenderToLayer(mLayer, mRenderOES);
```

As you can see, I wrote a method called makeRender. Based on the enum value passed in, it creates a renderer instance (here an OES texture renderer) and returns the pointer to the C++ object (a pointer is essentially just a number: a memory address). The code is as follows:

```cpp
/** Create a renderer
 * @param renderProgramKind renderer type, see RENDER_PROGRAM_KIND
 * @return address of the new RenderProgram as a jlong (0 if the kind is not supported) **/
JNIEXPORT jlong JNICALL
Java_com_opengldecoder_jnibridge_JniBridge_makeRender(JNIEnv *env, jclass clazz,
                                                      jint renderProgramKind) {
    RenderProgram *resultProgram = nullptr;
    switch (renderProgramKind) {
        default:
            break;
        // Create the OES texture renderer
        case RENDER_OES_TEXTURE: {
            RenderProgramOESTexture *renderProgramOesTexture = new RenderProgramOESTexture();
            renderProgramOesTexture->createRender(-1, -mRatio, 0, 2,
                                                  mRatio * 2,
                                                  mWidth,
                                                  mHeight);
            resultProgram = renderProgramOesTexture;
            break;
        }
        // Create the noise reduction renderer
        case NOISE_REDUCTION: {
            RenderProgramNoiseReduction *renderProgramNoiseReduction = new RenderProgramNoiseReduction();
            renderProgramNoiseReduction->createRender(-1, -mRatio, 0, 2,
                                                      mRatio * 2,
                                                      mWidth,
                                                      mHeight);
            resultProgram = renderProgramNoiseReduction;
            break;
        }
        case RENDER_YUV: {
            // TODO: not implemented yet
            break;
        }
        // Create a convolution renderer
        case RENDER_CONVOLUTION: {
            float kernel[] = {
                1.0, 1.0, 1.0,
                1.0, -7.0, 1.0,
                1.0, 1.0, 1.0
            };
            RenderProgramConvolution *renderProgramConvolution = new RenderProgramConvolution(
                    kernel);
            renderProgramConvolution->createRender(-1, -mRatio, 0, 2,
                                                   mRatio * 2,
                                                   mWidth,
                                                   mHeight);
            resultProgram = renderProgramConvolution;
            break;
        }
        // Create a filter (LUT) renderer
        case RENDER_LUT: {
            RenderProgramFilter *renderProgramFilter = new RenderProgramFilter();
            renderProgramFilter->createRender(-1, -mRatio, 0, 2,
                                              mRatio * 2,
                                              mWidth,
                                              mHeight);
            resultProgram = renderProgramFilter;
            break;
        }
        // TODO: background removal
        case DE_BACKGROUND: {
            RenderProgramDebackground *renderProgramDebackground = new RenderProgramDebackground();
            renderProgramDebackground->createRender(-1, -mRatio, 0, 2,
                                                    mRatio * 2,
                                                    mWidth,
                                                    mHeight);
            resultProgram = renderProgramDebackground;
            break;
        }
    }
    return (jlong) resultProgram;
}
```

For now we only focus on creating the RENDER_OES_TEXTURE renderer. Its code is as follows:

```cpp
//
// Created by jiezhuchen on 2021/6/21.
//

#include <GLES3/gl3.h>
#include <GLES3/gl3ext.h>
#include <GLES2/gl2ext.h> // GL_TEXTURE_EXTERNAL_OES
#include <string.h>
#include <jni.h>
#include "RenderProgramOESTexture.h"
#include "android/log.h"

using namespace OPENGL_VIDEO_RENDERER;

static const char *TAG = "nativeGL";

#define LOGI(fmt, args...) __android_log_print(ANDROID_LOG_INFO, TAG, fmt, ##args)
#define LOGD(fmt, args...) __android_log_print(ANDROID_LOG_DEBUG, TAG, fmt, ##args)
#define LOGE(fmt, args...) __android_log_print(ANDROID_LOG_ERROR, TAG, fmt, ##args)

RenderProgramOESTexture::RenderProgramOESTexture() {
    vertShader = GL_SHADER_STRING(
        \n
        uniform mat4 uMVPMatrix; // total rotate/translate/scale matrix that transforms the object
        attribute vec3 objectPosition; // object position, used in the computation but not passed to the fragment shader
        attribute vec4 objectColor; // vertex color
        attribute vec2 vTexCoord; // coordinates inside the texture
        varying vec4 fragObjectColor; // color passed on to the fragment shader
        varying vec2 fragVTexCoord; // texture coordinates passed on to the fragment shader
        void main() {
            vec2 temp = vec2(1.0, 1.0);
            gl_Position = uMVPMatrix * vec4(objectPosition, 1.0); // set the vertex position
            fragVTexCoord = vTexCoord; // passed through unchanged by default
            fragObjectColor = objectColor; // passed through unchanged by default
        }
    );
    fragShader = GL_SHADER_STRING(
        $#extension GL_OES_EGL_image_external : require\n
        precision highp float;
        uniform samplerExternalOES oesTexture; // OES texture input
        uniform int funChoice;
        uniform float frame; // frame number
        uniform float brightness; // brightness
        uniform float contrast; // contrast
        uniform vec3 rgbWeight; // white balance weights
        uniform vec2 resolution; // resolution of the container
        uniform vec2 videoResolution; // resolution of the video itself
        varying vec4 fragObjectColor; // color received from vertShader
        varying vec2 fragVTexCoord; // texture coordinates received from vertShader
        float fakeRandom(vec2 st) {
            return fract(sin(dot(st.xy, vec2(12.9898, 78.233))) * 43758.5453123 * frame / 1000.0);
        }
        // noise for testing
        vec3 getNoise(vec2 st) {
            float rnd = fakeRandom(st);
            return vec3(rnd);
        }
        void main() {
            vec2 xy = vec2(fragVTexCoord.s, 1.0 - fragVTexCoord.t);
            vec3 rgbWithBrightness = texture2D(oesTexture, xy).rgb * rgbWeight + brightness; // white balance and brightness
            vec3 rgbWithContrast = rgbWithBrightness + (rgbWithBrightness - 0.5) * contrast / 1.0; // contrast, see https://blog.csdn.net/yuhengyue/article/details/103856476
            gl_FragColor = vec4(rgbWithContrast, fragObjectColor.a);
            // cjztest: noise test
            // gl_FragColor = vec4(getNoise(fragVTexCoord) + rgbWithContrast.rgb, 1.0);
        }
    );
    // Sampling coordinates inside the texture, similar to canvas coordinates.
    // Known issue: with this mapping, pictures come out upside down when two framebuffers sample each other's textures.
    float tempTexCoord[] = {
        1.0, 0.0,
        0.0, 0.0,
        1.0, 1.0,
        0.0, 1.0
    };
    memcpy(mTexCoor, tempTexCoord, sizeof(tempTexCoord));
    float tempColorBuf[] = {
        1.0, 1.0, 1.0, 1.0,
        1.0, 1.0, 1.0, 1.0,
        1.0, 1.0, 1.0, 1.0,
        1.0, 1.0, 1.0, 1.0
    };
    memcpy(mColorBuf, tempColorBuf, sizeof(tempColorBuf));
}

RenderProgramOESTexture::~RenderProgramOESTexture() {
    destroy();
}

void RenderProgramOESTexture::createRender(float x, float y, float z, float w, float h, int windowW,
                                           int windowH) {
    mWindowW = windowW;
    mWindowH = windowH;
    initObjMatrix(); // initialize the object matrix to identity; otherwise every later matrix operation multiplies by 0
    // Four corners of the quad, in triangle-strip order
    float vertxData[] = {
        x + w, y, z,
        x, y, z,
        x + w, y + h, z,
        x, y + h, z,
    };
    memcpy(mVertxData, vertxData, sizeof(vertxData));
    // "+ 1" skips the leading placeholder character ("\n" or "$") of the stringified shader sources
    mImageProgram = createProgram(vertShader + 1, fragShader + 1);
    // Location of the vertex position attribute
    mObjectPositionPointer = glGetAttribLocation(mImageProgram.programHandle, "objectPosition");
    // Texture sampling coordinates
    mVTexCoordPointer = glGetAttribLocation(mImageProgram.programHandle, "vTexCoord");
    // Location of the vertex color attribute
    mObjectVertColorArrayPointer = glGetAttribLocation(mImageProgram.programHandle, "objectColor");
    // Location of the total transformation matrix
    muMVPMatrixPointer = glGetUniformLocation(mImageProgram.programHandle, "uMVPMatrix");
    // Rendering mode: 0 = lines, 1 = texture
    mGLFunChoicePointer = glGetUniformLocation(mImageProgram.programHandle, "funChoice");
    // Rendered frame count
    mFrameCountPointer = glGetUniformLocation(mImageProgram.programHandle, "frame");
    // Brightness
    mBrightnessPointer = glGetUniformLocation(mImageProgram.programHandle, "brightness");
    // Contrast
    mContrastPointer = glGetUniformLocation(mImageProgram.programHandle, "contrast");
    // White balance
    mRGBWeightPointer = glGetUniformLocation(mImageProgram.programHandle, "rgbWeight");
    // Resolution, tells the shader the current resolution
    mResoulutionPointer = glGetUniformLocation(mImageProgram.programHandle, "resolution");
}

void RenderProgramOESTexture::setAlpha(float alpha) {
    if (mColorBuf != nullptr) {
        for (size_t i = 3; i < sizeof(mColorBuf) / sizeof(float); i += 4) {
            mColorBuf[i] = alpha;
        }
    }
}

void RenderProgramOESTexture::setBrightness(float brightness) {
    mBrightness = brightness;
}

void RenderProgramOESTexture::setContrast(float contrast) {
    mContrast = contrast;
}

void RenderProgramOESTexture::setWhiteBalance(float redWeight, float greenWeight, float blueWeight) {
    mRedWeight = redWeight;
    mGreenWeight = greenWeight;
    mBlueWeight = blueWeight;
}

void RenderProgramOESTexture::loadData(char *data, int width, int height, int pixelFormat, int offset) {
    // Not needed for this renderer
}

/**@param textures the texture to render; can be the result of a previous step, e.g. an FBO texture **/
void RenderProgramOESTexture::loadTexture(Textures textures[]) {
    mInputTexturesArray = textures[0].texturePointers;
    mInputTextureWidth = textures[0].width;
    mInputTextureHeight = textures[0].height;
}

/**@param outputFBOPointer framebuffer to draw into; 0 is the default (window) framebuffer **/
void RenderProgramOESTexture::drawTo(float *cameraMatrix, float *projMatrix, DrawType drawType, int outputFBOPointer, int fboW, int fboH) {
    if (mIsDestroyed) {
        return;
    }
    glUseProgram(mImageProgram.programHandle);
    glUniform1f(mBrightnessPointer, mBrightness);
    glUniform1f(mContrastPointer, mContrast);
    float whiteBalanceWeight[3] = {mRedWeight, mGreenWeight, mBlueWeight};
    glUniform3fv(mRGBWeightPointer, 1, whiteBalanceWeight);
    // Bind the output framebuffer and set the viewport
    glBindFramebuffer(GL_FRAMEBUFFER, outputFBOPointer);
    glViewport(0, 0, mWindowW, mWindowH);
    glUniform1i(mGLFunChoicePointer, 1);
    glUniform1f(mFrameCountPointer, mframeCount++);
    // Pass in the transformation (MVP) matrix
    locationTrans(cameraMatrix, projMatrix, muMVPMatrixPointer);
    // Start rendering
    if (mVertxData != nullptr && mColorBuf != nullptr) {
        // Vertex positions: 3 floats per vertex
        glVertexAttribPointer(mObjectPositionPointer, 3, GL_FLOAT, GL_FALSE, 0, mVertxData);
        // Vertex colors: 4 floats per vertex
        glVertexAttribPointer(mObjectVertColorArrayPointer, 4, GL_FLOAT, GL_FALSE, 0, mColorBuf);
        // Texture coordinates: 2 floats per vertex
        glVertexAttribPointer(mVTexCoordPointer, 2, GL_FLOAT, GL_FALSE, 0, mTexCoor);
        glEnableVertexAttribArray(mObjectPositionPointer); // enable vertex positions
        glEnableVertexAttribArray(mObjectVertColorArrayPointer); // enable vertex colors
        glEnableVertexAttribArray(mVTexCoordPointer); // enable texture coordinates
        float resolution[2];
        switch (drawType) {
            case OPENGL_VIDEO_RENDERER::RenderProgram::DRAW_DATA:
                break;
            case OPENGL_VIDEO_RENDERER::RenderProgram::DRAW_TEXTURE:
                glActiveTexture(GL_TEXTURE0); // activate texture unit 0
                // The input is an external (OES) texture, so it must be bound to GL_TEXTURE_EXTERNAL_OES (36197).
                // Binding it to GL_TEXTURE_2D raises GL_INVALID_OPERATION and leaves the binding unchanged; it only
                // appeared to work because the texture was probably still bound to the OES target (updateTexImage binds it).
                glBindTexture(GL_TEXTURE_EXTERNAL_OES, mInputTexturesArray);
                glUniform1i(glGetUniformLocation(mImageProgram.programHandle, "oesTexture"), 0); // sampler reads from unit 0
                resolution[0] = (float) mInputTextureWidth;
                resolution[1] = (float) mInputTextureHeight;
                glUniform2fv(mResoulutionPointer, 1, resolution);
                break;
        }
        // Draw the quad as a triangle strip of 4 vertices (each vertex is 3 floats: x, y, z)
        glDrawArrays(GL_TRIANGLE_STRIP, 0, /*mPointBufferPos / 3*/ 4);
        glDisableVertexAttribArray(mObjectPositionPointer);
        glDisableVertexAttribArray(mObjectVertColorArrayPointer);
        glDisableVertexAttribArray(mVTexCoordPointer);
    }
}

void RenderProgramOESTexture::destroy() {
    if (!mIsDestroyed) {
        // The OES input texture is created and owned by the Java side (NativeGLSurfaceView),
        // so it is not deleted here; just unbind it from unit 0.
        glActiveTexture(GL_TEXTURE0);
        glBindTexture(GL_TEXTURE_EXTERNAL_OES, 0);
        // Delete the shader program that is no longer used
        destroyProgram(mImageProgram);
    }
    mIsDestroyed = true;
}
```

The most important part is the main function of the fragment shader:

```glsl
void main() {
    vec2 xy = vec2(fragVTexCoord.s, 1.0 - fragVTexCoord.t);
    vec3 rgbWithBrightness = texture2D(oesTexture, xy).rgb * rgbWeight + brightness; // white balance and brightness
    vec3 rgbWithContrast = rgbWithBrightness + (rgbWithBrightness - 0.5) * contrast / 1.0; // contrast, see https://blog.csdn.net/yuhengyue/article/details/103856476
    gl_FragColor = vec4(rgbWithContrast, fragObjectColor.a);
    // cjztest: noise test
    // gl_FragColor = vec4(getNoise(fragVTexCoord) + rgbWithContrast.rgb, 1.0);
}
```

The incoming texture name is bound with glBindTexture to the texture unit activated by glActiveTexture. glGetUniformLocation then looks up the texture uniform named "oesTexture" in the compiled shader program, and glUniform1i connects it to that unit. The fragment shader can then sample the texture delivered by the player with texture2D, which returns the pixel as a vec4 (RGBA). texture2D is the GLSL ES 1.00 sampling function, and the GL_OES_EGL_image_external extension also allows it on a samplerExternalOES. Assigning that value directly to the built-in output gl_FragColor would simply show the original video picture on the shape formed by the vertices. Here, however, the sampled color goes through three extra adjustments:

- it is multiplied by the rgbWeight white-balance weights;
- it is offset by brightness (rgbWithBrightness);
- it is stretched around mid-grey by contrast (rgbWithContrast).

As a result, the red, green, and blue levels, the brightness, and the contrast of the playing picture can all be adjusted. The t coordinate is also flipped (1.0 - t) so that, with these texture coordinates, the video is not shown upside down.
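To check the color math outside the GPU, the per-pixel part of this main() can be mirrored on the CPU. The sketch below follows the shader's formulas: multiply by rgbWeight, add brightness, apply `c + (c - 0.5) * contrast`, and finally clamp to [0, 1], as happens when gl_FragColor is written to an 8-bit framebuffer. `Rgb` and `adjustPixel` are hypothetical names used only for this illustration.

```cpp
#include <algorithm>
#include <cassert>

struct Rgb {
    float r, g, b;
};

// Mirrors the fragment shader: white balance (rgbWeight), brightness offset, contrast around mid-grey 0.5.
// The final clamp models writing gl_FragColor to an 8-bit (UNORM) framebuffer.
Rgb adjustPixel(Rgb in, Rgb rgbWeight, float brightness, float contrast) {
    auto channel = [&](float c, float weight) {
        float withBrightness = c * weight + brightness;                            // rgbWithBrightness
        float withContrast = withBrightness + (withBrightness - 0.5f) * contrast;  // rgbWithContrast
        return std::min(1.0f, std::max(0.0f, withContrast));
    };
    return {channel(in.r, rgbWeight.r), channel(in.g, rgbWeight.g), channel(in.b, rgbWeight.b)};
}
```

With this formula the neutral settings are rgbWeight (1, 1, 1), brightness 0, and contrast 0. Positive contrast pushes channels away from 0.5, and contrast -1 flattens every channel to 0.5.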

Code address :

Summary :

In Android audio and video processing , If you just use surfaceview/textureView + surfaceTexture + surface This combination is used for video playback , In fact, it is difficult to make complex changes and adjustments . But through glsurfaceview and glsl, You can stick 3D Model , You can adjust the white balance and other functions commonly used in players , It greatly increases the flexibility of picture processing , At the same time as OpenGL be based on GPU Hardware acceleration features , The processing performance is better than that of conventional Java perhaps C Rely directly on... Pixel by pixel CPU It's much faster , At the same time, it consumes less energy ,glsurfaceView+glsl Processing video images is a very good match .

版权声明

本文为[cjzcjl]所创,转载请带上原文链接,感谢

https://yzsam.com/2022/04/202204210600587732.html

边栏推荐

- 机器学习目录

- Redis optimization series (III) solve common problems after master-slave configuration

- ArcMap connecting ArcGIS Server

- One stop service platform for high-level talents and development of comprehensive service platform system for talents

- Class loading process of JVM

- c#:泛型反射

- SSDB基础3

- Simple use of viewbinding

- SQL常用的命令

- Common SQL commands

猜你喜欢

Client interns of a large factory share their experience face to face

MySQL Téléchargement et installation de la version Linux

The fifth bullet of MySQL learning -- detailed explanation of transaction and its operation characteristics

Using Visual Studio code to develop Arduino

2022.04.23(LC_714_买卖股票的最佳时机含手续费)

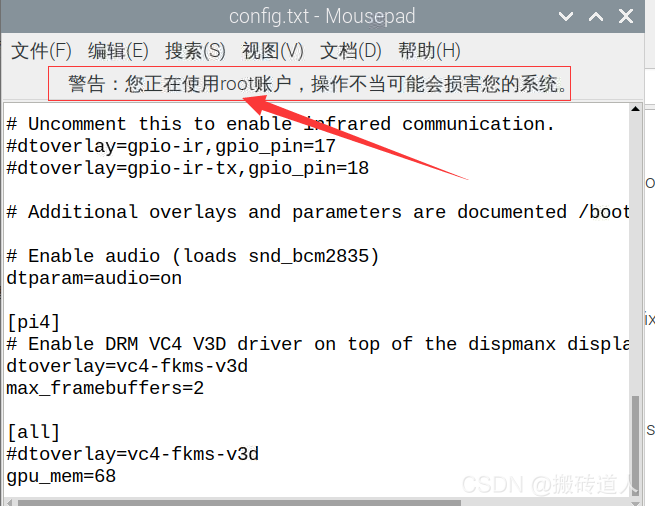

Raspberry pie uses root operation, and the graphical interface uses its own file manager

Audio signal processing and coding - 2.5.3 the discrete cosine transform

Keysight has chosen what equipment to buy for you

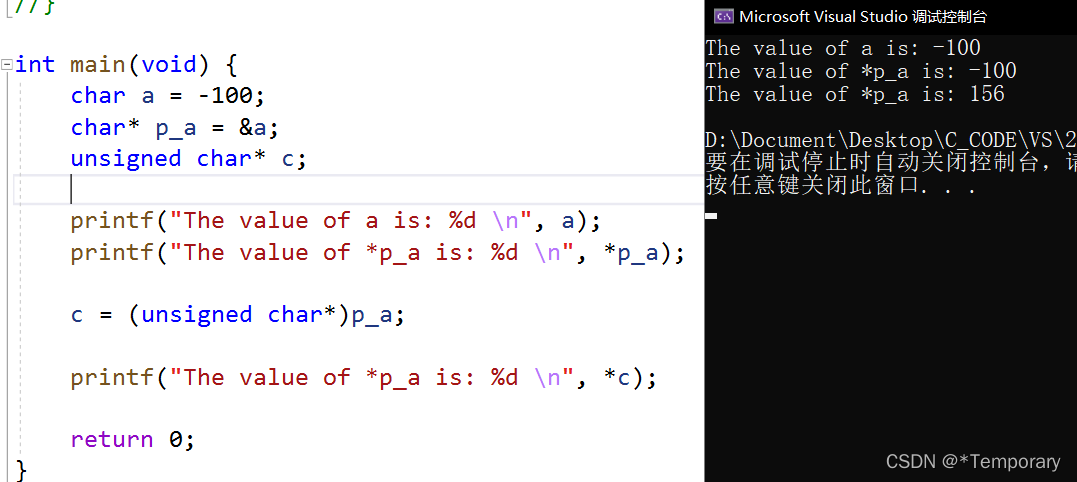

浅谈c语言指针的强制转换

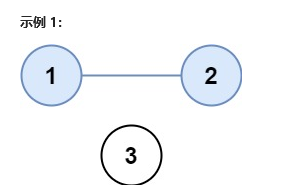

剑指 Offer II 116. 省份数量-空间复杂度O(n),时间复杂度O(n)

随机推荐

2022.04.23(LC_714_买卖股票的最佳时机含手续费)

After opening the original normal project, the dependency package displays red and does not exist.

JS calculation time difference

MySQL Téléchargement et installation de la version Linux

js上传文件时控制文件类型和大小

Redis optimization series (III) solve common problems after master-slave configuration

机器学习目录

@Analysis of conditional on Web Application

SQL server requires to query the information of all employees with surname 'Wang'

Use of kotlin collaboration in the project

Customize the non slidable viewpage and how to use it

优先使用组合而不使用继承

Getting started with vcpkg

SQL of contention for system time plus time in ocrale database

2022.04.23 (the best time for lc_714_to buy and sell stocks, including handling charges)

White screen processing method of fulter startup page

8266 obtain 18b20 temperature

Modify the font size of hint in editext

SQL常用的命令

12个例子夯实promise基础

https://gitee.com/cjzcjl/learnOpenGLDemo/tree/main/app/src/main

https://gitee.com/cjzcjl/learnOpenGLDemo/tree/main/app/src/main