OpenCV: YOLACT instance segmentation model inference

2022-04-23 03:57:00 【qq_ thirty-seven million one hundred and seventy-two thousand o】

Task: get familiar with OpenCV's DNN module. Given an image of a natural scene, run a trained YOLACT model on it and output the object detection results.

Analysis:

1) OpenCV's DNN module supports many kinds of deep learning models, including face detection, image classification, segmentation, and object detection, and it can load models from frameworks such as PyTorch, TensorFlow, and PaddlePaddle (see the OpenCV/dnn code base).

2) General steps for running inference with a deep learning model: load the model, including its configuration file and weight file; preprocess the input image, converting it to the data type and size the model accepts; run the model and post-process its predictions. For instance segmentation, post-processing mainly consists of NMS and mask segmentation. The YOLACT code in this article already performs these post-processing steps.
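To make the NMS step mentioned above concrete, here is a minimal, dependency-free sketch of greedy non-maximum suppression. It is not OpenCV's implementation (the code in this article calls `NMSBoxes` from the DNN module); the `Box` type and the `iou` and `greedyNms` names are hypothetical helpers introduced only for illustration.

```cpp
#include <algorithm>
#include <cassert>
#include <numeric>
#include <vector>

// Axis-aligned box in corner form (hypothetical helper type, not from the article).
struct Box { float x1, y1, x2, y2; };

// Intersection over union of two boxes; returns 0 when they do not overlap.
float iou(const Box& a, const Box& b) {
    const float iw = std::max(0.f, std::min(a.x2, b.x2) - std::max(a.x1, b.x1));
    const float ih = std::max(0.f, std::min(a.y2, b.y2) - std::max(a.y1, b.y1));
    const float inter = iw * ih;
    const float areaA = (a.x2 - a.x1) * (a.y2 - a.y1);
    const float areaB = (b.x2 - b.x1) * (b.y2 - b.y1);
    const float uni = areaA + areaB - inter;
    return uni > 0.f ? inter / uni : 0.f;
}

// Greedy NMS: visit boxes in descending score order and keep a box only if
// its IoU with every already-kept box does not exceed nmsThreshold.
std::vector<int> greedyNms(const std::vector<Box>& boxes,
                           const std::vector<float>& scores,
                           float nmsThreshold) {
    assert(boxes.size() == scores.size());
    std::vector<int> order(boxes.size());
    std::iota(order.begin(), order.end(), 0);
    std::stable_sort(order.begin(), order.end(),
                     [&](int a, int b) { return scores[a] > scores[b]; });

    std::vector<int> keep;
    for (int idx : order) {
        bool suppressed = false;
        for (int k : keep) {
            if (iou(boxes[idx], boxes[k]) > nmsThreshold) {
                suppressed = true;
                break;
            }
        }
        if (!suppressed) keep.push_back(idx);
    }
    return keep;
}
```

For example, with boxes (0,0,10,10), (1,1,11,11) and (20,20,30,30), scores 0.9, 0.8 and 0.7, and a threshold of 0.3, the second box overlaps the first with an IoU of about 0.68 and is suppressed, so the kept indices are 0 and 2.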

- Results:

main.exe -h

#######

Usage: main.exe [params] image confThreshold nmsThresshold

-?, -h, --help, --usage (value:true)

opecv based deep learining demo

image

Image to process

confThreshold (value:0.5)

confidence threshold, default 0.5

nmsThresshold (value:0.3)

nms threshold, default 0.3

main.exe example_01.jpg

- Code example:

// main.cpp //

#include <fstream>

#include <iostream>

#include <opencv2/dnn.hpp>

#include <opencv2/imgproc.hpp>

#include <opencv2/highgui.hpp>

#include "yolact.cpp"

using namespace cv;

using namespace dnn;

using namespace std;

bool parseParam(int argc, char** argv, const char* keys, Mat& img, float& confThreshold, float& nmsThreshold){

CommandLineParser parser(argc, argv, keys);

if(parser.has("help")){

parser.printMessage();

return false;

}

// Read the positional arguments first so that check() can report conversion errors.

String imgFile = parser.get<String>(0);

confThreshold = parser.get<float>(1);

nmsThreshold = parser.get<float>(2);

if(!parser.check()){

parser.printErrors();

return false;

}

img = imread(imgFile);

if(img.empty()){

cout << "wrong image path ! please check again." << endl;

return false;

}

return true;

}

int main(int argc, char** argv)

{

const char* keys = {

"{help h usage ? | | opecv based deep learining demo}"

"{@image | | Image to process}"

"{@confThreshold | 0.5 | confidence threshold, default 0.5}"

"{@nmsThresshold | 0.3 | nms threshold, default 0.3}"};

float confThreshold, nmsThreshold;

Mat srcimg;

if(!parseParam(argc, argv, keys, srcimg, confThreshold, nmsThreshold)){

return 0;

}

yolact yolactnet(confThreshold, nmsThreshold);

yolactnet.detect(srcimg);

static const string kWinName = "Deep learning object detection in OpenCV";

namedWindow(kWinName, WINDOW_AUTOSIZE);

imshow(kWinName, srcimg);

waitKey(0);

destroyAllWindows();

}

// yolact.cpp

#include <fstream>

#include <iostream>

#include <cmath>   // sqrt, exp, expf

#include <cstdio>  // formatted label text

#include <opencv2/dnn.hpp>

#include <opencv2/imgproc.hpp>

#include <opencv2/highgui.hpp>

#include "config.cpp"

using namespace cv;

using namespace dnn;

using namespace std;

class yolact

{

public:

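yolact(float confThreshold, float nmsThreshold, const int keep_top_k = 200);

~yolact() { delete[] priorbox; } // release the prior boxes allocated in the constructor

yolact(const yolact&) = delete; // the class owns a raw buffer, so copying is disabled

yolact& operator=(const yolact&) = delete;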

void detect(Mat& srcimg);

private:

const int target_size = 550;

const float MEANS[3] = { 123.68, 116.78, 103.94 };

const float STD[3] = { 58.40, 57.12, 57.38 };

float confidence_threshold;

float nms_threshold;

int keep_top_k;

const int conv_ws[5] = { 69, 35, 18, 9, 5 };

const int conv_hs[5] = { 69, 35, 18, 9, 5 };

const float aspect_ratios[3] = { 1.f, 0.5f, 2.f };

const float scales[5] = { 24.f, 48.f, 96.f, 192.f, 384.f };

const float var[4] = { 0.1f, 0.1f, 0.2f, 0.2f };

const int mask_h = 138;

const int mask_w = 138;

int num_priors;

float* priorbox;

Net net;

void normalize(Mat& img);

void sigmoid(Mat& out, int length);

};

yolact::yolact(float confThreshold, float nmsThreshold, const int keep_top_k)

{

this->confidence_threshold = confThreshold;

this->nms_threshold = nmsThreshold;

this->keep_top_k = keep_top_k;

this->net = readNet("yolact_base_54_800000.onnx");

this->num_priors = 0;

int p = 0;

for (p = 0; p < 5; p++)

{

this->num_priors += this->conv_ws[p] * this->conv_hs[p] * 3;

}

this->priorbox = new float[4 * this->num_priors];

// generate priorbox

float* pb = priorbox;

for (p = 0; p < 5; p++)

{

int conv_w = this->conv_ws[p];

int conv_h = this->conv_hs[p];

float scale = this->scales[p];

for (int i = 0; i < conv_h; i++)

{

for (int j = 0; j < conv_w; j++)

{

// +0.5 because priors are in center-size notation

float cx = (j + 0.5f) / conv_w;

float cy = (i + 0.5f) / conv_h;

for (int k = 0; k < 3; k++)

{

float ar = aspect_ratios[k];

ar = sqrt(ar);

float w = scale * ar / this->target_size;

float h = scale / ar / this->target_size;

// This is for backward compatibility with a bug where I made everything square by accident

// cfg.backbone.use_square_anchors:

h = w;

pb[0] = cx;

pb[1] = cy;

pb[2] = w;

pb[3] = h;

pb += 4;

}

}

}

}

}

void yolact::normalize(Mat& img)

{

img.convertTo(img, CV_32F);

int i = 0, j = 0;

for (i = 0; i < img.rows; i++)

{

float* pdata = (float*)(img.data + i * img.step);

for (j = 0; j < img.cols; j++)

{

pdata[0] = (pdata[0] - this->MEANS[0]) / this->STD[0];

pdata[1] = (pdata[1] - this->MEANS[1]) / this->STD[1];

pdata[2] = (pdata[2] - this->MEANS[2]) / this->STD[2];

pdata += 3;

}

}

}

void yolact::sigmoid(Mat& out, int length)

{

float* pdata = (float*)(out.data);

int i = 0;

for (i = 0; i < length; i++)

{

pdata[i] = 1.0f / (1.0f + expf(-pdata[i]));

}

}

void yolact::detect(Mat& srcimg)

{

int img_w = srcimg.cols;

int img_h = srcimg.rows;

Mat img;

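// fx and fy are 0 because the target size is given explicitly; the interpolation flag is the sixth argument

resize(srcimg, img, Size(this->target_size, this->target_size), 0, 0, INTER_LINEAR);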

cvtColor(img, img, COLOR_BGR2RGB);

this->normalize(img);

Mat blob = blobFromImage(img);

this->net.setInput(blob);

vector<Mat> outs;

this->net.forward(outs, this->net.getUnconnectedOutLayersNames());

// generate proposals

vector<int> classIds;

vector<float> confidences;

vector<Rect> boxes;

vector<int> maskIds;

const int num_class = outs[1].cols;

for (int i = 0; i < this->num_priors; i++)

{

Mat scores = outs[1].row(i).colRange(1, num_class);

Point classIdPoint;

double score;

// Get the value and location of the maximum score

minMaxLoc(scores, 0, &score, 0, &classIdPoint);

if (score > this->confidence_threshold)

{

const float* loc = (float*)outs[0].data + i * 4;

const float* pb = this->priorbox + i * 4;

float pb_cx = pb[0];

float pb_cy = pb[1];

float pb_w = pb[2];

float pb_h = pb[3];

float bbox_cx = var[0] * loc[0] * pb_w + pb_cx;

float bbox_cy = var[1] * loc[1] * pb_h + pb_cy;

float bbox_w = (float)(exp(var[2] * loc[2]) * pb_w);

float bbox_h = (float)(exp(var[3] * loc[3]) * pb_h);

float obj_x1 = bbox_cx - bbox_w * 0.5f;

float obj_y1 = bbox_cy - bbox_h * 0.5f;

float obj_x2 = bbox_cx + bbox_w * 0.5f;

float obj_y2 = bbox_cy + bbox_h * 0.5f;

// clip

obj_x1 = max(min(obj_x1 * img_w, (float)(img_w - 1)), 0.f);

obj_y1 = max(min(obj_y1 * img_h, (float)(img_h - 1)), 0.f);

obj_x2 = max(min(obj_x2 * img_w, (float)(img_w - 1)), 0.f);

obj_y2 = max(min(obj_y2 * img_h, (float)(img_h - 1)), 0.f);

classIds.push_back(classIdPoint.x);

confidences.push_back(score);

boxes.push_back(Rect((int)obj_x1, (int)obj_y1, (int)(obj_x2 - obj_x1 + 1), (int)(obj_y2 - obj_y1 + 1)));

maskIds.push_back(i);

}

}

// Perform non maximum suppression to eliminate redundant overlapping boxes with

// lower confidences

vector<int> indices;

NMSBoxes(boxes, confidences, this->confidence_threshold, this->nms_threshold, indices, 1.f, this->keep_top_k);

for (size_t i = 0; i < indices.size(); ++i)

{

int idx = indices[i];

Rect box = boxes[idx];

int xmax = box.x + box.width;

int ymax = box.y + box.height;

rectangle(srcimg, Point(box.x, box.y), Point(xmax, ymax), Scalar(0, 0, 255), 3);

//Get the label for the class name and its confidence

char text[256];

snprintf(text, sizeof(text), "%s: %.2f", class_names[classIds[idx] + 1], confidences[idx]);

//Display the label at the top of the bounding box

int baseLine;

Size labelSize = getTextSize(text, FONT_HERSHEY_SIMPLEX, 0.75, 1, &baseLine);

int ymin = max(box.y, labelSize.height);

//rectangle(frame, Point(left, top - int(1.5 * labelSize.height)), Point(left + int(1.5 * labelSize.width), top + baseLine), Scalar(0, 255, 0), FILLED);

putText(srcimg, text, Point(box.x, ymin), FONT_HERSHEY_SIMPLEX, 0.75, Scalar(0, 255, 0), 1);

Mat mask(this->mask_h, this->mask_w, CV_32FC1);

mask = cv::Scalar(0.f);

int channel = outs[2].cols;

int area = this->mask_h * this->mask_w;

float* coeff = (float*)outs[2].data + maskIds[idx] * channel;

float* pm = (float*)mask.data;

const float* pmaskmap = (float*)outs[3].data;

for (int j = 0; j < area; j++)

{

for (int p = 0; p < channel; p++)

{

pm[j] += pmaskmap[p] * coeff[p];

}

pmaskmap += channel;

}

this->sigmoid(mask, area);

Mat mask2;

resize(mask, mask2, Size(img_w, img_h));

// draw mask

for (int y = 0; y < img_h; y++)

{

// ptr<>() honors the row stride, so this also works for non-continuous Mats

const float* pmask = mask2.ptr<float>(y);

uchar* p = srcimg.ptr<uchar>(y);

for (int x = 0; x < img_w; x++)

{

if (pmask[x] > 0.5)

{

p[0] = (uchar)(p[0] * 0.5 + colors[classIds[idx] + 1][0] * 0.5);

p[1] = (uchar)(p[1] * 0.5 + colors[classIds[idx] + 1][1] * 0.5);

p[2] = (uchar)(p[2] * 0.5 + colors[classIds[idx] + 1][2] * 0.5);

}

p += 3;

}

}

}

}
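The box decoding inside `yolact::detect` can be isolated as a small function. The sketch below mirrors the arithmetic in the listing above (variances 0.1, 0.1, 0.2, 0.2, center-size priors, scaling to pixels, clipping to the image) without any OpenCV dependency. The `Prior` and `Corners` types and the `decodeBox` name are hypothetical and not part of the original code.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>

// Normalized anchor in center-size form (hypothetical helper type).
struct Prior { float cx, cy, w, h; };

// Decoded box in pixel coordinates, corner form (hypothetical helper type).
struct Corners { float x1, y1, x2, y2; };

// Decode one regression output `loc` against its prior box and clip the
// result to an image of size imgW x imgH.
Corners decodeBox(const Prior& pb, const std::array<float, 4>& loc,
                  int imgW, int imgH) {
    assert(imgW > 0 && imgH > 0);
    const float var[4] = {0.1f, 0.1f, 0.2f, 0.2f};

    // Center offsets are scaled by the prior size; sizes are log-encoded.
    const float cx = var[0] * loc[0] * pb.w + pb.cx;
    const float cy = var[1] * loc[1] * pb.h + pb.cy;
    const float w = std::exp(var[2] * loc[2]) * pb.w;
    const float h = std::exp(var[3] * loc[3]) * pb.h;

    // Clamp a pixel coordinate into [0, hi].
    auto clampTo = [](float v, float hi) {
        return std::max(std::min(v, hi), 0.f);
    };

    return {clampTo((cx - 0.5f * w) * imgW, imgW - 1.f),
            clampTo((cy - 0.5f * h) * imgH, imgH - 1.f),
            clampTo((cx + 0.5f * w) * imgW, imgW - 1.f),
            clampTo((cy + 0.5f * h) * imgH, imgH - 1.f)};
}
```

With a prior centered at (0.5, 0.5) of size 0.2 x 0.2 and all-zero offsets, `decodeBox` returns a box spanning roughly 40 to 60 pixels on both axes of a 100 x 100 image. A prior near the right border is clipped to `imgW - 1`.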

// config.hpp //

extern const char* class_names[];

extern const unsigned char colors[81][3];

// config.cpp //

#include "config.hpp"

extern const char* class_names[] = {

"background",

"person", "bicycle", "car", "motorcycle", "airplane", "bus",

"train", "truck", "boat", "traffic light", "fire hydrant",

"stop sign", "parking meter", "bench", "bird", "cat", "dog",

"horse", "sheep", "cow", "elephant", "bear", "zebra", "giraffe",

"backpack", "umbrella", "handbag", "tie", "suitcase", "frisbee",

"skis", "snowboard", "sports ball", "kite", "baseball bat",

"baseball glove", "skateboard", "surfboard", "tennis racket",

"bottle", "wine glass", "cup", "fork", "knife", "spoon", "bowl",

"banana", "apple", "sandwich", "orange", "broccoli", "carrot",

"hot dog", "pizza", "donut", "cake", "chair", "couch",

"potted plant", "bed", "dining table", "toilet", "tv", "laptop",

"mouse", "remote", "keyboard", "cell phone", "microwave", "oven",

"toaster", "sink", "refrigerator", "book", "clock", "vase",

"scissors", "teddy bear", "hair drier", "toothbrush"

};

extern const unsigned char colors[81][3] = {

{56, 0, 255}, {226, 255, 0}, {0, 94, 255},
{0, 37, 255}, {0, 255, 94}, {255, 226, 0}, {0, 18, 255}, {255, 151, 0},
{170, 0, 255}, {0, 255, 56}, {255, 0, 75}, {0, 75, 255}, {0, 255, 169},
{255, 0, 207}, {75, 255, 0}, {207, 0, 255}, {37, 0, 255}, {0, 207, 255},
{94, 0, 255}, {0, 255, 113}, {255, 18, 0}, {255, 0, 56}, {18, 0, 255},
{0, 255, 226}, {170, 255, 0}, {255, 0, 245}, {151, 255, 0}, {132, 255, 0},
{75, 0, 255}, {151, 0, 255}, {0, 151, 255}, {132, 0, 255}, {0, 255, 245},
{255, 132, 0}, {226, 0, 255}, {255, 37, 0}, {207, 255, 0},
{0, 255, 207}, {94, 255, 0}, {0, 226, 255},
{56, 255, 0}, {255, 94, 0}, {255, 113, 0}, {0, 132, 255}, {255, 0, 132},
{255, 170, 0}, {255, 0, 188}, {113, 255, 0}, {245, 0, 255}, {113, 0, 255},
{255, 188, 0}, {0, 113, 255}, {255, 0, 0}, {0, 56, 255}, {255, 0, 113},
{0, 255, 188}, {255, 0, 94}, {255, 0, 18}, {18, 255, 0}, {0, 255, 132},
{0, 188, 255}, {0, 245, 255}, {0, 169, 255}, {37, 255, 0},
{255, 0, 151}, {188, 0, 255}, {0, 255, 37}, {0, 255, 0},
{255, 0, 170}, {255, 0, 37}, {255, 75, 0}, {0, 0, 255}, {255, 207, 0},
{255, 0, 226}, {255, 245, 0}, {188, 255, 0}, {0, 255, 18}, {0, 255, 75},
{0, 255, 151}, {255, 56, 0}, {245, 255, 0}

};
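As a closing note on the mask step in `yolact::detect`: each instance mask is a linear combination of the prototype maps, weighted by that detection's mask coefficients and passed through a sigmoid. The sketch below reproduces that loop on plain vectors, assuming the prototypes are stored pixel-major with the channels of one pixel contiguous, as the pointer arithmetic in the listing implies. The `assembleMask` name and its signature are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Combine prototype maps into one instance mask.
//   protos: area * channels values, the channels of each pixel stored contiguously
//   coeffs: one coefficient per prototype channel for the chosen detection
// Returns `area` probabilities in (0, 1); a pixel belongs to the instance
// when its value is above 0.5.
std::vector<float> assembleMask(const std::vector<float>& protos,
                                const std::vector<float>& coeffs,
                                std::size_t area) {
    const std::size_t channels = coeffs.size();
    assert(protos.size() == area * channels);

    std::vector<float> mask(area);
    for (std::size_t j = 0; j < area; ++j) {
        // Dot product of this pixel's prototype vector with the coefficients.
        float acc = 0.f;
        for (std::size_t p = 0; p < channels; ++p) {
            acc += protos[j * channels + p] * coeffs[p];
        }
        // Sigmoid turns the raw response into a probability.
        mask[j] = 1.f / (1.f + std::exp(-acc));
    }
    return mask;
}
```

In the article's setup the mask is 138 x 138, so `area` would be 138 * 138 and the channel count comes from the width of the coefficient output. The result is then resized to the source image size before the 0.5 threshold is applied.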

Copyright notice

This article was written by [qq_ thirty-seven million one hundred and seventy-two thousand o]. Please include a link to the original when reposting. Thanks.

https://yzsam.com/2022/04/202204230350397758.html

边栏推荐

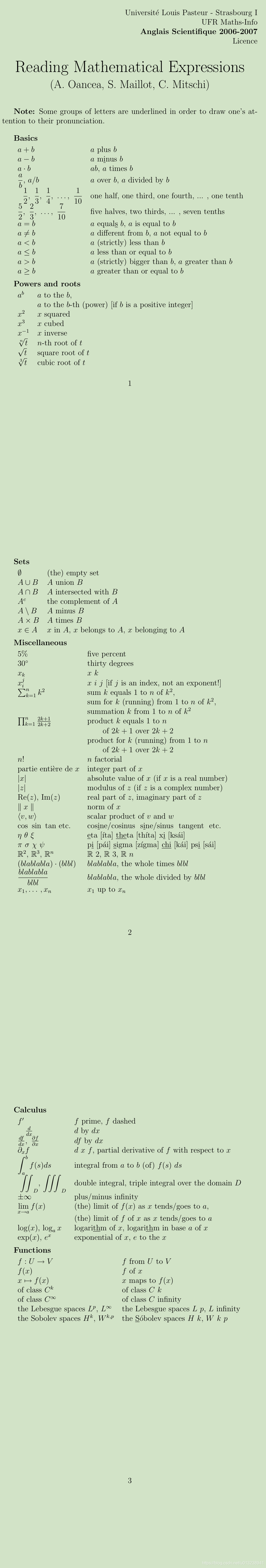

- [mapping program design] coordinate azimuth calculation artifact (version C)

- 创下国产手机在海外市场销量最高纪录的小米,重新关注国内市场

- Vscode download and installation + running C language

- Installation and configuration of MinGW under win10

- A sword is a sword. There is no difference between a wooden sword and a copper sword

- vscode删除卸载残余

- Why is it necessary to divide the variance by 255^2 when adding Gaussian noise using the imnoise function of MATLAB

- Picture synthesis video

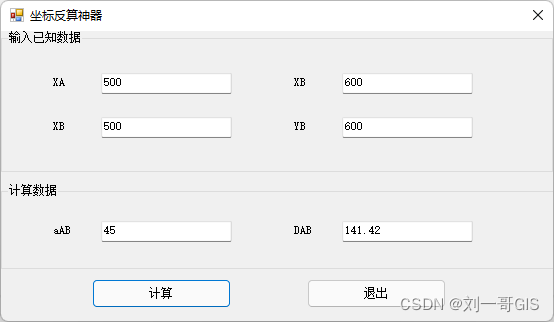

- STM32 advanced timer com event

- AI CC 2019 installation tutorial under win10 (super detailed - small white version)

猜你喜欢

Express中间件①(中间件的使用)

OpenCV----YOLACT实例分割模型推理

What if win10 doesn't have a local group policy?

【BIM入门实战】Revit中的墙体层次以及常见问题解答

![[BIM introduction practice] Revit building wall: detailed picture and text explanation of structure, envelope and lamination](/img/cb/86b5898609800a80592fceb782503f.png)

[BIM introduction practice] Revit building wall: detailed picture and text explanation of structure, envelope and lamination

![[AI vision · quick review of robot papers today, issue 28] wed, 1 Dec 2021](/img/c8/90d020d192fe791c4dec5f4161e597.png)

[AI vision · quick review of robot papers today, issue 28] wed, 1 Dec 2021

What if you encounter symbols you don't know in mathematical formulas

【测绘程序设计】坐标反算神器V1.0(附C/C#/VB源程序)

STM32 advanced timer com event

作为一名码农,女友比自己更能码是一种什么体验?

随机推荐

Mysql出现2013 Lost connection to MySQL server during query

The great gods in acmer like mathematics very much

Key point detection of human hand based on mediapipe

The super large image labels in remote sensing data set are cut into specified sizes and saved into coco data set - target detection

减治思想——二分查找详细总结

【ICCV 2019】MAP-VAE:Multi-Angle Point Cloud-VAE: Unsupervised Feature Learning for 3D Point Clouds..

標識符、關鍵字、數據類型

Basic knowledge of convolutional neural network

Basic usage of Google colab (I)

What if win10 doesn't have a local group policy?

Common auxiliary classes

[BIM introduction practice] Revit building wall: detailed picture and text explanation of structure, envelope and lamination

将编译安装的mysql加入PATH环境变量

Picture synthesis video

RuntimeError: output with shape [4, 1, 512, 512] doesn‘t match the broadcast shape[4, 4, 512, 512]

ERROR: Could not find a version that satisfies the requirement win32gui

OpenCV----YOLACT实例分割模型推理

AI CC 2019 installation tutorial under win10 (super detailed - small white version)

Cmake qmake simple knowledge

标识符、关键字、数据类型