Scrapy tutorial - (2) Write a simple crawler

2022-04-23 20:22:00 【Winding Liao】

Goal: crawl every book on the page and collect its title, price, URL, stock, rating, and cover image. This article uses the practice site http://books.toscrape.com/ as the example.

Check ROBOTSTXT_OBEY

After creating the Scrapy project, open settings.py, find ROBOTSTXT_OBEY, and set it to False.

(This setting tells Scrapy not to obey the site's robots.txt. Only do this after getting the site's permission. Note: the site used here is a sample site built for practice.)

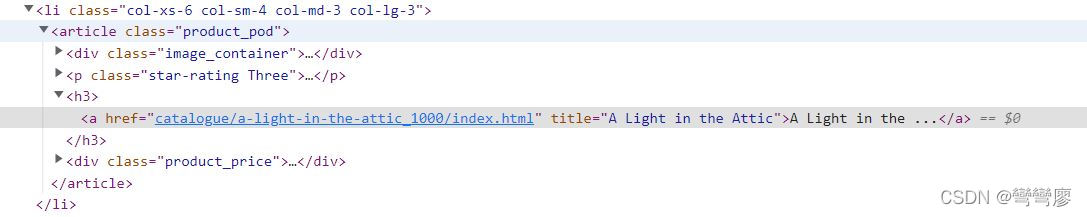
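For reference, the change amounts to a single line. The fragment below is a minimal sketch of that line only; the rest of the generated settings.py is assumed to stay as it is.

```python
# settings.py (fragment): only the setting discussed above is shown.
# False turns off Scrapy's robots.txt check. Use it only where you have
# permission, for example on a site built for scraping practice.
ROBOTSTXT_OBEY = False
```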

Locate the elements

Back on the example site, press F12 to open the developer tools.

Start with a few small exercises to get familiar with XPath.

First, each book title is stored in the title attribute of the a tag inside an h3 tag. Its XPath is:

    # Parse the book titles
    response.xpath('//h3/a/@title').extract()
    # extract() returns every matching title as a list
    # extract_first() returns only the first matching title

Next, locate the price. Its XPath is:

    # Parse the book prices
    response.xpath('//p[@class="price_color"]/text()').extract()

Finding the URLs matters most, because we first collect the URL of every book, then request each URL and extract the information we want from its page. The XPath is:

    response.xpath('//h3/a/@href').extract_first()
    # Output: 'catalogue/a-light-in-the-attic_1000/index.html'

Request the first book

Looking at the URL, what we just extracted is only the relative part (the suffix) of the book's address, so we have to add the site prefix to get a complete URL. With that in mind, let's write the first function.

    def parse(self, response):
        # Collect the relative URL of every book on the page
        books = response.xpath('//h3/a/@href').extract()
        for book in books:
            # Join the relative URL (suffix) with the page URL (prefix)
            url = response.urljoin(book)
            yield response.follow(url=url, callback=self.parse_book)

    def parse_book(self, response):
        # Filled in in the next section
        pass
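The joining step can be checked on its own. response.urljoin resolves a relative link against the URL of the page it came from, which behaves like urllib.parse.urljoin from the standard library with the page URL as the base. A minimal sketch, assuming the listing page lives at http://books.toscrape.com/:

```python
from urllib.parse import urljoin

# Assumed URL of the listing page that the link was extracted from.
page_url = 'http://books.toscrape.com/'
# Relative link returned by the XPath in the previous section.
relative_link = 'catalogue/a-light-in-the-attic_1000/index.html'

# Prefix and suffix combined into one absolute URL.
absolute_link = urljoin(page_url, relative_link)
print(absolute_link)
```

response.follow also accepts relative URLs directly, so the explicit urljoin call in parse is optional; it is kept there to make the step visible.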

Parse the data

    def parse_book(self, response):
        title = response.xpath('//h1/text()').extract_first()
        price = response.xpath('//*[@class="price_color"]/text()').extract_first()
        image_url = response.xpath('//img/@src').extract_first()
        # Turn the relative image path into an absolute URL
        image_url = image_url.replace('../../', 'http://books.toscrape.com/')
        rating = response.xpath('//*[contains(@class, "star-rating")]/@class').extract_first()
        # Drop the "star-rating" prefix and the leftover whitespace, keeping only the rating word
        rating = rating.replace('star-rating', '').strip()
        description = response.xpath('//*[@id="product_description"]/following-sibling::p/text()').extract_first()
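The raw strings extracted here usually need a little cleanup before they are useful. The helpers below are one possible sketch using only the standard library; the function names, the word-to-number table, the sample price, and the sample image path are all assumptions for illustration and are not part of the original spider.

```python
from decimal import Decimal
from urllib.parse import urljoin

# Assumed mapping from the rating word in the class attribute to a number.
RATING_WORDS = {'One': 1, 'Two': 2, 'Three': 3, 'Four': 4, 'Five': 5}


def parse_rating(class_attr):
    """Return the numeric rating from a class string such as 'star-rating Three'."""
    for word in class_attr.split():
        if word in RATING_WORDS:
            return RATING_WORDS[word]
    return None


def parse_price(text):
    """Strip the currency symbol and return the amount as a Decimal."""
    digits = ''.join(ch for ch in text if ch.isdigit() or ch == '.')
    return Decimal(digits)


def absolute_image_url(page_url, src):
    """Resolve a relative src such as '../../media/...' against the book page URL."""
    return urljoin(page_url, src)


book_page = 'http://books.toscrape.com/catalogue/a-light-in-the-attic_1000/index.html'
print(parse_rating('star-rating Three'))
print(parse_price('£10.00'))  # placeholder price
print(absolute_image_url(book_page, '../../media/cover.jpg'))  # hypothetical path
```

Resolving the image path with urljoin avoids hard-coding the host, which is what the replace('../../', ...) call in parse_book relies on.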

View the parsed results

Use yield to output the parsed results:

    # Inside the parse_book function
    yield {
        'title': title,
        'price': price,
        'image_url': image_url,
        'rating': rating,
        'description': description,
    }

Complete the simple crawler

    def parse(self, response):
        # Collect the relative URL of every book on the page
        books = response.xpath('//h3/a/@href').extract()
        for book in books:
            # Join the relative URL (suffix) with the page URL (prefix)
            url = response.urljoin(book)
            yield response.follow(url=url, callback=self.parse_book)

    def parse_book(self, response):
        title = response.xpath('//h1/text()').extract_first()
        price = response.xpath('//*[@class="price_color"]/text()').extract_first()
        image_url = response.xpath('//img/@src').extract_first()
        # Turn the relative image path into an absolute URL
        image_url = image_url.replace('../../', 'http://books.toscrape.com/')
        rating = response.xpath('//*[contains(@class, "star-rating")]/@class').extract_first()
        # Drop the "star-rating" prefix and the leftover whitespace, keeping only the rating word
        rating = rating.replace('star-rating', '').strip()
        description = response.xpath('//*[@id="product_description"]/following-sibling::p/text()').extract_first()
        yield {
            'title': title,
            'price': price,
            'image_url': image_url,
            'rating': rating,
            'description': description,
        }

Run the crawler

    scrapy crawl <your_spider_name>

Copyright notice

This article was written by [Winding Liao]. Please include a link to the original when reposting. Thank you.

https://yzsam.com/2022/04/202204232021221091.html

边栏推荐

- Es index (document name) fuzzy query method (database name fuzzy query method)

- 三十.什么是vm和vc?

- Alicloud: could not connect to SMTP host: SMTP 163.com, port: 25

- 如何做产品创新?——产品创新方法论探索一

- Click an EL checkbox to select all questions

- Don't bother tensorflow learning notes (10-12) -- Constructing a simple neural network and its visualization

- 16MySQL之DCL 中 COMMIT和ROllBACK

- 还在用 ListView?使用 AnimatedList 让列表元素动起来

- The market share of the financial industry exceeds 50%, and zdns has built a solid foundation for the financial technology network

- [graph theory brush question-5] Li Kou 1971 Find out if there is a path in the graph

猜你喜欢

Development of Matlab GUI bridge auxiliary Designer (functional introduction)

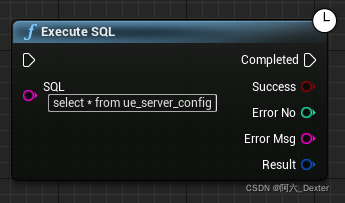

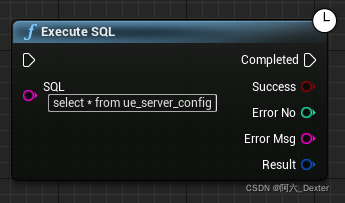

SQL Server connectors by thread pool 𞓜 instructions for dtsqlservertp plug-in

SQL Server Connectors By Thread Pool | DTSQLServerTP plugin instructions

DTMF dual tone multi frequency signal simulation demonstration system

PCL点云处理之计算两平面交线(五十一)

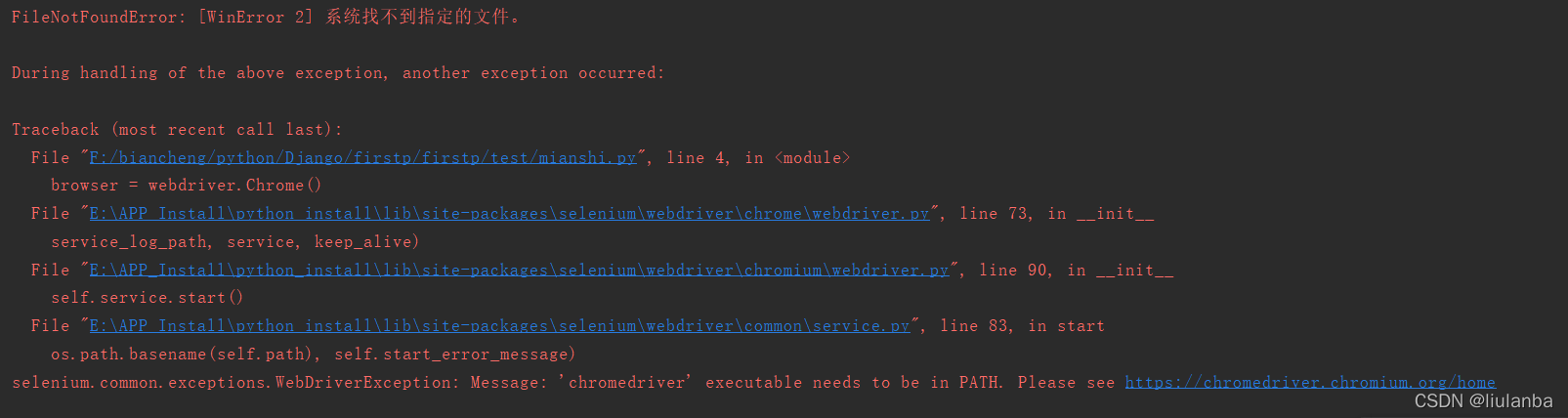

selenium.common.exceptions.WebDriverException: Message: ‘chromedriver‘ executable needs to be in PAT

Building the tide, building the foundation and winning the future -- the successful holding of zdns Partner Conference

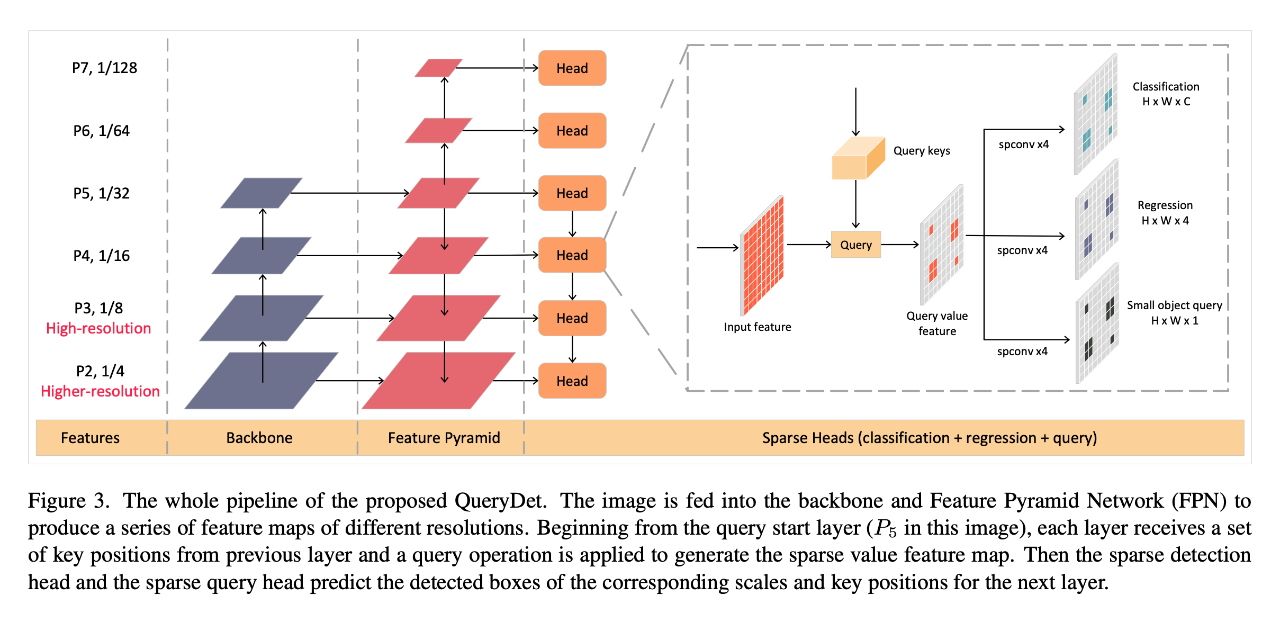

CVPR 2022 | querydet: use cascaded sparse query to accelerate small target detection under high resolution

![Es error: request contains unrecognized parameter [ignore_throttled]](/img/17/9131c3eb023b94b3e06b0e1a56a461.png)

Es error: request contains unrecognized parameter [ignore_throttled]

16MySQL之DCL 中 COMMIT和ROllBACK

随机推荐

AQS learning

ArcGIS js api 4. X submergence analysis and water submergence analysis

selenium. common. exceptions. WebDriverException: Message: ‘chromedriver‘ executable needs to be in PAT

Numpy mathematical function & logical function

[problem solving] 'ASCII' codec can't encode characters in position XX XX: ordinal not in range (128)

【PTA】L2-011 玩转二叉树

Servlet learning notes

R language ggplot2 visualization: ggplot2 visualizes the scatter diagram and uses geom_ mark_ The ellipse function adds ellipses around data points of data clusters or data groups for annotation

Cadence Orcad Capture CIS更换元器件之Link Database 功能介绍图文教程及视频演示

Experience of mathematical modeling in 18 year research competition

还在用 ListView?使用 AnimatedList 让列表元素动起来

On BIM data redundancy theory

一. js的深拷贝和浅拷贝

After route link navigation, the sub page does not display the navigation style problem

2022DASCTF Apr X FATE 防疫挑战赛 CRYPTO easy_real

Redis installation (centos7 command line installation)

【栈和队列专题】—— 滑动窗口

论文写作 19: 会议论文与期刊论文的区别

SIGIR'22 "Microsoft" CTR estimation: using context information to promote feature representation learning

論文寫作 19: 會議論文與期刊論文的區別