Mofan tensorflow learning notes (10-12) -- Constructing a simple neural network and its visualization

2022-04-23 20:14:00 【Trabecular trying to write code】

This note is based on the Tensorflow course by Mofan Python.

Personally, I don't think Mofan's video tutorials suit complete beginners. If you are a beginner, watch the lectures by Li Hongyi or Wu Enda first, or read up on the theory directly. Mofan's tutorials suit students who already have some theoretical knowledge of deep learning but lack a coding foundation (like me). After following along and writing the code once, I have a reasonable understanding of how to use tensorflow and have picked up some neural network training techniques.

The following corresponds to Tensorflow course (10), (11) and (12) in the videos. I will record it according to Mofan's explanation and my own understanding.

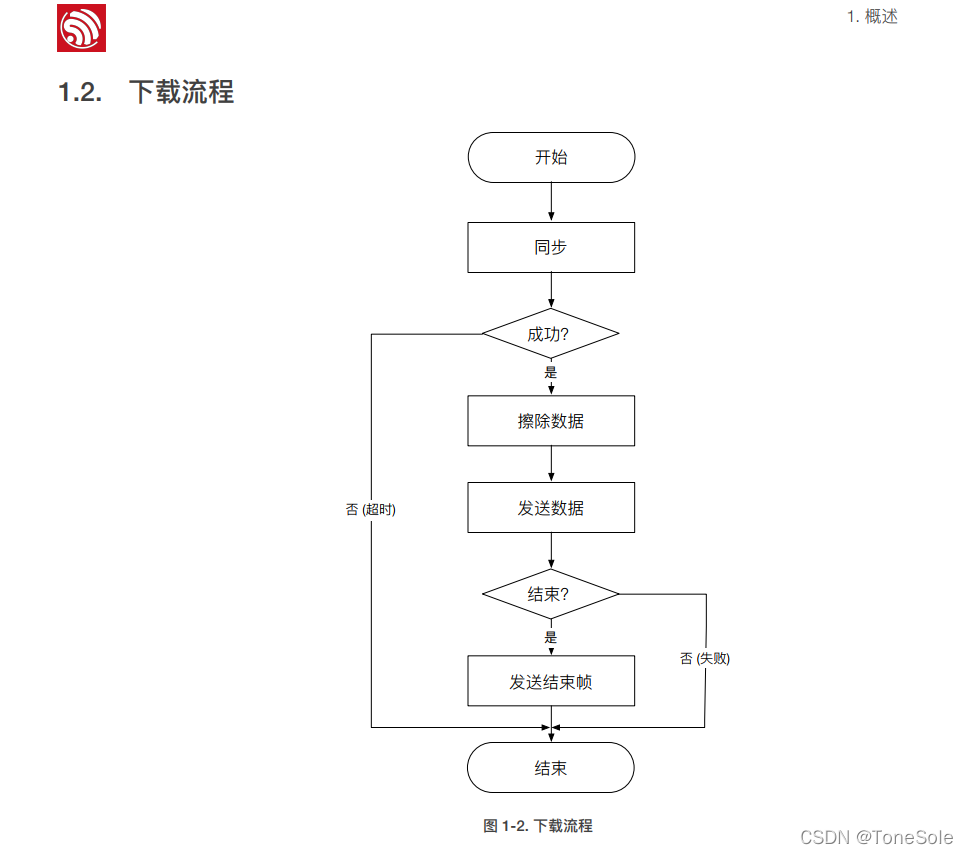

I. Understanding the structure of neural networks

The purpose of this tutorial is to build the simplest neural network. The simplest structure contains only one input layer, one hidden layer and one output layer. Each layer contains neurons. The number of neurons in the hidden layer is a hyperparameter, while the number of neurons in the input layer and the output layer is tied to the input data and the output data. For instance, the input data defined in the program is x_data, so the number of neurons in the input layer depends on the number of features (attributes) in x_data. Be careful: it does not depend on the number of samples in x_data! The same goes for the output layer.

The hidden layer should not have too many neurons: the "deep" in deep learning should show up in the number of hidden layers, and a single layer with too many neurons would be better called breadth learning. The number of hidden layers matters too, because blindly adding hidden layers leads to overfitting. Designing the hidden layers is therefore a skill that relies on experience.
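To make the features-versus-samples point concrete, here is a minimal NumPy sketch. It is not part of Mofan's code: the helper name `layer_sizes` is made up for this note, and the hidden size of 10 is simply the value used later in the tutorial.

```python
import numpy as np

def layer_sizes(x, y, hidden=10):
    """Return (input, hidden, output) neuron counts for 2-D data arrays.

    hidden is a hyperparameter chosen by hand; the other two sizes
    are read off the number of columns (features) of x and y.
    """
    n_in = x.shape[1]   # features per sample, not x.shape[0] (samples)
    n_out = y.shape[1]
    return n_in, hidden, n_out

# Built the same way as the tutorial data: 300 samples, 1 feature each
x_data = np.linspace(-1, 1, 300)[:, np.newaxis]
y_data = np.square(x_data) - 0.5

print(x_data.shape)                  # (300, 1)
print(layer_sizes(x_data, y_data))   # (1, 10, 1)
```

Even though there are 300 samples, the input layer needs a single neuron because each sample carries a single value.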

II. Code details

1. Defining the layer-creation function add_layer()

    import tensorflow as tf  # TensorFlow 1.x API (tf.placeholder, tf.Session)

    def add_layer(inputs, in_size, out_size, activation_function=None):
        # Weights: in_size x out_size matrix drawn from a normal distribution
        Weights = tf.Variable(tf.random_normal([in_size, out_size]))
        # biases: 1 x out_size row, initialized to 0.1 instead of 0
        biases = tf.Variable(tf.zeros([1, out_size]) + 0.1)
        Wx_plus_b = tf.matmul(inputs, Weights) + biases
        if activation_function is None:
            # no activation function: the layer output stays linear
            outputs = Wx_plus_b
        else:
            outputs = activation_function(Wx_plus_b)
        return outputs

We first define this function as the basis for creating the layers of the network later on. One thing needs special attention here: in many cases the bias is initialized to 0, but we don't want a zero bias, so 0.1 is added to the zeros in biases to guarantee that the initial value is not zero.

About the check on activation_function: in the connection between the input layer and the hidden layer, we need an activation function to transform the data non-linearly, but in the connection between the hidden layer and the output layer we no longer need one. That is why the function contains a condition: when no activation function is needed, it outputs Wx_plus_b directly; when one is needed, it passes Wx_plus_b through the activation function and outputs the result.

2. Data import and building the neural network
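The forward computation of add_layer can also be followed without TensorFlow. The sketch below is a NumPy counterpart under my own naming (`dense_forward` and `relu` are hypothetical helpers, not TensorFlow functions); it only mirrors the matrix product, the 0.1 bias and the optional activation described above.

```python
import numpy as np

def relu(z):
    # element-wise max(0, z), the same function tf.nn.relu computes
    return np.maximum(z, 0.0)

def dense_forward(inputs, weights, biases, activation=None):
    """NumPy counterpart of add_layer's forward computation."""
    wx_plus_b = inputs @ weights + biases   # biases broadcast over the rows
    if activation is None:
        return wx_plus_b                    # linear output
    return activation(wx_plus_b)

rng = np.random.default_rng(0)              # fixed seed, only for repeatability
x = np.array([[-1.0], [0.0], [1.0]])        # 3 samples, 1 feature
w1, b1 = rng.normal(size=(1, 10)), np.full((1, 10), 0.1)
w2, b2 = rng.normal(size=(10, 1)), np.full((1, 1), 0.1)

hidden = dense_forward(x, w1, b1, activation=relu)   # shape (3, 10)
output = dense_forward(hidden, w2, b2)               # shape (3, 1)
print(hidden.shape, output.shape)
```

For the sample x = 0 the weighted sum vanishes, so every hidden neuron outputs exactly the bias 0.1; with a zero bias that sample would produce an all-zero hidden layer.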

    import numpy as np

    # 300 points in [-1, 1], reshaped into a column: shape (300, 1)
    x_data = np.linspace(-1, 1, 300)[:, np.newaxis]
    noise = np.random.normal(0, 0.05, x_data.shape)
    y_data = np.square(x_data) - 0.5 + noise

    # None lets the number of samples vary; each sample has 1 feature
    xs = tf.placeholder(tf.float32, [None, 1])
    ys = tf.placeholder(tf.float32, [None, 1])

    # l1 is the hidden layer: 1 input, 10 neurons, relu activation function
    l1 = add_layer(xs, 1, 10, activation_function=tf.nn.relu)
    # prediction is the output layer: 10 inputs, 1 output, no activation function
    prediction = add_layer(l1, 10, 1, activation_function=None)

    # loss function is based on MSE (mean square error)
    loss = tf.reduce_mean(tf.reduce_sum(tf.square(ys - prediction), reduction_indices=[1]))
    train_step = tf.train.GradientDescentOptimizer(0.1).minimize(loss)

    init = tf.global_variables_initializer()  # initialize all variables
    sess = tf.Session()
    sess.run(init)  # very important

We add noise when generating the data. With the noise, y_data no longer follows the univariate quadratic function exactly, and its values are randomly perturbed. The noise is added alongside the constant term (the bias position) of the quadratic, so the plot of y against x is a noisy parabola.
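The effect of the noise on the loss can be checked with NumPy alone. This is a sketch under my own assumptions: `mse_loss` is a hypothetical helper that mirrors the reduce_sum / reduce_mean loss above, and the seeded generator is used only so the numbers are repeatable.

```python
import numpy as np

def mse_loss(y_true, y_pred):
    # sum the squared error over each sample's outputs (axis 1),
    # then average over the samples, mirroring the tutorial's loss
    return np.mean(np.sum(np.square(y_true - y_pred), axis=1))

rng = np.random.default_rng(0)                # fixed seed, an assumption for repeatability
x_data = np.linspace(-1, 1, 300)[:, np.newaxis]
noise = rng.normal(0, 0.05, x_data.shape)
y_data = np.square(x_data) - 0.5 + noise

clean_curve = np.square(x_data) - 0.5         # the parabola without noise
loss_clean = mse_loss(y_data, clean_curve)    # close to 0.05 ** 2 = 0.0025
loss_zero = mse_loss(y_data, np.zeros_like(y_data))  # a constant-zero guess
print(loss_clean, loss_zero)
```

Even a perfect fit of the underlying parabola leaves a loss of roughly the noise variance, so a training loss that levels off around that value is expected rather than a sign of a problem.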

Here we use the placeholder function in tensorflow. When the graph of the neural network is being built, placeholder() does not pass the input data into the model yet; it only reserves a place for it. Once a session has been created and the model is run inside it, the data is passed to the placeholders through the feed_dict argument of sess.run().

The advantage of placeholder is that you can choose how much data to pass in: the None in the shape [None, 1] leaves the number of samples open. In deep learning, training is generally not run on the whole data set at once. Stochastic gradient descent (Stochastic Gradient Descent), for example, trains on the data in mini batches.

3. Visualizing neural network training
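The tutorial code feeds the whole data set at every step, but the open batch dimension makes mini batches possible. The sketch below shows one way to cut the data into shuffled mini batches with NumPy; `iterate_minibatches` and the batch size of 64 are my own assumptions, not part of Mofan's code.

```python
import numpy as np

def iterate_minibatches(x, y, batch_size, rng):
    """Yield shuffled (x_batch, y_batch) pairs that cover the data once."""
    order = rng.permutation(len(x))
    for start in range(0, len(x), batch_size):
        idx = order[start:start + batch_size]
        yield x[idx], y[idx]

rng = np.random.default_rng(0)                # fixed seed, only for repeatability
x_data = np.linspace(-1, 1, 300)[:, np.newaxis]
y_data = np.square(x_data) - 0.5

batches = list(iterate_minibatches(x_data, y_data, batch_size=64, rng=rng))
print([xb.shape for xb, _ in batches])
# In the tutorial's session each pair could then be fed as:
#   sess.run(train_step, feed_dict={xs: xb, ys: yb})
```

The last batch is smaller than the others (44 samples instead of 64), which a placeholder with a None dimension accepts without any change to the graph.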

In this program, visualization uses the matplotlib library and displays the training results dynamically. However, when Mofan's code is run directly in jupyter notebook, only a static plot is displayed and the image does not update dynamically. In the YouTube comments under Mofan's video, many viewers also report that, whether in IDLE, a terminal or another environment, even adding %matplotlib inline at the top does not achieve the expected effect. After searching, I found several ways to get a dynamic display in jupyter notebook. The method used here requires Qt to be installed, because jupyter will use Qt as the drawing backend.

The code is as follows:

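    %matplotlib
    # Jupyter magic: declare it when importing the libraries
    import matplotlib.pyplot as plt

    fig = plt.figure()
    ax = fig.add_subplot(1, 1, 1)
    ax.scatter(x_data, y_data)
    plt.ion()  # interactive mode, so plt.show() does not block
    plt.show()

    for i in range(2000):
        sess.run(train_step, feed_dict={xs: x_data, ys: y_data})
        if i % 50 == 0:
            try:
                plt.pause(0.5)
            except Exception:
                pass
            try:
                # remove the previous curve; Artist.remove() also works on
                # newer matplotlib, where ax.lines.remove(...) is not available
                lines[0].remove()
                plt.show()
            except Exception:
                pass  # first pass: no curve has been drawn yet
            prediction_value = sess.run(prediction, feed_dict={xs: x_data})
            lines = ax.plot(x_data, prediction_value, 'r-', lw=10)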

With the dynamic display enabled, the red curve is the curve learned by the network and the blue dots are the true (x, y) data points. You can see that after training we get a curve that roughly matches the trend of the data.

That's the whole content of the tutorial. If you have any questions, please discuss them in the comment area!

Copyright notice

This article was written by [Trabecular trying to write code]. Please include a link to the original when reposting. Thank you.

https://yzsam.com/2022/04/202204210555381778.html

边栏推荐

- Efficient serial port cyclic buffer receiving processing idea and code 2

- 使用 WPAD/PAC 和 JScript在win11中进行远程代码执行

- Mysql database - connection query

- LeetCode动态规划训练营(1~5天)

- Shanda Wangan shooting range experimental platform project - personal record (V)

- 如何在BNB鏈上創建BEP-20通證

- Lpc1768 optimization comparison of delay time and different levels

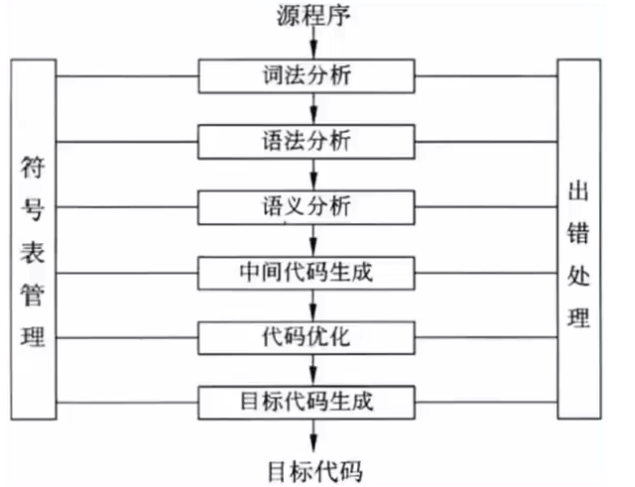

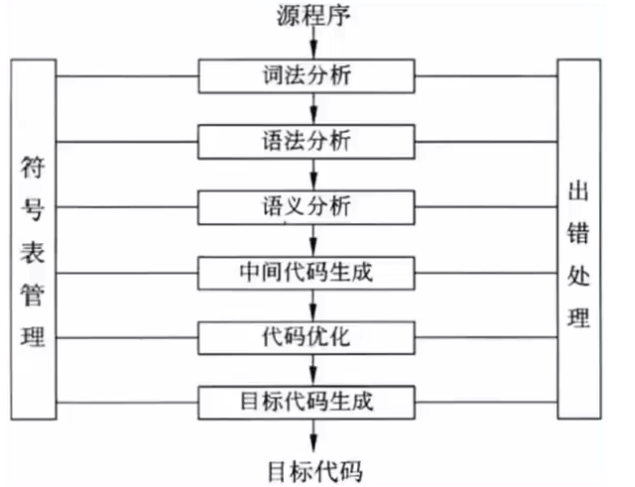

- Fundamentals of programming language (2)

- Change the material of unity model as a whole

- STM32 Basics

猜你喜欢

程序设计语言基础(2)

Wave field Dao new species end up, how does usdd break the situation and stabilize the currency market?

Mysql database backup scheme

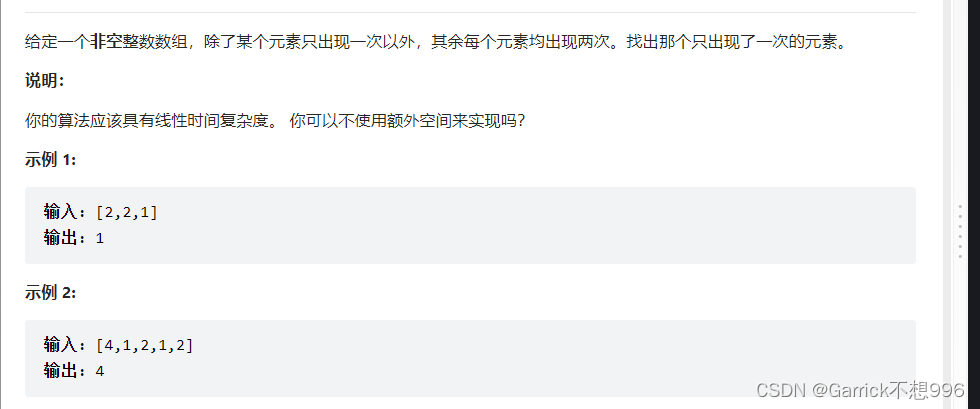

Leetcode XOR operation

The textarea cursor cannot be controlled by the keyboard due to antd dropdown + modal + textarea

Fundamentals of programming language (2)

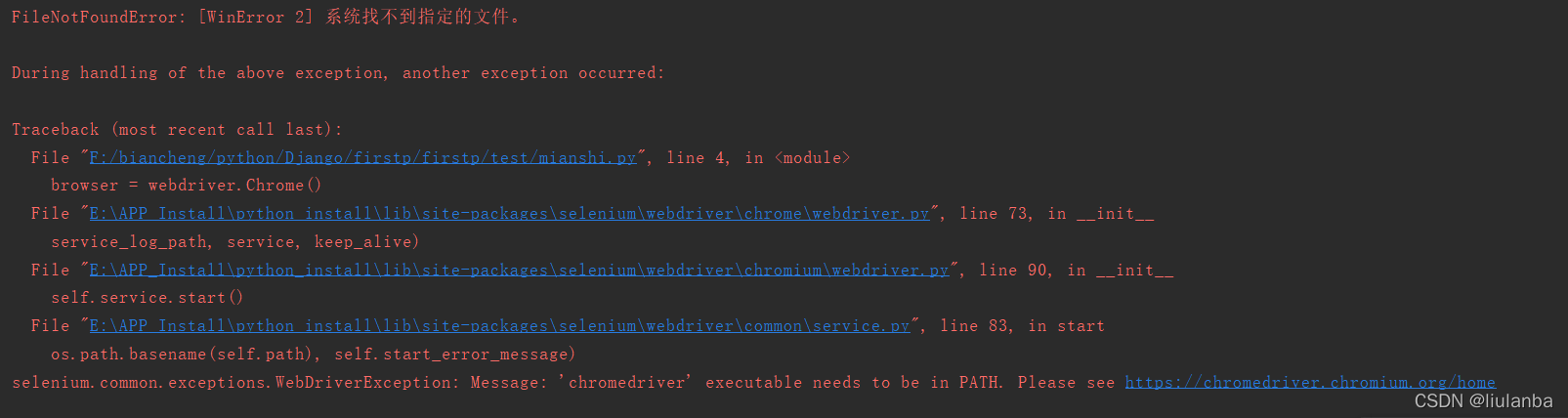

selenium.common.exceptions.WebDriverException: Message: ‘chromedriver‘ executable needs to be in PAT

山东大学软件学院项目实训-创新实训-网络安全靶场实验平台(五)

LeetCode动态规划训练营(1~5天)

Esp8266 - beginner level Chapter 1

随机推荐

NC basic usage

网络通信基础(局域网、广域网、IP地址、端口号、协议、封装、分用)

STM32基础知识

Esp8266 - beginner level Chapter 1

微信中金财富高端专区安全吗,证券如何开户呢

如何在BNB鏈上創建BEP-20通證

MySQL数据库 - 单表查询(一)

Openharmony open source developer growth plan, looking for new open source forces that change the world!

Project training of Software College of Shandong University - Innovation Training - network security shooting range experimental platform (V)

How about Bohai futures. Is it safe to open futures accounts?

[numerical prediction case] (3) LSTM time series electricity quantity prediction, with tensorflow complete code attached

MySQL数据库 - 连接查询

使用 WPAD/PAC 和 JScript在win11中进行远程代码执行1

Redis installation (centos7 command line installation)

[text classification cases] (4) RNN and LSTM film evaluation Tendency Classification, with tensorflow complete code attached

Electron入门教程4 —— 切换应用的主题

Redis cache penetration, cache breakdown, cache avalanche

R语言使用timeROC包计算无竞争风险情况下的生存资料多时间AUC值、使用confint函数计算无竞争风险情况下的生存资料多时间AUC指标的置信区间值

Software College of Shandong University Project Training - Innovation Training - network security shooting range experimental platform (8)

R language ggplot2 visualization: ggplot2 visualizes the scatter diagram and uses geom_ mark_ The ellipse function adds ellipses around data points of data clusters or data groups for annotation