
INT8 quantization and inference of an ONNX model using TensorRT

2022-04-23 07:28:00 【wujpbb7】

Update, 2022-04-06:

A few concepts, clarified:

1. The ONNX model itself must have dynamic dimensions; otherwise it can only be converted to a TRT engine with static dimensions.

2. A single profile is enough. Set the minimum and maximum dimensions, and set the optimal dimensions to the ones used most often. Bind the actual input shape at inference time.

3. builder and config share many settings. If you use config, you do not need to set the same parameters on builder.
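To make the second point concrete, here is a minimal sketch of how the three shapes of a single profile could be derived from an NCHW input. The helper name profile_shapes and its opt_batch_size and opt_wh parameters are hypothetical, and clamping the optimal batch to max_batch_size is an assumption made so that min <= opt <= max always holds.

```python
def profile_shapes(input_shape, max_batch_size=8, opt_batch_size=4,
                   min_wh=(160, 160), max_wh=(320, 320), opt_wh=None):
    """Return (min, opt, max) shape tuples for one NCHW network input.

    input_shape uses -1 for dynamic dimensions, e.g. (-1, 3, -1, -1).
    """
    _, channels, height, width = input_shape
    # Keep the optimal batch inside [1, max_batch_size] so min <= opt <= max.
    opt_batch = max(1, min(opt_batch_size, max_batch_size))
    if height == -1 and width == -1:
        # Dynamic height and width: fall back to min_wh when no opt_wh is given.
        opt_wh = tuple(opt_wh) if opt_wh else tuple(min_wh)
        return ((1, channels, *min_wh),
                (opt_batch, channels, *opt_wh),
                (max_batch_size, channels, *max_wh))
    # Static height and width: only the batch dimension varies.
    return ((1, channels, height, width),
            (opt_batch, channels, height, width),
            (max_batch_size, channels, height, width))


shape_min, shape_opt, shape_max = profile_shapes(
    (-1, 3, -1, -1), max_batch_size=32, opt_batch_size=8)
print(shape_min, shape_opt, shape_max)
```

The three tuples are what would be passed as min, opt and max to profile.set_shape for that input.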

import tensorrt as trt

def onnx_2_trt(onnx_filename, engine_filename, mode='fp32', max_batch_size=1, min_wh=(160,160), max_wh=(320,320), int8_calib=None):
    ''' Convert an ONNX model to a TensorRT engine; mode is one of ['fp32', 'fp16', 'int8'].
    :return: trt engine
    '''
    assert mode in ['fp32', 'fp16', 'int8'], "mode should be in ['fp32', 'fp16', 'int8']"
    G_LOGGER = trt.Logger(trt.Logger.WARNING)
    # In TRT7, the network used by the ONNX parser must be created with EXPLICIT_BATCH.
    EXPLICIT_BATCH = 1 << (int)(trt.NetworkDefinitionCreationFlag.EXPLICIT_BATCH)
    with trt.Builder(G_LOGGER) as builder, builder.create_network(EXPLICIT_BATCH) as network, \
            trt.OnnxParser(network, G_LOGGER) as parser:
        print('Loading ONNX file from path %s...'%(onnx_filename))
        with open(onnx_filename, 'rb') as model:
            print('Beginning ONNX file parsing')
            if not parser.parse(model.read()):
                # Print every parser error before giving up.
                for e in range(parser.num_errors):
                    print(parser.get_error(e))
                raise TypeError('Parser parse failed.')
        print('Completed parsing of ONNX file')

        # wujp 2022-03-29: when config is used, the builder settings below are not needed.
        #builder.max_batch_size = max_batch_size
        #builder.max_workspace_size = 1 << 30
        if mode == 'int8':
            assert (builder.platform_has_fast_int8 == True), 'not support int8'
            #builder.int8_mode = True
            #builder.int8_calibrator = calib
        elif mode == 'fp16':
            assert (builder.platform_has_fast_fp16 == True), 'not support fp16'
            #builder.fp16_mode = True

        profile = builder.create_optimization_profile()
        inputs = [network.get_input(i) for i in range(network.num_inputs)]
        # The optimal batch must not exceed the maximum batch (the default max_batch_size is 1).
        opt_batch = min(8, max_batch_size)
        for inp in inputs:
            fbs, shape = inp.shape[0], inp.shape[1:]
            if (shape[1] == -1 and shape[2] == -1): # height and width are both dynamic.
                profile.set_shape(inp.name, min=(1, shape[0], *min_wh), opt=(opt_batch, shape[0], *min_wh), max=(max_batch_size, shape[0], *max_wh))
            else:
                profile.set_shape(inp.name, min=(1, *shape), opt=(opt_batch, *shape), max=(max_batch_size, *shape))

        config = builder.create_builder_config()
        config.max_workspace_size = 1 << 30
        if mode == 'int8':
            config.set_flag(trt.BuilderFlag.INT8)
            config.int8_calibrator = int8_calib
        elif mode == 'fp16':
            config.set_flag(trt.BuilderFlag.FP16)
        config.add_optimization_profile(profile)
        # Without this line TensorRT warns:
        # [TensorRT] WARNING: Calibration Profile is not defined. Runing calibration with Profile 0
        config.set_calibration_profile(profile)

        print('Building an engine from file %s; this may take a while...'%(onnx_filename))
        #engine = builder.build_cuda_engine(network, config)
        engine = builder.build_engine(network, config)
        print('Created engine success! ')

        # Save plan file
        print('Saving TRT engine file to path %s...'%(engine_filename))
        with open(engine_filename, 'wb') as f:
            f.write(engine.serialize())
        print('Engine file has already saved to %s!'%(engine_filename))
        return engine

--------------------------------------------------------------------
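As background for the int8 mode, the sketch below illustrates symmetric INT8 quantization in plain Python: a scale maps float values to integers in [-127, 127] and back. It is a simplified illustration, not what TensorRT runs internally. In particular, taking the plain maximum magnitude as the range is an assumption made here for brevity; an entropy calibrator chooses its clipping threshold from activation histograms instead. All function names are hypothetical.

```python
def int8_scale(calibration_values):
    """Scale that maps the largest observed magnitude to 127 (symmetric range)."""
    amax = max(abs(v) for v in calibration_values)
    return amax / 127.0 if amax > 0 else 1.0


def quantize(x, scale):
    """Round x / scale to the nearest integer and clamp it to [-127, 127]."""
    q = round(x / scale)
    return max(-127, min(127, q))


def dequantize(q, scale):
    """Map an INT8 value back to an approximate float."""
    return q * scale


# Made-up activation values standing in for what calibration would observe.
activations = [-2.54, -0.5, 0.0, 1.0, 2.0]
scale = int8_scale(activations)
quantized = [quantize(v, scale) for v in activations]
restored = [dequantize(q, scale) for q in quantized]
print(scale, quantized, restored)
```

Values outside the calibrated range saturate at plus or minus 127, which is why the calibration images should resemble the data seen at inference time.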

2022-03-27

The following code works with a single batch (batch size 1); it does not work with multiple batches.


Generating the TRT model

1. Code used

https://github.com/rmccorm4/tensorrt-utils.git

2. ONNX model and images

Model: one with a dynamic batch input (assume it is mob_w160_h160.onnx, with input [batchsize, 3, 160, 160]).

Images: a set of images (assume there are 1024). No other description files are required.

Create a subfolder under tensorrt-utils/int8/calibration/ (assume it is called my) and put the model and the images in it.
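As a rough illustration of how such a folder can be turned into calibration batches, the sketch below collects image paths and groups them into fixed-size batches. The function names are hypothetical and this is not the code in tensorrt-utils; dropping a trailing partial batch is an assumption, made because calibrators usually work with one fixed batch size.

```python
import os

IMAGE_EXTENSIONS = (".jpg", ".jpeg", ".png")


def list_calibration_images(directory, max_images=1024):
    """Return up to max_images image paths from directory, sorted for reproducibility."""
    names = sorted(n for n in os.listdir(directory)
                   if n.lower().endswith(IMAGE_EXTENSIONS))
    return [os.path.join(directory, n) for n in names[:max_images]]


def iter_batches(paths, batch_size=32):
    """Yield full batches only; a trailing partial batch is dropped."""
    for start in range(0, len(paths) - batch_size + 1, batch_size):
        yield paths[start:start + batch_size]
```

With 1024 images and a calibration batch size of 32, this yields 32 batches.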

3. Modify the code

Go to the tensorrt-utils/int8/calibration/ directory.

Modify main in onnx_to_tensorrt.py, mainly to change the paths and the number of calibration images.

def main():
    my_dir = os.path.dirname(os.path.realpath(__file__)) + '/my/'
    parser = argparse.ArgumentParser(description="Creates a TensorRT engine from the provided ONNX file.\n")
    parser.add_argument("--onnx", help="The ONNX model file to convert to TensorRT",
                        default=my_dir+'mob_w160_h160.onnx')
    parser.add_argument("-o", "--output", type=str, help="The path at which to write the engine",
                        default=my_dir+'mob_w160_h160.trt')
    parser.add_argument("-b", "--max-batch-size", type=int, default=32, help="The max batch size for the TensorRT engine input")
    parser.add_argument("-v", "--verbosity", action="count", help="Verbosity for logging. (None) for ERROR, (-v) for INFO/WARNING/ERROR, (-vv) for VERBOSE.")
    # If explicit-batch is False, this error occurs:
    # In node -1 (importModel): INVALID_VALUE: Assertion failed: !_importer_ctx.network()->hasImplicitBatchDimension() &&
    # "This version of the ONNX parser only supports TensorRT INetworkDefinitions with an explicit batch dimension. Please ensure the network was created using the EXPLICIT_BATCH NetworkDefinitionCreationFlag."
    parser.add_argument("--explicit-batch", action='store_true', help="Set trt.NetworkDefinitionCreationFlag.EXPLICIT_BATCH.",
                        default=True)
    # If explicit-precision is True, this error occurs:
    # "python: ../builder/cudnnBuilderWeightConverters.cpp:162: std::vector<float> nvinfer1::cudnn::makeConvDeconvInt8Weights(nvinfer1::ConvolutionParameters&,
    # const nvinfer1::rt::EngineTensor&, const nvinfer1::rt::EngineTensor&, float, bool, bool): Assertion `sI.count() == 1' failed."
    parser.add_argument("--explicit-precision", action='store_true', help="Set trt.NetworkDefinitionCreationFlag.EXPLICIT_PRECISION.",
                        default=False)
    parser.add_argument("--gpu-fallback", action='store_true', help="Set trt.BuilderFlag.GPU_FALLBACK.")
    parser.add_argument("--refittable", action='store_true', help="Set trt.BuilderFlag.REFIT.")
    parser.add_argument("--debug", action='store_true', help="Set trt.BuilderFlag.DEBUG.")
    parser.add_argument("--strict-types", action='store_true', help="Set trt.BuilderFlag.STRICT_TYPES.")
    parser.add_argument("--fp16", action="store_true", help="Attempt to use FP16 kernels when possible.")
    parser.add_argument("--int8", action="store_true", help="Attempt to use INT8 kernels when possible. This should generally be used in addition to the --fp16 flag. \
                                                             ONLY SUPPORTS RESNET-LIKE MODELS SUCH AS RESNET50/VGG16/INCEPTION/etc.",
                        default = True)
    parser.add_argument("--calibration-cache", help="(INT8 ONLY) The path to read/write from calibration cache.",
                        default=my_dir+"calibration.cache")
    parser.add_argument("--calibration-data", help="(INT8 ONLY) The directory containing {*.jpg, *.jpeg, *.png} files to use for calibration. (ex: Imagenet Validation Set)",
                        default=my_dir+'images/')
    parser.add_argument("--calibration-batch-size", help="(INT8 ONLY) The batch size to use during calibration.", type=int, default=32)
    parser.add_argument("--max-calibration-size", help="(INT8 ONLY) The max number of data to calibrate on from --calibration-data.", type=int,
                        default=1024)
    parser.add_argument("-p", "--preprocess_func", type=str, default=None, help="(INT8 ONLY) Function defined in 'processing.py' to use for pre-processing calibration data.")
    parser.add_argument("-s", "--simple", action="store_true", help="Use SimpleCalibrator with random data instead of ImagenetCalibrator for INT8 calibration.")
    args, _ = parser.parse_known_args()
    # The code after this point does not need to be changed.

Modify preprocess_imagenet in processing.py: change the width and height to 160, and use your own mean and standard deviation.

#def preprocess_imagenet(image, channels=3, height=224, width=224):
def preprocess_imagenet(image, channels=3, height=160, width=160):
    """Pre-processing for Imagenet-based Image Classification Models:
        resnet50, vgg16, mobilenet, etc. (Doesn't seem to work for Inception)

    Parameters
    ----------
    image: PIL.Image
        The image resulting from PIL.Image.open(filename) to preprocess
    channels: int
        The number of channels the image has (Usually 1 or 3)
    height: int
        The desired height of the image (usually 224 for Imagenet data)
    width: int
        The desired width of the image (usually 224 for Imagenet data)

    Returns
    -------
    img_data: numpy array
        The preprocessed image data in the form of a numpy array
    """
    # Get the image in CHW format
    # Note: Image.ANTIALIAS was removed in Pillow 10; Image.LANCZOS is the equivalent there.
    resized_image = image.resize((width, height), Image.ANTIALIAS)
    img_data = np.asarray(resized_image).astype(np.float32)

    if len(img_data.shape) == 2:
        # For images without a channel dimension, we stack
        img_data = np.stack([img_data] * 3)
        logger.debug("Received grayscale image. Reshaped to {:}".format(img_data.shape))
    else:
        img_data = img_data.transpose([2, 0, 1])

    #mean_vec = np.array([0.485, 0.456, 0.406])
    #stddev_vec = np.array([0.229, 0.224, 0.225])
    my_mean = np.array([104, 117, 123])
    assert img_data.shape[0] == channels

    for i in range(img_data.shape[0]):
        # Original: scale each pixel to [0, 1] and normalize per channel.
        #img_data[i, :, :] = (img_data[i, :, :] / 255 - mean_vec[i]) / stddev_vec[i]
        # Modified: only subtract the per-channel mean.
        img_data[i, :, :] = img_data[i, :, :] - my_mean[i]

    return img_data

4. Result

The mob_w160_h160.trt file generated in my/ can be used for inference; calibration.cache cannot, because it is not an engine.
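The calibration cache exists so that a later build can skip calibration. TensorRT calibrators expose read_calibration_cache and write_calibration_cache methods for this; the sketch below shows file handling such methods could delegate to. The class name CalibrationCacheFile is hypothetical and this is not the tensorrt-utils implementation.

```python
import os


class CalibrationCacheFile:
    """Hypothetical helper with the file logic a calibrator could delegate to."""

    def __init__(self, path):
        self.path = path

    def read(self):
        # Returning None signals "no cache", so calibration has to run on the images.
        if not os.path.exists(self.path):
            return None
        with open(self.path, "rb") as f:
            return f.read()

    def write(self, cache):
        # Persist the calibration data so the next build can reuse it.
        with open(self.path, "wb") as f:
            f.write(cache)
```

If the model or the preprocessing changes, deleting the cache file forces calibration to run again.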

Why is the trt file much larger than the onnx file? Because of the dynamic batch: onnx_to_tensorrt.py uses five batch sizes, [1, 8, 16, 32, 64], to generate optimization profiles.

Inference with the TRT model

Go to the tensorrt-utils/inference/ directory.

Modify main in infer.py, mainly to change the path and the model input.

def main():
    import os
    my_dir = os.path.dirname(os.path.realpath(__file__)) + '/../int8/calibration/my/'
    parser = argparse.ArgumentParser()
    #parser.add_argument("-e", "--engine", required=True, type=str,
    #                    help="Path to TensorRT engine file.")
    parser.add_argument("-e", "--engine", type=str, help="Path to TensorRT engine file.",
                        default = my_dir+'mob_w160_h160.trt')
    parser.add_argument("-s", "--seed", type=int, default=42,
                        help="Random seed for reproducibility.")
    args = parser.parse_args()

    ...

    # Generate random inputs based on profile shapes
    #host_inputs = get_random_inputs(engine, context, input_binding_idxs, seed=args.seed)
    # Replace host_inputs with the real input (single-batch example, reading the data from test.jpg).
    # host_inputs is a list with one element per model input; each element is an np.array of shape [N,3,160,160].
    # my_mean is the per-channel mean used in preprocessing, e.g. np.array([104, 117, 123]).
    batch_img = []
    img = cv2.imread('test.jpg')
    img = np.float32(img)
    img = cv2.resize(img, (160,160))
    img -= my_mean
    img = img.transpose(2, 0, 1)
    batch_img.append(img)
    host_inputs = [np.array(batch_img)]

    ...
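For more than one image, the same preprocessing can be applied to a whole batch at once. The following is a minimal NumPy sketch, assuming all images already share the same height and width; make_batch is a hypothetical helper, the synthetic images stand in for resized photos, and the mean values are placeholders. Whether the engine accepts N > 1 depends on the optimization profile it was built with.

```python
import numpy as np


def make_batch(images_hwc, mean):
    """Stack same-sized HWC images into one contiguous float32 NCHW array."""
    batch = np.stack([np.asarray(img, dtype=np.float32) for img in images_hwc])  # N,H,W,C
    batch -= np.asarray(mean, dtype=np.float32)  # broadcasts over the channel axis
    batch = batch.transpose(0, 3, 1, 2)          # N,C,H,W
    # Copying to a device buffer expects contiguous memory; transpose only returns a view.
    return np.ascontiguousarray(batch)


# Two synthetic 160x160 images stand in for photos that were already resized.
images = [np.full((160, 160, 3), 128, dtype=np.uint8) for _ in range(2)]
host_inputs = [make_batch(images, mean=(104, 117, 123))]
print(host_inputs[0].shape, host_inputs[0].dtype)
```

The resulting list has one element per model input, matching the layout of host_inputs described in the comments above.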

Note that no non-TensorRT CUDA operations should run between create_execution_context and execute_v2 (for example, initializing an onnxruntime session whose EP is CUDA). Otherwise, execution fails with inexplicable errors such as the following.

With synchronous execute_v2 (set context.debug_sync = True to see the error):

[TensorRT] ERROR: safeContext.cpp (184) - Cudnn Error in configure: 7 (CUDNN_STATUS_MAPPING_ERROR)

[TensorRT] ERROR: FAILED_EXECUTION: std::exception

With asynchronous execute_async (for complete asynchronous inference code, see https://github.com/RizhaoCai/PyTorch_ONNX_TensorRT):

[TensorRT] ERROR: ../rtSafe/cuda/reformat.cu (740) - Cuda Error in NCHWToNCQHW4: 400 (invalid resource handle)

[TensorRT] ERROR: FAILED_EXECUTION: std::exception

Copyright notice

This article was written by [wujpbb7]. Please include a link to the original when reposting. Thank you.

https://yzsam.com/2022/04/202204230611550065.html

边栏推荐

- pth 转 onnx 时出现的 gather、unsqueeze 等算子

- Infrared sensor control switch

- 项目文件“ ”已被重命名或已不在解决方案中、未能找到与解决方案关联的源代码管理提供程序——两个工程问题

- Pep517 error during pycuda installation

- 【点云系列】 A Rotation-Invariant Framework for Deep Point Cloud Analysis

- Gephi tutorial [1] installation

- [3D shape reconstruction series] implicit functions in feature space for 3D shape reconstruction and completion

- EMMC/SD学习小记

- 初探智能指针之std::shared_ptr、std::unique_ptr

- 安装 pycuda 出现 PEP517 的错误

猜你喜欢

【点云系列】点云隐式表达相关论文概要

机器视觉系列(01)---综述

【点云系列】DeepMapping: Unsupervised Map Estimation From Multiple Point Clouds

GIS实战应用案例100篇(五十三)-制作三维影像图用以作为城市空间格局分析的底图

Chapter 2 pytoch foundation 2

第1章 NumPy基础

SPI NAND FLASH小结

AUTOSAR从入门到精通100讲(五十一)-AUTOSAR网络管理

ArcGIS license server administrator cannot start the workaround

pth 转 onnx 时出现的 gather、unsqueeze 等算子

随机推荐

Systrace parsing

AMBA协议学习小记

Gobang games

【期刊会议系列】IEEE系列模板下载指南

Paddleocr image text extraction

[3D shape reconstruction series] implicit functions in feature space for 3D shape reconstruction and completion

GIS实战应用案例100篇(五十二)-ArcGIS中用栅格裁剪栅格,如何保持行列数量一致并且对齐?

美摄科技受邀LVSon2020大会 分享《AI合成虚拟人物的技术框架与挑战》

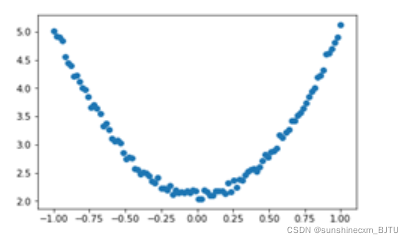

画 ArcFace 中的 margin 曲线

scons 搭建嵌入式arm编译

Mysql database installation and configuration details

【点云系列】PnP-3D: A Plug-and-Play for 3D Point Clouds

Write a wechat double open gadget to your girlfriend

【点云系列】FoldingNet:Point Cloud Auto encoder via Deep Grid Deformation

[point cloud series] pnp-3d: a plug and play for 3D point clouds

Compression and acceleration technology of deep learning model (I): parameter pruning

Machine learning II: logistic regression classification based on Iris data set

Visual studio 2019 installation and use

以智能生产引领行业风潮!美摄智能视频生产平台亮相2021世界超高清视频产业发展大会

【3D形状重建系列】Implicit Functions in Feature Space for 3D Shape Reconstruction and Completion