
Classification of the CIFAR100 dataset with a convolutional neural network

2022-04-23 17:53:00 【Stephen_ Tao】


Introduction to the CIFAR100 dataset

The CIFAR100 dataset has 100 classes. Each class contains 600 images, split into 500 training images and 100 test images. The 100 classes are grouped into 20 superclasses, and each image carries a "fine" label (the class it belongs to) and a "coarse" label (the superclass it belongs to).
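
The split sizes follow directly from the per-class counts. As a quick sanity check, the sketch below derives them with plain arithmetic. The constant names are illustrative and not part of any library. In Keras, `cifar100.load_data(label_mode='coarse')` returns the superclass labels instead of the default fine labels.

```python
# Dataset layout as described above (constant names are illustrative).
NUM_CLASSES = 100        # fine-grained classes
NUM_SUPERCLASSES = 20    # coarse classes
TRAIN_PER_CLASS = 500
TEST_PER_CLASS = 100

train_size = NUM_CLASSES * TRAIN_PER_CLASS
test_size = NUM_CLASSES * TEST_PER_CLASS
classes_per_superclass = NUM_CLASSES // NUM_SUPERCLASSES

print(train_size, test_size, classes_per_superclass)  # 50000 10000 5
```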

Code implementation

Reading the dataset

# Import the dataset loader
from tensorflow.keras.datasets import cifar100

class CNNMnist(object):
    def __init__(self):
        # Load the training and test splits (uint8 image arrays of shape (N, 32, 32, 3))
        (self.train, self.train_label), (self.test, self.test_label) = cifar100.load_data()
        # Scale pixel values from [0, 255] to [0, 1]
        self.train = self.train.reshape(-1, 32, 32, 3) / 255.0
        self.test = self.test.reshape(-1, 32, 32, 3) / 255.0

Building the network model

- Convolutional layer: 32 kernels of size 5×5, stride 1, ReLU activation

- Pooling layer: pool size 2, stride 2

- Convolutional layer: 64 kernels of size 5×5, stride 1, ReLU activation

- Pooling layer: pool size 2, stride 2

- Fully connected layer: 1024 neurons, ReLU activation

- Fully connected layer: 100 neurons, softmax activation
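
To see what this stack implies in terms of tensor shapes and trainable parameters, the following sketch walks through the layers with plain arithmetic. It assumes 'same' padding for both the convolutions and the pooling (as in the Keras code in this article) and a 32×32 RGB input. The helper names are hypothetical and written only for this illustration.

```python
def conv_params(kernel, in_ch, out_ch):
    """Weights of a kernel x kernel convolution plus one bias per output channel."""
    return kernel * kernel * in_ch * out_ch + out_ch

def dense_params(n_in, n_out):
    """Weights of a fully connected layer plus one bias per output unit."""
    return n_in * n_out + n_out

def pooled_size(size, stride=2):
    """Spatial size after 'same'-padded pooling: ceil(size / stride)."""
    return -(-size // stride)

size, channels = 32, 3          # CIFAR100 input: 32x32 RGB
param_counts = []

# Conv 1: stride 1 with 'same' padding keeps the 32x32 spatial size
param_counts.append(("conv1", conv_params(5, channels, 32)))
channels = 32
size = pooled_size(size)        # 32 -> 16

# Conv 2: again keeps the spatial size, then pooling halves it
param_counts.append(("conv2", conv_params(5, channels, 64)))
channels = 64
size = pooled_size(size)        # 16 -> 8

flat = size * size * channels   # 8 * 8 * 64 = 4096 features after Flatten
param_counts.append(("dense1", dense_params(flat, 1024)))
param_counts.append(("dense2", dense_params(1024, 100)))

for name, count in param_counts:
    print(f"{name}: {count:,}")
total_params = sum(count for _, count in param_counts)
print(f"total: {total_params:,}")  # total: 4,351,524
```

Almost all of the parameters sit in the first fully connected layer, which is typical when a large flattened feature map feeds a wide dense layer.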

# Import the necessary packages (public Keras API)
import tensorflow as tf
from tensorflow.keras import layers, losses, optimizers
from tensorflow.keras.models import Sequential

class CNNMnist(object):
    # The model is a class attribute shared by the methods of CNNMnist
    model = Sequential([
        layers.Conv2D(32, kernel_size=5, strides=1, padding='same', data_format='channels_last', activation=tf.nn.relu),
        layers.MaxPool2D(pool_size=2, strides=2, padding='same'),
        layers.Conv2D(64, kernel_size=5, strides=1, padding='same', data_format='channels_last', activation=tf.nn.relu),
        layers.MaxPool2D(pool_size=2, strides=2, padding='same'),
        layers.Flatten(),
        layers.Dense(1024, activation=tf.nn.relu),
        layers.Dense(100, activation=tf.nn.softmax)
    ])

Compiling the model

class CNNMnist(object):
    # Method of the CNNMnist class introduced above
    def compile(self):
        # Adam with default settings; the sparse loss is used because labels are integer class indices
        CNNMnist.model.compile(optimizer=optimizers.Adam(),
                               loss=losses.sparse_categorical_crossentropy,
                               metrics=['accuracy'])
        return None
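
For intuition about the loss chosen here, the sketch below computes sparse categorical cross-entropy for a single sample by hand: it is the negative log of the probability the model assigns to the true class, and the reported loss is the mean of this value over samples. The function `sparse_ce` is a hypothetical helper for illustration, not the Keras implementation. A model that guesses uniformly over 100 classes scores ln(100), which gives a baseline for reading the loss values in the training log.

```python
import math

def sparse_ce(probs, label):
    """Loss for one sample: negative log of the probability given to the true class."""
    return -math.log(probs[label])

num_classes = 100

# Uniform guess over all classes: the chance-level baseline
uniform = [1.0 / num_classes] * num_classes
chance_loss = sparse_ce(uniform, label=7)
print(round(chance_loss, 4))  # 4.6052

# A confident, correct prediction yields a much smaller loss
confident = [0.001] * num_classes
confident[7] = 1.0 - 0.001 * (num_classes - 1)
confident_loss = sparse_ce(confident, label=7)
print(confident_loss < chance_loss)  # True
```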

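Model training

class CNNMnist(object):
    # Method of the CNNMnist class introduced above
    def fit(self):
        # Train for a single epoch with mini-batches of 32 images
        CNNMnist.model.fit(self.train, self.train_label, epochs=1, batch_size=32)
        return None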
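
The batch size determines how many steps Keras reports per epoch. A minimal sketch of that relationship, using the dataset sizes from the introduction (the helper name is illustrative):

```python
import math

def steps_per_epoch(num_samples, batch_size):
    """Number of batches needed to cover the data once (the last batch may be partial)."""
    return math.ceil(num_samples / batch_size)

train_steps = steps_per_epoch(50_000, 32)
test_steps = steps_per_epoch(10_000, 32)
print(train_steps)  # 1563
print(test_steps)   # 313
```

These values match the `1563/1563` and `313/313` progress counters in the training and evaluation logs, since `evaluate` also defaults to a batch size of 32.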

Model evaluation

class CNNMnist(object):
    # Method of the CNNMnist class introduced above
    def evaluate(self):
        # evaluate() returns the loss followed by each compiled metric (here: accuracy)
        train_loss, train_acc = CNNMnist.model.evaluate(self.train, self.train_label)
        test_loss, test_acc = CNNMnist.model.evaluate(self.test, self.test_label)
        print("train_loss:", train_loss)
        print("train_acc:", train_acc)
        print("test_loss:", test_loss)
        print("test_acc:", test_acc)
        return None
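
The accuracy metric reported here is the fraction of images whose highest-probability class matches the label. The following sketch reproduces that calculation on a toy three-class example. The function and data are made up for illustration and do not come from the article's model.

```python
def accuracy(prob_rows, labels):
    """Fraction of samples whose highest-probability class equals the label."""
    correct = 0
    for probs, label in zip(prob_rows, labels):
        predicted = max(range(len(probs)), key=probs.__getitem__)  # argmax
        if predicted == label:
            correct += 1
    return correct / len(labels)

# Toy softmax outputs for four samples and three classes
toy_probs = [
    [0.7, 0.2, 0.1],
    [0.1, 0.3, 0.6],
    [0.5, 0.4, 0.1],
    [0.2, 0.2, 0.6],
]
toy_labels = [0, 2, 1, 2]

toy_accuracy = accuracy(toy_probs, toy_labels)
print(toy_accuracy)  # 0.75
```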

Running the model

if __name__ == '__main__':
    cnn = CNNMnist()
    cnn.compile()
    cnn.fit()
    cnn.evaluate()

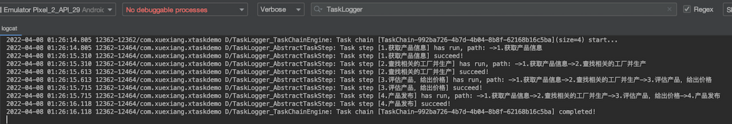

Results of the run

1563/1563 [==============================] - 199s 126ms/step - loss: 3.5098 - accuracy: 0.1748

1563/1563 [==============================] - 56s 35ms/step - loss: 2.8101 - accuracy: 0.3094

313/313 [==============================] - 11s 33ms/step - loss: 2.9732 - accuracy: 0.2672

train_loss: 2.81014084815979

train_acc: 0.3094399869441986

test_loss: 2.9731905460357666

test_acc: 0.2671999931335449

As the results show, the accuracy is still fairly low. One reason is that the loss of a convolutional neural network does not fall as quickly as that of a fully connected network; another is that the code above trains for only one epoch. Compared with fully connected networks, however, convolutional neural networks have fewer trainable parameters, which lowers the demands on computing power and hardware. This is why they are widely used in pattern recognition and object detection.
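
To put the parameter saving in concrete terms, the sketch below compares the first convolutional layer of this model with a hypothetical fully connected layer that maps the same 32×32×3 input to an output of the same size (32×32×32 values). The dense layer is an illustrative construction only and is not part of the article's model.

```python
height = width = 32
in_channels, out_channels, kernel = 3, 32, 5

# Convolution: one small kernel per output channel, shared across all positions
conv_count = kernel * kernel * in_channels * out_channels + out_channels

# Fully connected: every input value connects to every output value
n_in = height * width * in_channels
n_out = height * width * out_channels
dense_count = n_in * n_out + n_out

print(f"conv:  {conv_count:,}")   # conv:  2,432
print(f"dense: {dense_count:,}")  # dense: 100,696,064
```

Weight sharing across spatial positions is what keeps the convolutional layer several orders of magnitude smaller.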

Summary

This article focuses on how to build a convolutional neural network model; no tuning or further improvement of the model has been attempted.

Note: the code in this article comes from the Dark Horse Programmer course.

Copyright notice

This article was written by [Stephen_ Tao]. Please include a link to the original when reposting. Thank you.

https://yzsam.com/2022/04/202204230548468570.html
