
Machine learning III: classification prediction based on logistic regression

2022-04-23 07:19:00 【Amyniez】

1 Introduction to and applications of logistic regression

1.1 Introduction to logistic regression

Logistic regression (LR) has "regression" in its name, but it is actually a classification model, and it is widely used across many fields. Although deep learning is now more popular than such traditional methods, these methods remain in wide use because of their distinct advantages.

For logistic regression, the two most prominent advantages are that the model is simple and that it is highly interpretable.

Advantages and disadvantages of the logistic regression model:

- Advantages: simple to understand and implement; computationally cheap, fast, and light on storage;

- Disadvantages: prone to underfitting, so classification accuracy may not be high.

1.2 Applications of logistic regression

Logistic regression is widely used in many fields, including machine learning, most medical fields, and the social sciences. For example, the Trauma and Injury Severity Score (TRISS), which is widely used to predict mortality in injured patients, was originally developed by Boyd et al. using logistic regression. Logistic regression is also used to predict the risk of developing specific diseases (for example diabetes or coronary heart disease (CHD)) from observed patient characteristics (age, sex, body mass index, results of various blood tests, and so on). It is also used to predict the probability that a given process, system, or product will fail. In marketing, it is used to predict, for example, a customer's propensity to buy a product or to cancel a subscription. In economics, it can be used to predict the likelihood that a person chooses to enter the labor market, and in business it can be used to predict the likelihood that a homeowner defaults on a mortgage. Conditional random fields, an extension of logistic regression to sequential data, are used in natural language processing.

Logistic regression is also a building block of many classification pipelines, for example credit card transaction fraud detection implemented with GBDT + LR, and CTR (click-through rate) estimation. Its advantage is that the output naturally falls between 0 and 1 and has a probabilistic interpretation. The model is clear and rests on a corresponding probabilistic foundation. The fitted parameters represent the influence of each feature on the result, which also makes logistic regression a good tool for understanding data. At the same time, because it is essentially a linear classifier, it cannot handle more complex data. In practice, logistic regression is also often used as a baseline model.
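To make the "output between 0 and 1" property concrete, here is a minimal sketch of the logistic (sigmoid) function applied to a linear score. The helper names `sigmoid` and `predict_proba_1` and the weights `w_demo`, `b_demo` are hypothetical and chosen only for illustration; they are not taken from the article or from scikit-learn.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic function: maps a real-valued score into the interval (0, 1)."""
    # Branch on the sign of z so that exp() never receives a large positive argument.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def predict_proba_1(x, w, b):
    """Return P(y=1 | x) for the linear score w . x + b."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return sigmoid(z)


# Hypothetical weights and bias, for illustration only (not fitted values).
w_demo, b_demo = [1.0, 1.0], 0.0

for point in ([-3, -2], [0, 0], [3, 2]):
    print(point, round(predict_proba_1(point, w_demo, b_demo), 4))
```

Because the score is linear in the features, the set of points where the probability equals 0.5 is a straight line (a hyperplane in higher dimensions), which is why the model is called a linear classifier.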

4.1 Demo

Step1: Import libraries

- Note: Seaborn is a higher-level API built on top of matplotlib, so plotting is easier, and in most cases seaborn produces attractive figures with little effort. For example:

- 1. set_style() sets the plot theme;

- 2. distplot() is an enhanced histogram (deprecated in recent seaborn releases in favor of histplot() and displot()), and kdeplot() draws a density curve;

- 3. Box plot, boxplot(): its main advantage is that its quartile-based summary is robust to outliers, so it describes the spread of the data in a relatively stable way;

- 4. Joint distribution plot, jointplot();

- 5. Heat map, heatmap().

# Numerical computing library

import numpy as np

# Plotting libraries

import matplotlib.pyplot as plt

import seaborn as sns

# Logistic regression model

from sklearn.linear_model import LogisticRegression

Step2: Model training

# Construct the data set

x_fearures = np.array([[-1, -2], [-2, -1], [-3, -2], [1, 3], [2, 1], [3, 2]])

y_label = np.array([0, 0, 0, 1, 1, 1])

# Instantiate the logistic regression model

lr_clf = LogisticRegression()

# Fit the model to the constructed data set.

# The linear part is z = w0 + w1*x1 + w2*x2, where w1 and w2 are the weights and w0 is the bias (intercept);

# the predicted probability is P(y=1|x) = 1 / (1 + exp(-z)).

lr_clf = lr_clf.fit(x_fearures, y_label)

Step3: View the model parameters

# View the fitted weights w

# coef_: the "slope" parameters (w, also called weights)

print('the weight of Logistic Regression:',lr_clf.coef_)

# View the fitted intercept w0

# intercept_: the bias or intercept (w0, often written b)

print('the intercept(w0) of Logistic Regression:',lr_clf.intercept_)

Result:

the weight of Logistic Regression: [[0.73462087 0.6947908 ]]

the intercept(w0) of Logistic Regression: [-0.03643213]
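One way to read these parameters: each weight is the change in the log-odds of class 1 per unit increase in the corresponding feature, so its exponential is the multiplicative change in the odds. The sketch below uses only the standard library and the numbers printed above; the variable names are hypothetical.

```python
import math

# Parameters copied from the printed result above.
w1, w2 = 0.73462087, 0.6947908
w0 = -0.03643213

# exp(weight) is the factor by which the odds P(y=1)/P(y=0) are multiplied
# when that feature increases by one unit and the other feature is held fixed.
odds_ratio_x1 = math.exp(w1)
odds_ratio_x2 = math.exp(w2)

# At the origin (x1 = x2 = 0) the score is just w0, so the probability
# of class 1 there is sigmoid(w0).
p_at_origin = 1.0 / (1.0 + math.exp(-w0))

print('odds ratio for x1:', round(odds_ratio_x1, 4))
print('odds ratio for x2:', round(odds_ratio_x2, 4))
print('P(y=1) at the origin:', round(p_at_origin, 4))
```

Both weights are positive and of similar size, so increasing either feature pushes a point toward class 1 to roughly the same degree, and the near-zero intercept places the origin close to the decision boundary.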

Step4: Data and model visualization

# Visualize the constructed sample points

plt.figure()

# Scatter plot of the samples, colored by label

plt.scatter(x_fearures[:,0],x_fearures[:,1], c=y_label, s=50, cmap='viridis')

plt.title('Dataset')

plt.show()

# Visualize the decision boundary

plt.figure()

plt.scatter(x_fearures[:,0],x_fearures[:,1], c=y_label, s=50, cmap='viridis')

plt.title('Dataset')

nx, ny = 200, 100

# Use the current axis limits (minimum and maximum) as the extent of the evaluation grid

x_min, x_max = plt.xlim()

y_min, y_max = plt.ylim()

x_grid, y_grid = np.meshgrid(np.linspace(x_min, x_max, nx),np.linspace(y_min, y_max, ny))

z_proba = lr_clf.predict_proba(np.c_[x_grid.ravel(), y_grid.ravel()])

z_proba = z_proba[:, 1].reshape(x_grid.shape)

plt.contour(x_grid, y_grid, z_proba, [0.5], linewidths=2., colors='blue')

plt.show()
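The 0.5 contour drawn above can also be obtained in closed form: the predicted probability equals 0.5 exactly where the linear score w0 + w1*x1 + w2*x2 is zero, which gives the line x2 = -(w0 + w1*x1) / w2. The following is a minimal sketch using the parameter values printed in Step3; the helper names `score` and `boundary_x2` are hypothetical.

```python
# Parameters copied from the printed result in Step3.
w0, w1, w2 = -0.03643213, 0.73462087, 0.6947908


def score(x1: float, x2: float) -> float:
    """Linear score z = w0 + w1*x1 + w2*x2; the sign of z decides the class."""
    return w0 + w1 * x1 + w2 * x2


def boundary_x2(x1: float) -> float:
    """x2 coordinate of the decision boundary (score == 0) at a given x1."""
    return -(w0 + w1 * x1) / w2


# A few points on the boundary line
for x1 in (-3.0, 0.0, 3.0):
    print(x1, round(boundary_x2(x1), 4))

# Training samples fall on opposite sides of the line
print(score(-1, -2) < 0, score(1, 3) > 0)
```

Plotting `boundary_x2` over the x-axis range should coincide with the contour, up to the resolution of the grid.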

Visualize predictions for new samples:

plt.figure()

# New point 1

x_fearures_new1 = np.array([[0, -1]])

plt.scatter(x_fearures_new1[:,0],x_fearures_new1[:,1], s=50)

# Annotate the point with text:

# xy is the point being annotated and xytext is the position of the label;

# the arrow is added by passing *arrowprops* (arrow properties).

# The label is passed positionally because the keyword `s` was renamed to `text` in newer matplotlib versions.

plt.annotate('New point 1',xy=(0,-1),xytext=(-2,0),color='blue',arrowprops=dict(arrowstyle='-|>',connectionstyle='arc3',color='red'))

# New point 2

x_fearures_new2 = np.array([[1, 2]])

plt.scatter(x_fearures_new2[:,0],x_fearures_new2[:,1], s=50)

plt.annotate('New point 2',xy=(1,2),xytext=(-1.5,2.5),color='red',arrowprops=dict(arrowstyle='-|>',connectionstyle='arc3',color='red'))

# Training samples

plt.scatter(x_fearures[:,0],x_fearures[:,1], c=y_label, s=50, cmap='viridis')

plt.title('Dataset')

# Visualize the decision boundary

plt.contour(x_grid, y_grid, z_proba, [0.5], linewidths=2., colors='blue')

plt.show()

Step5: Model prediction

# Use the trained model to predict the class of each new point

y_label_new1_predict = lr_clf.predict(x_fearures_new1)

y_label_new2_predict = lr_clf.predict(x_fearures_new2)

print('The New point 1 predict class:\n',y_label_new1_predict)

print('The New point 2 predict class:\n',y_label_new2_predict)

# Logistic regression is a probabilistic model (it estimates p = p(y=1|x,\theta)), so predict_proba can be used to obtain the probability of each class

y_label_new1_predict_proba = lr_clf.predict_proba(x_fearures_new1)

y_label_new2_predict_proba = lr_clf.predict_proba(x_fearures_new2)

print('The New point 1 predict Probability of each class:\n',y_label_new1_predict_proba)

print('The New point 2 predict Probability of each class:\n',y_label_new2_predict_proba)

The New point 1 predict class:

[0]

The New point 2 predict class:

[1]

The New point 1 predict Probability of each class:

[[0.67507358 0.32492642]]

The New point 2 predict Probability of each class:

[[0.11029117 0.88970883]]

The trained logistic regression model predicts class 0 for X_new1 (below and to the left of the decision boundary) and class 1 for X_new2 (above and to the right of the decision boundary). The decision boundary, where the predicted probability equals 0.5, is the blue line in the figure above.
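As a sanity check, the probabilities reported above can be reproduced by hand from the printed weights and intercept, without scikit-learn. This is a sketch under the assumption that the printed, rounded parameter values are used, so agreement holds only up to rounding; the helper name `proba_class1` is hypothetical.

```python
import math

# Parameters copied from the printed result in Step3.
w0, w1, w2 = -0.03643213, 0.73462087, 0.6947908


def proba_class1(x1: float, x2: float) -> float:
    """P(y=1 | x) = sigmoid(w0 + w1*x1 + w2*x2)."""
    z = w0 + w1 * x1 + w2 * x2
    return 1.0 / (1.0 + math.exp(-z))


p_new1 = proba_class1(0, -1)  # New point 1
p_new2 = proba_class1(1, 2)   # New point 2

# The predicted class is 1 when P(y=1 | x) exceeds 0.5, otherwise 0.
class_new1 = int(p_new1 > 0.5)
class_new2 = int(p_new2 > 0.5)

print('New point 1: [P(y=0), P(y=1)] =', [round(1 - p_new1, 4), round(p_new1, 4)], 'class', class_new1)
print('New point 2: [P(y=0), P(y=1)] =', [round(1 - p_new2, 4), round(p_new2, 4)], 'class', class_new2)
```

The two columns returned by predict_proba are P(y=0) and P(y=1), which is why each row of the output sums to 1.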

Copyright notice

This article was written by [Amyniez]. Please include a link to the original when reposting. Thank you.

https://yzsam.com/2022/04/202204230610096384.html

边栏推荐

- Five methods are used to obtain the parameters and calculation of torch network model

- 电脑关机程序

- WebView displays a blank due to a certificate problem

- Summary of image classification white box anti attack technology

- 【動態規劃】不同路徑2

- 【2021年新书推荐】Professional Azure SQL Managed Database Administration

- MySQL笔记5_操作数据

- 【 planification dynamique】 différentes voies 2

- Android interview Online Economic encyclopedia [constantly updating...]

- 最简单完整的libwebsockets的例子

猜你喜欢

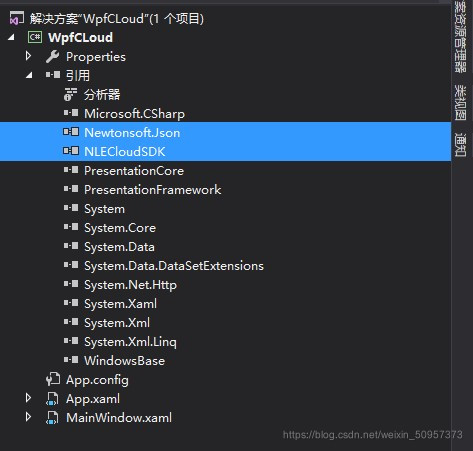

C connection of new world Internet of things cloud platform (simple understanding version)

MySQL数据库安装与配置详解

C# EF mysql更新datetime字段报错Modifying a column with the ‘Identity‘ pattern is not supported

Itop4412 HDMI display (4.4.4_r1)

C#新大陆物联网云平台的连接(简易理解版)

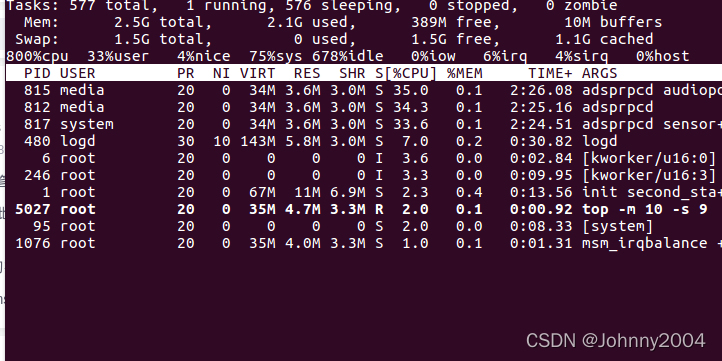

adb shell top 命令详解

基于BottomNavigationView实现底部导航栏

winform滚动条美化

第8章 生成式深度学习

Easyui combobox 判断输入项是否存在于下拉列表中

随机推荐

第3章 Pytorch神经网络工具箱

torch.mm() torch.sparse.mm() torch.bmm() torch.mul() torch.matmul()的区别

adb shell常用模拟按键keycode

[recommendation of new books in 2021] enterprise application development with C 9 and NET 5

Handler进阶之sendMessage原理探索

Tiny4412 HDMI display

素数求解的n种境界

Summary of image classification white box anti attack technology

PaddleOCR 图片文字提取

【2021年新书推荐】Practical IoT Hacking

ffmpeg常用命令

C#新大陆物联网云平台的连接(简易理解版)

常用UI控件简写名

项目,怎么打包

iTOP4412 SurfaceFlinger(4.0.3_r1)

WebView displays a blank due to a certificate problem

Android interview Online Economic encyclopedia [constantly updating...]

PyTorch 模型剪枝实例教程三、多参数与全局剪枝

图像分类白盒对抗攻击技术总结

微信小程序 使用wxml2canvas插件生成图片部分问题记录