[Letter from Andrew Ng] Progress in Reinforcement Learning!

2022-08-10 14:34:00 【正仪】

I. Translation

Dear friends,

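While preparing Course 3 of the Machine Learning Specialization, which covers reinforcement learning, I found myself thinking about why reinforcement learning algorithms remain so finicky to use. They are highly sensitive to the choice of hyperparameters, and someone with hyperparameter tuning experience may get 10x or 100x better performance. Ten years ago, supervised deep learning was just as finicky, but it has gradually become more robust as research on systematic ways of building supervised models has advanced.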

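Will reinforcement learning algorithms also become more robust over the next ten years? I hope so. However, reinforcement learning faces a distinctive obstacle: real-world (non-simulated) benchmarks are hard to establish.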

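When supervised deep learning was in its early stages, people experienced at tuning hyperparameters could get much better results than those with less experience. We had to choose the neural network architecture, regularization method, learning rate, learning rate decay schedule, mini-batch size, momentum, random weight initialization method, and more. Choosing well had a huge effect on the algorithm's convergence speed and final performance.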

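Thanks to the research progress of the past ten years, we now have more robust optimization algorithms such as Adam, better neural network architectures, and more systematic guidance on default choices for many other hyperparameters, all of which make good results easier to obtain. I suspect that scaling up neural networks has also made them more robust. These days I do not hesitate to train a network with more than 20 million parameters (such as ResNet-50) even when I have only 100 training examples. By contrast, when training a 1,000-parameter network on 100 examples, every parameter matters more, so tuning has to be done more carefully.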

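My collaborators and I have applied reinforcement learning to cars, helicopters, quadrupeds, robot snakes, and many other applications. Yet today's reinforcement learning algorithms are still finicky. Poorly tuned hyperparameters in supervised deep learning may make your algorithm train 3x or 10x more slowly (which is bad), but in reinforcement learning they can make training 100x slower, if it converges at all! As with supervised learning ten years ago, many techniques have been developed to help reinforcement learning algorithms converge (such as double Q-learning, soft updates, experience replay, and epsilon-greedy exploration with a slowly decreasing epsilon). These methods are all clever, and I commend the researchers who developed them, but many of them introduce additional hyperparameters that, in my view, are very hard to tune.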

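Further research on reinforcement learning may follow the path of supervised deep learning and give us more robust algorithms along with systematic guidance on how to make these choices. One thing worries me, though. In supervised learning, benchmark datasets let researchers around the world tune algorithms against the same data and build on one another's work. In reinforcement learning, the more commonly used benchmarks are simulated environments such as OpenAI Gym. But getting a reinforcement learning algorithm to work on a simulated robot is far easier than getting it to work on a physical one.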

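Many algorithms that perform brilliantly in simulation struggle on physical robots. Even two copies of the same robot design will differ. Moreover, it is not feasible to give every aspiring reinforcement learning researcher their own copy of every robot. Researchers are making rapid progress on reinforcement learning for simulated robots (and for playing video games), but the bridge to applications in non-simulated environments is often missing. Many excellent research labs are working on physical robots. But because each robot is unique, one lab's results can be hard for other labs to reproduce, and this slows progress.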

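I do not yet have a solution to these thorny problems. But I hope everyone in AI can work together to make these algorithms more robust and more widely useful.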

Keep learning!

Andrew Ng

II. Original

Dear friends,

While working on Course 3 of the Machine Learning Specialization, which covers reinforcement learning, I was reflecting on how reinforcement learning algorithms are still quite finicky. They’re very sensitive to hyperparameter choices, and someone experienced at hyperparameter tuning might get 10x or 100x better performance. Supervised deep learning was equally finicky a decade ago, but it has gradually become more robust with research progress on systematic ways to build supervised models.

Will reinforcement learning (RL) algorithms also become more robust in the next decade? I hope so. However, RL faces a unique obstacle in the difficulty of establishing real-world (non-simulation) benchmarks.

When supervised deep learning was at an earlier stage of development, experienced hyperparameter tuners could get much better results than less-experienced ones. We had to pick the neural network architecture, regularization method, learning rate, schedule for decreasing the learning rate, mini-batch size, momentum, random weight initialization method, and so on. Picking well made a huge difference in the algorithm’s convergence speed and final performance.

Thanks to research progress over the past decade, we now have more robust optimization algorithms like Adam, better neural network architectures, and more systematic guidance for default choices of many other hyperparameters, making it easier to get good results. I suspect that scaling up neural networks (these days, I don’t hesitate to train a 20 million-plus parameter network, like ResNet-50, even if I have only 100 training examples) has also made them more robust. In contrast, if you’re training a 1,000-parameter network on 100 examples, every parameter matters much more, so tuning needs to be done much more carefully.

My collaborators and I have applied RL to cars, helicopters, quadrupeds, robot snakes, and many other applications. Yet today’s RL algorithms still feel finicky. Whereas poorly tuned hyperparameters in supervised deep learning might mean that your algorithm trains 3x or 10x more slowly (which is bad), in reinforcement learning, it feels like they might result in training 100x more slowly — if it converges at all! Similar to supervised learning a decade ago, numerous techniques have been developed to help RL algorithms converge (like double Q learning, soft updates, experience replay, and epsilon-greedy exploration with slowly decreasing epsilon). They’re all clever, and I commend the researchers who developed them, but many of these techniques create additional hyperparameters that seem to me very hard to tune.
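To make the point about extra hyperparameters concrete, here is a minimal Python sketch of three of the techniques named above: epsilon-greedy exploration with a decaying epsilon, soft updates of target weights, and an experience replay buffer. It is an illustration only, not code from the letter or the course. All function names, the linear decay schedule, and the default values (`eps_start`, `eps_end`, `decay_steps`, `tau`, `capacity`) are assumptions chosen for readability, not recommended settings.

```python
import random
from collections import deque


def decayed_epsilon(step, eps_start=1.0, eps_end=0.05, decay_steps=10_000):
    """Linearly anneal epsilon from eps_start to eps_end over decay_steps.

    Hypothetical schedule: three hyperparameters for exploration alone.
    """
    frac = min(step / decay_steps, 1.0)
    return eps_start + frac * (eps_end - eps_start)


def epsilon_greedy(q_values, epsilon, rng=random):
    """Pick a random action with probability epsilon, else the greedy one."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=lambda a: q_values[a])


def soft_update(target, online, tau=0.005):
    """Move each target weight a fraction tau toward the online weight."""
    return [(1.0 - tau) * t + tau * o for t, o in zip(target, online)]


class ReplayBuffer:
    """Fixed-capacity store of past transitions, sampled uniformly."""

    def __init__(self, capacity=100_000):
        # Oldest transitions are dropped once capacity is reached.
        self.items = deque(maxlen=capacity)

    def add(self, transition):
        self.items.append(transition)

    def sample(self, batch_size, rng=random):
        return rng.sample(list(self.items), batch_size)
```

Even in this toy form, the sketch exposes five tunable values beyond the learning rate and network architecture, and a real agent would also need a batch size and a target update frequency.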

Further research in RL may follow the path of supervised deep learning and give us more robust algorithms and systematic guidance for how to make these choices. One thing worries me, though. In supervised learning, benchmark datasets enable the global community of researchers to tune algorithms against the same dataset and build on each other’s work. In RL, the more-commonly used benchmarks are simulated environments like OpenAI Gym. But getting an RL algorithm to work on a simulated robot is much easier than getting it to work on a physical robot.

Many algorithms that work brilliantly in simulation struggle with physical robots. Even two copies of the same robot design will be different. Further, it’s infeasible to give every aspiring RL researcher their own copy of every robot. While researchers are making rapid progress on RL for simulated robots (and for playing video games), the bridge to application in non-simulated environments is often missing. Many excellent research labs are working on physical robots. But because each robot is unique, one lab’s results can be difficult for other labs to replicate, and this impedes the rate of progress.

I don’t have a solution to these knotty issues. But I hope that all of us in AI collectively will manage to make these algorithms more robust and more widely useful.

Keep learning!

Andrew

Published by Andrew Ng on 2022-08-05 10:10

Original link: [Letter from Andrew Ng] Progress in Reinforcement Learning!

边栏推荐

- websocket实现实时变化图表内容

- @RequestBody的使用[通俗易懂]

- tensorflow安装踩坑总结

- Summary of tensorflow installation stepping on the pit

- 字节终面:CPU 是如何读写内存的?

- Steam教育在新时代中综合学习论

- FPN详解

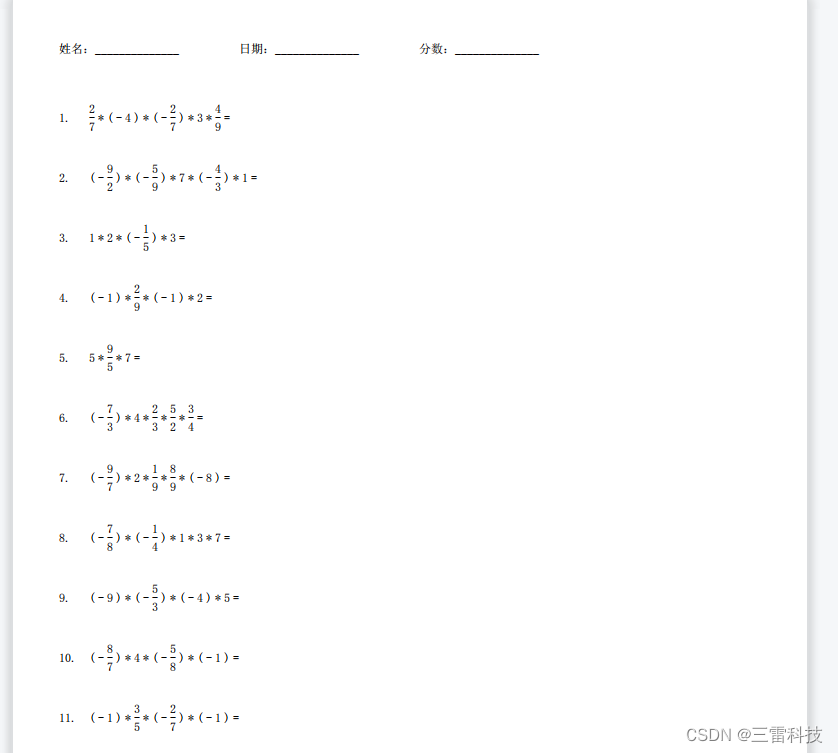

- Unfinished mathematics test paper ----- test paper generator (Qt includes source code)

- Send a post request at the front desk can't get the data

- Data product manager thing 2

猜你喜欢

Existing in the rain of PFAS chemical poses a threat to the safety of drinking water

中学数学建模书籍及相关的视频等(2022.08.09)

【有限元分析】异型密封圈计算泄漏量与参数化优化过程(带分析源文件)

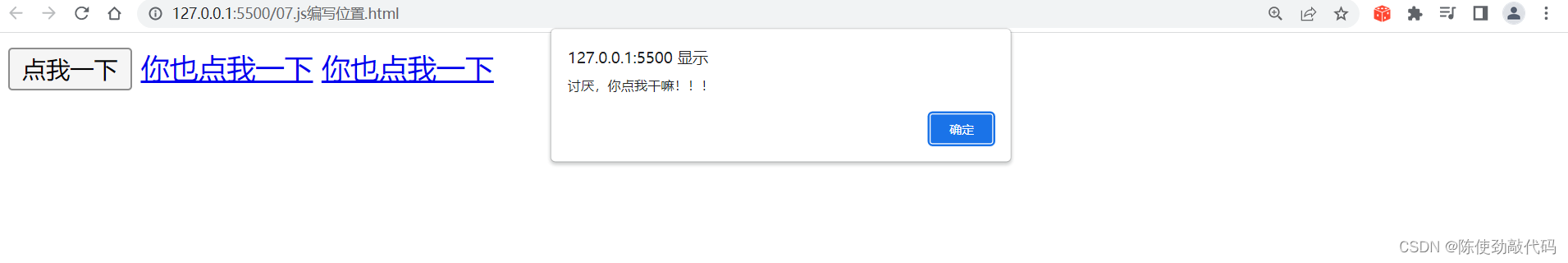

JS入门到精通完整版

关于已拦截跨源请求CORS 头缺少 ‘Access-Control-Allow-Origin‘问题解决

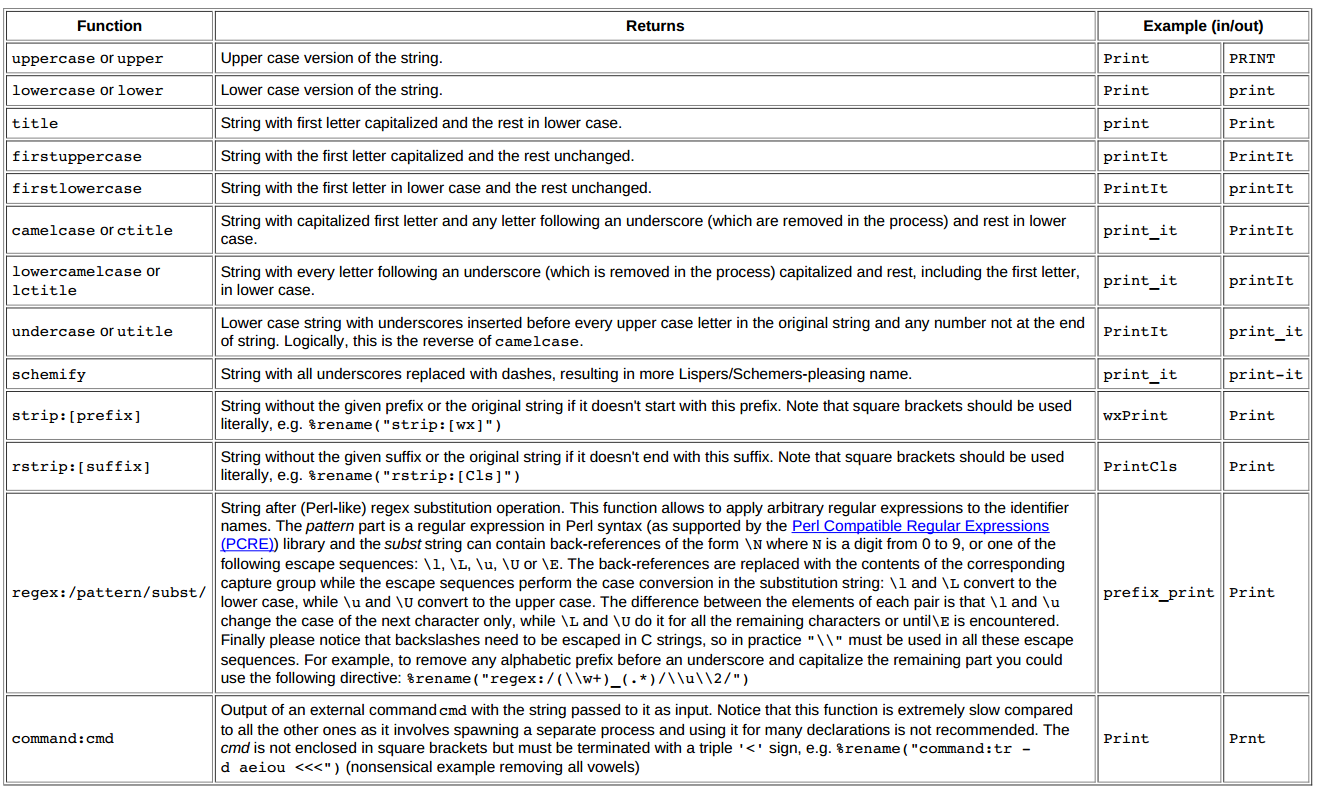

SWIG教程《二》

Unfinished mathematics test paper ----- test paper generator (Qt includes source code)

富爸爸穷爸爸之读书笔记

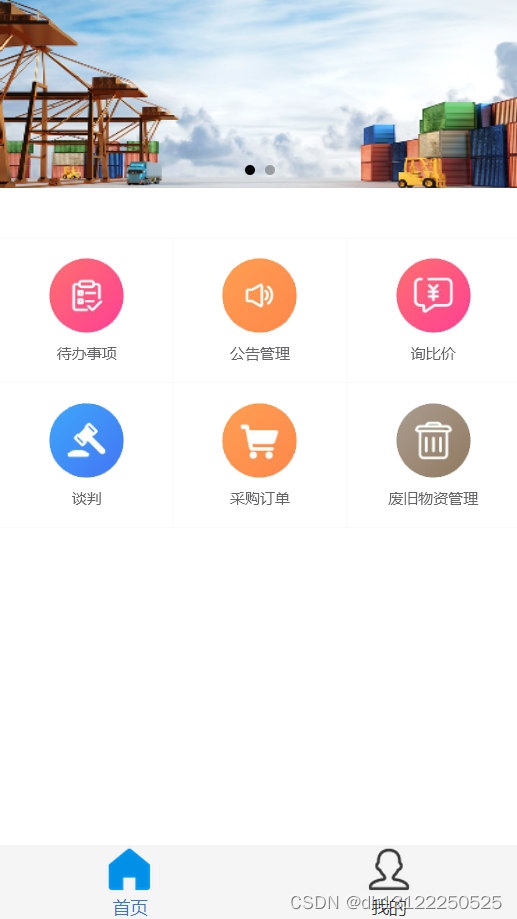

物资采购小程序开发制作功能介绍

laravel 抛错给钉钉

随机推荐

关于已拦截跨源请求CORS 头缺少 ‘Access-Control-Allow-Origin‘问题解决

SWIG教程《四》-go语言的封装

《论文阅读》PLATO: Pre-trained Dialogue Generation Model with Discrete Latent Variable

$‘\r‘: command not found

win2012安装Oraclerac失败

Flask框架——MongoEngine使用MongoDB数据库

写不完的数学试卷-----试卷生成器(Qt含源码)

List集合

【MinIO】Using tools

How does vue clear the tab switching cache problem?

微信扫码登陆(1)—扫码登录流程讲解、获取授权登陆二维码

d为何用模板参数

普林斯顿微积分读本05第四章--求解多项式的极限问题

websocket实现实时变化图表内容

2022年中国软饮料市场洞察

data product manager

符合信创要求的堡垒机有哪些?支持哪些系统?

第三方软件测评有什么作用?权威软件检测机构推荐

池化技术有多牛?来,告诉你阿里的Druid为啥如此牛逼!

DB2查询2个时间段之间的所有月份,DB2查询2个时间段之间的所有日期