当前位置:网站首页>Probably 95% of the people are still making PyTorch mistakes

Probably 95% of the people are still making PyTorch mistakes

2022-08-09 10:28:00 【PaperWeekly】

作者 | serendipity

单位 | 同济大学

研究方向 | 行人搜索、3D人体姿态估计

引言

或许是 by design,但是这个 bug 目前还存在于很多很多人的代码中.就连特斯拉 AI 总监 Karpathy 也被坑过,并发了一篇推文.

事实上,The twitter is a recent one bug 引发的,该 bug It is because of forget correctly as DataLoader workers 设置随机数种子,In the whole training process accident repeating batch 数据.

2018 年 2 Has been in PyTorch 的 repo 下提了 issue [1],但是直到 2021 年 4 Month to repair.此问题只在 PyTorch 1.9 Version appeared before,涉及范围之广,甚至包括了 PyTorch 官方教程 [2]、OpenAI 的代码 [3]、NVIDIA 的代码 [4].

PyTorch DataLoader的隐藏bug

在PyTorch中加载、Preprocessing and data standard method is:继承 torch.utils.data.Dataset 并重载它的 __getitem__ 方法.In order to enhances the data,Such as random cropping and image flip,该 __getitem__ 方法通常使用 NumPy 来生成随机数.And then pass the data set to DataLoader 创建 batch.Data preprocessing is likely to be the bottleneck of network training,So sometimes need to parallel loading data,这可以通过设置 Dataloader的 num_workers 参数来实现.

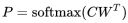

We use a simple code to copy this now bug,PyTorch 版本应 <1.9,I used in the experiment is 1.6.

import numpy as np

from torch.utils.data import Dataset, DataLoader

class RandomDataset(Dataset):

def __getitem__(self, index):

return np.random.randint(0, 1000, 3)

def __len__(self):

return 8

dataset = RandomDataset()

dataloader = DataLoader(dataset, batch_size=2, num_workers=2)

for batch in dataloader:

print(batch)输出为

tensor([[116, 760, 679], # 第1个batch, 由进程0返回

[754, 897, 764]])

tensor([[116, 760, 679], # 第2个batch, 由进程1返回

[754, 897, 764]])

tensor([[866, 919, 441], # 第3个batch, 由进程0返回

[ 20, 727, 680]])

tensor([[866, 919, 441], # 第4个batch, 由进程1返回

[ 20, 727, 680]])We were amazed to find that each process return random number is the same!!

问题原因

PyTorch 用 fork [5] Method to create more child process parallel loading data.This means that each child processes can inherit the parent process all resources,包括 Numpy The state of the random number generator.

解决方法

注: spawn Method is to build from scratch a child process,Won't inherit the parent state of random number. torch.multiprocessing 在Unix 系统中默认使用 fork ,在 MacOS 和 Windows上默认是 spawn .So the problem only in Unix 上出现.当然,Can also be mandatory in MacOS 和 Windows 中使用 fork 方式创建子进程.

DataLoaderThe constructor has an optional parameter worker_init_fn.在加载数据之前,Each child will call this function before.我们可以在 worker_init_fn 中设置 NumPy 的种子,例如:

def worker_init_fn(worker_id):

# np.random.get_state(): 得到当前的NumpyState of the random number,The main process of random state

# worker_id是子进程的id,如果num_workers=2,Two childid分别是0和1

# 和worker_idAdditive can ensure that every child has a different random number seed

np.random.seed(np.random.get_state()[1][0] + worker_id)

dataset = RandomDataset()

dataloader = DataLoader(dataset, batch_size=2, num_workers=2, worker_init_fn=worker_init_fn)

for batch in dataloader:

print(batch)正如我们期望的那样,每个 batch 的值都是不同的.

tensor([[282, 4, 785],

[ 35, 581, 521]])

tensor([[684, 17, 95],

[774, 794, 420]])

tensor([[180, 413, 50],

[894, 318, 729]])

tensor([[530, 594, 116],

[636, 468, 264]])等一下,If we again much iteration several epoch 呢?

for epoch in range(3):

print(f"epoch: {epoch}")

for batch in dataloader:

print(batch)

print("-"*25)我们发现,虽然在一个 epoch Back to normal within,但是不同 epoch Between the repeat again.

epoch: 0

tensor([[282, 4, 785],

[ 35, 581, 521]])

tensor([[684, 17, 95],

[774, 794, 420]])

tensor([[939, 988, 37],

[983, 933, 821]])

tensor([[832, 50, 453],

[ 37, 322, 981]])

-------------------------

epoch: 1

tensor([[282, 4, 785],

[ 35, 581, 521]])

tensor([[684, 17, 95],

[774, 794, 420]])

tensor([[939, 988, 37],

[983, 933, 821]])

tensor([[832, 50, 453],

[ 37, 322, 981]])

-------------------------

epoch: 2

tensor([[282, 4, 785],

[ 35, 581, 521]])

tensor([[684, 17, 95],

[774, 794, 420]])

tensor([[939, 988, 37],

[983, 933, 821]])

tensor([[832, 50, 453],

[ 37, 322, 981]])

-------------------------因为在默认情况下,每个子进程在 epoch Was killed at the end of the,All the process of resources will be lost.在开始新的 epoch 时,In the process of the main random state has not changed,Used to initialize each child process again,So the child to the random number seed and the last epoch 完全相同.

因此We need to set up a meeting with epoch The number changed by random number,例如:np.random.get_state()[1][0] + epoch + worker_id.

The random number in practice it is difficult to realize,因为在 worker_init_fn Don't know the current is which a epoch.但是 torch.initial_seed() 可以满足我们的需求.

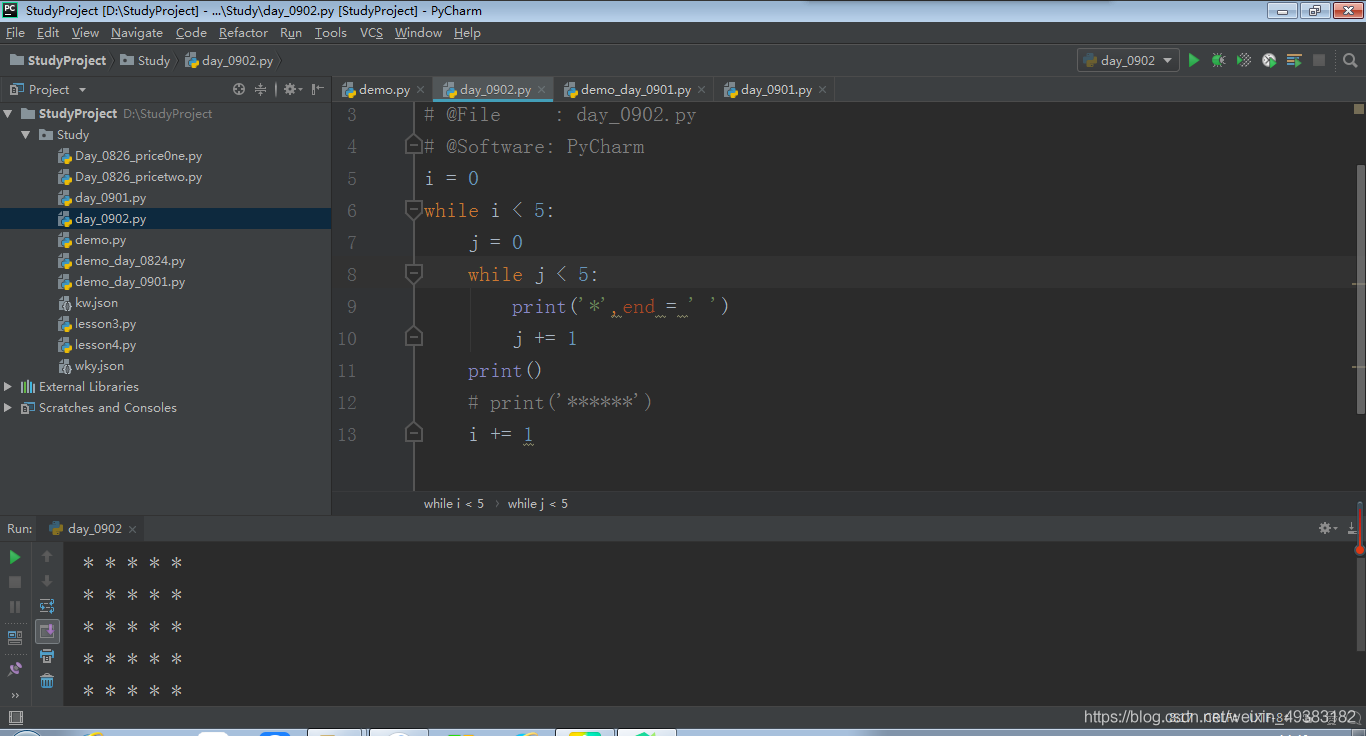

def seed_worker(worker_id):

worker_seed = torch.initial_seed() % 2**32

np.random.seed(worker_seed)实际上,这就是 PyTorch 官方推荐的做法 [6].

Not ready to delve into the reader can already be here,以后创建 DataLoader 时,把 worker_init_fn 设置为上面的 seed_worker 函数即可.Want to understand the principle behind,请看下一节,会涉及到 DataLoader 的源码理解.

为什么torch.initial_seed()可以?

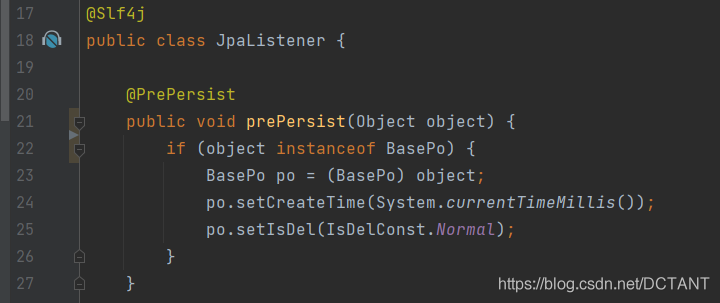

We first need to understand the processes more DataLoader 的处理流程.

1. 在主进程中实例化 DataLoader(dataset, num_workers=2).

2. 创建两个 multiprocessing.Queue [7] To tell the two child process which data should be responsible for their respective take.假设 Queue1 = [0, 2], Queue2 = [1, 3] On behalf of the first child process should be responsible for taking the first 0,2 个数据,The second process is responsible for the first 1,3 个数据.When the user to take the first index 个数据时,Main process query first which the child is free,If the second child process free,则把 index 放入到 Queue2 中. 再创建一个 result_queue [8] Used to save the child to read data,格式为 (index, dataset[index]).

3. 每个 epoch 开始时,主要干两件事情.a): Randomly generated a seed [9] base_seed b): 用 fork 方法创建 2 个子进程 [10].在每个子进程中,将 torch 和 random 的The random number seed set to base_seed + worker_id.Then have constantly query the respective queue data,如果有,Just get the index,从 dataset 中获取第 index 个数据 dataset[index],将结果保存到 result_queue 中.

在子进程中运行 torch.initial_seed(),返回的就是 torch The random number seed,即 base_seed + worker_id.因为每个 epoch 开始时,The master will regenerate the a base_seed,所以 base_seed 是随 epoch Changes in the random number.此外,torch.initial_seed()返回的是 long int 类型,而 Numpy 只接受 uint 类型([0, 2**32 - 1]),所以需要对 2**32 取模.

如果我们用 torch 或者 random 生成随机数,而不是 numpy,Do not have to worry about will encounter this problem,因为 PyTorch 已经把 torch 和 random Random number set up in order to base_seed + worker_id.

综上所述,这个 bug The emergence of the need to satisfy the following two conditions:

PyTorch 版本 < 1.9

在 Dataset 的

__getitem__方法中使用了 Numpy 的随机数

附录

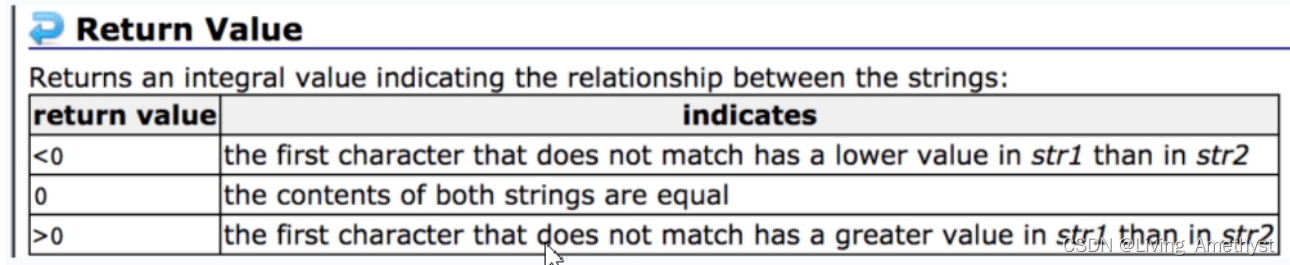

Some candidates.

pytorch-image-models [11]

def seed_worker(worker_id): worker_info = torch.utils.data.get_worker_info() # worker_info.seed == torch.initial_seed() np.random.seed(worker_info.seed % 2**32)@晚星 [12]

def seed_worker(worker_id): seed = np.random.default_rng().integers(low=0, high=2**32, size=1) np.random.seed(seed)@ggggnui [13]

class WorkerInit: def __init__(self, global_step): self.global_step = global_step def worker_init_fn(self, worker_id): np.random.seed(self.global_step + worker_id) def update_global_step(self, global_step): self.global_step = global_step worker_init = WorkerInit(0) dataloader = DataLoader(dataset, batch_size=2, num_workers=2, worker_init_fn=worker_init.worker_init_fn) for epoch in range(3): for batch in dataloader: print(batch) # 需要注意的是len(dataloader)必须>=num_workers,Otherwise will repeat worker_init.update_global_step((epoch + 1) * len(dataloader))

文内链接 & 参考文献

[1] https://github.com/pytorch/pytorch/issues/5059

[2] https://github.com/pytorch/tutorials/blob/af754cbdaf5f6b0d66a7c5cd07ab97b349f3dd9b/beginner_source/data_loading_tutorial.py%23L270-L271

[3] https://github.com/openai/ebm_code_release/blob/18898a24ee24dcd75c41ac3e228b9db79e53237c/data.py%23L465-L470

[4] https://github.com/NVlabs/Deep_Object_Pose/blob/11bbc3b8545e099b35901a13f549ddddacd7dd1f/scripts/train.py%23L518-L521

[5] https://docs.python.org/3/library/multiprocessing.html%23contexts-and-start-methods

[6] https://pytorch.org/docs/stable/notes/randomness.html%23dataloader

[7] https://github.com/pytorch/pytorch/blob/bc3d892c20ee8cf6c765742481526f307e20312a/torch/utils/data/dataloader.py%23L897

[8] https://github.com/pytorch/pytorch/blob/bc3d892c20ee8cf6c765742481526f307e20312a/torch/utils/data/dataloader.py%23L888

[9] https://github.com/pytorch/pytorch/blob/bc3d892c20ee8cf6c765742481526f307e20312a/torch/utils/data/dataloader.py%23L495

[10] https://github.com/pytorch/pytorch/blob/bc3d892c20ee8cf6c765742481526f307e20312a/torch/utils/data/dataloader.py%23L901

[11] https://github.com/rwightman/pytorch-image-models/blob/e4360e6125bb0bb4279785810c8eb33b40af3ebd/timm/data/loader.py#L149

[12] https://www.zhihu.com/people/wan-xing-13

[13] https://www.zhihu.com/people/ggggnui

[14] https://tanelp.github.io/posts/a-bug-that-plagues-thousands-of-open-source-ml-projects/

[15] https://github.com/pytorch/pytorch/pull/56488

更多阅读

#投 稿 通 道#

让你的文字被更多人看到

如何才能让更多的优质内容以更短路径到达读者群体,缩短读者寻找优质内容的成本呢?答案就是:你不认识的人.

总有一些你不认识的人,知道你想知道的东西.PaperWeekly 或许可以成为一座桥梁,促使不同背景、不同方向的学者和学术灵感相互碰撞,迸发出更多的可能性.

PaperWeekly 鼓励高校实验室或个人,在我们的平台上分享各类优质内容,可以是最新论文解读,也可以是学术热点剖析、科研心得或竞赛经验讲解等.我们的目的只有一个,让知识真正流动起来.

稿件基本要求:

• 文章确系个人原创作品,未曾在公开渠道发表,如为其他平台已发表或待发表的文章,请明确标注

• 稿件建议以 markdown 格式撰写,文中配图以附件形式发送,要求图片清晰,无版权问题

• PaperWeekly 尊重原作者署名权,并将为每篇被采纳的原创首发稿件,提供业内具有竞争力稿酬,具体依据文章阅读量和文章质量阶梯制结算

投稿通道:

• 投稿邮箱:[email protected]

• 来稿请备注即时联系方式(微信),以便我们在稿件选用的第一时间联系作者

• 您也可以直接添加小编微信(pwbot02)快速投稿,备注:姓名-投稿

△长按添加PaperWeekly小编

现在,在「知乎」也能找到我们了

进入知乎首页搜索「PaperWeekly」

点击「关注」订阅我们的专栏吧

·

边栏推荐

- KeyBERT和labse提取字符串中的关键词

- SQL Server查询优化

- Master-slave postition changes cannot be locked_Slave_IO_Running shows No_Slave_Sql_Running shows No---Mysql master-slave replication synchronization 002

- unix环境编程 第十五章 15.10 POSIX信号量

- 分类预测 | MATLAB实现CNN-GRU(卷积门控循环单元)多特征分类预测

- MySQL索引的B+树到底有多高?

- 集合与函数

- EndNoteX9 OR X 20 Guide

- Win7 远程桌面限制IP

- stimulus.js 初体验

猜你喜欢

随机推荐

史上最小白之《Word2vec》详解

The GNU Privacy Guard

Browser error classification

GeoScene Pro 2.1下载地址与安装基本要求

机器学习--朴素贝叶斯(Naive Bayes)

3D printed this DuPont cable management artifact, and the desktop is no longer messy

2021-01-11-雪碧图做表情管理器

socket实现TCP/IP通信

从源码分析UUID类的常用方法

主从postition变化无法锁定_Slave_IO_Running显示No_Slave_Sql_Running显示No---Mysql主从复制同步002

分类预测 | MATLAB实现CNN-LSTM(卷积长短期记忆神经网络)多特征分类预测

需求侧电力负荷预测(Matlab代码实现)

【原创】解决阿里云oss-browser.exe双击没反应打不开,提供一种解决方案

按键精灵之输出文本

conditional control statement

阿里神作!吃透这份资料入厂率高达99%

StratoVirt 中的虚拟网卡是如何实现的?

Transformer+Embedding+Self-Attention原理详解

函数二

EndNote User Guide

![[贴装专题] 贴装流程中涉及到的位置关系计算](/img/72/a60a51c86e641749f38fab66f1236a.png)