
Experiment record: the process of building a network

2022-08-09 11:44:00 【anonymous magician】

I. Problems encountered

1. RuntimeError: Input type (torch.cuda.FloatTensor) and weight type (torch.FloatTensor) should be the same

This error came up when I wanted to test the network I had built by checking the output shape of each stage. For that, I created a fixed-shape input as follows:

search_var = torch.FloatTensor(1, 3, 255, 255).cuda()

The error message shows that the input is a torch.cuda.FloatTensor (on the GPU) while the weights are a torch.FloatTensor (still on the CPU). Therefore the model also has to be moved to CUDA.
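This small device-agnostic sketch reproduces the setup without the mismatch on any machine. It uses a single nn.Conv2d as a stand-in for the real model, which is an assumption. It also falls back to the CPU when torch.cuda.is_available() is False. The point is that the model's parameters and the input end up on the same device. It uses torch.rand instead of torch.FloatTensor(...), because the latter returns uninitialized values.

```python
import torch
import torch.nn as nn

# Use the GPU when one is present; otherwise fall back to the CPU so the
# sketch also runs on machines without CUDA.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# A single conv layer stands in for the real network (assumption).
model = nn.Conv2d(3, 8, kernel_size=3)

# .to(device) moves the parameters, i.e. the "weight type" in the error.
model = model.to(device)

# Create the test input directly on the same device as the weights.
search_var = torch.rand(1, 3, 255, 255, device=device)

out = model(search_var)
print(out.shape)  # torch.Size([1, 8, 253, 253])
```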

Solution:

model = model.cuda()

2. TypeError: 'PointTarget' object is not iterable

This was caused by a problem in how the points code was designed, specifically by how the class is defined:

class PointTarget:
    def __init__(self):
        ...

    def __call__(self, target, size, neg=False):
        ...

The error comes from a conflict between the parameters passed in through __init__ and those passed in through __call__, so the class had to be changed.

In addition, when using the class, you must first create (initialize) an instance of it:

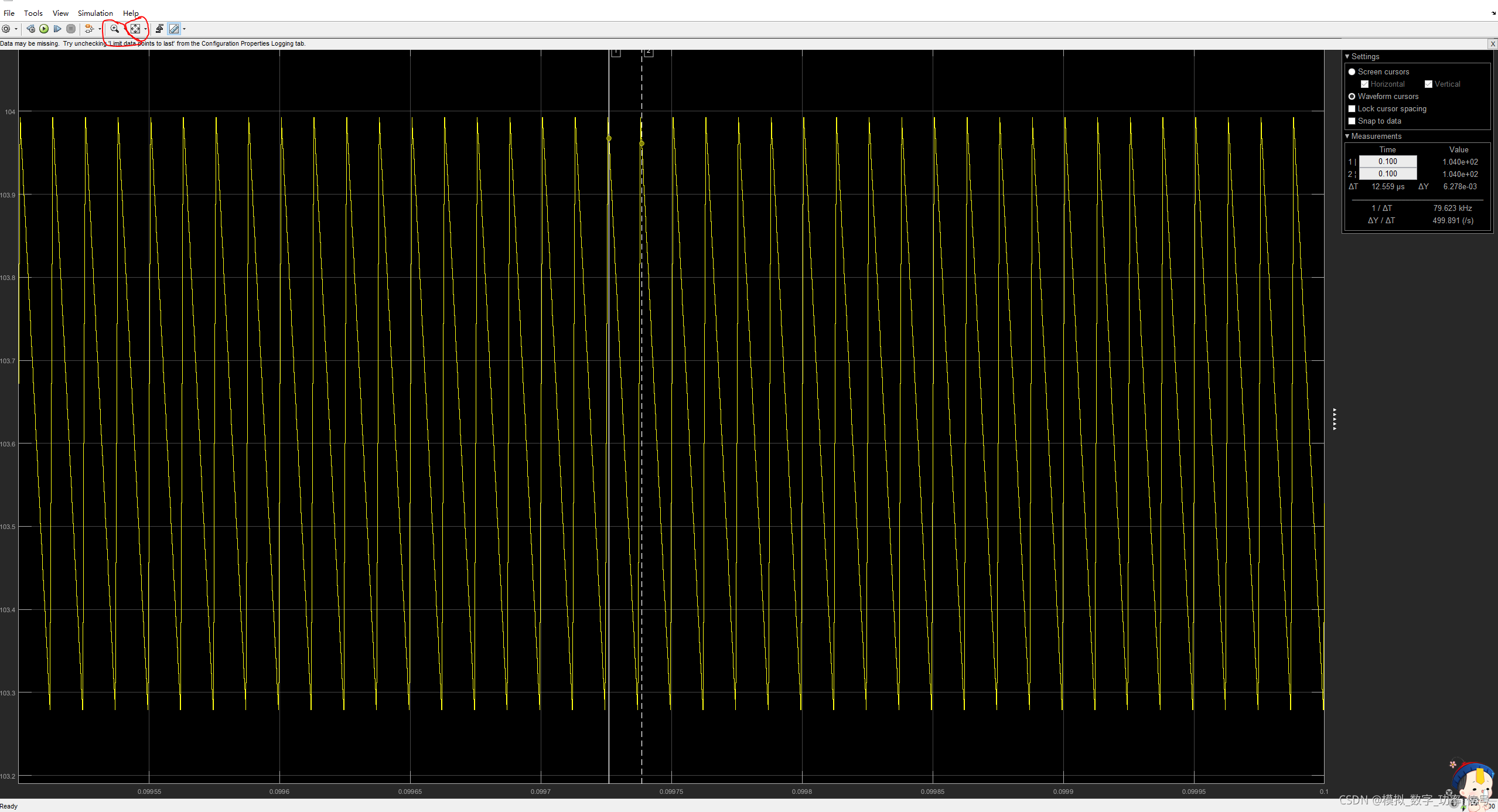
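Here is a minimal sketch of that usage pattern. The PointTarget below is hypothetical and heavily simplified; its placeholder labels are not the original logic. Configuration goes into __init__, per-sample arguments go into __call__, and the instance is created once before it is called.

```python
import torch

class PointTarget:
    """Hypothetical, simplified stand-in for the real label generator."""

    def __init__(self, stride=8):
        # One-time configuration belongs in __init__.
        self.stride = stride

    def __call__(self, target, size, neg=False):
        # Per-sample inputs belong in __call__, which returns a (cls, delta) pair.
        # Placeholder labels only: all-zero cls and zero offsets.
        cls = torch.zeros(size, size, dtype=torch.long)
        delta = torch.zeros(4, size, size)
        return cls, delta

target = (100.0, 100.0, 150.0, 150.0)  # example box (x1, y1, x2, y2)

# Wrong: this unpacks the instance itself, not the result of calling it,
# and raises a TypeError (the exact message depends on the Python version).
try:
    cls, delta = PointTarget()
except TypeError as err:
    print("TypeError:", err)

# Right: create the instance once, then call it for each sample.
point_target = PointTarget()
cls, delta = point_target(target, size=25)
print(cls.shape, delta.shape)  # torch.Size([25, 25]) torch.Size([4, 25, 25])
```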

3. While debugging, a variable showed "unable to get repr for" instead of its value. Here, the classification loss is focal loss, so the predictions must be mapped into the range 0 to 1 before they are passed to it. That means a sigmoid has to be applied first.
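For reference, here is a sketch of a sigmoid focal loss. It follows the usual formulation with the commonly used defaults alpha=0.25 and gamma=2, which are assumptions, not values taken from the original config. The sigmoid is applied inside the loss, so the network can output raw logits. The sketch uses binary_cross_entropy_with_logits for numerical stability. torchvision also ships torchvision.ops.sigmoid_focal_loss if a ready-made version is preferred.

```python
import torch
import torch.nn.functional as F

def sigmoid_focal_loss(logits, targets, alpha=0.25, gamma=2.0):
    """Binary focal loss on raw logits; targets are 0/1 floats of the same shape."""
    # The sigmoid maps raw network outputs into (0, 1), as focal loss expects.
    p = torch.sigmoid(logits)
    # Per-element binary cross-entropy, computed stably from the logits.
    ce = F.binary_cross_entropy_with_logits(logits, targets, reduction="none")
    # p_t is the predicted probability of the true class.
    p_t = p * targets + (1 - p) * (1 - targets)
    # alpha_t balances positive and negative samples.
    alpha_t = alpha * targets + (1 - alpha) * (1 - targets)
    # (1 - p_t) ** gamma down-weights easy, well-classified samples.
    loss = alpha_t * (1 - p_t) ** gamma * ce
    return loss.mean()

logits = torch.randn(2, 1, 25, 25) * 5  # raw outputs, often far outside [0, 1]
targets = (torch.rand(2, 1, 25, 25) > 0.9).float()
loss = sigmoid_focal_loss(logits, targets)
print(loss)
```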

4. RuntimeError: CUDA error: device-side assert triggered

5. The network output contains NaN values

This can happen when, in the network definition, the output of some layer is not passed through normalization or a ReLU, so its values become abnormal.
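One way to locate the layer that first produces NaN is to register forward hooks on every module. The conv_bn_relu block below illustrates adding normalization and a ReLU after a convolution. The layer sizes and the injected NaN are assumptions for demonstration. If the NaN appears only in the backward pass, torch.autograd.set_detect_anomaly(True) is another option.

```python
import torch
import torch.nn as nn

def conv_bn_relu(c_in, c_out):
    # BatchNorm after each conv keeps activations from drifting to huge values.
    return nn.Sequential(
        nn.Conv2d(c_in, c_out, kernel_size=3, padding=1),
        nn.BatchNorm2d(c_out),
        nn.ReLU(inplace=True),
    )

def add_nan_hooks(model):
    """Record, in execution order, modules whose output contains NaN or Inf."""
    bad = []

    def make_hook(name):
        def hook(module, inputs, output):
            if isinstance(output, torch.Tensor) and not torch.isfinite(output).all():
                bad.append(name)
        return hook

    handles = [m.register_forward_hook(make_hook(n)) for n, m in model.named_modules()]
    return bad, handles

model = nn.Sequential(conv_bn_relu(3, 16), conv_bn_relu(16, 16))
bad, handles = add_nan_hooks(model)

x = torch.rand(2, 3, 32, 32)
x[0, 0, 0, 0] = float("nan")  # inject a NaN so the hooks have something to report
model(x)
print(bad)  # the first entry is the first module whose output was non-finite

for h in handles:  # remove the hooks once debugging is done
    h.remove()
```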

6. config.py conflicts with config.yaml

If a parameter set in config.yaml also appears in config.py, make sure the value in config.py is the same as the one in config.yaml.
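This check assumes the common setup in which config.py holds defaults and config.yaml overrides them. Under that assumption, a quick consistency check can compare the two as nested dicts. The helper find_conflicts is hypothetical and the values are invented. Loading the YAML file (for example with PyYAML's yaml.safe_load) is not shown.

```python
def find_conflicts(defaults, overrides, prefix=""):
    """Return (dotted_key, py_value, yaml_value) for every key set differently.

    Both arguments are nested dicts: the defaults from config.py and the
    values read from config.yaml.
    """
    conflicts = []
    for key, value in overrides.items():
        if key not in defaults:
            continue  # only keys present in both files can conflict
        name = prefix + key
        default = defaults[key]
        if isinstance(value, dict) and isinstance(default, dict):
            conflicts += find_conflicts(default, value, prefix=name + ".")
        elif value != default:
            conflicts.append((name, default, value))
    return conflicts

# Made-up values, only to show the check.
py_cfg = {"TRAIN": {"BASE_LR": 0.005, "EPOCH": 20}, "BACKBONE": {"LAYERS_LR": 0.1}}
yaml_cfg = {"TRAIN": {"BASE_LR": 0.01, "EPOCH": 20}, "BACKBONE": {"LAYERS_LR": 0.1}}
print(find_conflicts(py_cfg, yaml_cfg))  # [('TRAIN.BASE_LR', 0.005, 0.01)]
```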

7. Distributed training hangs

One workaround is to set

--nproc_per_node=1

However, the next morning I set it back to 2.

II. Implementation process

1. Build your own network

First, build the model according to the design and write the code. The model is built in parts: complete each basic component first, then connect them together. For example, the model can be divided into a backbone and a head.

Finally, the model's forward pass is implemented in a model class that inherits from PyTorch's nn.Module.
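Here is a toy sketch of this backbone + head structure in an nn.Module subclass. It assumes a Siamese-style setup with a template branch and a search branch, matching the 127 and 255 input sizes used below. The layer sizes and the depthwise cross-correlation that fuses the two branches are illustrative assumptions, not the author's design.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

def xcorr_depthwise(x, kernel):
    """Depthwise cross-correlation: each channel of kernel slides over x."""
    batch, channel = kernel.shape[:2]
    x = x.reshape(1, batch * channel, x.size(2), x.size(3))
    kernel = kernel.reshape(batch * channel, 1, kernel.size(2), kernel.size(3))
    out = F.conv2d(x, kernel, groups=batch * channel)
    return out.reshape(batch, channel, out.size(2), out.size(3))

class Backbone(nn.Module):
    def __init__(self):
        super().__init__()
        self.body = nn.Sequential(
            nn.Conv2d(3, 16, kernel_size=3, stride=2, padding=1),
            nn.BatchNorm2d(16),
            nn.ReLU(inplace=True),
            nn.Conv2d(16, 32, kernel_size=3, stride=2, padding=1),
            nn.BatchNorm2d(32),
            nn.ReLU(inplace=True),
        )

    def forward(self, x):
        return self.body(x)

class Head(nn.Module):
    def __init__(self, channels=32):
        super().__init__()
        self.cls = nn.Conv2d(channels, 2, kernel_size=1)  # classification branch
        self.reg = nn.Conv2d(channels, 4, kernel_size=1)  # regression branch

    def forward(self, x):
        return self.cls(x), self.reg(x)

class ToyTracker(nn.Module):
    def __init__(self):
        super().__init__()
        self.backbone = Backbone()  # each part can be built and tested on its own
        self.head = Head()

    def forward(self, template, search):
        zf = self.backbone(template)    # template features
        xf = self.backbone(search)      # search features
        feat = xcorr_depthwise(xf, zf)  # fuse the two branches
        return self.head(feat)

model = ToyTracker()
cls, reg = model(torch.rand(1, 3, 127, 127), torch.rand(1, 3, 255, 255))
print(cls.shape, reg.shape)  # torch.Size([1, 2, 33, 33]) torch.Size([1, 4, 33, 33])
```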

You can create arbitrary input tensors and then follow, step by step, how the feature shapes change as they pass through the network. The input tensors can be created like this:

template_var = torch.rand((1, 3, 127, 127))
search_var = torch.rand((1, 3, 255, 255))

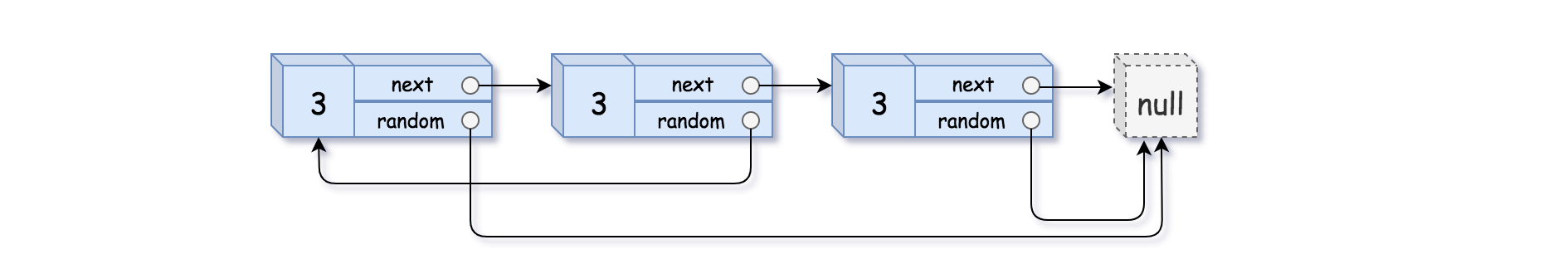
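Besides printing shapes by hand, forward hooks can log every layer's output shape in one pass. In this sketch, a small nn.Sequential stands in for the real network (an assumption), and the input uses the same search_var shape as above.

```python
import torch
import torch.nn as nn

def trace_shapes(model, *inputs):
    """Run one forward pass and record each leaf module's output shape."""
    records, handles = [], []
    for name, module in model.named_modules():
        if len(list(module.children())) == 0:  # leaf modules only
            def hook(mod, inp, out, name=name):
                records.append((name, tuple(out.shape)))
            handles.append(module.register_forward_hook(hook))
    with torch.no_grad():
        model(*inputs)
    for h in handles:
        h.remove()
    return records

# Small stand-in network (assumption; substitute the real model).
net = nn.Sequential(
    nn.Conv2d(3, 16, kernel_size=3, stride=2, padding=1),
    nn.ReLU(),
    nn.Conv2d(16, 32, kernel_size=3, stride=2, padding=1),
)
search_var = torch.rand((1, 3, 255, 255))
for name, shape in trace_shapes(net, search_var):
    print(name, shape)
# 0 (1, 16, 128, 128)
# 1 (1, 16, 128, 128)
# 2 (1, 32, 64, 64)
```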

2. Design and implement the calculation of the loss function

Once the network is built, the model produces outputs in the desired form, and the next step is to design and implement the loss function. First, work out the overall pipeline from what the loss function needs. This involves obtaining the labels: how do we get labels that match the desired output? The shape of the labels generally corresponds to the output shape of the model. The labels must correspond one-to-one with the predicted outputs. This correspondence depends on applying matching shape transformations, such as permute and torch.cat, to both the labels and the predictions.
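The one-to-one correspondence can be checked directly. This sketch makes several assumptions that do not come from the original code: a 2-channel classification output of shape (B, 2, H, W), labels of shape (B, H, W) with -1 marking ignored positions, and cross-entropy as the loss. The key step is permuting the prediction to channels-last before flattening, so that its rows line up with the flattened labels. If predictions from several levels are joined with torch.cat, the labels have to be concatenated in the same order.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)
B, H, W = 2, 25, 25
cls_pred = torch.randn(B, 2, H, W)           # model output: (B, C, H, W)
cls_label = torch.randint(-1, 2, (B, H, W))  # -1 = ignore, 0 = neg, 1 = pos

# Move channels last so every row of pred_flat is one spatial position,
# in the same (b, i, j) order as label_flat.
pred_flat = cls_pred.permute(0, 2, 3, 1).reshape(-1, 2)
label_flat = cls_label.reshape(-1)

# Check the correspondence at an arbitrary position.
b, i, j = 1, 3, 7
k = b * H * W + i * W + j
assert torch.equal(pred_flat[k], cls_pred[b, :, i, j])

# Drop ignored positions, then compute the loss on matching pairs.
keep = label_flat != -1
loss = F.cross_entropy(pred_flat[keep], label_flat[keep])
print(pred_flat.shape, label_flat.shape)  # torch.Size([1250, 2]) torch.Size([1250])
```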

1) Create the points
2) Get the labels cls and reg
3) Build the dataloader when assembling the data for actual training
4) Build the model
5) Feed the data into the model to get the predicted output
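Steps 1) and 2) can be sketched as follows. Two things here are assumptions made for illustration. The first is the point layout: a 25 x 25 grid with stride 8, centred on a 255 x 255 search image. The second is the labelling rule: points inside the ground-truth box are positive, and the regression target is the distance from each point to the four box edges. The original assignment rule may differ.

```python
import torch

def make_points(stride, size, offset):
    """(x, y) image coordinates for every cell of a size x size score map."""
    # Assumed layout: cell (i, j) maps to (offset + j * stride, offset + i * stride).
    coords = offset + torch.arange(size, dtype=torch.float32) * stride
    ys, xs = torch.meshgrid(coords, coords, indexing="ij")
    return torch.stack([xs, ys])  # shape (2, size, size)

def point_labels(points, box):
    """cls: 1 for points inside the box, else 0; reg: distances (l, t, r, b) to its edges."""
    x1, y1, x2, y2 = box
    xs, ys = points
    reg = torch.stack([xs - x1, ys - y1, x2 - xs, y2 - ys])  # shape (4, size, size)
    cls = (reg.min(dim=0).values > 0).long()                  # inside <=> all four > 0
    return cls, reg

# 25 x 25 map with stride 8, centred on pixel 127 of a 255 x 255 search image.
points = make_points(stride=8, size=25, offset=127 - 12 * 8)
cls, reg = point_labels(points, box=(100.0, 100.0, 150.0, 150.0))
print(points.shape, cls.shape, reg.shape)
print(int(cls.sum()))  # 36 positive points (a 6 x 6 block inside the box)
```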

3. Integrating the model into training

1) Pay attention to how the data is reset: check whether the labels in the data match.
2) Check that the model's forward pass runs smoothly during training.
3) Set the learning rate and optimizer for each module of the network, keeping these settings decoupled from one another.

The learning rate is set per parameter group through a dictionary. Each dictionary sets the 'params' key and the 'lr' key to their corresponding values:

trainable_params = []
# The initial learning rate of the backbone may differ from that of the other parts
trainable_params += [{'params': filter(lambda x: x.requires_grad, model.backbone.parameters()), 'lr': cfg.BACKBONE.LAYERS_LR * cfg.TRAIN.BASE_LR}]
if cfg.ADJUST.ADJUST:  # True
    trainable_params += [{'params': model.fpn.parameters(), 'lr': cfg.TRAIN.BASE_LR}]
# trainable_params += [{'params': model.ban.parameters(), 'lr': cfg.TRAIN.BASE_LR}]
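Here is a runnable version of the same idea, with a made-up cfg and a toy model whose backbone and fpn parts mirror the snippet above. The head group is an assumption, since the corresponding ban line in the snippet above is commented out. Each dictionary becomes one entry of optimizer.param_groups with its own lr.

```python
import types
import torch
import torch.nn as nn

# Stand-in for the project's cfg object; the values are made up.
cfg = types.SimpleNamespace(
    TRAIN=types.SimpleNamespace(BASE_LR=0.005),
    BACKBONE=types.SimpleNamespace(LAYERS_LR=0.1),
    ADJUST=types.SimpleNamespace(ADJUST=True),
)

class ToyModel(nn.Module):
    """Hypothetical model with three parts: backbone, fpn and head."""

    def __init__(self):
        super().__init__()
        self.backbone = nn.Conv2d(3, 8, kernel_size=3)
        self.fpn = nn.Conv2d(8, 8, kernel_size=1)
        self.head = nn.Conv2d(8, 6, kernel_size=1)

model = ToyModel()

# Each dict is one parameter group with its own learning rate.
trainable_params = [{
    "params": filter(lambda p: p.requires_grad, model.backbone.parameters()),
    "lr": cfg.BACKBONE.LAYERS_LR * cfg.TRAIN.BASE_LR,  # scaled lr for the backbone
}]
if cfg.ADJUST.ADJUST:
    trainable_params.append({"params": model.fpn.parameters(), "lr": cfg.TRAIN.BASE_LR})
trainable_params.append({"params": model.head.parameters(), "lr": cfg.TRAIN.BASE_LR})

# The optimizer-level lr is only the default for groups that do not set their own.
optimizer = torch.optim.SGD(trainable_params, lr=cfg.TRAIN.BASE_LR, momentum=0.9)
for group in optimizer.param_groups:
    print(len(group["params"]), group["lr"])
```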

4. Inference process

The inference process and the training process are two distinct parts. They usually live in the same tracker class, but each needs its own separately defined procedure.
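This sketch keeps both paths in one class. forward handles training, and two inference methods, template and track, sit alongside it; these method names are hypothetical. The tiny layers and the fusion step are placeholders. The inference methods run under torch.no_grad(). They cache the template features, so those are computed only once per video.

```python
import torch
import torch.nn as nn

class ToySiamese(nn.Module):
    """Hypothetical model with separate training and inference paths."""

    def __init__(self):
        super().__init__()
        self.backbone = nn.Conv2d(3, 8, kernel_size=3, stride=2, padding=1)
        self.head = nn.Conv2d(8, 1, kernel_size=1)
        self.zf = None  # cached template features, only used at inference time

    def fuse(self, zf, xf):
        # Placeholder fusion: scale search features by pooled template features.
        return xf * zf.mean(dim=(2, 3), keepdim=True)

    def forward(self, template, search):
        # Training path: each batch carries template/search pairs, gradients on.
        return self.head(self.fuse(self.backbone(template), self.backbone(search)))

    @torch.no_grad()
    def template(self, z):
        # Inference step 1: encode the target once, on the first frame.
        self.zf = self.backbone(z)

    @torch.no_grad()
    def track(self, x):
        # Inference step 2: reuse the cached template for every later frame.
        return self.head(self.fuse(self.zf, self.backbone(x)))

model = ToySiamese()
out = model(torch.rand(2, 3, 127, 127), torch.rand(2, 3, 255, 255))  # training
model.eval()
model.template(torch.rand(1, 3, 127, 127))
score = model.track(torch.rand(1, 3, 255, 255))
print(out.shape, score.shape)
```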


边栏推荐

猜你喜欢

随机推荐

redis内存的淘汰机制

WPF 实现带蒙版的 MessageBox 消息提示框

mysql + redis + flask + flask-sqlalchemy + flask-session 配置及项目打包移植部署

结构体知识点整合(前篇)

[工程数学]1_特征值与特征向量

【C language】动态数组的创建和使用

wait system call

研发需求的验收标准应该怎么写? | 敏捷实践

wait系统调用

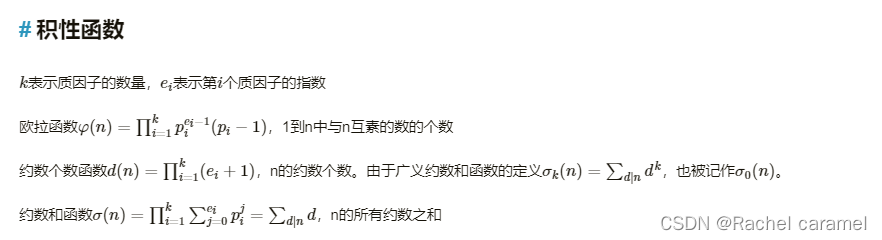

Number theory knowledge

【C language】typedef的使用:结构体、基本数据类型、数组

web课程设计

使用gdb调试多进程程序、同时调试父进程和子进程

在北京参加UI设计培训到底怎么样?

学长告诉我,大厂MySQL都是通过SSH连接的

防止数据冒用的方法

Win10调整磁盘存储空间详解

PAT1004

实现strcat函数

Notepad++安装插件

![[Essence] Analysis of the special case of C language structure: structure pointer / basic data type pointer, pointing to other structures](/img/cc/9e5067c9cedaf1a1797c0509e67f88.png)