Hand-coding a CNN in PyTorch

2022-08-10 22:41:00 【Python ml】

```python
import torch
from torch.utils.data import DataLoader  # batches and shuffles the dataset
from torchvision import transforms  # preprocessing for the raw images
from torchvision import datasets  # torchvision conveniently ships common datasets such as MNIST
import torch.nn.functional as F  # activation functions
import torch.optim as optim
import matplotlib.pyplot as plt
```

```python
batch_size = 64

# The images arrive as PIL images; ToTensor converts them into tensors the model can train on
transform = transforms.Compose([transforms.ToTensor()])

# Load the training set. torchvision provides MNIST directly; with download=True
# it is fetched automatically if it is not already present under root
train_dataset = datasets.MNIST(root='E:/data/cnn', train=True, download=True, transform=transform)
# Wrap it in a DataLoader that yields shuffled mini-batches
train_loader = DataLoader(dataset=train_dataset, shuffle=True, batch_size=batch_size)

# Load the test set the same way (no shuffling needed for evaluation)
test_dataset = datasets.MNIST(root='E:/data/cnn', train=False, download=True, transform=transform)
test_loader = DataLoader(dataset=test_dataset, shuffle=False, batch_size=batch_size)
```
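With `batch_size = 64`, each pass over the data is split into mini-batches. MNIST has 60,000 training and 10,000 test images, neither of which is a multiple of 64, so by default (`drop_last=False`) each loader ends with a smaller partial batch. A small plain-Python sketch of that arithmetic (the helper `batch_sizes` is a hypothetical name, not a PyTorch function):

```python
def batch_sizes(num_samples, batch_size, drop_last=False):
    """Return the size of every mini-batch a DataLoader-style loader would yield."""
    full, remainder = divmod(num_samples, batch_size)
    sizes = [batch_size] * full
    if remainder and not drop_last:
        sizes.append(remainder)  # the final, smaller batch
    return sizes


train_batches = batch_sizes(60000, 64)
test_batches = batch_sizes(10000, 64)
print(len(train_batches), train_batches[-1])  # 938 32
print(len(test_batches), test_batches[-1])    # 157 16
```

Since `train()` logs every 300 batches, an epoch of 938 batches prints three times: after batches 300, 600 and 900.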

```python
# Next, the model itself
class Net(torch.nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        # First convolution layer: MNIST images have 1 input channel, so the first argument is 1.
        # It produces 10 output channels; kernel_size=5 means a 5x5 kernel
        self.conv1 = torch.nn.Conv2d(1, 10, kernel_size=5)
        # Second convolution layer: 10 input channels (conv1's output), 20 output channels, 5x5 kernel
        self.conv2 = torch.nn.Conv2d(10, 20, kernel_size=5)
        # 2x2 max-pooling layer (its stride defaults to the kernel size)
        self.pooling = torch.nn.MaxPool2d(2)
        # Final linear layer for classification: 320 input features -> 10 classes
        self.fc = torch.nn.Linear(320, 10)

    # Forward computation
    def forward(self, x):
        # x has shape (n, 1, 28, 28); x.size(0) is its first dimension, i.e. the batch size n
        batch_size = x.size(0)
        # conv -> max-pool -> ReLU: (n, 1, 28, 28) -> (n, 10, 24, 24) -> (n, 10, 12, 12)
        x = F.relu(self.pooling(self.conv1(x)))
        # Same again: (n, 10, 12, 12) -> (n, 20, 8, 8) -> (n, 20, 4, 4)
        x = F.relu(self.pooling(self.conv2(x)))
        # The fully connected layer expects one vector per sample,
        # so flatten the 20x4x4 feature maps into shape (n, 320)
        x = x.view(batch_size, -1)  # flatten
        # The linear layer outputs 10 raw scores (logits), one per digit 0-9.
        # They are not probabilities; CrossEntropyLoss applies softmax internally
        x = self.fc(x)
        return x
```
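The `320` in `torch.nn.Linear(320, 10)` follows from the layer settings. For a convolution or pooling window with kernel size k, stride s and padding p, the output side length is floor((n + 2p - k) / s) + 1. The `Conv2d` layers here use stride 1 and no padding, `MaxPool2d(2)` uses stride 2, and ReLU leaves shapes unchanged. A plain-Python sketch that traces the shapes and also counts the learnable parameters (the helper names are illustrative, not PyTorch API):

```python
def out_size(n, kernel, stride=1, padding=0):
    """Side length after a conv/pool window (no dilation)."""
    return (n + 2 * padding - kernel) // stride + 1


side = 28
side = out_size(side, kernel=5)            # conv1: 28 -> 24
side = out_size(side, kernel=2, stride=2)  # pool:  24 -> 12
side = out_size(side, kernel=5)            # conv2: 12 -> 8
side = out_size(side, kernel=2, stride=2)  # pool:   8 -> 4
flat_features = 20 * side * side           # conv2 has 20 output channels
print(side, flat_features)  # 4 320


def conv_params(in_ch, out_ch, k):
    """Weights of a k x k Conv2d plus one bias per output channel."""
    return out_ch * in_ch * k * k + out_ch


def linear_params(in_f, out_f):
    """Weight matrix of a Linear layer plus its bias vector."""
    return in_f * out_f + out_f


total = conv_params(1, 10, 5) + conv_params(10, 20, 5) + linear_params(320, 10)
print(total)  # 260 + 5020 + 3210 = 8490
```

If the input size or any kernel changes, re-running this trace gives the new `in_features` for `self.fc`.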

```python
model = Net()  # instantiate the model

# Move computation to the GPU if one is available, otherwise use the CPU
device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
model.to(device)
```

```python
# Loss function: measures the gap between the model's outputs and the true labels
criterion = torch.nn.CrossEntropyLoss()
```
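`CrossEntropyLoss` takes the raw logits produced by `fc` together with integer class labels, applies log-softmax internally and, with its default `reduction='mean'`, averages the negative log-likelihood of the correct class over the batch. That is why `forward` returns logits without a softmax. A framework-free sketch of the same computation, in plain Python for illustration only:

```python
import math


def cross_entropy(logits_batch, targets):
    """Mean of -log softmax(logits)[target] over a batch."""
    losses = []
    for logits, target in zip(logits_batch, targets):
        m = max(logits)  # subtract the max for numerical stability
        log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
        losses.append(log_sum_exp - logits[target])  # equals -log p(target)
    return sum(losses) / len(losses)


# One sample with equal logits over 3 classes: every class has p = 1/3, so loss = log(3)
print(cross_entropy([[0.0, 0.0, 0.0]], [1]))
# Two samples, each with its correct class scored highest: a small loss
print(cross_entropy([[2.0, 0.5, -1.0], [0.1, 3.0, 0.2]], [0, 1]))
```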

```python
# Optimizer: training depends on it. After backpropagation it uses the gradients
# to update the weights of every layer
optimizer = optim.SGD(model.parameters(), lr=0.1, momentum=0.5)  # lr is the learning rate
```
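With `momentum=0.5`, `optim.SGD` does not step along the current gradient alone. Following the update rule in PyTorch's documentation (defaults `dampening=0`, `nesterov=False`), it keeps a velocity buffer v <- momentum * v + g and then updates p <- p - lr * v. A one-parameter, plain-Python sketch of that rule on a toy quadratic (the function `sgd_momentum` and the objective are illustrative assumptions, not part of the original code):

```python
def sgd_momentum(grad_fn, w, lr=0.1, momentum=0.5, steps=50):
    """Scalar SGD with momentum in the style of torch.optim.SGD (dampening=0, no Nesterov)."""
    velocity = 0.0
    for _ in range(steps):
        g = grad_fn(w)                      # gradient at the current weight
        velocity = momentum * velocity + g  # accumulate a running direction
        w = w - lr * velocity               # step against that direction
    return w


# Toy problem: minimise f(w) = (w - 3)^2, whose gradient is 2 * (w - 3)
w_final = sgd_momentum(lambda w: 2 * (w - 3), w=0.0)
print(w_final)  # very close to 3
```

In the real training loop, each `optimizer.step()` applies this update to every parameter tensor, using the gradients that `loss.backward()` stored.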

```python
def train(epoch):
    model.train()  # training mode (no dropout/batch-norm here, but good practice)
    running_loss = 0.0
    for batch_idx, data in enumerate(train_loader, 0):  # one mini-batch per iteration
        inputs, target = data
        inputs, target = inputs.to(device), target.to(device)
        # Clear the gradients accumulated in the previous step
        optimizer.zero_grad()
        # Forward pass
        outputs = model(inputs)
        # Compute the loss
        loss = criterion(outputs, target)
        # Backward pass: compute gradients
        loss.backward()
        # Update the weights
        optimizer.step()
        # Accumulate the loss
        running_loss += loss.item()
        # Every 300 batches, print the mean loss over those 300 batches
        if batch_idx % 300 == 299:
            print('[%d, %5d] loss: %.3f' % (epoch + 1, batch_idx + 1, running_loss / 300))
            running_loss = 0.0
```
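`running_loss` accumulates `loss.item()`, which is already the mean loss of one batch. To report the mean loss per batch over a logging window, the sum has to be divided by the window size, 300 batches here; dividing by any other constant only rescales the printed number. A plain-Python sketch of the logging logic on made-up loss values (the helper name and inputs are hypothetical):

```python
def windowed_means(batch_losses, window=300):
    """Mimic train()'s logging: return (batch number, mean loss) every `window` batches."""
    reports = []
    running_loss = 0.0
    for batch_idx, loss in enumerate(batch_losses):
        running_loss += loss
        if batch_idx % window == window - 1:
            reports.append((batch_idx + 1, running_loss / window))
            running_loss = 0.0
    return reports


# 938 fake batch losses: 1.0 for the first 300 batches, then 0.5 for the rest
fake_losses = [1.0] * 300 + [0.5] * 638
print(windowed_means(fake_losses))  # [(300, 1.0), (600, 0.5), (900, 0.5)]
```

The last 38 batches of the epoch are never reported, because `running_loss` is reset at the start of each `train()` call.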

```python
def CNN_test():
    model.eval()  # evaluation mode
    correct = 0
    total = 0
    with torch.no_grad():  # no gradients needed for evaluation
        for data in test_loader:
            inputs, target = data
            inputs, target = inputs.to(device), target.to(device)
            outputs = model(inputs)
            # The predicted class is the one with the highest score
            _, predicted = torch.max(outputs, dim=1)
            total += target.size(0)
            # Count correct predictions
            correct += (predicted == target).sum().item()
    print('Accuracy on test set: %d %% [%d/%d]' % (100 * correct / total, correct, total))
    return correct / total
```
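`torch.max(..., dim=1)` returns both the maximum values and their indices; the indices are the predicted digits, and comparing them with `target` gives the number of correct predictions. Because softmax is monotonic, the argmax of the logits is the same class as the argmax of the probabilities. The same bookkeeping in plain Python, on hypothetical logits:

```python
def accuracy(logits_batch, targets):
    """Fraction of rows whose highest-scoring class equals the target label."""
    correct = 0
    for logits, target in zip(logits_batch, targets):
        predicted = max(range(len(logits)), key=lambda i: logits[i])  # argmax
        correct += int(predicted == target)
    return correct / len(targets)


logits = [
    [0.1, 2.3, -1.0],  # predicts class 1
    [1.5, 0.2, 0.3],   # predicts class 0
    [0.0, 0.4, 0.9],   # predicts class 2
    [2.0, 2.5, 0.1],   # predicts class 1
]
labels = [1, 0, 1, 1]
print(accuracy(logits, labels))  # 0.75
```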

```python
if __name__ == '__main__':
    print("Start training")
    epoch_list = []
    acc_list = []
    for epoch in range(10):
        print("epoch:%d" % epoch)
        train(epoch)
        acc = CNN_test()
        epoch_list.append(epoch)
        acc_list.append(acc)
    # Plot test accuracy against epoch
    plt.plot(epoch_list, acc_list)
    plt.ylabel('accuracy')
    plt.xlabel('epoch')
    plt.show()
```

边栏推荐

- shell(文本打印工具awk)

- 交换机和生成树知识点

- mmpose关键点(一):评价指标(PCK,OKS,mAP)

- 测试4年感觉和1、2年时没什么不同?这和应届生有什么区别?

- virtual address space

- Distribution Network Expansion Planning: Consider Decisions Using Probabilistic Energy Production and Consumption Profiles (Matlab Code Implementation)

- String类的常用方法

- ThreadLocal comprehensive analysis (1)

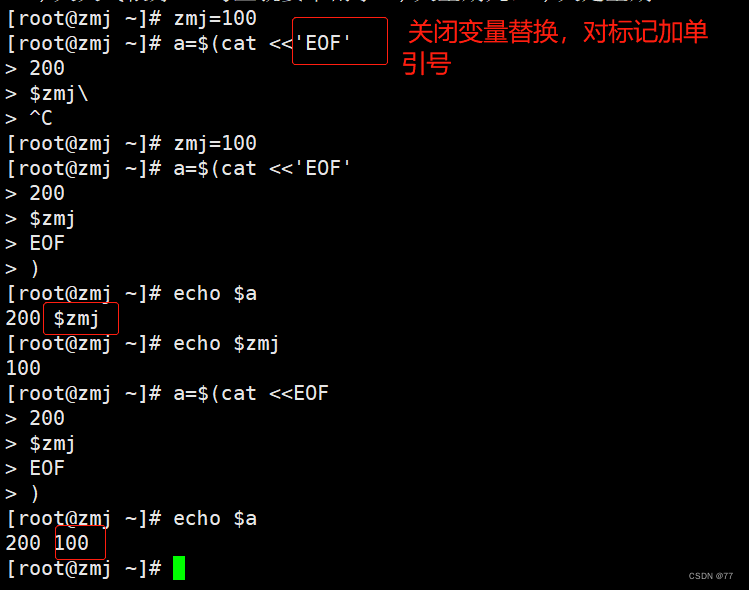

- xshell (sed command)

- QT笔记——QT工具uic,rcc,moc,qmake的使用和介绍

猜你喜欢

《DevOps围炉夜话》- Pilot - CNCF开源DevOps项目DevStream简介 - feat. PMC成员胡涛

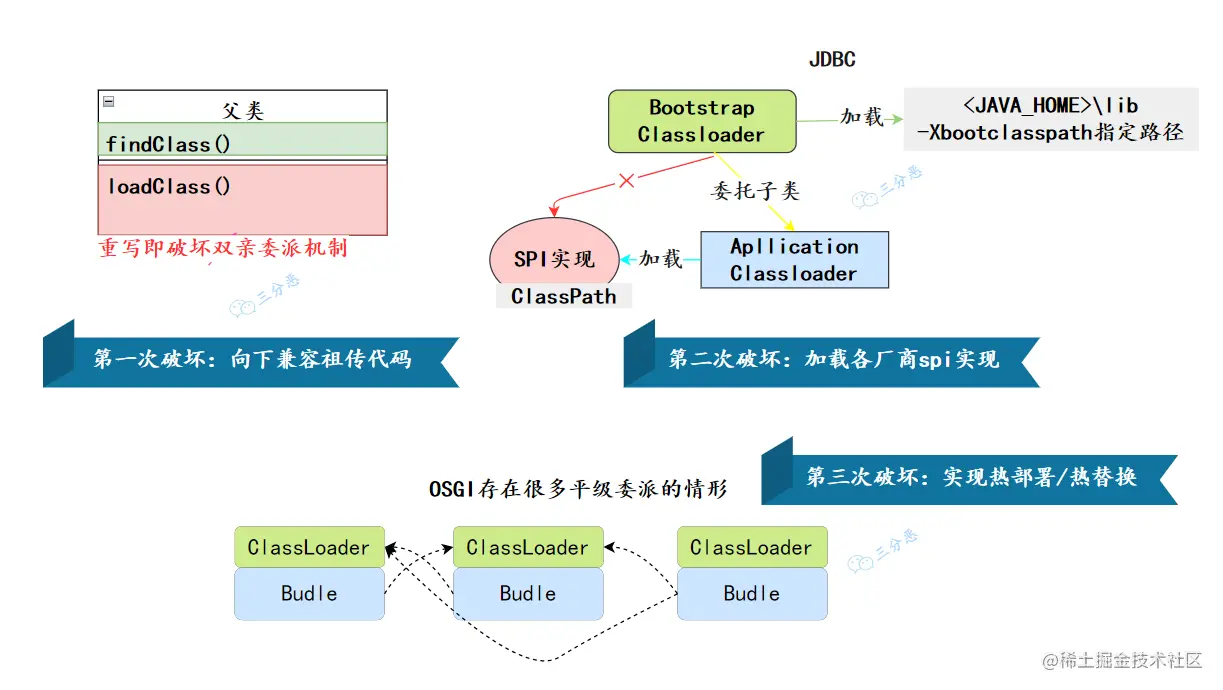

JVM classic fifty questions, now the interview is stable

shell programming without interaction

使用 Cloudreve 搭建私有云盘

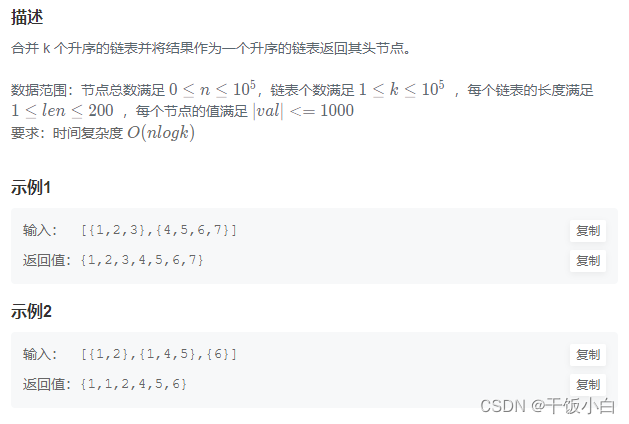

合并k个已排序的链表

Alibaba and Ant Group launched OceanBase 4.0, a distributed database, with single-machine deployment performance exceeding MySQL

How to translate financial annual report, why choose a professional translation company?

字节跳动原来这么容易就能进去...

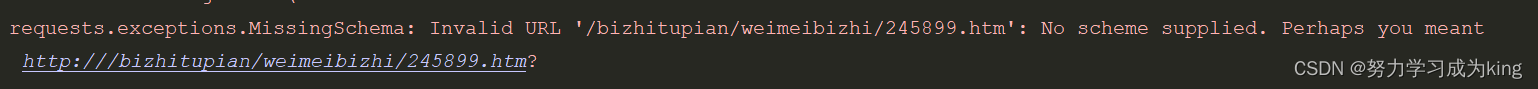

爬虫request.get()出现错误

瑞幸咖啡第二季营收33亿:门店达7195家 更换CFO

随机推荐

高通平台开发系列讲解(应用篇)QCMAP应用框架介绍

BM7 链表中环的入口结点

3598. 二叉树遍历(华中科技大学考研机试题)

An article to teach you a quick start and basic explanation of Pytest, be sure to read

Power system power flow calculation (Newton-Raphson method, Gauss-Seidel method, fast decoupling method) (Matlab code implementation)

新一代网络安全防护体系的五个关键特征

A shell script the for loop statements, while statement

OneNote 教程,如何在 OneNote 中整理笔记本?

pytorch手撕CNN

12 Recurrent Neural Network RNN2 of Deep Learning

艺术与科技的狂欢,阿那亚2022砂之盒沉浸艺术季

APP UI自动化测试常见面试题,或许有用呢~

c语言之 练习题1 大贤者福尔:魔法数,神奇的等式

自组织是管理者和成员的双向奔赴

文件IO-缓冲区

Detailed installation steps and environment configuration of geemap

FPGA - Memory Resources of 7 Series FPGA Internal Structure -03- Built-in Error Correction Function

file IO-buffer

STL-deque

Shell 编程--Sed