
PyTorch 18. torch.backends.cudnn

2022-04-23 07:29:00 【DCGJ666】


Preface

torch.backends.cudnn.benchmark = True

When this flag is set to True, cuDNN first searches for the convolution algorithm best suited to the current network, which can improve training efficiency. However, when the input size keeps changing, this setting slows training down.

Rules

If the dimensions and type of the network's input data change little (that is, every batch has the same size as the first input), setting this flag can improve efficiency.

If the network's input changes in every iteration, cuDNN has to search for the optimal configuration every time, which reduces efficiency.
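The two rules come down to caching: the expensive search only pays off if its result can be reused. The toy model below assumes, for illustration only, that the chosen algorithm is cached per input shape and that a search runs only on a cache miss. `ToyConvAutotuner`, `count_searches`, and the stand-in search are hypothetical names, not cuDNN or PyTorch internals.

```python
from typing import Callable, Dict, Iterable, Tuple

Shape = Tuple[int, ...]


class ToyConvAutotuner:
    """Toy model of benchmark-mode algorithm selection (not cuDNN's real code).

    Assumption: the best algorithm is cached per input shape, so the
    expensive search only runs when a shape has not been seen before.
    """

    def __init__(self, search: Callable[[Shape], str]) -> None:
        self._search = search              # hypothetical "benchmark every algorithm" step
        self._cache: Dict[Shape, str] = {}
        self.searches = 0                  # how many times the expensive search ran

    def algorithm_for(self, shape: Shape) -> str:
        if shape not in self._cache:       # cache miss: run the search once
            self.searches += 1
            self._cache[shape] = self._search(shape)
        return self._cache[shape]


def count_searches(shapes: Iterable[Shape]) -> int:
    # Stand-in search: pretend the best algorithm depends on the batch size.
    tuner = ToyConvAutotuner(lambda s: "gemm" if s[0] < 32 else "winograd")
    for shape in shapes:
        tuner.algorithm_for(shape)
    return tuner.searches


fixed = [(16, 3, 224, 224)] * 100                            # same size every iteration
varying = [(16, 3, 200 + i, 200 + i) for i in range(100)]    # new size every iteration
print(count_searches(fixed))    # 1
print(count_searches(varying))  # 100
```

With a fixed input size the search runs once and is amortized over all iterations; with a new size every iteration it runs every time, which is the slowdown described above.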

Background knowledge

cuDNN

cuDNN is a GPU acceleration library that NVIDIA developed specifically for deep neural networks. It includes many low-level optimizations for common operations such as convolution and pooling, and is typically much faster than general-purpose GPU code. Most mainstream deep learning frameworks support cuDNN. When running on a GPU, PyTorch uses cuDNN by default. However, even when cuDNN is in use, torch.backends.cudnn.benchmark defaults to False.

Convolution operation

The convolution layer is the most important part of a convolutional neural network and also the most computationally expensive one. If convolution can be made more efficient in low-level code, the network can train faster without any change to its architecture.

Common ways to implement convolution include:

- Direct method: multiple nested loops

- GEMM (General Matrix Multiply)

- FFT (fast Fourier transform): transform to the frequency domain, multiply, then transform back to the spatial domain

- Winograd-based algorithms

- …
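To make the first two approaches concrete, here is a minimal pure-Python sketch, assuming a single channel, a single filter, stride 1 and no padding. Like deep learning frameworks, it computes cross-correlation (the kernel is not flipped). The GEMM version uses the im2col trick: each input patch becomes one row of a matrix, so the whole convolution becomes one matrix product (a matrix-vector product here, since there is only one filter). Function names are illustrative, not library APIs.

```python
from typing import List

Matrix = List[List[float]]


def conv2d_direct(x: Matrix, k: Matrix) -> Matrix:
    """Direct method: nested loops over output positions and kernel taps."""
    kh, kw = len(k), len(k[0])
    oh, ow = len(x) - kh + 1, len(x[0]) - kw + 1
    out = [[0.0] * ow for _ in range(oh)]
    for i in range(oh):
        for j in range(ow):
            for u in range(kh):
                for v in range(kw):
                    out[i][j] += x[i + u][j + v] * k[u][v]
    return out


def conv2d_im2col_gemm(x: Matrix, k: Matrix) -> Matrix:
    """GEMM method: unfold patches into rows (im2col), then multiply."""
    kh, kw = len(k), len(k[0])
    oh, ow = len(x) - kh + 1, len(x[0]) - kw + 1
    # im2col: one row per output position, kh * kw columns per row
    cols = [
        [x[i + u][j + v] for u in range(kh) for v in range(kw)]
        for i in range(oh)
        for j in range(ow)
    ]
    flat_k = [k[u][v] for u in range(kh) for v in range(kw)]
    # The "GEMM": each output value is a dot product of a patch row with the kernel
    flat_out = [sum(a * b for a, b in zip(row, flat_k)) for row in cols]
    # Fold the flat result back into an oh x ow output map
    return [flat_out[i * ow:(i + 1) * ow] for i in range(oh)]


x = [[float(r * 4 + c) for c in range(4)] for r in range(4)]
k = [[1.0, 0.0], [0.0, -1.0]]
print(conv2d_direct(x, k))                              # every entry is -5.0
print(conv2d_im2col_gemm(x, k) == conv2d_direct(x, k))  # True
```

Both functions produce the same result; they differ only in memory layout and how well they map onto fast hardware routines, which is why libraries such as cuDNN offer several algorithms and why it can pay to benchmark them.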

torch.backends.cudnn.benchmark

In PyTorch, setting this flag lets cuDNN optimize each convolution layer up front: the first time a layer runs with a given input configuration, cuDNN tests the convolution algorithms it provides for that layer and picks the fastest one. The model therefore spends a little extra time at the start of training, which can noticeably reduce the overall training time.
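The selection step itself can be sketched as "time every candidate, keep the fastest". This is a toy illustration, not how cuDNN measures its kernels; the candidate names and the `time.sleep` calls are hypothetical stand-ins for algorithms with different speeds.

```python
import time
from typing import Callable, Dict


def pick_fastest(candidates: Dict[str, Callable[[], object]], repeats: int = 3) -> str:
    """Time each candidate and return the name of the fastest one.

    A toy version of benchmark mode for one convolution layer: run every
    available algorithm a few times and keep the one with the lowest average time.
    """
    best_name, best_time = "", float("inf")
    for name, run in candidates.items():
        start = time.perf_counter()
        for _ in range(repeats):
            run()
        elapsed = (time.perf_counter() - start) / repeats
        if elapsed < best_time:
            best_name, best_time = name, elapsed
    return best_name


# Hypothetical stand-ins for convolution algorithms with different speeds.
candidates = {
    "direct": lambda: time.sleep(0.03),
    "gemm": lambda: time.sleep(0.001),
    "fft": lambda: time.sleep(0.01),
}
print(pick_fastest(candidates))
```

Every candidate has to run at least once, so this search is the "extra preprocessing time" mentioned above; it is only worth paying when the result is reused across many iterations.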

Where to set it

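import argparse
import torch

# Hypothetical command-line flag; the original only shows args.use_gpu
parser = argparse.ArgumentParser()
parser.add_argument('--use_gpu', action='store_true')
args = parser.parse_args()

if args.use_gpu and torch.cuda.is_available():
    device = torch.device('cuda')
    # Let cuDNN benchmark convolution algorithms and reuse the fastest one
    torch.backends.cudnn.benchmark = True
else:
    device = torch.device('cpu')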

Copyright notice

This article was written by [DCGJ666]. Please include a link to the original when reposting. Thanks.

https://yzsam.com/2022/04/202204230611343704.html

边栏推荐

猜你喜欢

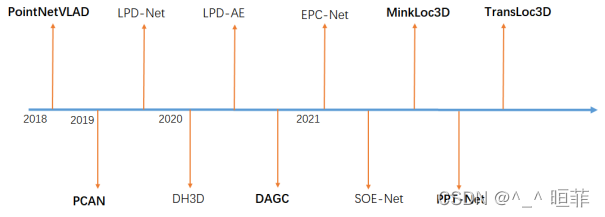

【点云系列】 场景识别类导读

![[Point Cloud Series] SG - Gan: Adversarial Self - attachment GCN for Point Cloud Topological parts Generation](/img/1d/92aa044130d8bd86b9ea6c57dc8305.png)

[Point Cloud Series] SG - Gan: Adversarial Self - attachment GCN for Point Cloud Topological parts Generation

Mysql database installation and configuration details

Systrace 解析

Detailed explanation of device tree

【点云系列】Learning Representations and Generative Models for 3D pointclouds

AUTOSAR从入门到精通100讲(五十一)-AUTOSAR网络管理

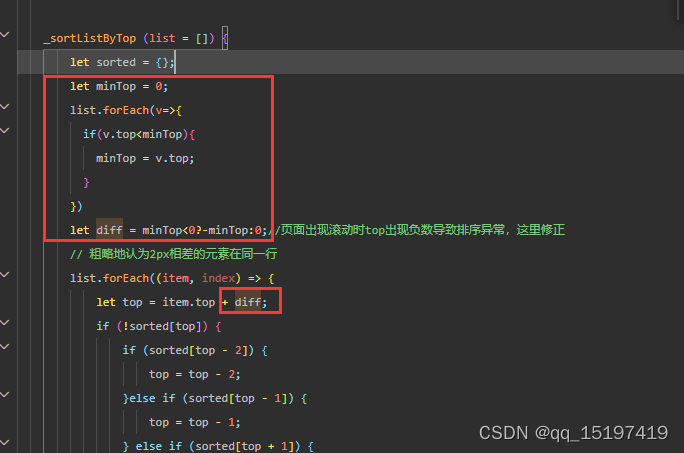

Wechat applet uses wxml2canvas plug-in to generate some problem records of pictures

GIS实用小技巧(三)-CASS怎么添加图例?

“泉”力以赴·同“州”共济|北峰人一直在行动

随机推荐

【无标题】制作一个0-99的计数器,P1.7接按键,P2接数码管段,共阳极数码管,P3.0,P3.1接数码管位码,每按一次键,数码管显示加一。请写出单片机的C51代码

swin transformer 转 onnx

. net encountered failed to decode downloaded font while loading font:

PyTorch 13. 嵌套函数和闭包(狗头)

enforce fail at inline_ container. cc:222

[point cloud series] pnp-3d: a plug and play for 3D point clouds

美摄科技云剪辑,助力哔哩哔哩使用体验再升级

By onnx checker. check_ Common errors detected by model

PyTorch 14. module类

应急医疗通讯解决方案|MESH无线自组网系统

torch.where能否传递梯度

美摄科技推出桌面端专业视频编辑解决方案——美映PC版

AUTOSAR从入门到精通100讲(五十)-AUTOSAR 内存管理系列- ECU 抽象层和 MCAL 层

直观理解 torch.nn.Unfold

[3D shape reconstruction series] implicit functions in feature space for 3D shape reconstruction and completion

Common regular expressions

【点云系列】Neural Opacity Point Cloud(NOPC)

【无标题】PID控制TT编码器电机

Use originpro express for free

SHA512/384 原理及C语言实现(附源码)