
Universal template for scikit-learn model construction

2022-04-23 13:59:00 【Daydreamer_ Fat seven seven】

Preface

I came across the article 《Introduction to machine learning | A universal template for building models with scikit-learn》 on the WeChat official account "Deep learning beginners", and it seems very suitable for beginners. In case the link stops working, its main content is recorded here. I also strongly recommend the official account!

Introduction to machine learning | A universal template for building models with scikit-learn

1. Identify the type of problem you need to solve, and know which algorithms address that type of problem.

Common problems fall into just three types: classification, regression, and clustering.

Classification: if the input data should produce a categorical variable, it is a classification problem. With two categories it is binary classification; with more than two it is multi-class classification. Examples: deciding whether an email is spam, or telling whether a picture shows a cat or a dog.

Regression: if the input data should produce a specific continuous value, it is a regression problem. Example: predicting house prices in a certain area.

Clustering: if your dataset has no corresponding labels, the task is to explore how the samples are distributed in space, for example which samples are close to each other and which are far apart.
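To make the three problem types concrete, here is a minimal sketch that fits one scikit-learn estimator of each kind on the iris data. The choice of SVC, LinearRegression and KMeans is only illustrative, and treating petal width as a regression target is an assumption made purely to have a continuous value to predict.

```python
# Sketch: one estimator per problem type, all on the iris features.
# The estimators chosen here are illustrative, not the only options.
from sklearn.datasets import load_iris
from sklearn.svm import SVC                          # classification
from sklearn.linear_model import LinearRegression    # regression
from sklearn.cluster import KMeans                   # clustering

x, y = load_iris(return_X_y=True)

# Classification: features -> discrete class label (0, 1 or 2)
clf = SVC().fit(x, y)
labels = clf.predict(x[:3])

# Regression: predict a continuous value; here petal width (column 3)
# from the other three measurements, purely for illustration
reg = LinearRegression().fit(x[:, :3], x[:, 3])
widths = reg.predict(x[:3, :3])

# Clustering: the labels y are not used; KMeans groups samples by distance
km = KMeans(n_clusters=3, n_init=10, random_state=0).fit(x)
clusters = km.labels_

print(labels, widths.round(2), clusters[:3])
```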

2. Call an algorithm to build a model (universal template 1.0)

(1) Load the dataset (here, scikit-learn's built-in iris dataset)

from sklearn.datasets import load_iris
data = load_iris()
x = data.data
y = data.target

(2) Split the dataset

from sklearn.model_selection import train_test_split
# hold out 30% of the samples as the test set (105 training / 45 test samples)
train_x, test_x, train_y, test_y = train_test_split(x, y, test_size=0.3, random_state=0)

Universal template 1.0 (helps you quickly build a basic algorithm model)

Different algorithms differ only in their names and in their model parameters.

With this universal template, switching algorithms is just a matter of copying, pasting, and changing the name.

Moreover, in scikit-learn each algorithm lives in a predictable module: for example, random forest is in the ensemble learning module, sklearn.ensemble.
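The template itself appeared as an image in the original article and did not survive. The following is a minimal sketch of the same idea, assuming the structure used in the examples below (import, instantiate, fit, predict, score with accuracy); the helper name run_template_v1 is hypothetical, and test_size=0.3 is an assumption for the split.

```python
from sklearn.datasets import load_iris
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC


def run_template_v1(model, train_x, train_y, test_x, test_y):
    """Hypothetical helper: fit `model`, then report accuracy on both sets."""
    model.fit(train_x, train_y)
    train_acc = accuracy_score(train_y, model.predict(train_x))
    test_acc = accuracy_score(test_y, model.predict(test_x))
    print('Accuracy on the training set: %.4f' % train_acc)
    print('Accuracy on the test set: %.4f' % test_acc)
    return train_acc, test_acc


x, y = load_iris(return_X_y=True)
train_x, test_x, train_y, test_y = train_test_split(x, y, test_size=0.3, random_state=0)

# Swapping algorithms only means changing the import and the class passed in
train_acc, test_acc = run_template_v1(SVC(), train_x, train_y, test_x, test_y)
```

Because the helper accepts any estimator object, trying another algorithm means changing only the import and the class passed in.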

Template 1.0 application examples

1. Build an SVM classification model

Looking it up, we find the SVM algorithm at sklearn.svm.SVC, so:

- Algorithm location: svm
- Algorithm name: SVC()
- Model name: chosen freely; here we call it svm_model

Applying the template gives the following program:

# svm classifier
from sklearn.svm import SVC
from sklearn.metrics import accuracy_score

svm_model = SVC()
svm_model.fit(train_x, train_y)

pred1 = svm_model.predict(train_x)
accuracy1 = accuracy_score(train_y, pred1)
print('Accuracy on the training set: %.4f' % accuracy1)

pred2 = svm_model.predict(test_x)
accuracy2 = accuracy_score(test_y, pred2)
print('Accuracy on the test set: %.4f' % accuracy2)

Output:

Accuracy on the training set: 0.9810
Accuracy on the test set: 0.9778

2. Build an LR (logistic regression) classification model

Similarly, we find that LR is at sklearn.linear_model.LogisticRegression, so:

- Algorithm location: linear_model
- Algorithm name: LogisticRegression()
- Model name: lr_model

Applying the template gives the following program:

# LogisticRegression classifier
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score  # accuracy is used as the evaluation metric

lr_model = LogisticRegression()
lr_model.fit(train_x, train_y)

pred1 = lr_model.predict(train_x)
accuracy1 = accuracy_score(train_y, pred1)
print('Accuracy on the training set: %.4f' % accuracy1)

pred2 = lr_model.predict(test_x)
accuracy2 = accuracy_score(test_y, pred2)
print('Accuracy on the test set: %.4f' % accuracy2)

Output:

Accuracy on the training set: 0.9429
Accuracy on the test set: 0.8889

3. Build a random forest classification model

The random forest algorithm is at sklearn.ensemble.RandomForestClassifier.
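The code for this case is not reproduced in this copy of the article. As a sketch, applying template 1.0 to RandomForestClassifier could look like the following; random_state=0 on the forest is an assumption added so that repeated runs build the same forest, and no output is shown because it depends on the scikit-learn version.

```python
# Template 1.0 applied to a random forest (sketch; default hyperparameters
# except random_state, fixed here for reproducibility)
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

x, y = load_iris(return_X_y=True)
train_x, test_x, train_y, test_y = train_test_split(x, y, test_size=0.3, random_state=0)

rf_model = RandomForestClassifier(random_state=0)
rf_model.fit(train_x, train_y)

pred1 = rf_model.predict(train_x)
accuracy1 = accuracy_score(train_y, pred1)
print('Accuracy on the training set: %.4f' % accuracy1)

pred2 = rf_model.predict(test_x)
accuracy2 = accuracy_score(test_y, pred2)
print('Accuracy on the test set: %.4f' % accuracy2)
```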

3. Improve the template (universal template 2.0)

Add cross-validation to make model evaluation more rigorous

With the 1.0 template, running the same program several times can give a different accuracy each time, fluctuating within some range. This happens whenever randomness is involved: the data are shuffled before they reach the model, so each run trains on a different selection and order of samples (fixing random_state, as in the split above, makes a run repeatable but does not remove the dependence on one particular split). So even the same program can end up with a model that performs better or worse.

Worse, in some cases we tune the parameters until the model performs best on the training set, only for it to overfit and do poorly on the test set. In that case, the score on the training set does not reflect the model's generalization performance.

To solve these two problems, we split a validation set off the training set and combine it with cross-validation. First, carve out of the training set a validation set that takes no part in training and is used only to evaluate the model after training; then do a final evaluation on the test set.
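A minimal sketch of the three-way split just described, assuming two successive calls to train_test_split; the proportions (30% test, then 20% of the remainder for validation) are arbitrary choices for illustration.

```python
from sklearn.datasets import load_iris
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC

x, y = load_iris(return_X_y=True)

# First split off the test set, which is only touched at the very end
train_full_x, test_x, train_full_y, test_y = train_test_split(
    x, y, test_size=0.3, random_state=0)
# Then carve a validation set out of the remaining training data
train_x, val_x, train_y, val_y = train_test_split(
    train_full_x, train_full_y, test_size=0.2, random_state=0)

model = SVC().fit(train_x, train_y)
val_acc = accuracy_score(val_y, model.predict(val_x))     # used to compare/tune models
test_acc = accuracy_score(test_y, model.predict(test_x))  # final, one-off evaluation
print(len(train_x), len(val_x), len(test_x))              # 84 21 45
```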

But this greatly reduces the number of samples available for learning, so cross-validation is used to train several times. In the most commonly used variant, k-fold cross-validation, the training set is divided into k smaller subsets. The model is trained on k-1 of them and validated on the remaining one; this is repeated k times so that each subset serves once as the validation set, and the final evaluation score on the training set is the average of the k scores.

On the one hand, all of the training data takes part in training; on the other hand, repeating the calculation yields a more representative score. The only drawback is the computational cost: it takes k times as much computation.

That's the theory. But, as the saying goes, ideals are rich and reality is lean: implementing it raises a big question. How do we split the training set evenly into k parts?

Don't overthink it. We are standing on the shoulders of giants: scikit-learn has packaged the even-splitting method devised by excellent mathematicians, together with programmers' know-how, into the cross_val_score() function. Just call this function; you don't need to think about the splitting algorithm or write a for loop for training.
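To show what cross_val_score saves you from writing, here is a sketch of the k-fold loop done by hand with StratifiedKFold, run on the full iris data for brevity. For a classifier and an integer cv, cross_val_score uses StratifiedKFold without shuffling by default, so the two sets of fold scores should match.

```python
import numpy as np
from sklearn.base import clone
from sklearn.datasets import load_iris
from sklearn.metrics import accuracy_score
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.svm import SVC

x, y = load_iris(return_X_y=True)
k = 5
model = SVC()

# Manual k-fold: each of the k folds serves once as the validation set
scores = []
for train_idx, val_idx in StratifiedKFold(n_splits=k).split(x, y):
    fold_model = clone(model).fit(x[train_idx], y[train_idx])  # fresh, unfitted copy
    scores.append(accuracy_score(y[val_idx], fold_model.predict(x[val_idx])))
manual_scores = np.array(scores)

# The same procedure in one call
auto_scores = cross_val_score(model, x, y, cv=k, scoring='accuracy')
print(manual_scores.mean(), auto_scores.mean())
```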

Universal template V2.0

Pass the model, the data, and the number of folds to the function in one go, and it automatically carries out the process described above.
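Like template 1.0, the V2.0 template was an image in the original article. A minimal sketch under that assumption wraps cross_val_score in a hypothetical helper, run_template_v2, which also prints the mean plus or minus two standard deviations discussed next.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import cross_val_score, train_test_split
from sklearn.svm import SVC


def run_template_v2(model, x, y, cv=5, scoring='accuracy'):
    """Hypothetical helper: k-fold cross-validation, reporting mean +/- 2*std."""
    scores = cross_val_score(model, x, y, cv=cv, scoring=scoring)
    print("%s: %0.2f (+/- %0.2f)" % (scoring, scores.mean(), scores.std() * 2))
    return scores


x, y = load_iris(return_X_y=True)
train_x, test_x, train_y, test_y = train_test_split(x, y, test_size=0.3, random_state=0)
scores1 = run_template_v2(SVC(), train_x, train_y, cv=5)
```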

When computing accuracy, we can simply print the mean accuracy:

# Print the mean accuracy
print("Accuracy on the training set: %0.2f" % scores1.mean())

But since we have run cross-validation and done all that computation, reporting only the mean is a bit wasteful. The following code also prints a rough confidence interval for the accuracy (the mean plus or minus two standard deviations):

# Print the mean accuracy and its confidence interval
print("Average accuracy on the training set: %0.2f (+/- %0.2f)" % (scores1.mean(), scores1.std() * 2))

Template 2.0 application examples:

1. Build an SVM classification model

The program is as follows:

### svm classifier
from sklearn.model_selection import cross_val_score
from sklearn.svm import SVC

svm_model = SVC()
svm_model.fit(train_x, train_y)  # optional: cross_val_score fits its own copies of the model

scores1 = cross_val_score(svm_model, train_x, train_y, cv=5, scoring='accuracy')
# Print the mean accuracy and its confidence interval
print("Average accuracy on the training set: %0.2f (+/- %0.2f)" % (scores1.mean(), scores1.std() * 2))

scores2 = cross_val_score(svm_model, test_x, test_y, cv=5, scoring='accuracy')
# Print the mean accuracy and its confidence interval
print("Average accuracy on the test set: %0.2f (+/- %0.2f)" % (scores2.mean(), scores2.std() * 2))

print(scores1)
print(scores2)

Output:

Average accuracy on the training set: 0.97 (+/- 0.08)
Average accuracy on the test set: 0.91 (+/- 0.10)
[1. 1. 1. 0.9047619 0.94736842]
[1. 0.88888889 0.88888889 0.875 0.875 ]

2. Build an LR classification model

# LogisticRegression classifier
from sklearn.model_selection import cross_val_score
from sklearn.linear_model import LogisticRegression

lr_model = LogisticRegression()
lr_model.fit(train_x, train_y)  # optional: cross_val_score fits its own copies of the model

scores1 = cross_val_score(lr_model, train_x, train_y, cv=5, scoring='accuracy')
# Print the mean accuracy and its confidence interval
print("Average accuracy on the training set: %0.2f (+/- %0.2f)" % (scores1.mean(), scores1.std() * 2))

scores2 = cross_val_score(lr_model, test_x, test_y, cv=5, scoring='accuracy')
# Print the mean accuracy and its confidence interval
print("Average accuracy on the test set: %0.2f (+/- %0.2f)" % (scores2.mean(), scores2.std() * 2))

print(scores1)
print(scores2)

Output:

Average accuracy on the training set: 0.94 (+/- 0.07)
Average accuracy on the test set: 0.84 (+/- 0.14)
[0.90909091 1. 0.95238095 0.9047619 0.94736842]
[0.90909091 0.88888889 0.88888889 0.75 0.75 ]

Note: to evaluate several metrics at once, use the cross_validate() function, which accepts multiple scoring metrics in a single call.
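A minimal sketch of cross_validate with several metrics at once; the particular metrics (accuracy, macro-averaged precision and recall) are an illustrative choice. Each metric's per-fold scores come back under the key test_<name>, alongside fit_time and score_time.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import cross_validate, train_test_split
from sklearn.svm import SVC

x, y = load_iris(return_X_y=True)
train_x, test_x, train_y, test_y = train_test_split(x, y, test_size=0.3, random_state=0)

metrics = ['accuracy', 'precision_macro', 'recall_macro']
# Several scorers in one call; "macro" averages treat the three classes equally
results = cross_validate(SVC(), train_x, train_y, cv=5, scoring=metrics)

for name in metrics:
    fold_scores = results['test_' + name]   # one value per fold
    print("%s: %0.2f (+/- %0.2f)" % (name, fold_scores.mean(), fold_scores.std() * 2))
```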

4. Tune parameters to improve the model (universal template 3.0)

Tuning parameters lets an algorithm perform at a higher level

Everything above trains models with each algorithm's default parameters, yet different datasets inevitably call for different parameters. If you can't design your own algorithm, you can at least tune its parameters: tunable parameters are the last dignity of the vast majority of algorithm engineers. Besides, if an algorithm engineer never tuned parameters, wouldn't that disgrace their famous nickname in the field, "alchemy engineer"?

scikit-learn exposes different tunable parameters for different algorithms. Covering them in detail would take several more articles, and the purpose of this one is to build a universal algorithm framework and template, so here I only introduce a general automatic tuning method. The meaning of each algorithm's parameters and the method of manual tuning will be introduced gradually, with examples, in future articles.

The first thing to know is that every scikit-learn estimator provides a get_params() method that lists the parameters you can tune. For example, to see which parameters the SVM classifier can tune:

SVC().get_params()

The output lists the SVM algorithm's tunable parameters together with their default values. The specific meaning of each parameter will be introduced in later articles.

{'C': 1.0,
 'cache_size': 200,
 'class_weight': None,
 'coef0': 0.0,
 'decision_function_shape': 'ovr',
 'degree': 3,
 'gamma': 'auto',
 'kernel': 'rbf',
 'max_iter': -1,
 'probability': False,
 'random_state': None,
 'shrinking': True,
 'tol': 0.001,
 'verbose': False}
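The dictionary above comes from an older scikit-learn release: since version 0.22 the default gamma for SVC is 'scale' rather than 'auto', and newer versions list extra parameters such as break_ties, so your output may differ. A short sketch of reading defaults with get_params() and changing them with set_params():

```python
from sklearn.svm import SVC

model = SVC()
params = model.get_params()
print(params['kernel'], params['C'])   # prints: rbf 1.0

# set_params changes hyperparameters on an existing estimator and returns it
model.set_params(kernel='linear', C=10)
print(model.get_params()['kernel'])    # prints: linear
```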

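Next, this leads to our V3.0 universal template.

Universal template 3.0

The parameters to search are given as a list of dictionaries: each dictionary maps parameter names to lists of candidate values (see params in the application example below).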
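The V3.0 template image is also missing from this copy. As a minimal sketch, assuming the same pattern as the application example below, a hypothetical helper run_template_v3 wraps GridSearchCV; the small two-dictionary grid here is chosen only to keep the run fast.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.svm import SVC


def run_template_v3(model, params, x, y, cv=5, scoring='accuracy'):
    """Hypothetical helper: exhaustive grid search with k-fold cross-validation."""
    search = GridSearchCV(model, param_grid=params, cv=cv, scoring=scoring)
    search.fit(x, y)   # tries every combination inside each dict of `params`
    return search


x, y = load_iris(return_X_y=True)
train_x, test_x, train_y, test_y = train_test_split(x, y, test_size=0.3, random_state=0)

# A list of dicts: each dict is a small grid searched on its own (2 + 4 = 6 candidates)
params = [
    {'kernel': ['linear'], 'C': [1, 10]},
    {'kernel': ['rbf'], 'C': [1, 10], 'gamma': [0.1, 0.01]},
]
best_model = run_template_v3(SVC(), params, train_x, train_y)
print(best_model.best_params_, best_model.best_score_)
```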

The program tests combinations of these parameters one by one, so you don't have to do it by hand. Writing this, I want to thank the people who wrote a machine learning package as convenient as scikit-learn. A saying suddenly came to mind: there are no peaceful years, only someone carrying the burden for you.

At this point you may wonder: why nest lists, dictionaries, and lists three levels deep? Couldn't params simply be a dictionary? The answer: it could, but it would not be a good idea.

Let's start with a small math problem. Suppose we want to tune n parameters, each with 4 candidate values. The program then has to train 4^n models. When n is 10, that is 4^10 = 1,048,576 models, a huge amount of computation for a computer. If we instead split these 10 parameters into 5 groups and tune only two parameters at a time, leaving the others at their default values, the count becomes 5 × 4^2 = 80, a dramatic reduction.
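The arithmetic can be checked with scikit-learn's ParameterGrid, which GridSearchCV uses to enumerate its candidates. In this sketch the ten parameter names p0 to p9 are hypothetical placeholders; ParameterGrid only counts combinations and does not check names against any estimator. GridSearchCV then fits each candidate once per cross-validation fold, so the real cost is these counts multiplied by k.

```python
from sklearn.model_selection import ParameterGrid

values = [1, 2, 3, 4]                      # 4 candidate values per parameter
names = ['p%d' % i for i in range(10)]     # 10 hypothetical parameter names

# One dict with all 10 parameters: every combination is tried
one_grid = ParameterGrid({name: values for name in names})

# Five dicts of two parameters each: combinations are formed only within a dict
split_grid = ParameterGrid(
    [{names[i]: values, names[i + 1]: values} for i in range(0, 10, 2)])

print(len(one_grid), len(split_grid))   # prints: 1048576 80
```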

That is what the outer list is for: each dictionary in the list is searched on its own, so only the parameters inside one dictionary are combined at a time.

After running, best_model holds the best model found, and it can be used directly for prediction.

best_model also has many useful attributes:

- best_model.cv_results_: the evaluation results for each parameter combination
- best_model.best_params_: the best parameters found
- best_model.best_score_: the mean cross-validated score of the best model

Template 3.0 application example

Implementing an SVM classifier

### 1. svm classifier
from sklearn.model_selection import GridSearchCV
from sklearn.svm import SVC

svm_model = SVC()
params = [
    {'kernel': ['linear'], 'C': [1, 10, 100, 100]},
    {'kernel': ['poly'], 'C': [1], 'degree': [2, 3]},
    {'kernel': ['rbf'], 'C': [1, 10, 100, 100], 'gamma': [1, 0.1, 0.01, 0.001]}
]
best_model = GridSearchCV(svm_model, param_grid=params, cv=5, scoring='accuracy')
best_model.fit(train_x, train_y)

1) View the best score:

best_model.best_score_

Output:

0.9714285714285714

2) View the best parameters:

best_model.best_params_

Output:

{'C': 1, 'kernel': 'linear'}

3) View all parameters of the best model:

best_model.best_estimator_

This shows the best model with all of its parameters, including those we did not tune (which keep their defaults), so you can see the model's full configuration at a glance.

4) View the cross-validation results for every parameter combination:

best_model.cv_results_

Notes: 1. Older versions used best_model.grid_scores_, which has since been removed. 2. This attribute contains a lot of data and is inconvenient to read, so it is rarely inspected directly.

In practice, if computing resources are sufficient, the third universal template is generally used. To save computing resources and get results as quickly as possible, the manual tuning method introduced later is also used.
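Returning to cv_results_ from step 4): since the raw dictionary is hard to read, one option is to print just a few of its keys, best-ranked candidate first. This sketch uses a small illustrative grid and the full iris data; rank_test_score, mean_test_score and params are standard cv_results_ keys.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import GridSearchCV
from sklearn.svm import SVC

x, y = load_iris(return_X_y=True)
params = [{'kernel': ['linear'], 'C': [1, 10]},
          {'kernel': ['rbf'], 'C': [1, 10], 'gamma': [0.1, 0.01]}]
best_model = GridSearchCV(SVC(), param_grid=params, cv=5, scoring='accuracy').fit(x, y)

results = best_model.cv_results_
# One line per candidate, sorted so that rank 1 (the best) comes first
order = results['rank_test_score'].argsort()
for i in order:
    print(results['rank_test_score'][i],
          '%.4f' % results['mean_test_score'][i],
          results['params'][i])
```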

Of course, to demonstrate the universal template, every algorithm here was run on the iris dataset. In real applications, especially when a project is short on time, choose a suitable algorithm based on your needs and the size of your data. As for how to choose, the scikit-learn team has thoughtfully drawn a chart ("Choosing the right estimator") that helps you pick a model according to the amount of data and the type of problem; it is well worth bookmarking.

source :https://zhuanlan.zhihu.com/p/88729124

Copyright notice

This article was written by [Daydreamer_ Fat seven seven]; please include a link to the original when reposting. Thank you.

https://yzsam.com/2022/04/202204231358008257.html

边栏推荐

- YARN线上动态资源调优

- 1256: bouquet for algenon

- Taobao released the baby prompt "your consumer protection deposit is insufficient, and the expiration protection has been started"

- AtCoder Beginner Contest 248C Dice Sum (生成函数)

- Chapter 15 new technologies of software engineering

- Basic knowledge learning record

- UNIX final exam summary -- for direct Department

- SQL learning | set operation

- Reading notes: meta matrix factorization for federated rating predictions

- Question bank and answer analysis of the 2022 simulated examination of the latest eight members of Jiangxi construction (quality control)

猜你喜欢

Express②(路由)

freeCodeCamp----arithmetic_ Arranger exercise

Elmo (bilstm-crf + Elmo) (conll-2003 named entity recognition NER)

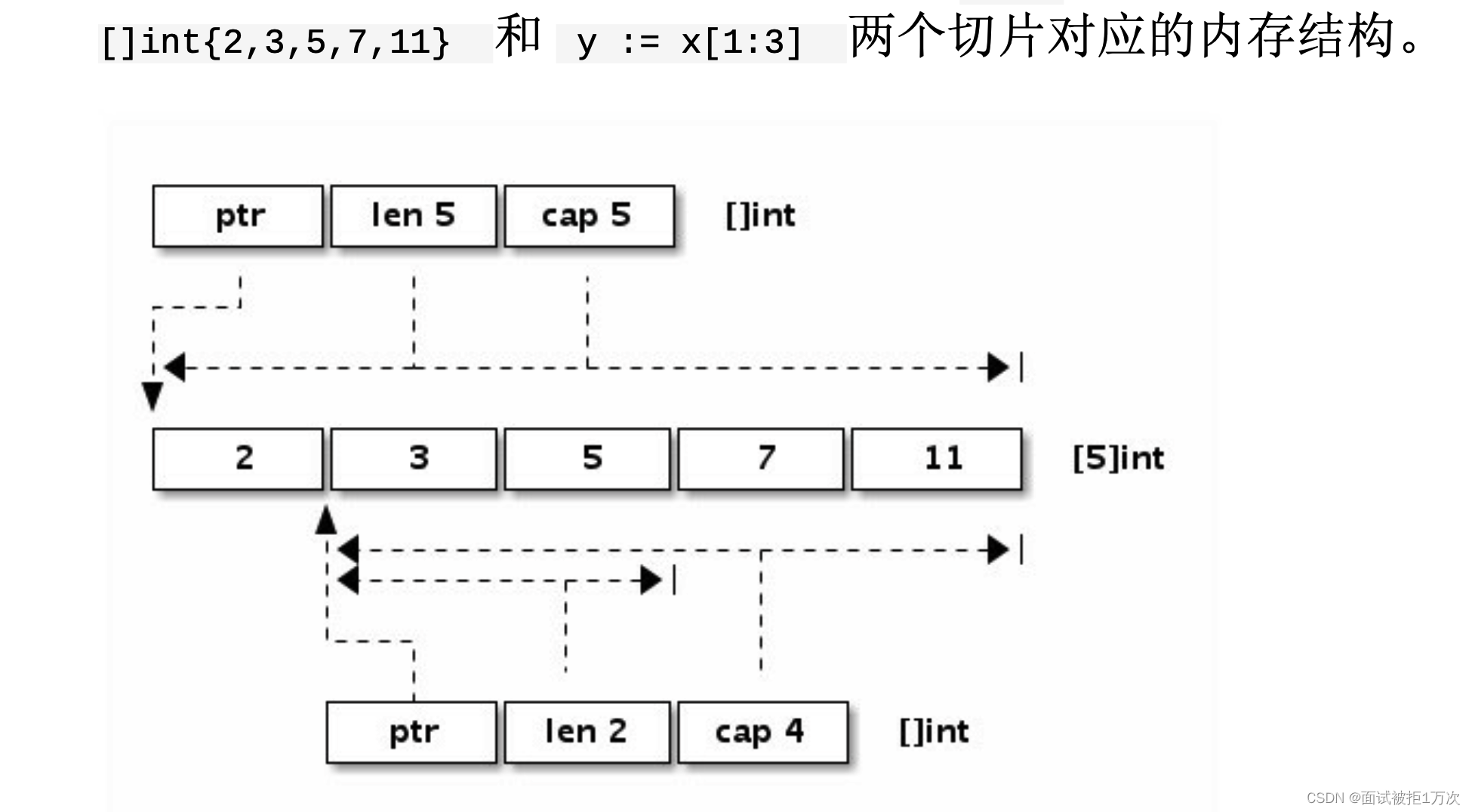

go 语言 数组,字符串,切片

编程旅行之函数

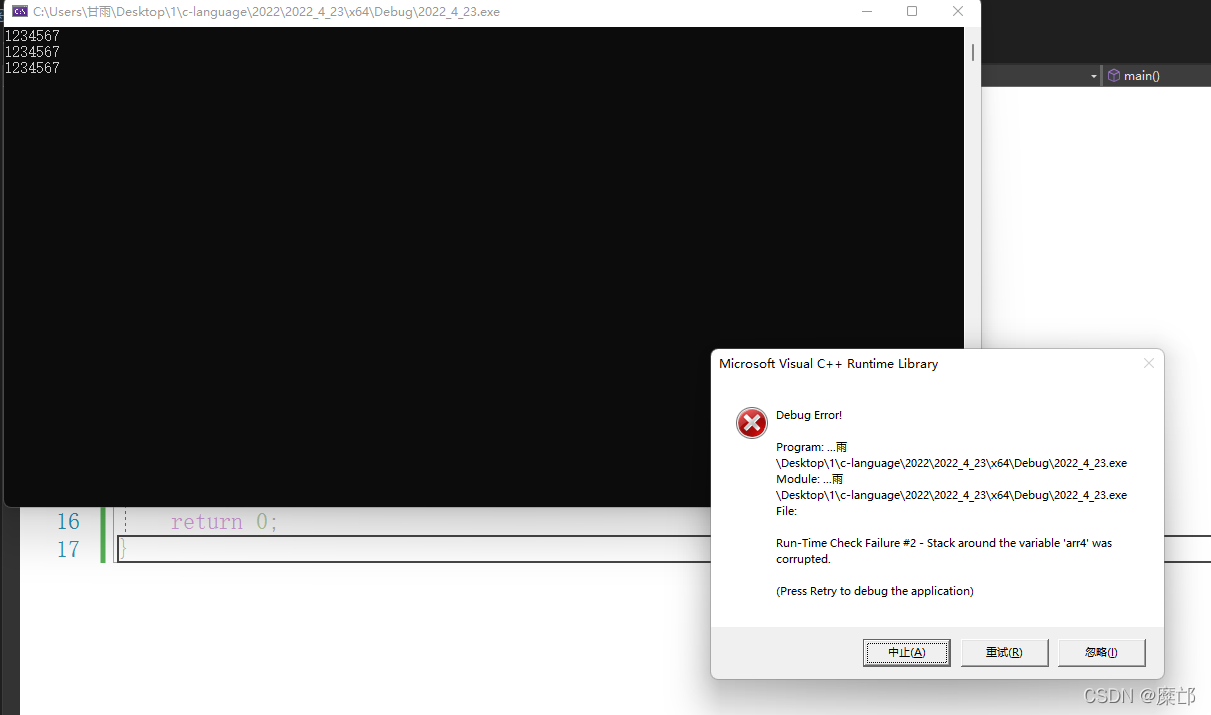

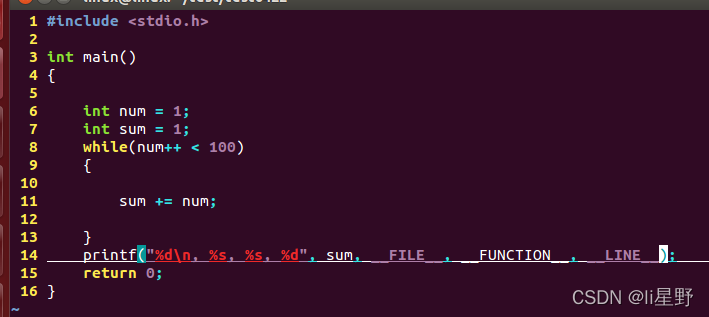

程序编译调试学习记录

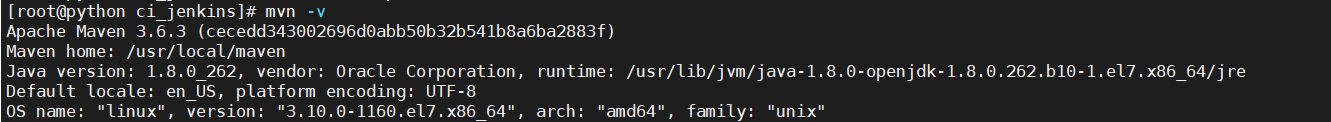

Jenkins construction and use

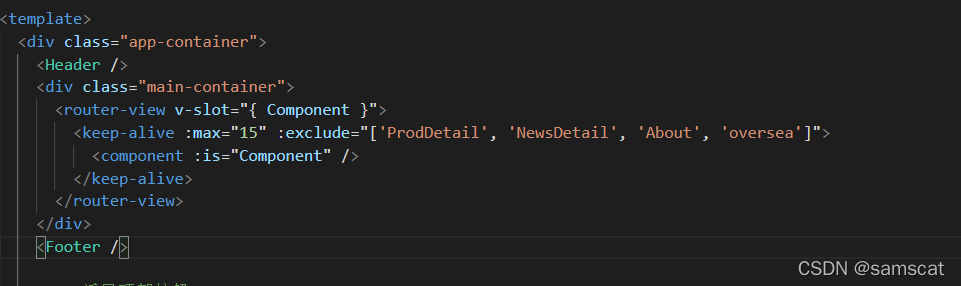

Record a strange bug: component copy after cache component jump

![Special test 05 · double integral [Li Yanfang's whole class]](/img/af/0d52a6268166812425296c3aeb8f85.png)

Special test 05 · double integral [Li Yanfang's whole class]

SSM project deployed in Alibaba cloud

随机推荐

UNIX final exam summary -- for direct Department

Android interview theme collection

2021年秋招,薪资排行NO

蓝绿发布、滚动发布、灰度发布,有什么区别?

[code analysis (7)] communication efficient learning of deep networks from decentralized data

Atcoder beginer contest 248c dice sum (generating function)

Question bank and answer analysis of the 2022 simulated examination of the latest eight members of Jiangxi construction (quality control)

Technologie zéro copie

MySQL 修改主数据库

How does redis solve the problems of cache avalanche, cache breakdown and cache penetration

Go语言 RPC通讯

Jiannanchun understood the word game

Building MySQL environment under Ubuntu & getting to know SQL

leetcode--977. Squares of a Sorted Array

Using Baidu Intelligent Cloud face detection interface to achieve photo quality detection

Special test 05 · double integral [Li Yanfang's whole class]

服务器中挖矿病毒了,屮

专题测试05·二重积分【李艳芳全程班】

L2-024 部落 (25 分)

crontab定时任务输出产生大量邮件耗尽文件系统inode问题处理