Fully Adaptive Bayesian Algorithm for Data Analysis

FABADA

FABADA is a novel non-parametric noise reduction technique based on Bayesian inference. It iteratively evaluates possible smoothed models of the data to obtain an estimate of the underlying signal that is statistically compatible with the noisy measurements. Iterations stop based on the evidence $E$ and the $\chi^2$ statistic of the last smooth model, and we compute the expected value of the signal as a weighted average of the smooth models. You can find the entire paper describing the new method in (link will be available soon).
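To make the two ingredients named above more concrete, here is a minimal sketch of a $\chi^2$ statistic per data point and of a weighted average of candidate models. It is an illustration only, not the code used inside FABADA: the function names `reduced_chi2` and `weighted_mean_model` are hypothetical, and using one scalar weight per model is a simplifying assumption.

```python
import numpy as np

def reduced_chi2(data, model, variance):
    """Chi-square per data point between noisy data and a smooth model.

    Values close to 1 mean the residuals are as large as the noise
    variance predicts, i.e. the model is compatible with the data.
    """
    data = np.asarray(data, dtype=float)
    model = np.asarray(model, dtype=float)
    return float(np.mean((data - model) ** 2 / variance))

def weighted_mean_model(models, weights):
    """Weighted average of candidate models stacked along the first axis.

    Assumption for this sketch: one scalar weight per model.
    """
    models = np.asarray(models, dtype=float)
    weights = np.asarray(weights, dtype=float)
    return np.tensordot(weights, models, axes=(0, 0)) / weights.sum()

# Toy data: a sine wave plus Gaussian noise of known standard deviation.
rng = np.random.default_rng(0)
x = np.linspace(0.0, 2.0 * np.pi, 500)
truth = np.sin(x)
sigma = 0.3
data = truth + rng.normal(0.0, sigma, x.shape)

# The true signal should give a chi-square per point close to 1.
chi2_truth = reduced_chi2(data, truth, sigma**2)
```

A model that is smoothed too much drives this statistic well above 1, while a model that follows the noise drives it towards 0.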


About The Method

This automatic method is focused on astronomical data, such as images (2D) or spectra (1D). However, this doesn't mean it cannot be treated as a general noise reduction algorithm: it can be used on any kind of one- or two-dimensional data and produces reliable results. The only requirement for the input data is an estimate of its variance.
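When no variance estimate comes with the data, one has to be obtained separately. The sketch below shows one common heuristic, the median absolute deviation of first differences, which assumes white Gaussian noise and a signal that varies slowly from one sample to the next. It is not part of FABADA, and the name `estimate_noise_variance` is hypothetical.

```python
import numpy as np

def estimate_noise_variance(z):
    """Rough noise variance from the MAD of first differences.

    Assumes uncorrelated Gaussian noise and a signal that changes
    little between neighbouring samples along the last axis.
    """
    z = np.asarray(z, dtype=float)
    d = np.diff(z, axis=-1).ravel()
    mad = np.median(np.abs(d - np.median(d)))
    # 1.4826 converts a MAD into a Gaussian standard deviation;
    # differencing two samples doubles the variance, hence the sqrt(2).
    sigma = 1.4826 * mad / np.sqrt(2.0)
    return float(sigma**2)

# Toy check: smooth signal plus noise with standard deviation 10.
rng = np.random.default_rng(1)
smooth = 100.0 * np.sin(np.linspace(0.0, 2.0 * np.pi, 5000))
noisy = smooth + rng.normal(0.0, 10.0, smooth.shape)
variance_estimate = estimate_noise_variance(noisy)
```

The estimate degrades when the signal has sharp features or the noise is correlated, so a variance provided by the instrument or the data reduction pipeline is preferable when available.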

Getting Started

We try to make FABADA as simple to use as possible. To that end, we have created a PyPI package to install the latest version of FABADA, and a Conda package is in progress.

Prerequisites

The first requirement is a version of Python greater than 3.5. Although pip installs the prerequisites automatically, FABADA has two dependencies.

Installation

To install fabada, we can use the Python Package Index (PyPI) or Conda.

Using pip

pip install fabada

We are currently working on uploading the package to Conda.
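A quick way to confirm that the installation worked is to ask Python for the installed version. This is a small sketch using the standard library module `importlib.metadata`, which requires Python 3.8 or later; the helper name `installed_version` is hypothetical.

```python
from importlib import metadata

def installed_version(package="fabada"):
    """Return the installed version string, or None if it is not installed."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None

print(installed_version())
```

If this prints `None`, the package is not visible to the Python interpreter you are running, which usually means pip installed it into a different environment.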

Usage

Two examples are provided along with the package.

- fabada_demo_image.py

Here we show how to use fabada on an astronomical greyscale image (two-dimensional). First of all, we have to import the previously installed library and some dependencies.

from fabada import fabada

import numpy as np

from PIL import Image

Then we read the bubble image borrowed from the Hubble Space Telescope gallery, using the Pillow library. We also add random Gaussian white noise using numpy.random.

# IMPORTING IMAGE

y = np.array(Image.open("bubble.png").convert('L'))

# ADDING RANDOM GAUSSIAN NOISE

np.random.seed(12431)

sig = 15 # Standard deviation of noise

noise = np.random.normal(0, sig, y.shape)

z = y + noise

variance = sig**2

Once the noisy image is generated, we can apply fabada to estimate the underlying image. We only have to call fabada and give it the variance of the noisy image.

y_recover = fabada(z, variance)

And it's done.

As easy as one line of code.

The results obtained running this example would be:

The left, middle and right panels correspond to the true signal, the noisy measurements and the fabada estimate, respectively. The Peak Signal-to-Noise Ratio (PSNR) in dB and the Structural Similarity Index Measure (SSIM) are shown at the bottom of the middle and right panels (PSNR/SSIM).
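For readers who want to reproduce the PSNR figure, here is a minimal sketch of the standard definition, $10 \log_{10}(R^2 / \mathrm{MSE})$ in dB. The helper `psnr` is hypothetical, and the default data range of 255 is an assumption chosen to match 8-bit greyscale data; the scripts shipped with the package may compute it differently.

```python
import numpy as np

def psnr(reference, estimate, data_range=255.0):
    """Peak Signal-to-Noise Ratio in dB between a reference and an estimate."""
    reference = np.asarray(reference, dtype=float)
    estimate = np.asarray(estimate, dtype=float)
    mse = np.mean((reference - estimate) ** 2)
    if mse == 0:
        return float("inf")  # identical arrays
    return float(10.0 * np.log10(data_range**2 / mse))

# With the variables of the example above:
# psnr(y, z)          -> PSNR of the noisy measurements
# psnr(y, y_recover)  -> PSNR of the fabada estimate
```

A higher value for the estimate than for the noisy measurements indicates that the noise was reduced relative to the true signal.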

- fabada_demo_spectra.py

Here we show how to use fabada on an astronomical spectrum (one-dimensional). It is basically the same as the example above, since fabada works the same way for one- and two-dimensional data. First of all, we have to import the previously installed library and some dependencies.

from fabada import fabada

import pandas as pd

import numpy as np

Then we read the spectrum of the interacting galaxy pair Arp 256, taken from the ASTROLIB PYSYNPHOT package and stored in arp256.csv. Again, we add random Gaussian white noise.

# IMPORTING SPECTRUM

y = np.array(pd.read_csv('arp256.csv').flux)

y = (y/y.max())*255 # Normalize to 255

# ADDING RANDOM GAUSSIAN NOISE

np.random.seed(12431)

sig = 10 # Standard deviation of noise

noise = np.random.normal(0, sig, y.shape)

z = y + noise

variance = sig**2

Once the noisy spectrum is generated, we can again apply fabada to estimate the underlying spectrum. We only have to call fabada and give it the variance of the noisy spectrum.

y_recover = fabada(z, variance)

And done again.

This is exactly the same as for two-dimensional data.
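If arp256.csv is not at hand, the same steps can be tried on synthetic data. The sketch below builds a made-up spectrum (a sloped continuum with two Gaussian emission lines, invented purely for illustration), normalizes it to 255 and adds noise as in the example above. The function `make_noisy_spectrum` and all of its parameters are hypothetical.

```python
import numpy as np

def make_noisy_spectrum(n=1000, sig=10.0, seed=12431):
    """Return (true spectrum, noisy spectrum, noise variance)."""
    rng = np.random.default_rng(seed)
    x = np.linspace(0.0, 1.0, n)
    continuum = 100.0 + 50.0 * x
    line_a = 120.0 * np.exp(-0.5 * ((x - 0.3) / 0.01) ** 2)
    line_b = 80.0 * np.exp(-0.5 * ((x - 0.7) / 0.02) ** 2)
    y = continuum + line_a + line_b
    y = (y / y.max()) * 255  # Normalize to 255, as in the demo
    z = y + rng.normal(0.0, sig, y.shape)
    return y, z, sig**2

y, z, variance = make_noisy_spectrum()

# With fabada installed, the call is the same as before:
# from fabada import fabada
# y_recover = fabada(z, variance)
```

Because the true spectrum `y` is known here, the estimate can be compared against it directly.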

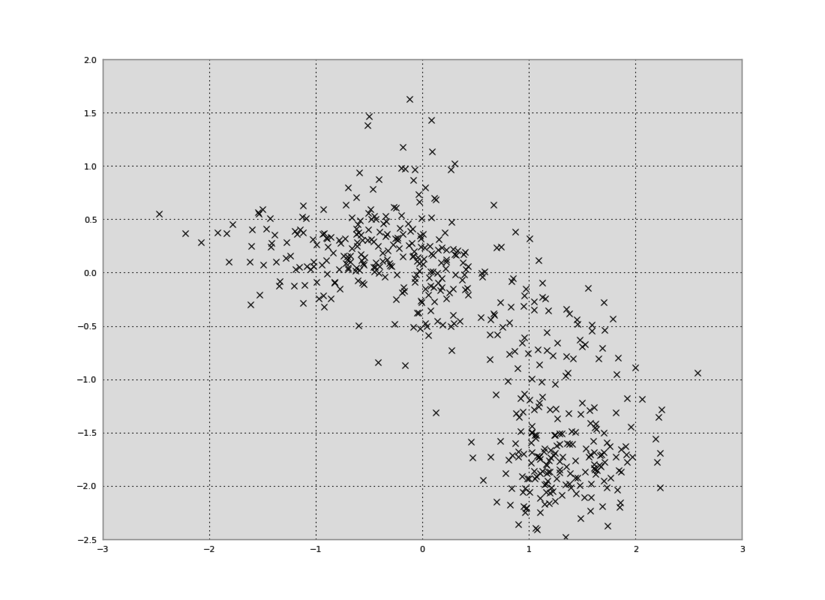

The results obtained running this example would be:

The red, grey and black lines represent the true signal, the noisy measurements and the fabada estimate, respectively. The Peak Signal-to-Noise Ratio (PSNR) in dB and the Structural Similarity Index Measure (SSIM) are shown in the legend of the figure (PSNR/SSIM).

Results

All the results from the paper can be found in the results folder, along with a Jupyter notebook that lets you explore them through an interactive interface. You can run the notebook in Google Colab through the "Explore the results" link.

Contributing

Contributions are what make the open source community such an amazing place to learn, inspire, and create. Any contributions you make are greatly appreciated.

If you have a suggestion that would make this better, please fork the repo and create a pull request. You can also simply open an issue with the tag "enhancement". Don't forget to give the project a star! Thanks again!

- Fork the Project

- Create your Feature Branch (git checkout -b feature/AmazingFeature)

- Commit your Changes (git commit -m 'Add some AmazingFeature')

- Push to the Branch (git push origin feature/AmazingFeature)

- Open a Pull Request

License

Distributed under the GNU General Public License. See LICENSE.txt for more information.

Contact

Pablo M Sánchez Alarcón - [email protected]

Yago Ascasibar Sequeiros - [email protected]

Project Link: https://github.com/PabloMSanAla/fabada

Cite

Thank you for using FABADA.

Citations and acknowledgements are vital for the continued work on this kind of algorithm.

Please cite the following record if you used FABADA in any of your publications.

@ARTICLE{2022arXiv220105145S,
       author = {{Sanchez-Alarcon}, Pablo M and {Ascasibar Sequeiros}, Yago},
        title = "{Fully Adaptive Bayesian Algorithm for Data Analysis, FABADA}",
      journal = {arXiv e-prints},
     keywords = {Astrophysics - Instrumentation and Methods for Astrophysics, Astrophysics - Astrophysics of Galaxies, Astrophysics - Solar and Stellar Astrophysics, Computer Science - Computer Vision and Pattern Recognition, Physics - Data Analysis, Statistics and Probability},
         year = 2022,
        month = jan,
          eid = {arXiv:2201.05145},
        pages = {arXiv:2201.05145},
archivePrefix = {arXiv},
       eprint = {2201.05145},
 primaryClass = {astro-ph.IM},
       adsurl = {https://ui.adsabs.harvard.edu/abs/2022arXiv220105145S}
}

Sanchez-Alarcon, P. M. and Ascasibar Sequeiros, Y., “Fully Adaptive Bayesian Algorithm for Data Analysis, FABADA”, arXiv e-prints, 2022.

https://arxiv.org/abs/2201.05145

Readme file taken from Best README Template.