Integrating Atlas 2.1.0 with a CM-managed CDH 6.3.2 cluster

2022-04-23 14:03:00 【A hundred nights】

Integrating Atlas 2.1.0 with a CDH 6.3.2 Cluster Managed by CM

The big data platform needs data governance, and Apache Atlas is used for it. Download the Atlas 2.1.0 source package from https://www.apache.org/dyn/closer.cgi/atlas/2.1.0/apache-atlas-2.1.0-sources.tar.gz to a Windows machine.

Prerequisite: the CDH cluster is already set up, with component services including HDFS, Hive, HBase, Solr, Kafka, Sqoop, ZooKeeper, Impala, YARN, Spark, Oozie, Phoenix, Hue, and others.

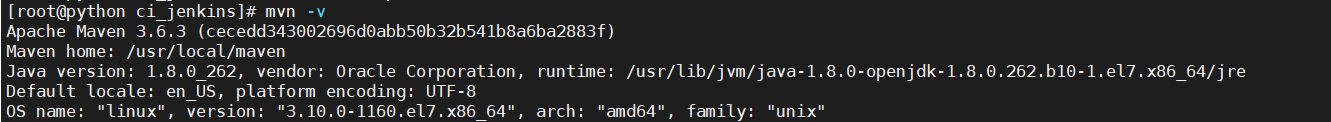

The Windows environment needs JDK (1.8.0_151 or later) and Maven (3.5.0 or later). Ideally these versions match the JDK and Maven versions on the Linux hosts.
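A quick way to confirm the minimum Maven version before building is a small shell check. The sketch below is only an illustration: the function name version_ge is made up for this example, and it assumes a Unix-like shell (for example Git Bash on Windows) with GNU sort, whose -V option sorts version strings.

```shell
#!/usr/bin/env bash
# version_ge A B
# Returns 0 when version A is greater than or equal to version B.
# Assumes GNU sort is available (-V performs a version-aware sort).
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Example (commented out so the snippet has no side effects):
# mvn_version=$(mvn -v | awk '/^Apache Maven/ {print $3}')
# version_ge "$mvn_version" 3.5.0 || echo "Maven is older than 3.5.0"
```

The comparison works by sorting the two versions and checking that the required minimum comes first.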

Modify the source code and compile

Unpack apache-atlas-2.1.0-sources.tar.gz to get the apache-atlas-sources-2.1.0 directory, open the project in IntelliJ IDEA, and edit the pom.xml file in the project root.

In the <properties> tag, change the component versions to the ones used by CDH.

<lucene-solr.version>7.4.0-cdh6.3.2</lucene-solr.version>

<hadoop.version>3.0.0-cdh6.3.2</hadoop.version>

<hbase.version>2.1.0-cdh6.3.2</hbase.version>

<solr.version>7.4.0-cdh6.3.2</solr.version>

<hive.version>2.1.1-cdh6.3.2</hive.version>

<kafka.version>2.2.1-cdh6.3.2</kafka.version>

<sqoop.version>1.4.7-cdh6.3.2</sqoop.version>

<zookeeper.version>3.4.5-cdh6.3.2</zookeeper.version>
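If you prefer not to edit pom.xml by hand, the same version changes can be scripted. The following is a minimal sketch, assuming a Unix-like shell with sed and that each property opens and closes on a single line; the helper names set_pom_property and apply_cdh_versions are hypothetical. The version values are the ones listed above.

```shell
#!/usr/bin/env bash
# set_pom_property FILE NAME VALUE
# Rewrites <NAME>old</NAME> to <NAME>VALUE</NAME> wherever the element
# opens and closes on the same line. The temporary .bak file is removed.
set_pom_property() {
  local file="$1" name="$2" value="$3"
  sed -i.bak "s|<${name}>[^<]*</${name}>|<${name}>${value}</${name}>|" "$file" \
    && rm -f "$file.bak"
}

# apply_cdh_versions POM
# Keeps a copy of the original file as POM.orig, then applies the
# CDH 6.3.2 versions from this article.
apply_cdh_versions() {
  local pom="$1" name value
  cp "$pom" "$pom.orig"
  while read -r name value; do
    set_pom_property "$pom" "$name" "$value"
  done <<'EOF'
lucene-solr.version 7.4.0-cdh6.3.2
hadoop.version 3.0.0-cdh6.3.2
hbase.version 2.1.0-cdh6.3.2
solr.version 7.4.0-cdh6.3.2
hive.version 2.1.1-cdh6.3.2
kafka.version 2.2.1-cdh6.3.2
sqoop.version 1.4.7-cdh6.3.2
zookeeper.version 3.4.5-cdh6.3.2
EOF
}

# Example (commented out):
# apply_cdh_versions apache-atlas-sources-2.1.0/pom.xml
```

Comparing pom.xml with pom.xml.orig afterwards (for example with diff) is a reasonable way to confirm that only the intended properties changed.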

Add the following repository inside the <repositories> tag, then save the file (:wq if you are editing in vim).

<repository>
    <id>cloudera</id>
    <url>https://repository.cloudera.com/artifactory/cloudera-repos</url>
    <releases>
        <enabled>true</enabled>
    </releases>
    <snapshots>
        <enabled>false</enabled>
    </snapshots>
</repository>

To stay compatible with Hive 2.1.1, the Hive 3.1 code that Atlas 2.1.0 uses by default must be modified. The module is located at /opt/apache-atlas-sources-2.1.0/addons/hive-bridge.

(1) Edit ./src/main/java/org/apache/atlas/hive/bridge/HiveMetaStoreBridge.java

// Original code at line 577:

String catalogName = hiveDB.getCatalogName() != null ? hiveDB.getCatalogName().toLowerCase() : null;

// Change it to:

String catalogName = null;

(2) Edit ./src/main/java/org/apache/atlas/hive/hook/AtlasHiveHookContext.java

// Original code at line 81:

this.metastoreHandler = (listenerEvent != null) ? metastoreEvent.getIHMSHandler() : null;

// Change it to:

this.metastoreHandler = null;
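The two edits above can also be applied from a shell, which helps when the build is repeated on another machine. This is a sketch under assumptions: the function name patch_hive_bridge is hypothetical, and it matches the original expressions by text rather than by line number, so it only works if the source lines read exactly as quoted above.

```shell
#!/usr/bin/env bash
# patch_hive_bridge HIVE_BRIDGE_DIR
# Replaces the two Hive 3 specific expressions with null, as described above.
# A .bak copy of each file is kept next to the patched file.
patch_hive_bridge() {
  local base="$1/src/main/java/org/apache/atlas/hive"
  sed -i.bak \
    's|hiveDB\.getCatalogName() != null ? hiveDB\.getCatalogName()\.toLowerCase() : null|null|' \
    "$base/bridge/HiveMetaStoreBridge.java"
  sed -i.bak \
    's|(listenerEvent != null) ? metastoreEvent\.getIHMSHandler() : null|null|' \
    "$base/hook/AtlasHiveHookContext.java"
}

# Example (commented out):
# patch_hive_bridge /opt/apache-atlas-sources-2.1.0/addons/hive-bridge
```

sed does not report an error when a pattern is not found, so it is worth checking both files afterwards, for example with grep for "catalogName = null" and "metastoreHandler = null".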

After making these changes, wait for Maven to finish downloading all dependencies.

Open a Terminal window and compile from the command line.

mvn clean -DskipTests package -Pdist -Drat.skip=true

Wait for the build to finish. If errors are printed in red during the build, the following message is the core of the problem.

Failure to find org.apache.lucene:lucene-core:jar:7.4.0-cdh6.3.2 in https://maven.aliyun.com/repository/public was cached in the local repository, resolution will not be reattempted until the update interval of aliyunmaven has elapsed or updates are forced

or

Could not find artifact org.apache.lucene:lucene-parent:pom:7.4.0-cdh6.3.2 in aliyunmaven (https://maven.aliyun.com/repository/public) ->[Help 1]

Go to the corresponding folder in the local Maven repository. For example, my local path is D:\apache-maven-3.6.1\repository\org\apache\lucene\lucene-core\7.4.0-cdh6.3.2. Keep only the .jar and .pom files in it and delete everything else, such as the .repositories, .jar.lastUpdated, .jar.sha1, .pom.lastUpdated, and .pom.sha1 files, then recompile. If the error persists, find the missing file under https://repository.cloudera.com/artifactory/cloudera-repos/, put it into the local repository, and recompile.
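The cleanup described above can be done in one step from a shell. The sketch below assumes a Unix-like shell (for example Git Bash on Windows) and a find that supports -delete; the function name clean_maven_markers is made up for this example. As the error message itself hints, running Maven with -U to force an update check is another option.

```shell
#!/usr/bin/env bash
# clean_maven_markers DIR
# Deletes Maven's bookkeeping files (*.lastUpdated, *.sha1, *.repositories)
# under DIR and leaves the .jar and .pom files in place.
clean_maven_markers() {
  find "$1" -type f \
    \( -name '*.lastUpdated' -o -name '*.sha1' -o -name '*.repositories' \) \
    -delete
}

# Example (commented out; the path depends on your local repository):
# clean_maven_markers /d/apache-maven-3.6.1/repository/org/apache/lucene
```

Pointing the function at a parent folder such as org/apache/lucene cleans all affected artifacts at once instead of one version folder at a time.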

After the build finishes, the file apache-atlas-2.1.0-bin.tar.gz appears under distro/target. Copy this file to /data/software/atlas on the CM server node and extract it.

tar -zxvf apache-atlas-2.1.0-bin.tar.gz

Modify the Atlas configuration files

The conf directory under the Atlas installation directory contains atlas-application.properties, atlas-log4j.xml, and atlas-env.sh.

cd apache-atlas-2.1.0/conf

-------------------------------------

-rw-r--r-- 1 root root 12411 Mar 24 15:00 atlas-application.properties
-rw-r--r-- 1 root root  3281 Mar 24 15:13 atlas-env.sh
-rw-r--r-- 1 root root  5733 Mar 24 15:03 atlas-log4j.xml
-rw-r--r-- 1 root root  2543 May 25  2021 atlas-simple-authz-policy.json
-rw-r--r-- 1 root root 31403 May 25  2021 cassandra.yml.template
drwxr-xr-x 2 root root    18 Mar 24 15:15 hbase
drwxr-xr-x 3 root root   140 May 25  2021 solr
-rw-r--r-- 1 root root   207 May 25  2021 users-credentials.properties
drwxr-xr-x 2 root root    54 May 25  2021 zookeeper

Changes to atlas-application.properties:

# HBase settings

atlas.graph.storage.hostname=hadoop01:2181,hadoop02:2181,hadoop03:2181

atlas.graph.storage.hbase.regions-per-server=1

atlas.graph.storage.lock.wait-time=10000

# Solr settings

atlas.graph.index.search.solr.zookeeper-url=192.168.1.185:2181/solr,192.168.1.186:2181/solr,192.168.1.188:2181/solr

# false means an external Kafka is used

atlas.notification.embedded=false

atlas.kafka.zookeeper.connect=192.168.1.185:2181,192.168.1.186:2181,192.168.1.188:2181

atlas.kafka.bootstrap.servers=192.168.1.185:9092,192.168.1.186:9092,192.168.1.188:9092

atlas.kafka.zookeeper.session.timeout.ms=60000

atlas.kafka.zookeeper.connection.timeout.ms=60000

# Other settings

# The default port is 21000, which conflicts with Impala. The Impala port could be changed in CM instead, but because Impala is already installed, the Atlas port is changed here.

atlas.server.http.port=21021

atlas.rest.address=http://hadoop01:21021

# If set to true, the setup steps run when the server starts

atlas.server.run.setup.on.start=false

# ZooKeeper quorum of the HBase cluster

atlas.audit.hbase.zookeeper.quorum=hadoop01:2181,hadoop02:2181,hadoop03:2181

# Add the Hive hook settings

######### Hive Hook Configs #######

atlas.hook.hive.synchronous=false

atlas.hook.hive.numRetries=3

atlas.hook.hive.queueSize=10000

atlas.cluster.name=primary
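Because the same properties file is later copied to other locations, it can help to apply these settings with a script instead of by hand. Here is a minimal sketch; set_prop is a hypothetical helper, and it assumes that keys and values contain no "|" or "&" characters (true for the settings above).

```shell
#!/usr/bin/env bash
# set_prop FILE KEY VALUE
# Replaces an existing "KEY=..." line (commented-out lines are left alone),
# or appends "KEY=VALUE" when the key is not set yet.
set_prop() {
  local file="$1" key="$2" value="$3"
  if grep -q "^${key}=" "$file"; then
    sed -i.bak "s|^${key}=.*|${key}=${value}|" "$file" && rm -f "$file.bak"
  else
    printf '%s=%s\n' "$key" "$value" >> "$file"
  fi
}

# Example (commented out), using values from this article:
# conf=/data/software/atlas/apache-atlas-2.1.0/conf/atlas-application.properties
# set_prop "$conf" atlas.server.http.port 21021
# set_prop "$conf" atlas.rest.address http://hadoop01:21021
# set_prop "$conf" atlas.notification.embedded false
```

Running the helper twice with the same arguments leaves a single line per key, so the script can be re-run safely.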

Changes to atlas-log4j.xml:

Uncomment the following block (lines 79-95):

<!-- Uncomment the following for perf logs -->

<!--
<appender name="perf_appender" class="org.apache.log4j.DailyRollingFileAppender">
    <param name="file" value="${atlas.log.dir}/atlas_perf.log" />
    <param name="datePattern" value="'.'yyyy-MM-dd" />
    <param name="append" value="true" />
    <layout class="org.apache.log4j.PatternLayout">
        <param name="ConversionPattern" value="%d|%t|%m%n" />
    </layout>
</appender>

<logger name="org.apache.atlas.perf" additivity="false">
    <level value="debug" />
    <appender-ref ref="perf_appender" />
</logger>
-->

Changes to atlas-env.sh: add export HBASE_CONF_DIR=/etc/hbase/conf

#!/usr/bin/env bash

#

# Licensed to the Apache Software Foundation (ASF) under one

# or more contributor license agreements. See the NOTICE file

# distributed with this work for additional information

# regarding copyright ownership. The ASF licenses this file

# to you under the Apache License, Version 2.0 (the

# "License"); you may not use this file except in compliance

# with the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

#

# The java implementation to use. If JAVA_HOME is not found we expect java and jar to be in path

#export JAVA_HOME=

# any additional java opts you want to set. This will apply to both client and server operations

#export ATLAS_OPTS=

# any additional java opts that you want to set for client only

#export ATLAS_CLIENT_OPTS=

# java heap size we want to set for the client. Default is 1024MB

#export ATLAS_CLIENT_HEAP=

# any additional opts you want to set for atlas service.

#export ATLAS_SERVER_OPTS=

# indicative values for large number of metadata entities (equal or more than 10,000s)

#export ATLAS_SERVER_OPTS="-server -XX:SoftRefLRUPolicyMSPerMB=0 -XX:+CMSClassUnloadingEnabled -XX:+UseConcMarkSweepGC -XX:+CMSParallelRemarkEnabled -XX:+PrintTenuringDistribution -XX:+HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=dumps/atlas_server.hprof -Xloggc:logs/gc-worker.log -verbose:gc -XX:+UseGCLogFileRotation -XX:NumberOfGCLogFiles=10 -XX:GCLogFileSize=1m -XX:+PrintGCDetails -XX:+PrintHeapAtGC -XX:+PrintGCTimeStamps"

# java heap size we want to set for the atlas server. Default is 1024MB

#export ATLAS_SERVER_HEAP=

# indicative values for large number of metadata entities (equal or more than 10,000s) for JDK 8

#export ATLAS_SERVER_HEAP="-Xms15360m -Xmx15360m -XX:MaxNewSize=5120m -XX:MetaspaceSize=100M -XX:MaxMetaspaceSize=512m"

# What is is considered as atlas home dir. Default is the base locaion of the installed software

#export ATLAS_HOME_DIR=

# Where log files are stored. Defatult is logs directory under the base install location

#export ATLAS_LOG_DIR=

# Where pid files are stored. Defatult is logs directory under the base install location

#export ATLAS_PID_DIR=

# where the atlas titan db data is stored. Defatult is logs/data directory under the base install location

#export ATLAS_DATA_DIR=

# Where do you want to expand the war file. By Default it is in /server/webapp dir under the base install dir.

#export ATLAS_EXPANDED_WEBAPP_DIR=

# Path to the HBase configuration files

export HBASE_CONF_DIR=/etc/hbase/conf

# indicates whether or not a local instance of HBase should be started for Atlas

# Use the external HBase, not the HBase bundled with Atlas

export MANAGE_LOCAL_HBASE=false

# indicates whether or not a local instance of Solr should be started for Atlas

# Use the external Solr, not the Solr bundled with Atlas

export MANAGE_LOCAL_SOLR=false

# indicates whether or not cassandra is the embedded backend for Atlas

# Do not use the Cassandra embedded in Atlas

export MANAGE_EMBEDDED_CASSANDRA=false

# indicates whether or not a local instance of Elasticsearch should be started for Atlas

# Do not use the Elasticsearch bundled with Atlas

export MANAGE_LOCAL_ELASTICSEARCH=false

Component service integration

Integrate with CDH HBase

Link the HBase configuration files into the conf/hbase directory of Atlas.

ln -s /etc/hbase/conf /data/software/atlas/apache-atlas-2.1.0/conf/hbase/

Integrate with CDH Solr

Copy the atlas/conf/solr folder into the Solr directory on every node where Solr is installed, and rename it to atlas-solr.

cp -r atlas/conf/solr /opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib/solr

# Run on all Solr nodes

cd /opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib/solr

# Run on all Solr nodes

mv solr atlas-solr

# Run on all Solr nodes: change the login shell of the solr user

vi /etc/passwd

Change /sbin/nologin to /bin/bash

useradd atlas && echo atlas | passwd --stdin atlas

chown -R atlas:atlas /usr/local/src/solr/

# Create the collections on a Solr node

# Switch to the solr user

su solr

/opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib/solr/bin/solr create -c vertex_index -d /opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib/solr/atlas-solr -shards 3 -replicationFactor 1

/opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib/solr/bin/solr create -c edge_index -d /opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib/solr/atlas-solr -shards 3 -replicationFactor 1

/opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib/solr/bin/solr create -c fulltext_index -d /opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib/solr/atlas-solr -shards 3 -replicationFactor 1

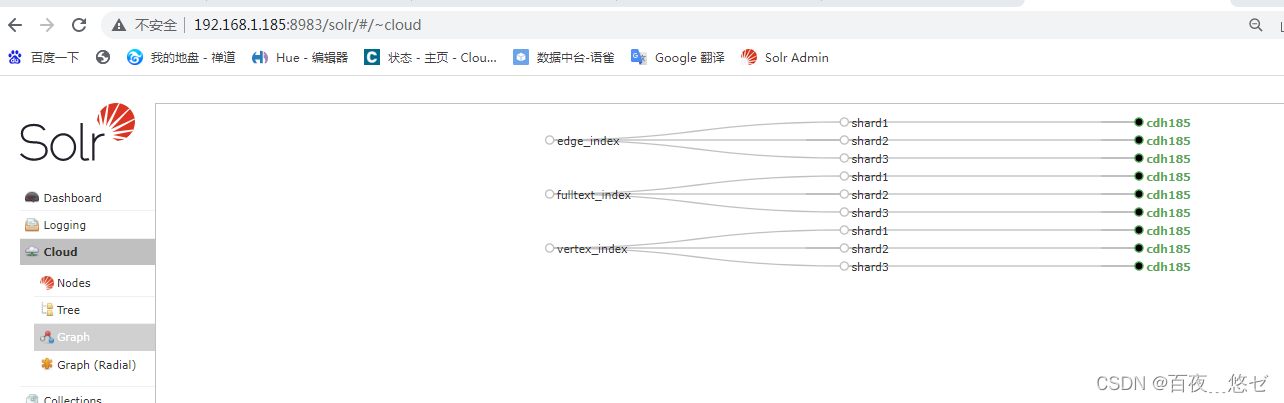

Open the Solr web UI at http://cdh001:8983 and verify that the collections were created successfully.
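The three solr create commands differ only in the collection name, so they can be written as a loop. This is a sketch, not a replacement for the commands above: the variable SOLR_DIR and the function create_atlas_collections are names invented for this example, and the optional first argument is a hypothetical dry-run hook (pass echo to print the commands instead of running them).

```shell
#!/usr/bin/env bash
# Parcel path taken from this article; adjust it to your CDH parcel directory.
SOLR_DIR=/opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib/solr

# create_atlas_collections [RUNNER]
# Runs "solr create" for the three Atlas indexes with 3 shards and
# replication factor 1. With RUNNER=echo the commands are only printed.
create_atlas_collections() {
  local runner="${1:-}" name
  for name in vertex_index edge_index fulltext_index; do
    $runner "$SOLR_DIR/bin/solr" create -c "$name" \
      -d "$SOLR_DIR/atlas-solr" -shards 3 -replicationFactor 1 || return 1
  done
}

# Example (commented out): preview first, then run as the solr user.
# create_atlas_collections echo
# create_atlas_collections
```

The loop stops at the first failing command, which makes it easier to see which collection could not be created.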

Integrate with CDH Kafka

# Create the topics

kafka-topics --zookeeper cdh185:2181,cdh186:2181,cdh188.com:2181 --create --replication-factor 3 --partitions 3 --topic _HOATLASOK

kafka-topics --zookeeper cdh185:2181,cdh186:2181,cdh188.com:2181 --create --replication-factor 3 --partitions 3 --topic ATLAS_ENTITIES

kafka-topics --zookeeper cdh185:2181,cdh186:2181,cdh188.com:2181 --create --replication-factor 3 --partitions 3 --topic ATLAS_HOOK

# List the topics

kafka-topics --zookeeper cdh185:2181 --list
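Instead of reading the topic list by eye, the output of the list command can be checked against the topics Atlas needs. The sketch below assumes the list command prints one topic name per line; missing_topics is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# missing_topics TOPIC...
# Reads a topic list (one name per line) on stdin and prints every expected
# TOPIC that is not in it. Returns 0 when nothing is missing, 1 otherwise.
missing_topics() {
  local listing topic status=0
  listing=$(cat)
  for topic in "$@"; do
    if ! printf '%s\n' "$listing" | grep -qxF -- "$topic"; then
      printf '%s\n' "$topic"
      status=1
    fi
  done
  return "$status"
}

# Example (commented out):
# kafka-topics --zookeeper cdh185:2181 --list | missing_topics ATLAS_HOOK ATLAS_ENTITIES
```

The names are compared as whole lines, so a topic such as ATLAS_HOOK_TEST would not be mistaken for ATLAS_HOOK.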

Add Atlas to the system environment variables

vim /etc/profile

#---------------- atlas ---------------------------

export ATLAS_HOME=/data/software/atlas/apache-atlas-2.1.0

export PATH=$PATH:$ATLAS_HOME/bin

Start Atlas

# Start command

atlas_start.py

starting atlas on host localhost

starting atlas on port 21021

...................

Apache Atlas Server started!!!

# Check that the port is listening

netstat -nultap | grep 21021

tcp 0 0 0.0.0.0:21021 0.0.0.0:* LISTEN

tcp 0 0 192.168.1.185:21021 172.16.10.11:51805 TIME_WAIT

tcp 0 0 192.168.1.185:21021 172.16.10.11:51806 TIME_WAIT

tcp 0 0 192.168.1.185:21021 172.16.10.11:51809 TIME_WAIT

tcp 0 0 192.168.1.185:21021 172.16.10.11:51804 TIME_WAIT

tcp 0 0 192.168.1.185:21021 172.16.10.11:51808 TIME_WAIT

tcp 0 0 192.168.1.185:21021 172.16.10.11:51807 TIME_WAIT

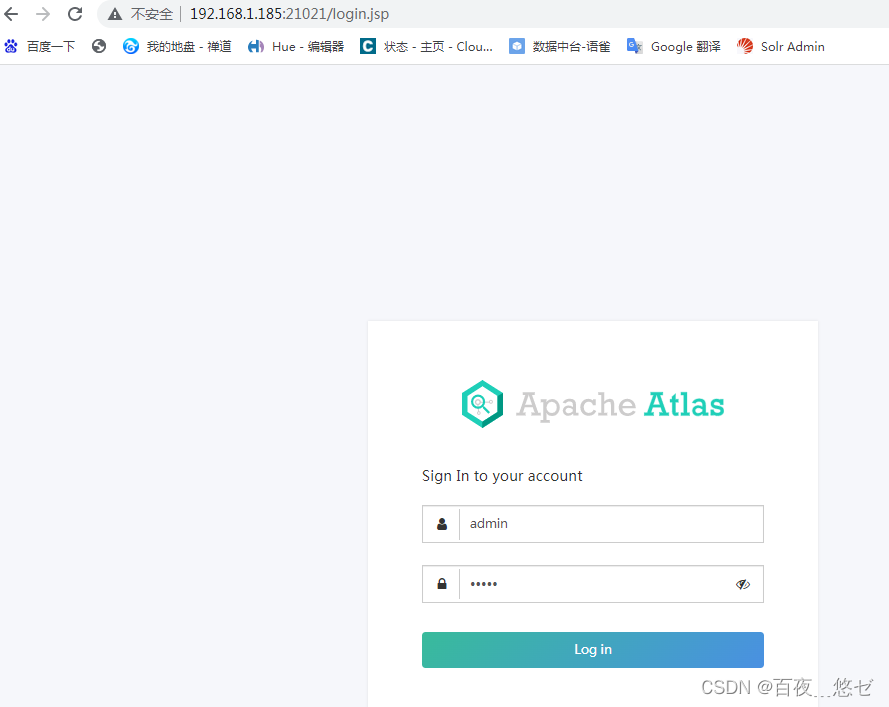

# Open the web UI at http://hadoop01:21021 and log in; the default username/password is admin/admin

# Stop command

atlas_stop.py

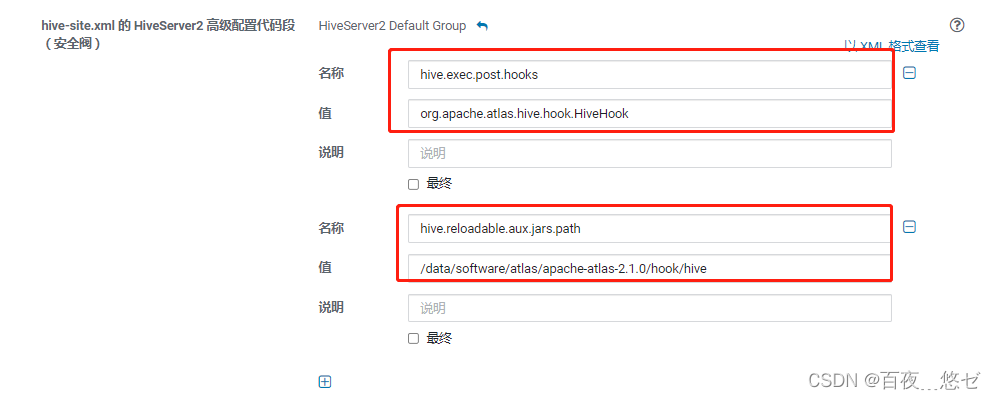
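After atlas_start.py returns, the web service may still need some time before it answers requests. A small retry loop can wait for it; the sketch below is an illustration only. wait_until is a hypothetical helper, and the commented example assumes curl is installed and polls the Atlas admin version endpoint with the default admin/admin account on the host and port used in this article.

```shell
#!/usr/bin/env bash
# wait_until RETRIES DELAY_SECONDS COMMAND [ARG...]
# Runs COMMAND until it succeeds, sleeping DELAY_SECONDS between attempts.
# Returns 0 on the first success, 1 if all RETRIES attempts fail.
wait_until() {
  local retries="$1" delay="$2" attempt=1
  shift 2
  while [ "$attempt" -le "$retries" ]; do
    if "$@"; then
      return 0
    fi
    attempt=$((attempt + 1))
    sleep "$delay"
  done
  return 1
}

# Example (commented out): try up to 30 times, 10 seconds apart.
# wait_until 30 10 curl -sf -u admin:admin \
#   http://hadoop01:21021/api/atlas/admin/version
```

Keeping the probe as a plain command argument means the same loop can wait on netstat, curl, or any other check.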

Integrate with CDH Hive

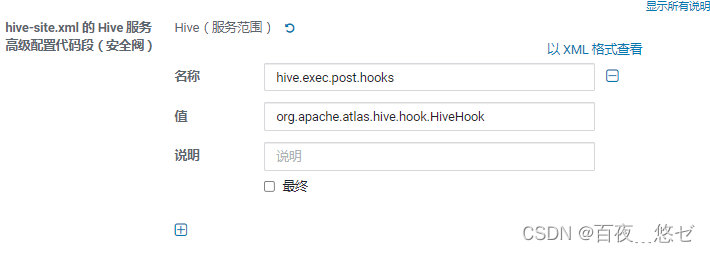

In the CM web UI, edit the Hive configuration file hive-site.xml.

(1) Edit [Hive Service Advanced Configuration Snippet (Safety Valve) for hive-site.xml]

Name: hive.exec.post.hooks

Value: org.apache.atlas.hive.hook.HiveHook

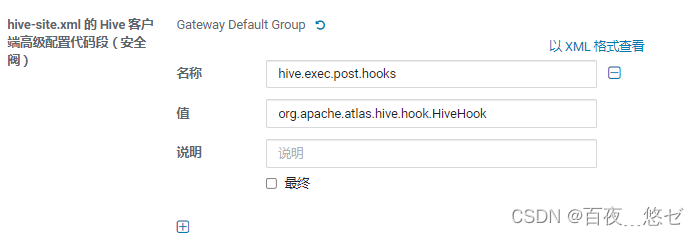

(2) Edit [Hive Client Advanced Configuration Snippet (Safety Valve) for hive-site.xml]

Name: hive.exec.post.hooks

Value: org.apache.atlas.hive.hook.HiveHook

(3) Edit [HiveServer2 Advanced Configuration Snippet (Safety Valve) for hive-site.xml]

Name: hive.exec.post.hooks

Value: org.apache.atlas.hive.hook.HiveHook

Name: hive.reloadable.aux.jars.path

Value: /data/software/atlas/apache-atlas-2.1.0/hook/hive

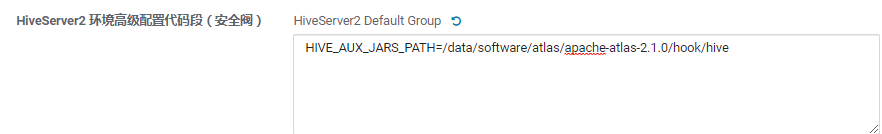

(4) Edit [HiveServer2 Environment Advanced Configuration Snippet (Safety Valve)]

HIVE_AUX_JARS_PATH=/data/software/atlas/apache-atlas-2.1.0/hook/hive

(5) Copy the atlas-application.properties configuration file to the /etc/hive/conf directory

cp /data/software/atlas/apache-atlas-2.1.0/conf/atlas-application.properties /etc/hive/conf

(6) Copy atlas-application.properties to the atlas/hook/hive directory and add it to atlas-plugin-classloader-2.1.0.jar

# Copy the file

cp /data/software/atlas/apache-atlas-2.1.0/conf/atlas-application.properties /data/software/atlas/apache-atlas-2.1.0/hook/hive

# Enter the directory

cd /data/software/atlas/apache-atlas-2.1.0/hook/hive

# Add the configuration file to atlas-plugin-classloader-2.1.0.jar

zip -u atlas-plugin-classloader-2.1.0.jar atlas-application.properties

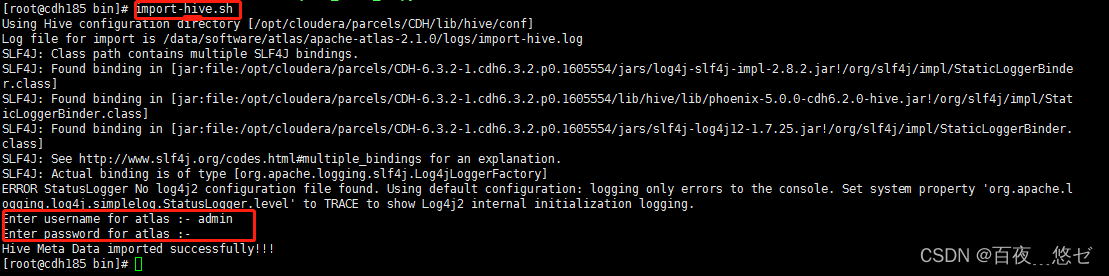

(7) Import the Hive metadata into Atlas.

import-hive.sh

# Username/password: admin/admin

# The message "Hive Meta Data imported successfully!!!" means the Hive metadata was imported.

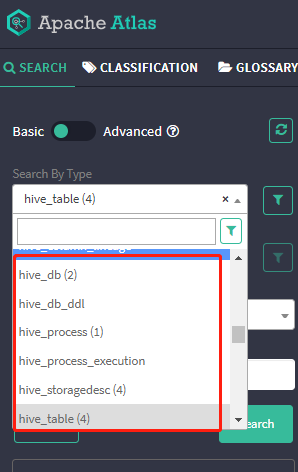

# In the Atlas web UI, search for hive_db; a count shown after it confirms the import.

This completes the integration of Atlas with the CDH cluster.

Copyright notice

This article was written by [A hundred nights]. Please include the original link when reposting. Thank you.

https://yzsam.com/2022/04/202204231401520731.html

边栏推荐

- mysql通过binlog文件恢复数据

- Android篇:2019初中级Android开发社招面试解答(中

- Express middleware ③ (custom Middleware)

- Special test 05 · double integral [Li Yanfang's whole class]

- Decentralized Collaborative Learning Framework for Next POI Recommendation

- 微信小程序调用客服接口

- 1256:献给阿尔吉侬的花束

- New关键字的学习和总结

- Restful WebService和gSoap WebService的本质区别

- Strange bug of cnpm

猜你喜欢

1256:献给阿尔吉侬的花束

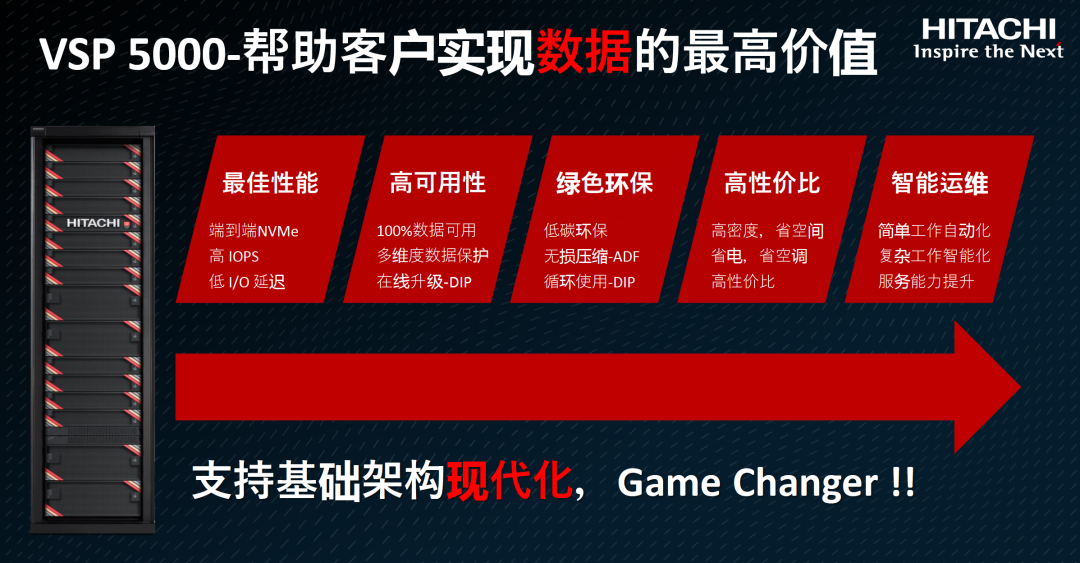

33 million IOPs, 39 microsecond delay, carbon footprint certification, who is serious?

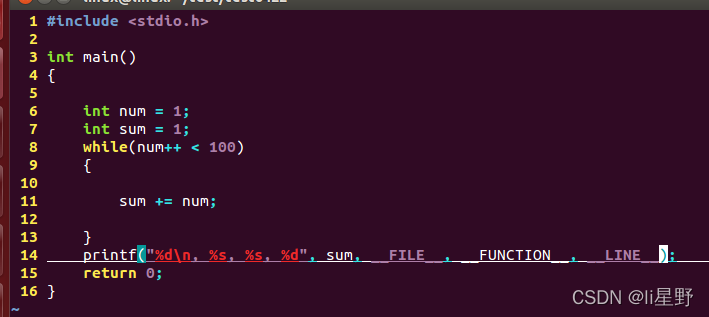

Program compilation and debugging learning record

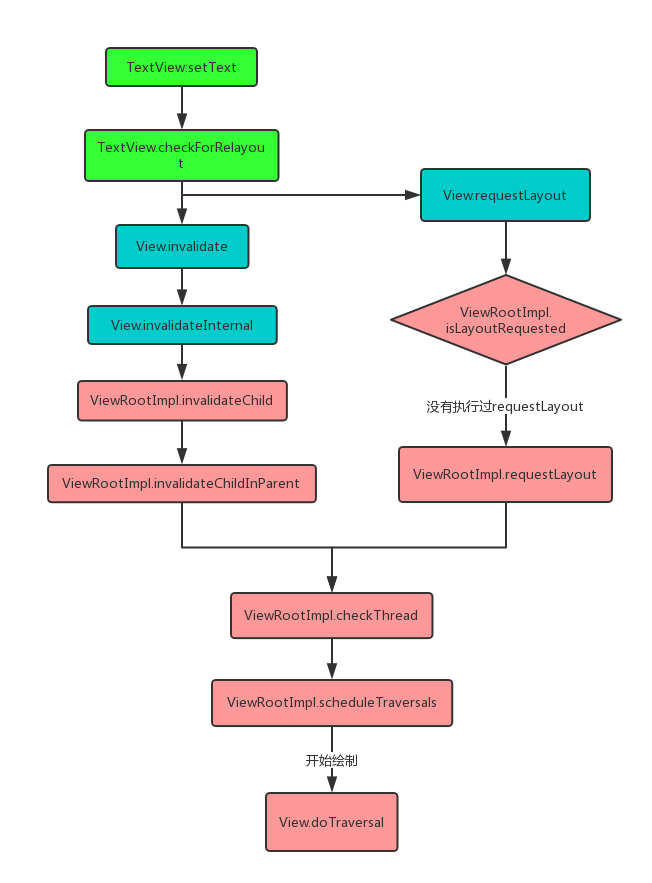

Choreographer full resolution

Business case | how to promote the activity of sports and health app users? It is enough to do these points well

Jenkins construction and use

The art of automation

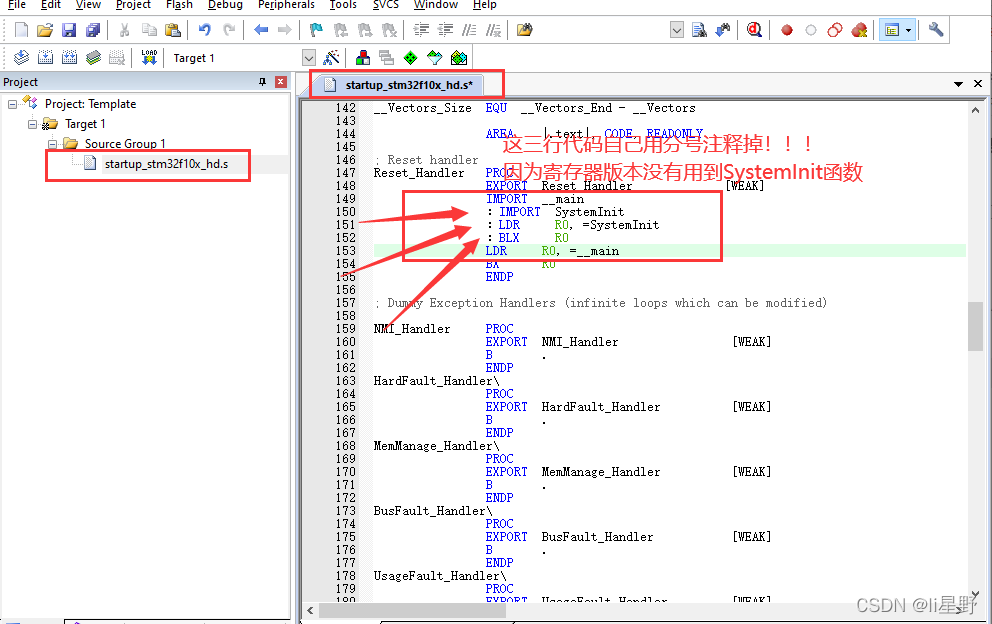

STM32学习记录0007——新建工程(基于寄存器版)

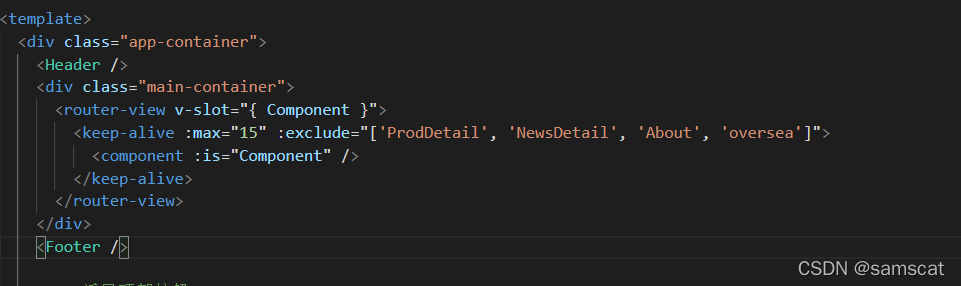

Record a strange bug: component copy after cache component jump

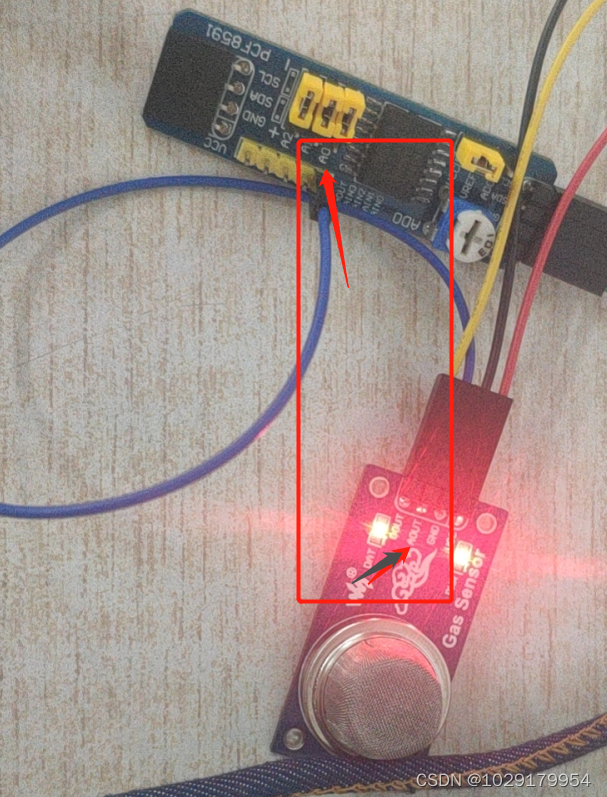

烟雾传感器(mq-2)使用详细教程(基于树莓派3b+实现)

随机推荐

Yarn online dynamic resource tuning

基于CM管理的CDH6.3.2集群集成Atlas2.1.0

Expression「Func「TSource, object」」 转Expression「Func「TSource, object」」[]

基于Ocelot的gRpc网关

centOS下mysql主从配置

1256:献给阿尔吉侬的花束

Nodejs安装及环境配置

SPC简介

Quartus Prime硬件实验开发(DE2-115板)实验一CPU指令运算器设计

1256: bouquet for algenon

nodejs通过require读取本地json文件出现Unexpected token / in JSON at position

Un modèle universel pour la construction d'un modèle d'apprentissage scikit

Decentralized Collaborative Learning Framework for Next POI Recommendation

JS brain burning interview question reward

初识go语言

How does redis solve the problems of cache avalanche, cache breakdown and cache penetration

程序编译调试学习记录

Choreographer full resolution

Oracle alarm log alert Chinese trace and trace files

There is a mining virus in the server