rearrange, reduce and repeat in the einops package, and Rearrange and Reduce in einops.layers.torch: methods for processing high-dimensional data

2022-04-23 20:48:00 【NuerNuer】

import torch
from torch import nn
from einops import rearrange, reduce, repeat
from einops.layers.torch import Rearrange, Reduce

One. rearrange and Rearrange. Effect: as the function name suggests, they rearrange the dimensions of a tensor.

Differences:

1. Rearrange in einops.layers.torch is a layer: it processes the tensor "implicitly" as part of the network structure being built.

For example:

class PatchEmbedding(nn.Module):
    def __init__(self, in_channels: int = 3, patch_size: int = 16, emb_size: int = 768, img_size: int = 224):
        super().__init__()
        self.patch_size = patch_size
        self.projection = nn.Sequential(
            # using a conv layer instead of a linear one -> performance gains
            nn.Conv2d(in_channels, emb_size, kernel_size=patch_size, stride=patch_size),
            # flatten the patch grid (h, w) into a single sequence axis
            Rearrange('b e (h) (w) -> b (h w) e'),
        )

    def forward(self, x):
        # (b, in_channels, H, W) -> (b, num_patches, emb_size)
        return self.projection(x)

Here, Rearrange('b e (h) (w) -> b (h w) e') converts a 4-dimensional tensor into a 3-dimensional one: the last two dimensions are merged into a single dimension, which is placed before the embedding dimension. For example, a (16, 512, 4, 16) tensor becomes (16, 64, 512).

In this way, as long as we know the layout of the input tensor, we can rearrange its dimensions simply by editing the pattern string.

2. rearrange in einops processes a tensor "explicitly"; it is a function.

For example:

rearrange(images, 'b h w c -> b (h w) c')

This converts a 4-dimensional tensor into a 3-dimensional one. Likewise, as long as we know the initial layout, we can rearrange the dimensions by editing the pattern string.

It is worth noting that once the axis names in the pattern are given, they denote the actual sizes of the current dimensions, and those sizes cannot be changed. For example:

image = torch.randn(1, 2, 3, 2)  # torch.Size([1, 2, 3, 2])

out = rearrange(image, 'b c h w -> b (c h w)', c=2, h=3, w=2)  # torch.Size([1, 12])

# swapping the values of h and w no longer matches the input shape
err1 = rearrange(image, 'b c h w -> b (c h w)', c=2, h=2, w=3)  # raises EinopsError

Two. repeat: repeats a tensor along one of its dimensions in order to expand the size of that dimension.

B = 16
emb_size = 768  # same value as the PatchEmbedding default above

cls_token = torch.randn(1, 1, emb_size)

cls_tokens = repeat(cls_token, '() n e -> b n e', b=B)  # a dimension of size 1 can be written as ()

This turns the (1, 1, emb_size) tensor into a (B, 1, emb_size) tensor.

R = 16
a = torch.randn(2, 3, 4)

b = repeat(a, 'b n e -> (r b) n e', r=R)  # (2R, 3, 4)

c = repeat(a, 'b n e -> b (r n) e', r=R)  # (2, 3R, 4)

# Incorrect usage: trying to turn the (2, 3, 4) tensor into (2R, 3, 4) by renaming the axis
d = repeat(a, 'b n e -> c n e', c=2 * R)  # raises EinopsError: axis b is missing on the right side

The examples above expand an existing dimension. Now let's look at an expansion that adds a new dimension:

R = 5
a = torch.randn(2, 3, 4)
d = repeat(a, 'b n e -> b n c e', c=R)  # turns the (2, 3, 4) tensor into (2, 3, 5, 4)

Here too, we only need to edit the axis names in the pattern to carry out the corresponding tensor operation.

Three. Reduce and reduce:

x = torch.randn(100, 32, 64)

# perform max-reduction on the first axis:
y0 = reduce(x, 't b c -> b c', 'max')  # (32, 64)

# Specifying h2, w2 is equivalent to specifying the size of the pooling kernel
x = torch.randn(10, 512, 30, 40)

# 2d max-pooling with kernel size = 2 * 2
y1 = reduce(x, 'b c (h1 h2) (w1 w2) -> b c h1 w1', 'max', h2=2, w2=2)  # (10, 512, 15, 20)

# go back to the original height and width
y2 = rearrange(y1, 'b (c h2 w2) h1 w1 -> b c (h1 h2) (w1 w2)', h2=2, w2=2)  # (10, 128, 30, 40)

# Specifying h1, w1 is equivalent to specifying the size of the tensor after pooling.
# h1 must divide the height (30) and w1 must divide the width (40), so a 12 * 16 grid
# would raise an error for this input; a 3 * 4 grid is used instead.

# 2d max-pooling to 3 * 4 grid:
y3 = reduce(x, 'b c (h1 h2) (w1 w2) -> b c h1 w1', 'max', h1=3, w1=4)  # (10, 512, 3, 4)

# 2d average-pooling to 3 * 4 grid:
y4 = reduce(x, 'b c (h1 h2) (w1 w2) -> b c h1 w1', 'mean', h1=3, w1=4)  # (10, 512, 3, 4)

# Global average pooling
y5 = reduce(x, 'b c h w -> b c', 'mean')  # (10, 512)

Reduce works the same way.

Note: the examples here use torch tensors, but einops can also handle NumPy arrays.

Copyright notice

This article was written by [NuerNuer]. Please include a link to the original when reposting. Thanks.

https://yzsam.com/2022/04/202204210545522821.html

边栏推荐

- unity 功能扩展

- Matlab: psychtoolbox installation

- 內網滲透之DOS命令

- Cmake project under vs2019: calculating binocular parallax using elas method

- Resolve the error - error identifier 'attr_ id‘ is not in camel case camelcase

- Addition, deletion, modification and query of advanced MySQL data (DML)

- The more you use the computer, the slower it will be? Recovery method of file accidental deletion

- Thinking after learning to type

- Selenium 显示等待WebDriverWait

- DOS command of Intranet penetration

猜你喜欢

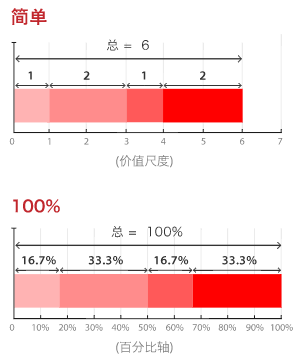

Recommended usage scenarios and production tools for common 60 types of charts

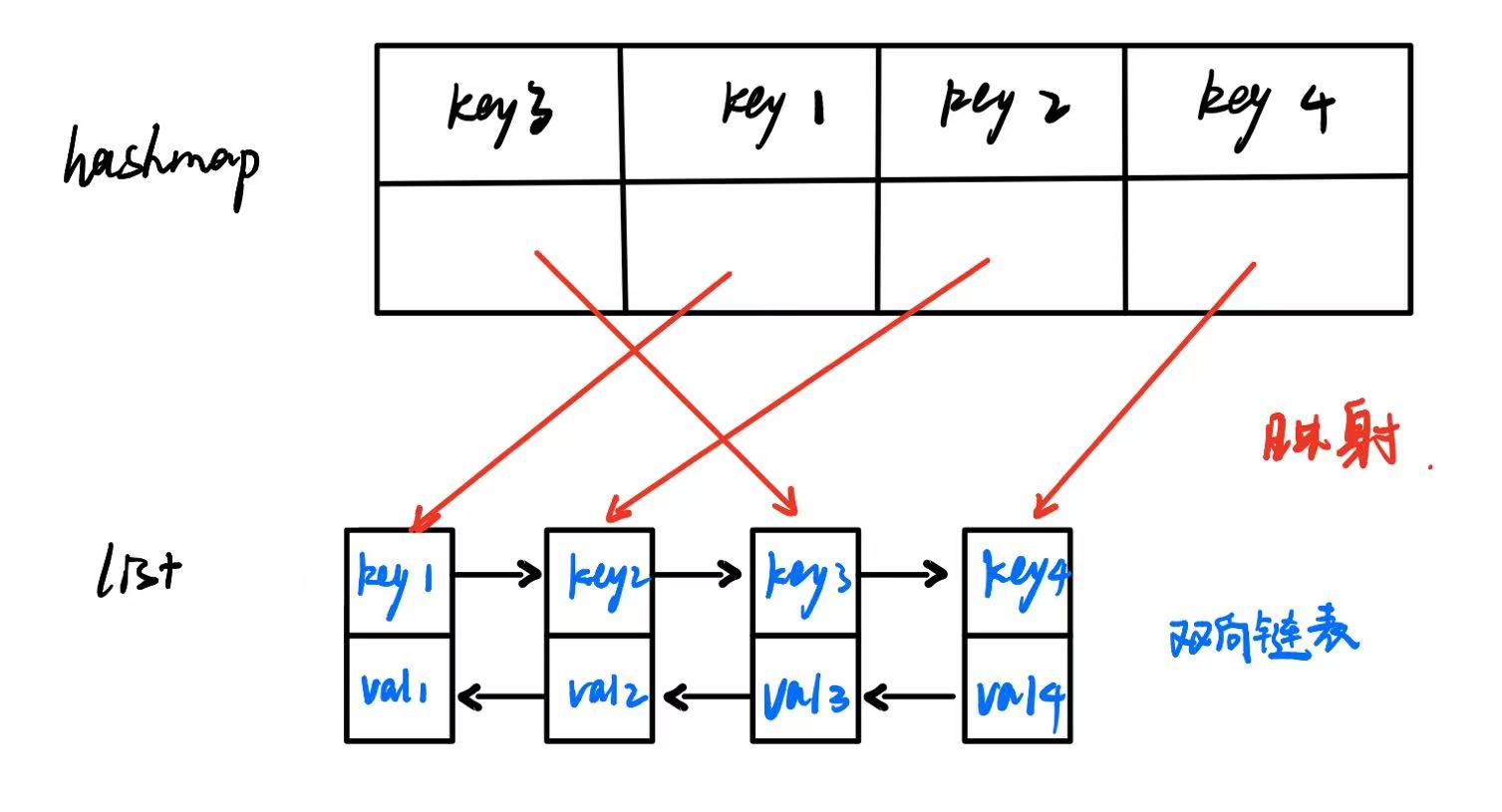

缓存淘汰算法初步认识(LRU和LFU)

wait、waitpid

笔记本电脑卡顿怎么办?教你一键重装系统让电脑“复活”

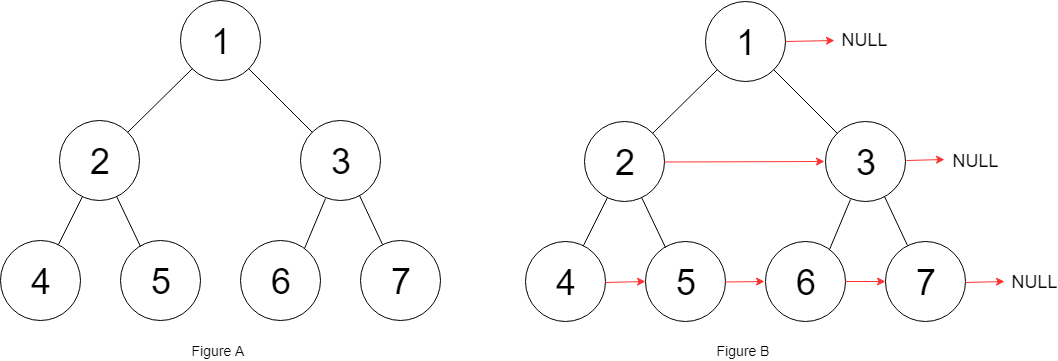

LeetCode 116. 填充每个节点的下一个右侧节点指针

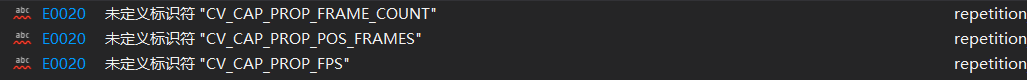

Identifier CV is not defined in opencv4_ CAP_ PROP_ FPS; CV_ CAP_ PROP_ FRAME_ COUNT; CV_ CAP_ PROP_ POS_ Frames problem

Express③(使用Express编写接口、跨域有关问题)

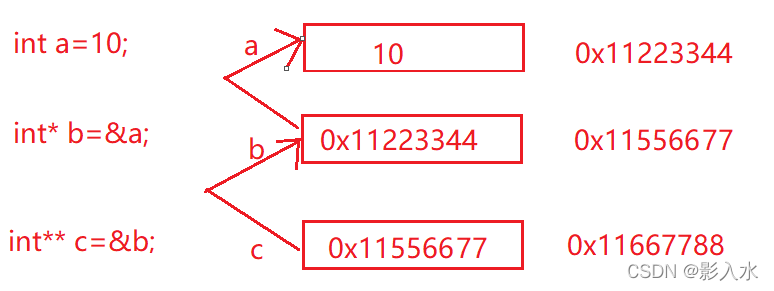

Deep analysis of C language pointer (Part I)

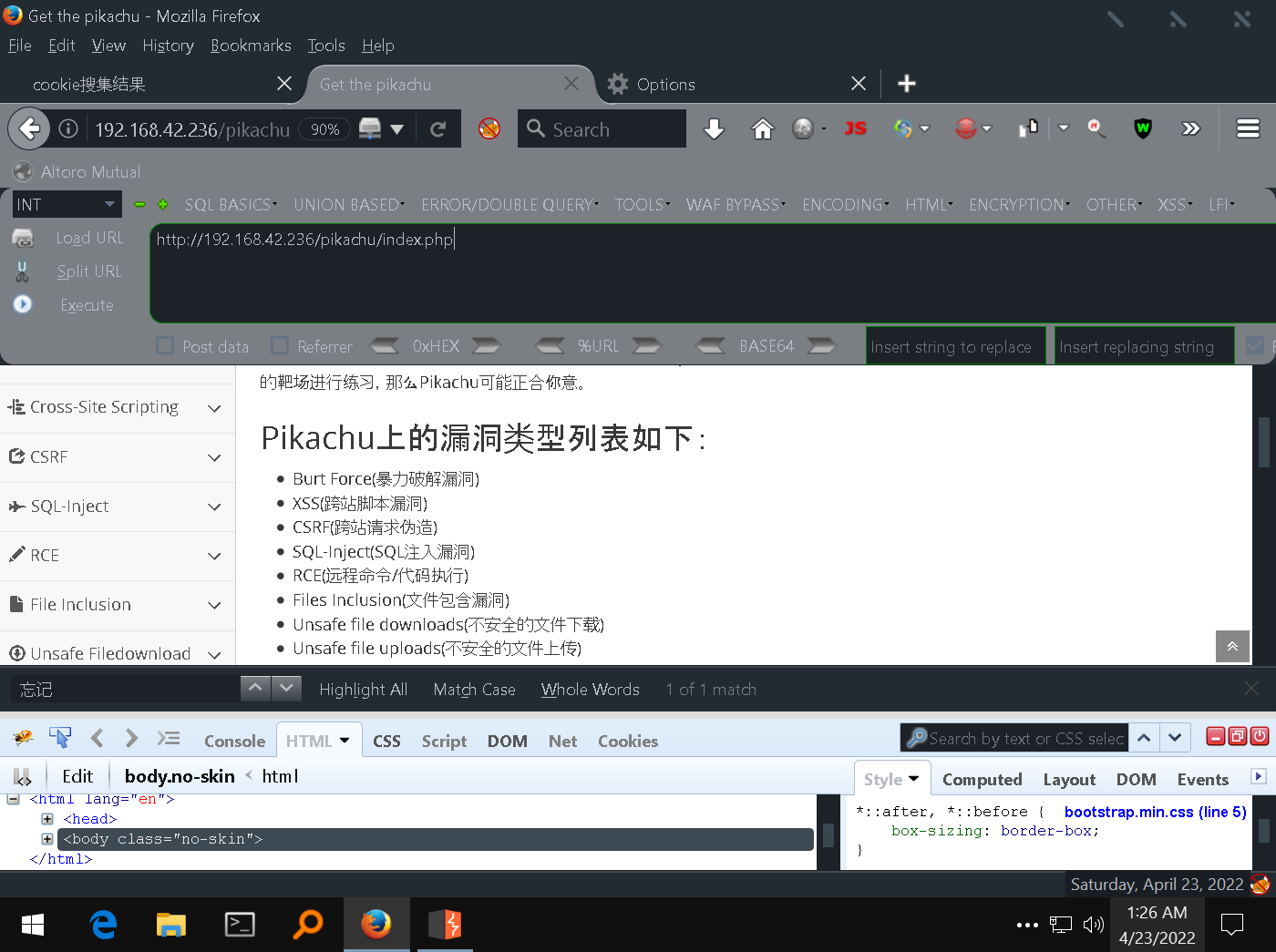

3-5通过XSS获取cookie以及XSS后台管理系统的使用

Write table of MySQL Foundation (create table)

随机推荐

How to configure SSH public key in code cloud

Vulnhub DC: 1 penetration notes

Imitation Baidu map realizes the three buttons to switch the map mode by automatically shrinking the bottom

Leetcode 232, queue with stack

Reentrant function

Is qiniu school useful and is the recommended securities account safe

MySQL数据库常识之储存引擎

Matlab: psychtoolbox installation

又一款数据分析神器:Polars 真的很强大

Go limit depth traversal of files in directory

The problem of 1 pixel border on the mobile terminal

【SQL】字符串系列2:将一个字符串根据特定字符分拆成多行

LeetCode 116. 填充每个节点的下一个右侧节点指针

Leetcode 542, 01 matrix

LeetCode 1337、矩阵中战斗力最弱的 K 行

unity 功能扩展

Fastdfs思维导图

JS arrow function user and processing method of converting arrow function into ordinary function

Some grounded words

[SQL] string series 2: split a string into multiple lines according to specific characters