[Deep Learning] Note 2 - The accuracy of the model in the test set is greater than that in the training set

2022-08-11 11:57:00 【aaaafeng】

Preface

Activity address: CSDN 21-day Learning Challenge

Blogger homepage: Aaaafeng's homepage_CSDN

Keep taking in, keep putting out! (A line borrowed from a friend of mine.)

Article table of contents

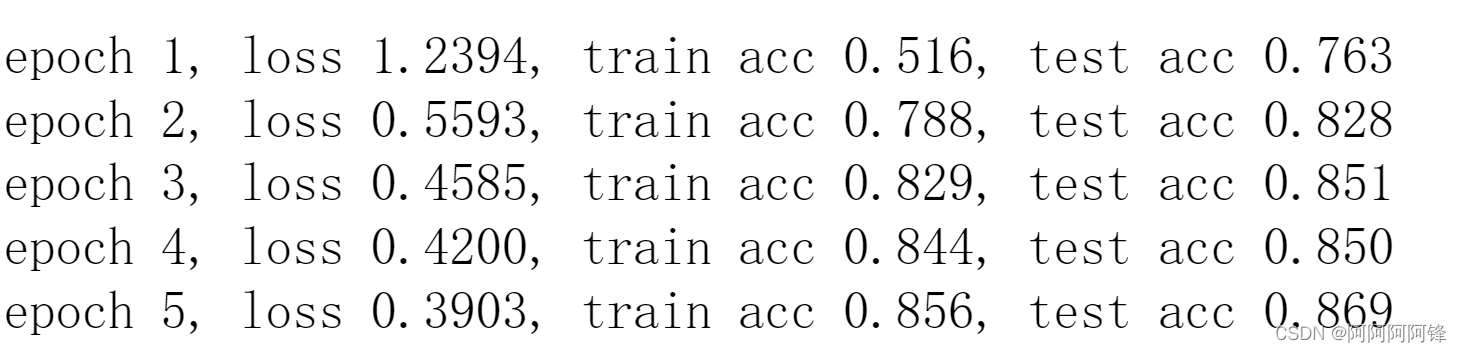

1. Description of the problem

While training a model, I noticed that its accuracy was actually higher on the test set than on the training set. But training works by minimizing the loss on the training set, so one would normally expect the model to do better on the training set.

So, what caused the higher accuracy on the test set?

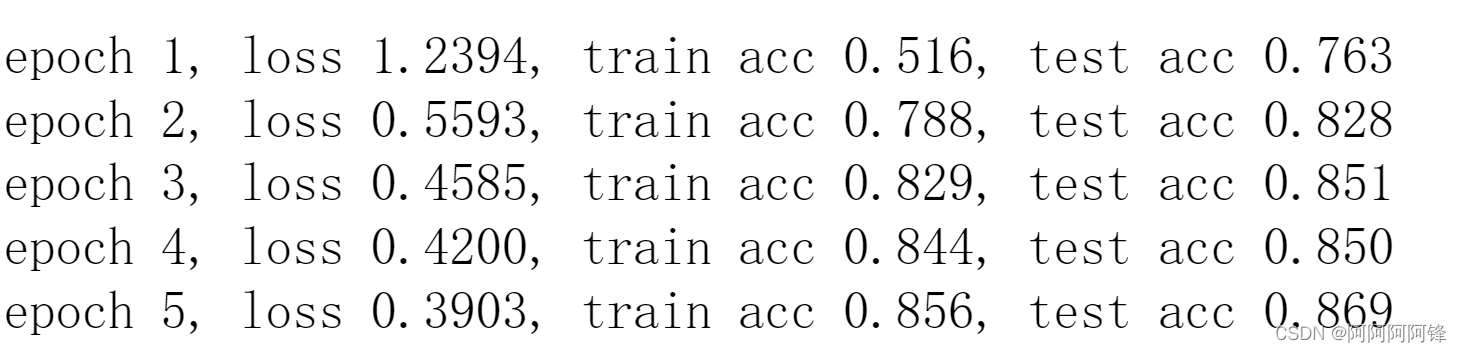

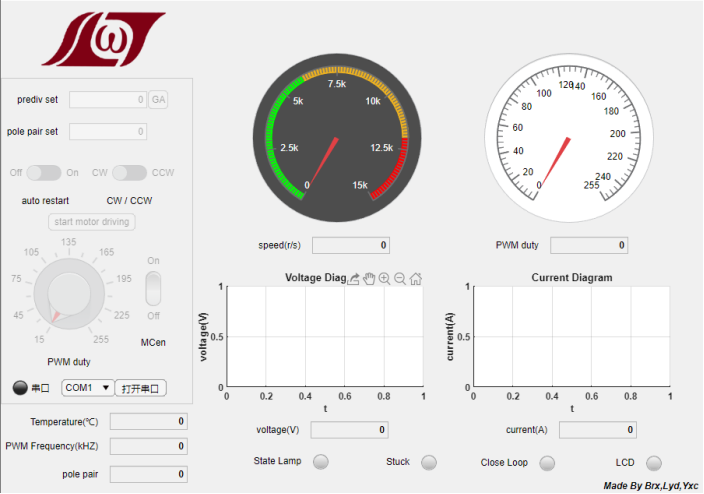

Model training results:

2. Fix the problem

2.1. Underfitting

Later I asked an expert, and she said: "Train for a few more epochs and see; the first few epochs are still underfitting." I immediately thought: good suggestion!

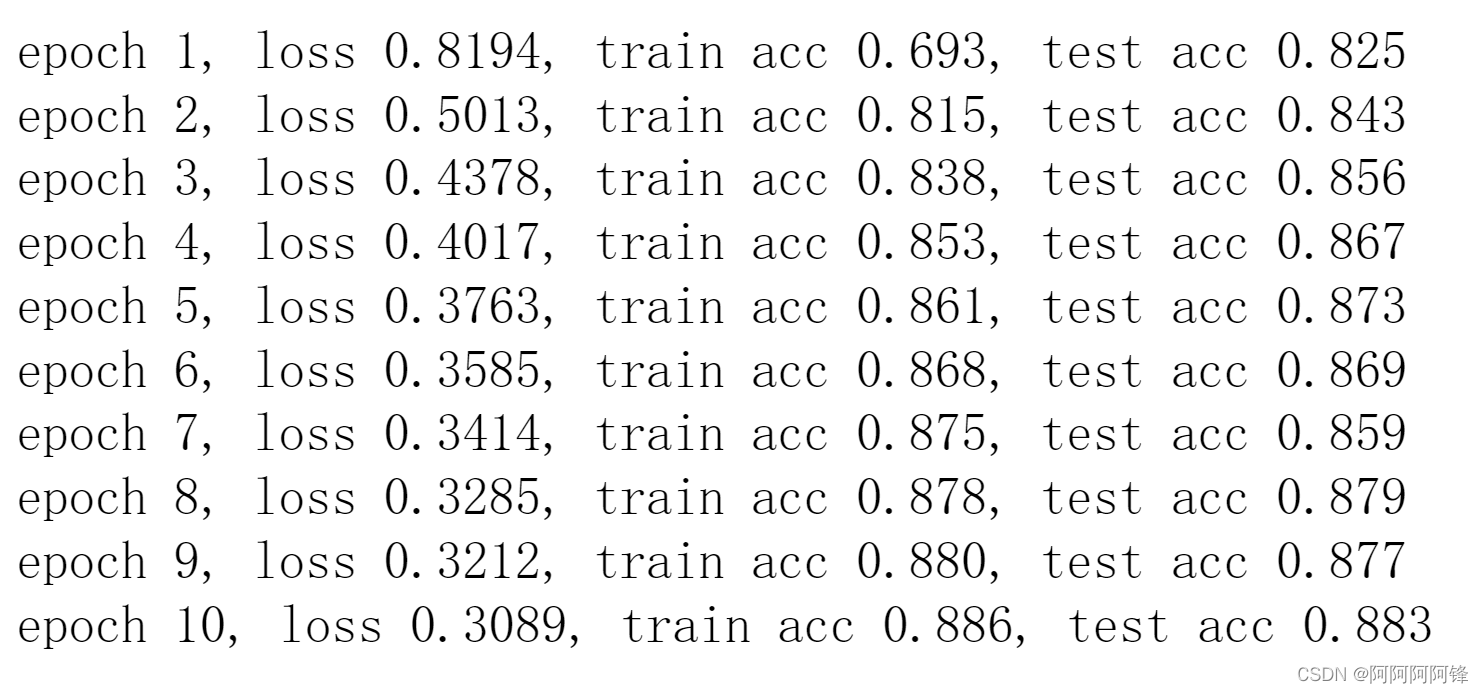

Increase the number of training epochs:

Sure enough! As the number of training epochs increased, the model's accuracy gradually got back on track, and the accuracy on the training set once again exceeded that on the test set.

2.2. Lag in mini-batch statistics

But I still had a question: why, in the underfitting state with few training epochs, does the model score higher on the test set? How are the two related?

One blog post gives an explanation that I find very reasonable and that matches my situation well:

The training-set accuracy is computed after each batch, while the validation-set accuracy is generally computed after a full epoch. The model used for validation has already been trained on all of that epoch's batches, so there is a lag. In other words, validation uses a model that has nearly finished training for the epoch, so naturally its accuracy is higher.

That is, the problem comes from how the training-set accuracy is computed. If the model's accuracy on the training set is measured after each epoch rather than at the end of each mini-batch, the problem does not occur.
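To make the lag concrete, here is a minimal pure-Python sketch. It is not the model from this post: the one-weight threshold classifier, the toy data, and the names `predict`, `accuracy`, and `train_one_epoch` are all hypothetical and chosen only for illustration. It compares the mean of per-batch accuracies (each measured before that batch's update) with the accuracy of the final weight on the whole training set.

```python
def predict(w, x):
    """Threshold classifier: class 1 when w * x is positive, else class 0."""
    return 1 if w * x > 0 else 0


def accuracy(w, samples):
    """Fraction of samples classified correctly with a fixed weight w."""
    return sum(predict(w, x) == y for x, y in samples) / len(samples)


def train_one_epoch(w, samples, batch_size, lr):
    """Run one epoch of mini-batch perceptron updates.

    Returns the updated weight and the mean of the per-batch accuracies,
    each measured with the weight in effect before that batch's update.
    """
    batch_accs = []
    for start in range(0, len(samples), batch_size):
        batch = samples[start:start + batch_size]
        # Accuracy as seen during training: weights are not final yet.
        batch_accs.append(accuracy(w, batch))
        # Perceptron rule: every misclassified sample nudges w.
        w += lr * sum((y - predict(w, x)) * x for x, y in batch)
    return w, sum(batch_accs) / len(batch_accs)


# Toy data (an assumption for this sketch): label is 1 exactly when x > 0.
train_set = [(1.0, 1), (-1.0, 0)] * 10

# Start from a deliberately bad weight so the first batches are misclassified.
w, running_acc = train_one_epoch(-1.0, train_set, batch_size=4, lr=0.125)
epoch_end_acc = accuracy(w, train_set)

print(running_acc, epoch_end_acc)  # prints: 0.5 1.0
```

The running figure (0.5) averages in the early batches, when the weight was still wrong, while the end-of-epoch figure (1.0) uses only the final weight. A test set is normally evaluated the second way, which is why it can look better than the training set early on.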

Of course, talk alone is not enough; it has to be tested. I checked my earlier model code and found that the training-set accuracy was indeed computed after each mini-batch. So I tried measuring the training-set accuracy once after each epoch instead.

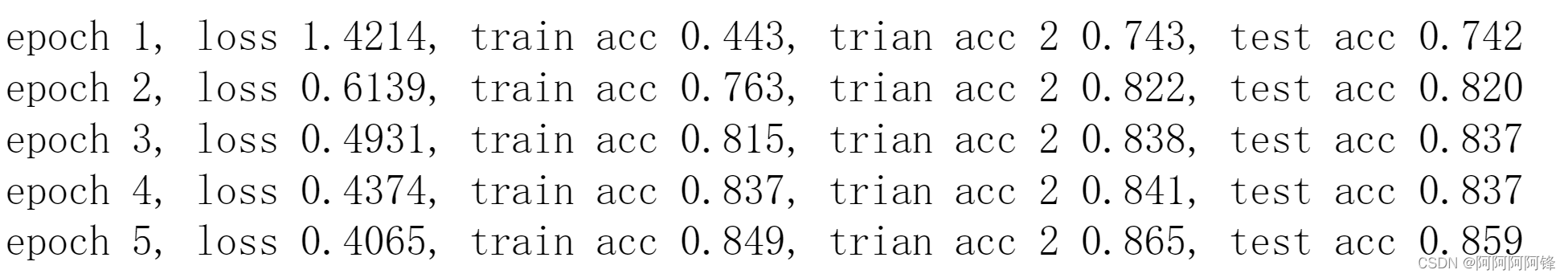

Training-set accuracy measured after each epoch (train acc 2):

It is easy to see that, even when the model is underfitting, if the training-set and test-set accuracy are measured in the same way, the model is still more accurate on the training set.

Summary

When you run into a problem, seeing how other people think about it can bring a sudden moment of clarity. It does not pay to get stuck in a dead end on your own.

边栏推荐

猜你喜欢

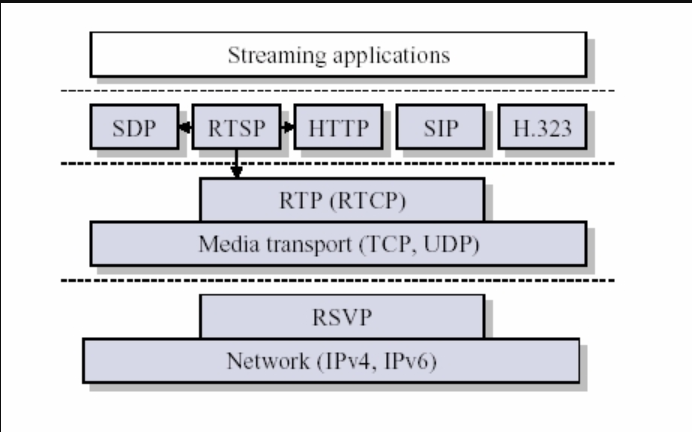

RTP协议浅析

为什么最好的光刻机来自荷兰,而不是芯片大国美国?

![[10 o'clock open class]: Optimization of AV1 encoder and its application in streaming media and real-time communication](/img/86/a6cd309cd66eb37159fcb8ae3338b1.png)

[10 o'clock open class]: Optimization of AV1 encoder and its application in streaming media and real-time communication

编程手册管理软件-涉及各类编程语言

【集创赛】arm杯一等奖作品:智能BLDC驱动系统

pip安装后仍有ImportError No module named XX问题解决

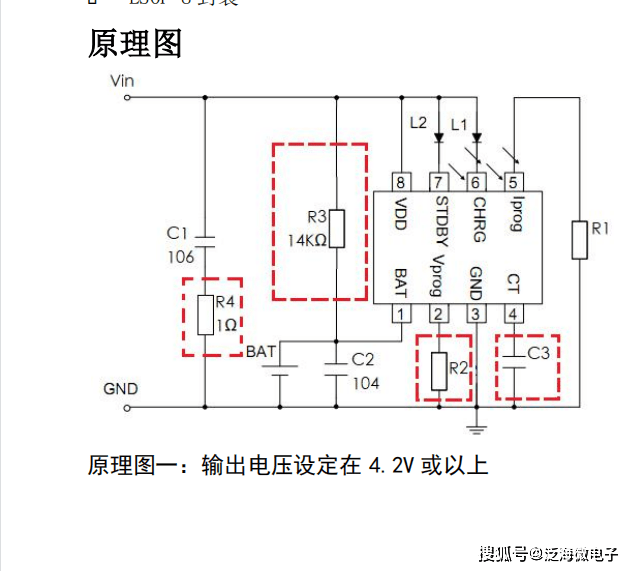

PL4807-ADJ线性锂电池可调充电芯片

【深度学习】笔记2-模型在测试集的准确率大于训练集

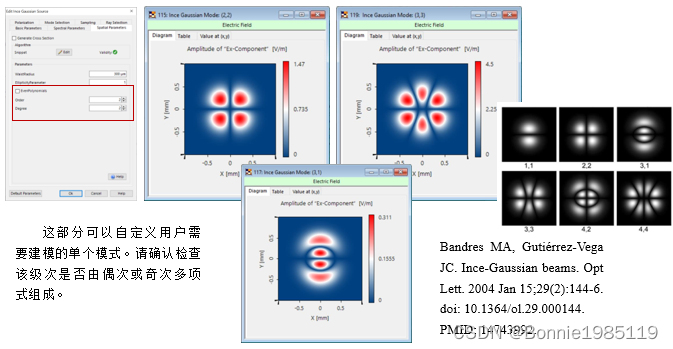

Ince-Gaussian mode

性能测试的环境以及测试数据构造

随机推荐

【深度学习】笔记2-模型在测试集的准确率大于训练集

公共经济学(开卷)期末复习题

《长津湖》和《三十而已》,不及王一博赚钱?

SpinalHDL资料汇总

通过热透镜聚焦不同类型的高斯模式

资本市场做好为工业互联网“买单”的准备了吗?

B端产品需求分析与优先级判断

在华门店数超星巴克,瑞幸咖啡完成“逆袭”?

【医学统计学】二项分布

【学生个人网页设计作品】使用HMTL制作一个超好看的保护海豚动物网页

从 IP 开始,学习数字逻辑:DataMover 基础篇

Visual Studio: Arm64EC官方支持来了

Tool_RE_IDA基础字符串修改

Grid 布局介绍

【五一特刊】FPGA零基础学习:SDR SDRAM 驱动设计

简化供采交易路径,B2B电子交易系统实现钢铁行业全链路数字化

Application practice of low-latency real-time audio and video in 5G remote control scenarios

【LeetCode 周赛】第84场双周赛

威佐夫博弈

目标检测学习笔记——paddleDetection使用