
1.1 PyTorch and Neural Networks

2022-04-23 07:21:00 【sunshinecxm_ BJTU】

Chapter 1 PyTorch and Neural Networks

1.1 Getting Started with PyTorch

1.1.2 PyTorch Tensors

1.1.3 PyTorch's Automatic Differentiation

1.1.4 Computation Graphs

Automatic gradient calculation can seem magical, but it is not magic.

The principle behind it is worth understanding in depth, because that knowledge will help us build larger networks.

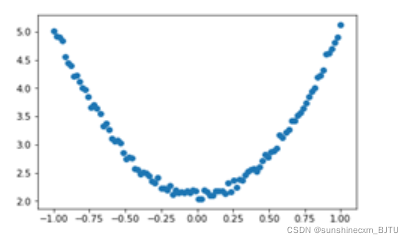

Take a look at this very simple network. It isn't even a neural network, just a series of calculations.

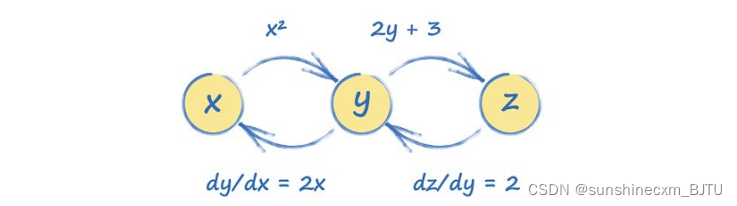

In the diagram above, we see that the input $x$ is used to calculate $y$, and $y$ is then used to calculate the output $z$.

Suppose $y$ and $z$ are calculated as follows:

If we want to know how the output $z$ changes as $x$ changes, we need to know the gradient $dz/dx$. Let's calculate it step by step.

The first line of the working is the chain rule of calculus, $\frac{dz}{dx} = \frac{dz}{dy} \cdot \frac{dy}{dx}$, which is very important to us.

We have just worked out that the rate of change of $z$ with respect to $x$ is $4x$. If $x = 3.5$, then $dz/dx = 4 \times 3.5 = 14$.
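To make the steps concrete, here is a worked version under an assumption. Suppose, for illustration only, that $y = x^2$ and $z = 2y + 3$. This is one choice consistent with $dz/dx = 4x$, and the added constant does not affect the gradient.

$$
\begin{aligned}
\frac{dz}{dx} &= \frac{dz}{dy} \cdot \frac{dy}{dx} \\
&= 2 \cdot 2x \\
&= 4x
\end{aligned}
$$

At $x = 3.5$ this gives $4 \times 3.5 = 14$.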

When $y$ is defined in terms of $x$, and $z$ is defined in terms of $y$, PyTorch links these tensors together into a graph that shows how they are connected. This graph is called a computation graph.

In our example, the computation graph might look like the following:

We can see how $y$ is calculated from $x$, and how $z$ is calculated from $y$. PyTorch also adds several reverse arrows, which express how $y$ changes with $x$ and how $z$ changes with $y$. These are gradients, and they are used to update a neural network during training. PyTorch does the calculus for us, so we don't have to work out these gradients ourselves.
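The minimal sketch below lets us peek at the reverse arrows PyTorch records. It assumes, for illustration only, that $y = x^2$ and $z = 2y + 3$, which is one choice consistent with $dz/dx = 4x$. Each tensor produced by an operation carries a `grad_fn` node, and each node lists the nodes it feeds back into through `next_functions`. The helper `show_graph` is hypothetical. It simply walks those links from `z` back towards `x`.

```python
import torch

# Assumed definitions for illustration: y = x**2, z = 2*y + 3
x = torch.tensor(3.5, requires_grad=True)
y = x * x
z = 2 * y + 3

def show_graph(fn, depth=0):
    """Recursively print the backward nodes reachable from a grad_fn."""
    if fn is None:  # inputs that need no gradient (e.g. constants) appear as None
        return
    print("  " * depth + type(fn).__name__)
    for next_fn, _ in fn.next_functions:
        show_graph(next_fn, depth + 1)

# x is a leaf tensor we created ourselves, so it has no grad_fn;
# y and z were computed from other tensors, so PyTorch recorded how.
print(x.is_leaf, x.grad_fn)  # True None
print(y.grad_fn is not None, z.grad_fn is not None)  # True True

# Walk the reverse arrows from z back to x
show_graph(z.grad_fn)
```

The exact node names printed, such as `AddBackward0`, `MulBackward0` or `AccumulateGrad`, are internal and may differ between PyTorch versions. Treat them as illustrative.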

To work out how $z$ changes with $x$, we combine all the gradients along the path from $z$ back through $y$ to $x$ by multiplying them together. This is the chain rule of calculus.
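We can also check the chain rule one piece at a time. This sketch again assumes $y = x^2$ and $z = 2y + 3$, purely for illustration. It uses `torch.autograd.grad` to get the local gradient on each edge of the path $z \to y \to x$, then multiplies them together.

```python
import torch

# Assumed definitions for illustration: y = x**2 and z = 2*y + 3
x = torch.tensor(3.5, requires_grad=True)
y = x ** 2
z = 2 * y + 3

# Local gradient on each edge of the path z -> y -> x.
# retain_graph=True keeps the graph alive for the second call.
(dz_dy,) = torch.autograd.grad(z, y, retain_graph=True)
(dy_dx,) = torch.autograd.grad(y, x)

# Chain rule: multiply the local gradients along the path
dz_dx = dz_dy * dy_dx
print(dz_dy.item(), dy_dx.item(), dz_dx.item())  # 2.0 7.0 14.0
```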

On its own, PyTorch builds only the forward connections of the graph. We need to call the `backward()` function to make PyTorch calculate the gradients in the reverse direction.

The gradient $dz/dx$ is stored in the tensor `x` as `x.grad`.

Note that the gradient value stored inside tensor $x$ relates to $z$. This is because we asked PyTorch to calculate backwards from $z$ using `z.backward()`. Therefore `x.grad` is $dz/dx$, not $dy/dx$.
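Here is a minimal sketch of the single-path example in code. It assumes $y = x^2$ and $z = 2y + 3$, which is consistent with $dz/dx = 4x$ but not necessarily the exact definitions in the original example. A second, separate graph shows that working backwards from $y$ instead would give $dy/dx$.

```python
import torch

# Assumed definitions for illustration: y = x**2 and z = 2*y + 3
x = torch.tensor(3.5, requires_grad=True)
y = x * x
z = 2 * y + 3

# Ask PyTorch to work backwards from z through the computation graph
z.backward()

# x.grad now holds dz/dx at x = 3.5, i.e. 4 * 3.5 = 14
print(x.grad)  # tensor(14.)

# For comparison: a fresh graph where we work backwards from y instead,
# so the gradient stored is dy/dx = 2 * 3.5 = 7
x2 = torch.tensor(3.5, requires_grad=True)
y2 = x2 * x2
y2.backward()
print(x2.grad)  # tensor(7.)
```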

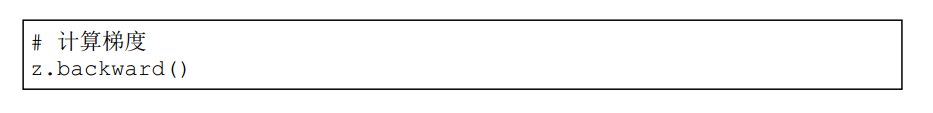

Most useful neural networks contain many nodes. Each node has several links coming into it as well as links leading out of it. Let's look at a simple example in which a node has more than one incoming link.

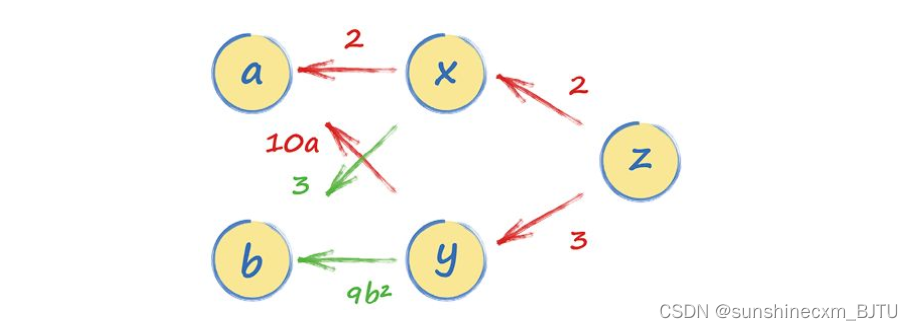

As the diagram shows, the inputs $a$ and $b$ both affect $x$ and $y$, and the output $z$ is calculated from $x$ and $y$.

The relationships between these nodes are as follows.

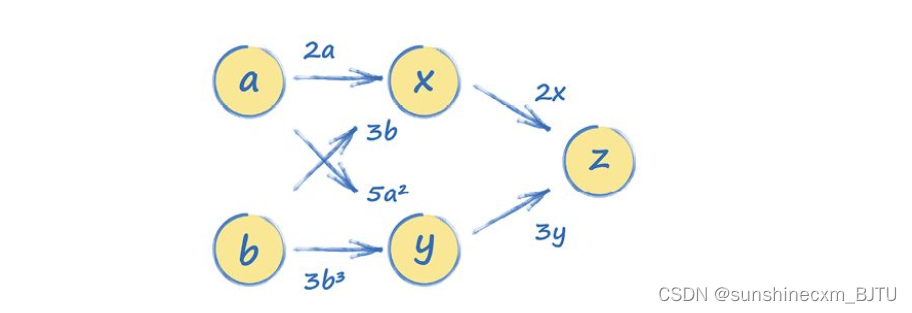

We calculate the gradients in the same way.

Next, we add this information to the computation graph.

Now we can easily calculate the gradient $dz/da$ by following the paths from $z$ back to $a$. There are in fact two paths from $z$ to $a$, one through $x$ and the other through $y$. We multiply the gradients along each path, and then we simply add the results of the two paths together. This makes sense, because both paths from $a$ to $z$ affect the value of $z$. It also agrees with the expression for $dz/da$ that we get from the chain rule of calculus:

$$\frac{dz}{da} = \frac{dz}{dx} \cdot \frac{dx}{da} + \frac{dz}{dy} \cdot \frac{dy}{da}$$

The first path goes through $x$ and contributes $2 \times 2$. The second path goes through $y$ and contributes $3 \times 10a$. Therefore the rate at which $z$ changes with $a$ is $4 + 30a$.

If $a$ is 2, then $dz/da$ is $4 + 30 \times 2 = 64$.
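We can also sanity-check the hand calculation numerically, without PyTorch. The sketch below uses hypothetical definitions: $x = 2a + 3b$, $y = 5a^2 + 3b^3$ and $z = 2x + 3y$. The $a$ terms are chosen to reproduce the derivatives quoted above ($dx/da = 2$, $dy/da = 10a$, $dz/dx = 2$, $dz/dy = 3$). The $b$ terms and the value $b = 1$ are arbitrary. A central difference nudges $a$ slightly up and down and measures how much $z$ moves.

```python
# Hypothetical definitions, chosen to match the derivatives quoted above:
# x = 2a + 3b, y = 5a^2 + 3b^3, z = 2x + 3y
def z_of(a, b):
    x = 2 * a + 3 * b
    y = 5 * a ** 2 + 3 * b ** 3
    return 2 * x + 3 * y

def dz_da_numeric(a, b, h=1e-5):
    """Central-difference estimate of dz/da with b held fixed."""
    return (z_of(a + h, b) - z_of(a - h, b)) / (2 * h)

a, b = 2.0, 1.0
print(dz_da_numeric(a, b))  # very close to 64
print(4 + 30 * a)           # 64.0, from the chain-rule formula
```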

Let's check whether PyTorch gives the same value. First, we define the relationships that PyTorch needs to build the computation graph.

Next, we trigger the gradient calculation and query the gradient stored in tensor $a$.
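Here is a minimal sketch of those two steps. The definitions $x = 2a + 3b$, $y = 5a^2 + 3b^3$ and $z = 2x + 3y$ are hypothetical. They are chosen so that $dx/da = 2$, $dy/da = 10a$, $dz/dx = 2$ and $dz/dy = 3$, as in the text. The $b$ terms and the value $b = 1$ are arbitrary.

```python
import torch

# Hypothetical definitions matching the derivatives quoted in the text;
# the b terms and b = 1 are illustrative assumptions
a = torch.tensor(2.0, requires_grad=True)
b = torch.tensor(1.0, requires_grad=True)

x = 2 * a + 3 * b
y = 5 * a * a + 3 * b ** 3
z = 2 * x + 3 * y

# Work backwards from z; contributions from both paths
# (via x and via y) are added together in a.grad
z.backward()

print(a.grad)  # tensor(64.)
print(b.grad)  # tensor(33.), since dz/db = 2*3 + 3*9*b**2 = 33 at b = 1
```

Because `a` reaches `z` along two paths, `backward()` accumulates both contributions into `a.grad`. This is the same summing of paths that we did by hand.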

Useful neural networks are usually much larger than this small one. However, PyTorch builds the computation graph, and calculates gradients backwards along its paths, in exactly the same way.
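The mechanism is unchanged for bigger tensors. Here is a small hypothetical example that uses a vector of weights instead of a single value. The names `inputs`, `weights` and `loss` are illustrative only, and a real network would have many more parameters.

```python
import torch

# Hypothetical example: a vector of inputs and weights feeding
# one weighted sum, followed by a squared "loss"
inputs = torch.tensor([1.0, 2.0, 3.0])
weights = torch.tensor([0.5, -1.0, 2.0], requires_grad=True)

output = (weights * inputs).sum()  # 0.5 - 2.0 + 6.0 = 4.5
loss = output ** 2                 # 4.5**2 = 20.25

loss.backward()

# Chain rule: d(loss)/d(weights) = 2 * output * inputs = 9 * [1, 2, 3]
print(weights.grad)
```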

1.1.5 Key Points

- The Colab service lets us run Python code on Google's servers. Colab uses Python notebooks, and all we need to use it is a web browser.

- PyTorch is a leading Python machine learning framework. Like numpy, it lets us work with arrays of numbers. It also provides a rich set of tools and functions that make machine learning easier.

- In PyTorch, the basic unit of data is the tensor. A tensor can be a multidimensional array, a simple two-dimensional matrix, a one-dimensional list, or even a single value.

- A key feature of PyTorch is that it can automatically calculate the gradient of a function. Calculating gradients is essential for training neural networks. To do this, PyTorch builds a computation graph that contains the tensors and the relationships between them. In code, this happens automatically whenever we define one tensor in terms of another.
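The short sketch below illustrates the tensor ideas in these key points. It shows tensors of different dimensions, numpy-style arithmetic and conversion, and a graph being recorded as soon as one tensor is defined from another that requires gradients. The variable names are illustrative.

```python
import numpy as np
import torch

# Tensors of different dimensions
scalar = torch.tensor(3.5)                       # a single value
vector = torch.tensor([1.0, 2.0, 3.0])           # a one-dimensional list
matrix = torch.tensor([[1.0, 2.0], [3.0, 4.0]])  # a two-dimensional matrix
cube = torch.zeros(2, 3, 4)                      # a three-dimensional array

for name, t in [("scalar", scalar), ("vector", vector),
                ("matrix", matrix), ("cube", cube)]:
    print(name, t.dim(), tuple(t.shape))

# Like numpy arrays, tensors support elementwise arithmetic,
# and can be converted to and from numpy arrays
doubled = matrix * 2
as_numpy = doubled.numpy()
back = torch.from_numpy(np.array([1.0, 2.0]))
print(type(as_numpy).__name__, back.dtype)  # ndarray torch.float64

# Defining one tensor in terms of another that requires gradients
# is enough for PyTorch to start recording a computation graph
w = torch.tensor(2.0, requires_grad=True)
v = 3 * w
print(v.requires_grad, v.grad_fn is not None)  # True True
```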

Copyright notice

This article was written by [sunshinecxm_ BJTU]. Please include a link to the original when reposting. Thanks.

https://yzsam.com/2022/04/202204230610529584.html

边栏推荐

- Easyui combobox 判断输入项是否存在于下拉列表中

- Summary of image classification white box anti attack technology

- 【动态规划】三角形最小路径和

- 【点云系列】FoldingNet:Point Cloud Auto encoder via Deep Grid Deformation

- 【动态规划】最长递增子序列

- Android exposed components - ignored component security

- N states of prime number solution

- [2021 book recommendation] red hat rhcsa 8 cert Guide: ex200

- 第4章 Pytorch数据处理工具箱

- Fill the network gap

猜你喜欢

Summary of image classification white box anti attack technology

1.2 初试PyTorch神经网络

ArcGIS License Server Administrator 无法启动解决方法

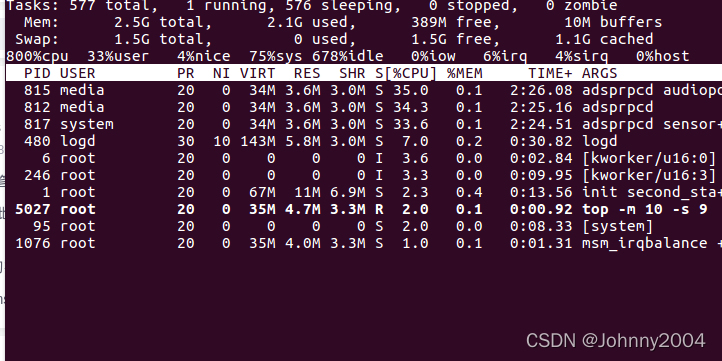

adb shell top 命令详解

![[Point Cloud Series] SG - Gan: Adversarial Self - attachment GCN for Point Cloud Topological parts Generation](/img/1d/92aa044130d8bd86b9ea6c57dc8305.png)

[Point Cloud Series] SG - Gan: Adversarial Self - attachment GCN for Point Cloud Topological parts Generation

![[recommendation of new books in 2021] practical IOT hacking](/img/9a/13ea1e7df14a53088d4777d21ab1f6.png)

[recommendation of new books in 2021] practical IOT hacking

第2章 Pytorch基础2

![[2021 book recommendation] Red Hat Certified Engineer (RHCE) Study Guide](/img/36/1c484aec5efbac8ae49851844b7946.png)

[2021 book recommendation] Red Hat Certified Engineer (RHCE) Study Guide

【2021年新书推荐】Professional Azure SQL Managed Database Administration

【点云系列】Multi-view Neural Human Rendering (NHR)

随机推荐

[point cloud series] pnp-3d: a plug and play for 3D point clouds

Component based learning (3) path and group annotations in arouter

WebView displays a blank due to a certificate problem

Chapter 1 numpy Foundation

[dynamic programming] longest increasing subsequence

Android interview Online Economic encyclopedia [constantly updating...]

MySQL数据库安装与配置详解

Summary of image classification white box anti attack technology

Migrating your native/mobile application to Unified Plan/WebRTC 1.0 API

1.1 PyTorch和神经网络

torch_geometric学习一,MessagePassing

三子棋小游戏

深度学习模型压缩与加速技术(一):参数剪枝

PaddleOCR 图片文字提取

【点云系列】PnP-3D: A Plug-and-Play for 3D Point Clouds

1.2 preliminary pytorch neural network

ThreadLocal,看我就够了!

树莓派:双色LED灯实验

【点云系列】SO-Net:Self-Organizing Network for Point Cloud Analysis

torch. mm() torch. sparse. mm() torch. bmm() torch. Mul () torch The difference between matmul()