当前位置:网站首页>Oracle data pump usage

Oracle data pump usage

2022-04-23 16:08:00 【Learning is boundless, happy to make a boat】

One 、 Why choose data pump mode

be relative to exp/imp The way , Data pump (expdp/impdp) faster , And can export empty tables ; be relative to rman、dg Methods such as , Data pump operation is simpler . Besides , In a small amount of data 、 When the warehouse can be stopped , The data pump method can ensure the integrity of data .

remarks :exp/imp And expdp/impdp The difference between

1、exp and imp Is a client utility , They can be used on the client side , It can also be used on the server .

2、expdp and impdp It is the tool program of the server , They can only be in Oracle Server use , Can't use... On the client side .

3、imp Only applicable to exp Exported files , Do not apply to expdp export file ;impdp Only applicable to expdp Exported files , Not applicable to exp export file .

4、 about 10g The servers above , Use exp You can't usually export 0 Empty table of row data , And you have to use expdp export .Two 、 preparation

If necessary , You need to create the necessary tablespaces on the new library 、 user ( And empowering )、 Data backup directory , Create a data backup directory on the source database .

1、 Operations on the target new library

(1) Create a temporary table space

create temporary tablespace User temporary tablespace name tempfile '/u01/tablespaces/user_temp.dbf' size 50m autoextend on next 50m maxsize 20480m extent management local;

remarks : Adjust the table space size and other parameters according to the actual situation and plan the temporary table space 、 Rollback table space and other related planning .

(2) Create a data table space

create tablespace User table namespace datafile '/u01/tablespaces/user_data.dbf' size 50m autoextend on next 50m maxsize 20480m extent management local;

(3) Build users , And specify the default tablespace

create user User name identified by password default tablespace User table namespace temporary tablespace User temporary tablespace name ;

(4) Grant permissions to users

grant connect,resource to user name 1; grant create database link to user name ; Be careful : In the case of empowering multiple users , Between user names , Just separate .

(5) Login needs to create dblink Users of , establish dblink

CREATE DATABASE LINK DBLink name CONNECT TO user IDENTIFIED BY password USING '(DESCRIPTION =(ADDRESS_LIST =(ADDRESS =(PROTOCOL = TCP)(HOST = XXX.XXX.XXX.XXX)(PORT = 1521)))(CONNECT_DATA =(SERVICE_NAME = Instance name )))';

Be careful : establish DBLINK The default is user level , Valid for current users . Only if it needs to be valid for all users , Then establish public DBlink object (pulic Parameters ).

About DBlink Knowledge points of , Reference resources :Oracle--dblink Use http://www.cnblogs.com/chinas/p/6973058.html

2、 Create a data backup directory ( Source library and target library )

The backup directory needs to use the operating system user to create a real Directory , Then login oracle dba user , Create a logical Directory , Point to this path . such oracle To identify this backup directory .

(1) Create a real directory on the operating system

- $ mkdir -p /u01/app/oracle/bankup

(2) Sign in oracle Administrator user

- $ sqlplus /nolog

- SQL> conn /as sysdba

(3) Create a logical Directory

- SQL> create directory auto_bankup as '/u01/app/oracle/bankup';

(4) Check whether the directory has been created successfully :

- SQL> select * from dba_directories;

(5) use sys The administrator gives the specified user the operation permission in the directory

SQL> grant read,write on directory data_dir to user; SQL> grant read,write on directory data_dir to user1,user2;

3、 ... and 、 Import and export

1、expdp export

Make sure that the data backup path has been created , If not, follow the instructions in the preparatory work to create .( Be careful : if CPU With sufficient resources, it is strongly recommended to enable parallel parameters , It can greatly save the cost of importing 、 Export time )

The first one is :“full=y”, Full export database :

- expdp user/passwd@orcl dumpfile=expdp.dmp directory=data_dir full=y logfile=expdp.log;

The second kind :schemas Export by user :

- expdp user/passwd@orcl schemas=user dumpfile=expdp.dmp directory=data_dir logfile=expdp.log;

The third kind of : Export by table space :

- expdp sys/passwd@orcl tablespace=tbs1,tbs2 dumpfile=expdp.dmp directory=data_dir logfile=expdp.log;

A fourth : Export table :

- expdp user/passwd@orcl tables=table1,table2 dumpfile=expdp.dmp directory=data_dir logfile=expdp.log;

The fifth : According to the query conditions :

- expdp user/passwd@orcl tables=table1='where number=1234' dumpfile=expdp.dmp directory=data_dir logfile=expdp.log;

2、impdp Import

Be careful :

(1) Ensure that the database software is installed correctly , Character set 、 The database version is consistent with the source database , Try to avoid failure caused by such factors .

(2) Ensure that the database backup directory has been built in advance , If there is no , Refer to the previous instructions to establish the directory .

(3) Transfer the data files exported from the source library to the backup directory of the target library in advance , And ensure that the database user at the time of import has operation permission for the file .

The first one is :“full=y”, Import the database in full ;

- impdp user/passwd directory=data_dir dumpfile=expdp.dmp full=y;

The second kind : Import users with the same name , From user A Import to user A;

- impdp A/passwd schemas=A directory=data_dir dumpfile=expdp.dmp logfile=impdp.log;

The third kind of :

a. from A User middle table table1 and table2 Import to B Among users ;

- impdp B/passwdtables=A.table1,A.table2 remap_schema=A:B directory=data_dir dumpfile=expdp.dmp logfile=impdp.log;

b. Set the table space TBS01、TBS02、TBS03 Import into tablespace A_TBS, Will the user B Data imported to A, And create a new oid To prevent conflict ;

- impdp A/passwdremap_tablespace=TBS01:A_TBS,TBS02:A_TBS,TBS03:A_TBS remap_schema=B:A FULL=Y transform=oid:n

- directory=data_dir dumpfile=expdp.dmp logfile=impdp.log

A fourth : Import tablespace ;

- impdp sys/passwd tablespaces=tbs1 directory=data_dir dumpfile=expdp.dmp logfile=impdp.log;

The fifth : Additional data ;

- impdp sys/passwd directory=data_dir dumpfile=expdp.dmp schemas=system table_exists_action=replace logfile=impdp.log;

--table_exists_action: What to do when the import object already exists . Valid keywords :SKIP,APPEND,REPLACE and TRUNCATE

Four 、 Parameter description

1、expdb

(1) Keywords and their descriptions

ATTACH Connect to an existing job , for example ATTACH [= Job name ].

COMPRESSION Reduce the size of the contents of the dump file , The valid keywords are The value is : ALL, (METADATA_ONLY), DATA_ONLY and NONE.

CONTENT Specify the data to unload , The valid keywords are The value is : (ALL), DATA_ONLY and METADATA_ONLY.

DATA_OPTIONS Data layer tags , The only valid value is : Use CLOB Format XML_CLOBS-write XML data type .

DIRECTORY Directory objects for dump and log files , Logical directory .

DUMPFILE Target dump file (expdp.dmp) A list of , for example DUMPFILE=expdp1.dmp, expdp2.dmp.

ENCRYPTION Encrypt some or all dump files , The valid keyword value is : ALL, DATA_ONLY, METADATA_ONLY,ENCRYPTED_COLUMNS_ONLY or NONE.

ENCRYPTION_ALGORITHM Specify how encryption should be done , The valid keyword value is : (AES128), AES192 and AES256.

ENCRYPTION_MODE Method of generating encryption key , The valid keyword value is : DUAL, PASSWORD and (TRANSPARENT).

ENCRYPTION_PASSWORD Password keyword used to create encrypted column data .

ESTIMATE Calculate job estimate , The valid keyword value is : (BLOCKS) and STATISTICS.

ESTIMATE_ONLY Calculate the job estimate without performing the export .

EXCLUDE Exclude specific object types , for example EXCLUDE=TABLE:EMP. example :EXCLUDE=[object_type]:[name_clause],[object_type]:[name_clause] .

FILESIZE Specifies the size of each dump file in bytes .

FLASHBACK_SCN Used to set the session snapshot back to its previous state SCN. -- Specifies to export a specific SCN Timetable data .

FLASHBACK_TIME Used to get the closest to the specified time SCN Time for .-- Export table data at a specific time point , Be careful FLASHBACK_SCN and FLASHBACK_TIME Can't be used at the same time .

FULL Export the entire database (N).

HELP Show help messages (N).

INCLUDE Include specific object types , for example INCLUDE=TABLE_DATA.

JOB_NAME The name of the export job to be created .

LOGFILE Log file name (export.log).

NETWORK_LINK The name of the remote database linked to the source system .

NOLOGFILE Do not write to log file (N).

PARALLEL Change the activity of the current job worker Number of .

PARFILE Specify the parameter file .

QUERY Predicate clause used to export a subset of the table .--QUERY = [schema.][table_name:] query_clause.

REMAP_DATA Specify the data conversion function , for example REMAP_DATA=EMP.EMPNO:REMAPPKG.EMPNO.

REUSE_DUMPFILES Overwrite target dump file ( If the file exists ) (N).

SAMPLE Percentage of data to be exported .

SCHEMAS List of schemes to export ( Login scheme ).

STATUS At the default value (0) Will display the new status when available , The frequency to be monitored ( In seconds ) Job status .

TABLES Identify the list of tables to export - There is only one plan .--[schema_name.]table_name[:partition_name][,…]

TABLESPACES Identifies the list of tablespaces to export .

TRANSPORTABLE Specifies whether transportable methods can be used , The valid keyword value is : ALWAYS, (NEVER).

TRANSPORT_FULL_CHECK Verify the storage segments of all tables (N).

TRANSPORT_TABLESPACES List of tablespaces from which to unload metadata .

VERSION The version of the object to export , The valid keywords are :(COMPATIBLE), LATEST Or any valid database version .(2) Commands and instructions

ADD_FILE Add dump files to the dump file set .

CONTINUE_CLIENT Return to recording mode . If idle , The job will be restarted .

EXIT_CLIENT Exit the client session and leave the job running .

FILESIZE follow-up ADD_FILE The default file size of the command ( byte ).

HELP Summarize the interactive commands .

KILL_JOB Separate and delete jobs .

PARALLEL Change the activity of the current job worker Number of .PARALLEL=<worker Number of >.

_DUMPFILES Overwrite target dump file ( If the file exists ) (N).

START_JOB start-up / Resume the current job .

STATUS At the default value (0) Will display the new status when available , The frequency to be monitored ( In seconds ) Job status .STATUS[=interval].

STOP_JOB Sequentially shut down the executed jobs and exit the client .STOP_JOB=IMMEDIATE Data pump operation will be shut down immediately .2、impdb

(1) Keywords and descriptions

ATTACH Connect to an existing job , for example ATTACH [= Job name ].

CONTENT Specify the data to unload , The valid keywords are The value is : (ALL), DATA_ONLY and METADATA_ONLY.

DATA_OPTIONS Data layer tags , The only valid value is :SKIP_CONSTRAINT_ERRORS- Constraint error is not serious .

DIRECTORY For dump files , Log files and sql The directory object used by the file , Logical directory .

DUMPFILE From you to (expdp.dmp) List of dump files imported in , for example DUMPFILE=expdp1.dmp, expdp2.dmp.

ENCRYPTION_PASSWORD Password keyword used to access encrypted column data . This parameter is not valid for network import job .

ESTIMATE Calculate job estimate , The valid keywords are :(BLOCKS) and STATISTICS.

EXCLUDE Exclude specific object types , for example EXCLUDE=TABLE:EMP.

FLASHBACK_SCN Used to set the session snapshot back to its previous state SCN.

FLASHBACK_TIME Used to get the closest to the specified time SCN Time for .

FULL Import all objects from source (Y).

HELP Show help messages (N).

INCLUDE Include specific object types , for example INCLUDE=TABLE_DATA.

JOB_NAME The name of the import job to create .

LOGFILE Log file name (import.log).

NETWORK_LINK The name of the remote database linked to the source system .

NOLOGFILE Do not write to log file .

PARALLEL Change the activity of the current job worker Number of .

PARFILE Specify the parameter file .

PARTITION_OPTIONS Specify how partitions should be converted , The valid keywords are :DEPARTITION,MERGE and (NONE).

QUERY Predicate clause used to import a subset of the table .

REMAP_DATA Specify the data conversion function , for example REMAP_DATA=EMP.EMPNO:REMAPPKG.EMPNO.

REMAP_DATAFILE In all DDL Redefine the data file reference in the statement .

REMAP_SCHEMA Load objects from one scheme into another .

REMAP_TABLE Remap table names to another table , for example REMAP_TABLE=EMP.EMPNO:REMAPPKG.EMPNO.

REMAP_TABLESPACE Remap a tablespace object to another tablespace .

REUSE_DATAFILES If the tablespace already exists , Initialize it (N).

SCHEMAS List of schemes to import .

SKIP_UNUSABLE_INDEXES Skip indexes set to useless index state .

SQLFILE Will all SQL DDL Write the specified file .

STATUS At the default value (0) Will display the new status when available , The frequency to be monitored ( In seconds ) Job status .

STREAMS_CONFIGURATION Enable loading of stream metadata .

TABLE_EXISTS_ACTION What to do when the import object already exists . Valid keywords :(SKIP),APPEND,REPLACE and TRUNCATE.

TABLES Identify the list of tables to import .

TABLESPACES Identify the list of tablespaces to import .

TRANSFORM Metadata transformations to apply to applicable objects . Valid conversion keywords are :SEGMENT_ATTRIBUTES,STORAGE,OID and PCTSPACE.

TRANSPORTABLE Options for selecting transportable data movement . Valid keywords are : ALWAYS and (NEVER). Only in NETWORK_LINK Valid in mode import operation .

TRANSPORT_DATAFILES List of data files imported in transportable mode .

TRANSPORT_FULL_CHECK Verify the storage segments of all tables (N).

TRANSPORT_TABLESPACES List of tablespaces from which to load metadata . Only in NETWORK_LINK Valid in mode import operation .

VERSION The version of the object to export , The valid keywords are :(COMPATIBLE), LATEST Or any valid database version . Only on NETWORK_LINK and SQLFILE It works .(2) Commands and instructions

CONTINUE_CLIENT Return to recording mode . If idle , The job will be restarted .

EXIT_CLIENT Exit the client session and leave the job running .

HELP Summarize the interactive commands .

KILL_JOB Separate and delete jobs .

PARALLEL Change the activity of the current job worker Number of .PARALLEL=<worker Number of >.

START_JOB start-up / Resume the current job .START_JOB=SKIP_CURRENT Any action performed when the job stops will be skipped before starting the job .

STATUS At the default value (0) Will display the new status when available , The frequency to be monitored ( In seconds ) Job status .STATUS[=interval].

STOP_JOB Sequentially shut down the executed jobs and exit the client .STOP_JOB=IMMEDIATE Data pump operation will be shut down immediately .5、 ... and 、 Error and handling

1、ORA-39112

Export normal , When importing data , Only some records and other data are successfully imported , The other part of the withdrawal ora 39112 error , After investigation, it is because the exported user data , The index of a table with partial records is in another table space , The space has not been created yet , So it leads to the failure .

resolvent : At the time of import , Add parameter :RANSFORM=segment_attributes:n , coordination table_exists_action=replace Parameters , Just import it again .

RANSFORM=segment_attributes:n At the time of import , The data will be imported into the default table space .

Add , Possible causes of this problem :

1、 In the original test library , The goal is schema Mutual authorization with other users , But you derived dmp Not all users are included in , When importing, the corresponding user did not create .

2、 Then there , Table space problem , The index of a table under the user in the test library is not in its default table space , So you have to be on the target side ( This is the production environment ), Create the corresponding table space ,

That is, if you put a The permission of a table under the user is granted to b, So you're putting a When the user pours the data pump into the production warehouse , He will check whether there are users in the production Library b. Do the same thing .2、ORA-39346

During the import process , Encounters an error " ORA-39346: data loss in character set conversion for object SCHEMA_EXPORT/PROCEDURE/PROCEDURE "

oracle The official description is as follows :

Description: data loss in character set conversion for object string

Cause: Oracle Data Pump import converted a metadata object from the export database character set into the target database character set prior to processing the object. Some characters could not be converted to the target database character set and so the default replacement character was used.

Action: No specific user action is required. This type of data loss can occur if the target database character set is not a superset of the export databases character set.

6、 ... and 、 One click script

Because the network segments of the old library and the new library are different , So it's not a one click migration script , Instead, export 、 The import operation is carried out separately . I wrote a script , Reduce repetitive labor , Students in need can refer to it

#!/bin/bash

#############################################################################

# Script function :

# Script path :

# Usage method : Script name Operation type parameter

#############################################################################

export NLS_LANG=american_america.AL32UTF8

export ORACLE_HOME=/u01/app/oracle/product/12.1./db_1

export ORACLE_SID=cyrtestdb

export PATH=$PATH:$ORACLE_HOME/bin:/usr/bin:/usr/local/bin:/bin:/usr/bin/X11:/usr/local/bin:.

v_date=`date +%Y%m%d`

v_logfile=`echo $(basename $) | awk -F "." '{print $1".log"}'` # Log file name : The script is in the directory , Script name .log

v_usr_1="" #Oracle user name 、 password

v_pwd_1=""

v_usr_2=""

v_pwd_2=""

v_db_instance="" # Database instance name

v_backup_dir="" #Oracle Backup directory ( Global variables )

v_oradir_name="" #

v_tmp_space1="" # Temporary table space 、 Table space 、 Index table space

v_tmp_space2=""

v_space1=""

v_space2=""

v_idx_space1=""

v_idx_space2=""

v_max_size="5120m" # Maximum value of tablespace data file

v_dblink="" #dblink name

# Log

record_log(){

echo -e `date '+%Y-%m-%d %H:%M:%S'` $ | tee -a ${v_logfile}

}

# User data export

exp_usrdata(){

v_exp_usr=$

v_exp_pwd=$

v_oradir_name=$

cd ${v_backup_dir}

[[ -f ${v_exp_usr}"_"${v_date}".dmp" ]] && rm -rf ${v_exp_usr}"_"${v_date}".dmp"

expdp ${v_exp_usr}/${v_exp_pwd} DIRECTORY=${v_oradir_name} DUMPFILE=${v_exp_usr}"_"${v_date}".dmp" SCHEMAS=${v_exp_usr} LOGFILE=${v_exp_usr}"_"${v_date}".log"

}

# Create a database backup directory on the target library

create_bankup_dir(){

# Create the physical path of the operating system

[[ -d ${v_backup_dir} ]] && mkdir -p ${v_backup_dir}

sqlplus -S / as sysdba >> ${v_logfile} <<EOF

set heading off feedback off verify off

spool tmp_space_flag.tmp

grant read,write on directory '${v_oradir_name}' to '${v_usr_1}','${v_usr_2}';

spool off

exit;

EOF

## If the current tablespace does not exist , Create , Otherwise, exit the current function

if [[ `grep ${v_oradir_name} tmp_space_flag.tmp | wc -l` -eq ]]; then

record_log " Create backup directory "$" Start "

sqlplus -S / as sysdba >> ${v_logfile} <<EOF

set heading off feedback off verify off

grant read,write on directory '${v_oradir_name}' to '${v_usr_1}','${v_usr_2}';

exit;

EOF

record_log " Create backup directory "$" end "

else

record_log " Create backup directory "$" Already exists "

return

fi

## Pay attention to cleaning the temporary flag file

[[ -f ./tmp_space_flag.tmp ]] && rm -rf tmp_space_flag.tmp

}

# Create tablespace

create_space(){

v_space_name=$

# Determine the type of tablespace ( Temporary or normal tablespaces )

if [[ `grep TMP $` -eq ]]; then

v_space_type="temporary"

else

v_space_type=""

fi

sqlplus -S / as sysdba >> ${v_logfile} <<EOF

set heading off feedback off verify off

create '${v_space_type}' tablespace '${v_space_name}' tempfile '${v_backup_dir}/${v_space_name}.dbf' size 50m autoextend on next 128k maxsize '${v_max_size}' extent management local;

exit;

EOF

}

# Determine whether the tablespace exists , If tablespace does not exist, create

deal_spaces(){

v_space_name=$

sqlplus -S / as sysdba <<EOF

set heading off feedback off verify off

spool tmp_space_flag.tmp

select tablespace_name from dba_tablespaces where tablespace_name='${v_space_name}';

spool off

exit;

EOF

## If the current tablespace does not exist , Create , Otherwise, exit the current function

if [[ `grep ${v_space_name} tmp_space_flag.tmp | wc -l` -eq ]]; then

record_log " Create tablespace "$" Start "

create_space ${v_space_name}

record_log " Create tablespace "$" end "

else

record_log " Table space "$" Already exists "

return

fi

## Pay attention to cleaning the temporary flag file

[[ -f ./tmp_space_flag.tmp ]] && rm -rf tmp_space_flag.tmp

}

# Create and empower users on the target library

create_usrs(){

v_create_usr=$ # Parameters 1: user name

v_create_pwd=$ # Parameters 2: password

v_create_tmp_space=$ # Parameters 3: Temporary table space name

v_create_space=$ # Parameters 4: Tablespace name

sqlplus -S / as sysdba >> ${v_logfile} <<EOF

set heading off feedback off verify off

create user '${v_create_usr}' identified by '${v_create_pwd}' default tablespace '${v_create_space}' temporary tablespace '${v_create_tmp_space}';

grant connect,resource to '${v_create_usr}';

grant exp_full_database to '${v_create_usr}';

grant imp_full_database to '${v_create_usr}';

grant unlimited tablespace to '${v_create_usr}';

exit;

EOF

}

# User data import

imp_usrdata(){

v_imp_usr=$

v_imp_pwd=$

v_oradir_name=$

impdp ${v_imp_usr}/${v_imp_pwd} DIRECTORY=${v_oradir_name} DUMPFILE=${v_imp_usr}"_"${v_date}".dmp" SCHEMAS=${v_imp_usr} LOGFILE=${v_imp_usr}"_"${v_date}".log" table_exists_action=replace RANSFORM=segment_attributes:n

}

# Delete user

drop_user(){

v_drop_usr=$

# Delete the user and all data under the user , Delete the tablespace and all data under the tablespace

sqlplus -S / as sysdba >> ${v_logfile} <<EOF

set heading off feedback off verify off

drop user '${v_drop_usr}' cascade;

exit

EOF

}

# Delete the tablespace and delete the data files under the tablespace

drop_tablespace(){

v_drop_space=$

# Delete the user and all data under the user , Delete the tablespace and all data under the tablespace

sqlplus -S / as sysdba >> ${v_logfile} <<EOF

set heading off feedback off verify off

drop tablespace '${v_drop_space}' including contents and datafiles;

exit

EOF

## Data files under the table space on the operating system

[[ -f ${v_backup_dir}/${v_drop_space}.dbf ]] && rm -rf ${v_backup_dir}/${v_drop_space}.dbf

}

# establish dblink

create_dblink(){

v_clink_usr=$

v_clink_pwd=$

# Create... As an administrator dblink Empowerment

sqlplus -S / as sysdba >> ${v_logfile} <<EOF

set heading off feedback off verify off

grant create database link to '${v_clink_usr}';

exit;

EOF

# Log in as a normal user to create dblink

sqlplus -S ${v_clink_usr}/${v_clink_pwd}@${v_db_instance} >> ${v_logfile} <<EOF

set heading off feedback off verify off

CREATE DATABASE LINK ${v_dblink} CONNECT TO '${v_clink_usr}' IDENTIFIED BY '${v_clink_pwd}' USING '{v_db_instance}';

exit

EOF

}

# Judge dblink Whether there is

deal_dblink(){

v_link=$

v_link_usr=$

v_link_pwd=$

sqlplus -S / as sysdba <<EOF

set heading off feedback off verify off

spool tmp_space_flag.tmp

select object_name from dba_objects where object_name = '${v_link}';

spool off

exit;

EOF

## If at present dblink non-existent , Create , Otherwise, exit the current function

if [[ `grep ${v_space_name} tmp_space_flag.tmp | wc -l` -eq ]]; then

record_log " establish "$" Start "

create_dblink ${v_link_usr} ${v_link_pwd}

record_log " establish "$" end "

else

record_log $" Already exists "

return

fi

## Pay attention to cleaning the temporary flag file

[[ -f ./tmp_space_flag.tmp ]] && rm -rf tmp_space_flag.tmp

}

# The main function

main(){

v_start=`date +%s`

if [[ $ -eq "exp" ]]; then

record_log "bl Library export started ..."

exp_usrdata ${v_usr_1} ${v_pwd_1} ${v_oradir_name}

record_log "bl End of Library export ..."

record_log "hx Library export started ..."

exp_usrdata ${v_usr_2} ${v_pwd_2} ${v_oradir_name}

record_log "hx End of Library export ..."

elif [[ $ -eq "pre" ]]; then

#、 Create backup directory

create_bankup_dir

#、 Create tablespace

for v_sp in ${v_tmp_space1} ${v_tmp_space2} ${v_space1} ${v_space2} ${v_idx_space1} ${v_idx_space2}; do

deal_spaces ${v_sp}

done

#、 Create user 、 Empowerment

record_log " Create user start ..."

create_usrs ${v_usr_1} ${v_pwd_1} ${v_tmp_space1} ${v_space1}

create_usrs ${v_usr_2} ${v_pwd_2} ${v_tmp_space2} ${v_space2}

record_log " End of user creation ..."

#、 by hx Library creation dblink

record_log " establish dblink Start ..."

deal_dblink ${v_dblink} ${v_usr_2} ${v_pwd_2}

record_log " establish dblink end ..."

elif [[ $ -eq "imp" ]]; then

record_log "bl Library import started ..."

imp_usrdata ${v_usr_1} ${v_pwd_1} ${v_oradir_name}

record_log "bl Library import finished ..."

record_log "hx Library import started ..."

imp_usrdata ${v_usr_2} ${v_pwd_2} ${v_oradir_name}

record_log "hx Library import finished ..."

elif [[ $ -eq "clean" ]]; then

read -t -p " Confirm clear 0- no 1- yes " v_num

if [[ ${v_num} -eq ]]; then

record_log " Clean up the data file ..."

for m in ${} ${}; do

drop_user ${m}

done

for n in ${v_tmp_space1} ${v_tmp_space2} ${v_space1} ${v_space2} ${v_idx_space1} ${v_idx_space2}; do

drop_tablespace ${n}

done

record_log " End of data file cleaning ..."

else

exit

fi

else

echo "Usage: sh script [exp|clean|pre|imp]"

exit

fi

v_end=`date +%s`

v_use_time=$[ v_end - v_start ]

record_log " The running time of this script :"${v_use_time}" second "

}

mainReference documents

Oracle Use EXPDP and IMPDP Data pump export and import method _ Database technology http://www.linuxidc.com/Linux/2017-09/146764.htm

ORA-39112: dependent object type comment skipped - CSDN Blog http://blog.csdn.net/zhengwei125/article/details/53670656

Oracle 12cR1 ORA-39346 data loss in character set conversion for object string https://www.oraexcel.com/oracle-12cR1-ORA-39346

from 11.2.0.4 Pour into 12c Report errors :ORA-39346 - Oracle 12c Chongqing Sizhuang Oracle Forum http://bbs.cqsztech.com/forum.php?mod=viewthread&tid=17282

版权声明

本文为[Learning is boundless, happy to make a boat]所创,转载请带上原文链接,感谢

https://yzsam.com/2022/04/202204231403594354.html

边栏推荐

- 捡起MATLAB的第(5)天

- Vim使用Vundle安装代码补全插件(YouCompleteMe)

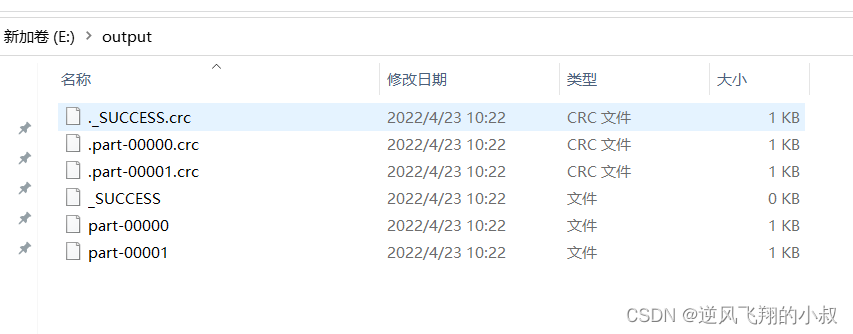

- Intersection, union and difference sets of spark operators

- Config learning notes component

- Oak-d raspberry pie cloud project [with detailed code]

- OAK-D树莓派点云项目【附详细代码】

- Meaning and usage of volatile

- Website pressure measurement tools Apache AB, webbench, Apache jemeter

- The most detailed knapsack problem!!!

- Nanny Anaconda installation tutorial

猜你喜欢

随机推荐

一文读懂串口及各种电平信号含义

TIA botu - basic operation

Method 2 of drawing ROC curve in R language: proc package

Cloud migration practice in the financial industry Ping An financial cloud integrates hypermotion cloud migration solution to provide migration services for customers in the financial industry

Compile, connect -- Notes

vim指定行注释和解注释

Gartner predicts that the scale of cloud migration will increase significantly; What are the advantages of cloud migration?

Unity shader learning

Leetcode-374 guess the size of the number

捡起MATLAB的第(6)天

[key points of final review of modern electronic assembly]

Nanny Anaconda installation tutorial

Master vscode remote GDB debugging

The system research problem that has plagued for many years has automatic collection tools, which are open source and free

面试题 17.10. 主要元素

捡起MATLAB的第(4)天

Meaning and usage of volatile

浅谈 NFT项目的价值、破发、收割之争

volatile的含义以及用法

MySQL的btree索引和hash索引区别

![Merging of Shanzhai version [i]](/img/e7/f301697aea879bcad8cef70ca890cc.png)