An idea for a rendering pipeline based on FBO

2022-04-23 19:13:00 【cjzcjl】

Suppose I want to implement the following two features:

1. Use a fragment shader that takes a YUV signal as texture input and converts it to RGB during sampling.

2. Pass the picture produced in step 1 through a fragment shader that applies a 3*3 convolution kernel to blur it.

There are then several possible schemes:

The first:

The fragment shader samples 3*3 coordinates per fragment, converts each sample and stores it in an array, convolves the array, and finally outputs the fragment color.

The second:

The fragment shader samples 1 coordinate per fragment and outputs the converted color directly, so the output ends up in the specified framebuffer. A second fragment shader then uses that framebuffer as its texture input and samples 3*3 coordinates per fragment to perform the convolution.
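The second scheme can be pictured on the CPU. The following is only a minimal sketch under stated assumptions, not the shader code from this article: the names `Yuv`, `Rgb`, `yuvToRgb`, `boxBlur3x3` and `convertThenBlur` are hypothetical, the BT.601 full-range coefficients are an assumption (the article does not say which YUV variant is used), and a plain box kernel stands in for whatever 3*3 kernel is wanted. The `intermediate` vector plays the role of the framebuffer texture that sits between the two passes.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

struct Yuv { float y, u, v; };   // values in the 0..255 range
struct Rgb { float r, g, b; };

// Pass 1: one sample per pixel, converted from YUV to RGB.
// Assumption: BT.601 full-range coefficients.
Rgb yuvToRgb(const Yuv& p) {
    const float cb = p.u - 128.0f;
    const float cr = p.v - 128.0f;
    return Rgb{p.y + 1.402f * cr,
               p.y - 0.344136f * cb - 0.714136f * cr,
               p.y + 1.772f * cb};
}

// Pass 2: 3*3 box blur that reads only from the intermediate image.
// Coordinates are clamped at the border, similar to GL_CLAMP_TO_EDGE.
std::vector<Rgb> boxBlur3x3(const std::vector<Rgb>& src, int w, int h) {
    assert(w > 0 && h > 0 && src.size() == static_cast<std::size_t>(w) * h);
    std::vector<Rgb> dst(src.size());
    for (int y = 0; y < h; ++y) {
        for (int x = 0; x < w; ++x) {
            Rgb sum{0.0f, 0.0f, 0.0f};
            for (int dy = -1; dy <= 1; ++dy) {
                for (int dx = -1; dx <= 1; ++dx) {
                    const int sx = std::min(std::max(x + dx, 0), w - 1);
                    const int sy = std::min(std::max(y + dy, 0), h - 1);
                    const Rgb& p = src[sy * w + sx];
                    sum.r += p.r;
                    sum.g += p.g;
                    sum.b += p.b;
                }
            }
            dst[y * w + x] = Rgb{sum.r / 9.0f, sum.g / 9.0f, sum.b / 9.0f};
        }
    }
    return dst;
}

// The two passes chained through an intermediate buffer.
std::vector<Rgb> convertThenBlur(const std::vector<Yuv>& src, int w, int h) {
    std::vector<Rgb> intermediate;   // stands in for the framebuffer texture
    intermediate.reserve(src.size());
    for (const Yuv& p : src) {
        intermediate.push_back(yuvToRgb(p));
    }
    return boxBlur3x3(intermediate, w, h);
}
```

Because the blur pass only sees an RGB image, it does not need to know that the source was YUV, which is what makes the passes independent of each other.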

The two schemes do a comparable amount of sampling and computation, but the first one clearly makes the single shader grow with every processing step you insert, and steps cannot be added or removed flexibly. If I want to build video editing software, the processing order might be 1, 2, 3, or it might be 3, 1, 2, and that kind of flexible arrangement is hard to achieve inside a single shader. Is there a better way?

After reading section 1.6 "Frame buffers and render buffers" and section 1.7 "Multiple render targets" of Wu Yafeng's "OpenGL ES 3.x Game Development, Volume II", I found the following OpenGL features that might be useful:

1. FBO (framebuffer object)

2. Mapping an FBO to a texture

These two features are also what so-called off-screen rendering depends on. With them, the image that one shader renders into a framebuffer can be taken and used as the texture input of the next processing step. The output of every step can be the texture input of the next, so the order and depth of shader processing can be arranged freely, much like assembling an OpenGL image-processing production line. This is far more flexible and makes multi-layer, multi-pipeline designs easier to implement, which suits video editing, multi-stage image processing and similar fields. My architecture design is as follows:

Every Layer can hold multiple identical or different shaderPrograms, in any order, as needed. Finally, all Layers are superimposed one by one into the final picture.
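The idea of a freely ordered list of processing steps can be sketched without any OpenGL at all. This is a minimal illustration, assuming each shader pass can be modelled as a function from image to image; `Image`, `Stage`, `runLayer`, `brighten` and `halve` are hypothetical names that do not appear in the actual code.

```cpp
#include <cassert>
#include <functional>
#include <vector>

using Image = std::vector<float>;
// Hypothetical stand-in for one shader pass: reads an image, returns a new one.
using Stage = std::function<Image(const Image&)>;

// Run the stages in list order; each stage's output is the next stage's input.
Image runLayer(const Image& input, const std::vector<Stage>& stages) {
    Image current = input;
    for (const Stage& stage : stages) {
        current = stage(current);
    }
    return current;
}

// Two toy stages, used only to show that reordering needs no change to the stages.
Image brighten(const Image& in) {
    Image out = in;
    for (float& v : out) v += 0.25f;
    return out;
}

Image halve(const Image& in) {
    Image out = in;
    for (float& v : out) v *= 0.5f;
    return out;
}
```

Calling `runLayer(img, {brighten, halve})` and `runLayer(img, {halve, brighten})` runs the same two stages in a different order, which is the flexibility a single fused shader cannot easily offer.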

The actual code is as follows:

First, the layer class, Layer.cpp:

//

// Created by jiezhuchen on 2021/7/5.

//

#include <GLES3/gl3.h>

#include <android/log.h>

#include "Layer.h"

#include "RenderProgram.h"

#include "shaderUtil.h"

using namespace OPENGL_VIDEO_RENDERER;

Layer::Layer(float x, float y, float z, float w, float h, int windowW, int windowH) {

mX = x;

mY = y;

mZ = z;

mWidth = w;

mHeight = h;

mWindowW = windowW;

mWindowH = windowH;

mRenderSrcData.data = nullptr;

createFrameBuffer();

createLayerProgram();

}

Layer::~Layer() {

destroy();

}

void Layer::initObjMatrix() {

// Create identity matrix

setIdentityM(mObjectMatrix, 0);

setIdentityM(mUserObjectMatrix, 0);

setIdentityM(mUserObjectRotateMatrix, 0);

}

void Layer::scale(float sx, float sy, float sz) {

scaleM(mObjectMatrix, 0, sx, sy, sz);

}

void Layer::translate(float dx, float dy, float dz) {

translateM(mObjectMatrix, 0, dx, dy, dz);

}

void Layer::rotate(int degree, float roundX, float roundY, float roundZ) {

rotateM(mObjectMatrix, 0, degree, roundX, roundY, roundZ);

}

/** The user directly sets the zoom amount **/

void Layer::setUserScale(float sx, float sy, float sz) {

mUserObjectMatrix[0] = sx;

mUserObjectMatrix[5] = sy;

mUserObjectMatrix[10] = sz;

}

/** The user directly sets the position offset **/

void Layer::setUserTransLate(float dx, float dy, float dz) { // OpenGL matrices are stored column-major, so the translation terms sit at indices 12, 13, 14 rather than 3, 7, 11

mUserObjectMatrix[12] = dx;

mUserObjectMatrix[13] = dy;

mUserObjectMatrix[14] = dz;

}

/** The user directly sets the rotation amount **/

void Layer::setUserRotate(float degree, float vecX, float vecY, float vecZ) {

setIdentityM(mUserObjectRotateMatrix, 0); // Recover first

rotateM(mUserObjectRotateMatrix, 0, degree, vecX, vecY, vecZ);

}

void Layer::locationTrans(float cameraMatrix[], float projMatrix[], int muMVPMatrixPointer) {

multiplyMM(mMVPMatrix, 0, mUserObjectMatrix, 0, mObjectMatrix, 0);

multiplyMM(mMVPMatrix, 0, mUserObjectRotateMatrix, 0, mMVPMatrix, 0);

multiplyMM(mMVPMatrix, 0, cameraMatrix, 0, mMVPMatrix, 0); // Multiply the camera matrix by the object matrix

multiplyMM(mMVPMatrix, 0, projMatrix, 0, mMVPMatrix, 0); // Multiply the projection matrix by the result matrix of the previous step

glUniformMatrix4fv(muMVPMatrixPointer, 1, false, mMVPMatrix); // Pass the final transformation relationship into the rendering pipeline

}

// Note: rendering into an FBO while sampling its own color texture modifies the texture being read, a circular dependency, so two framebuffers are used

void Layer::createFrameBuffer() {

int frameBufferCount = sizeof(mFrameBufferPointerArray) / sizeof(GLuint);

// Generate framebuffer

glGenFramebuffers(frameBufferCount, mFrameBufferPointerArray);

// Generate the render buffers

glGenRenderbuffers(frameBufferCount, mRenderBufferPointerArray);

// Generate the framebuffer color textures

glGenTextures(frameBufferCount, mFrameBufferTexturePointerArray);

// Initialize each framebuffer in turn

for (int i = 0; i < frameBufferCount; i++) {

// Bind the framebuffer; both framebuffers are initialized the same way

glBindFramebuffer(GL_FRAMEBUFFER, mFrameBufferPointerArray[i]);

// Bind the render buffer

glBindRenderbuffer(GL_RENDERBUFFER, mRenderBufferPointerArray[i]);

// Allocate storage (video memory) for the render buffer

glRenderbufferStorage(GL_RENDERBUFFER,

GL_DEPTH_COMPONENT16, mWindowW, mWindowH); // Depth buffer sized to the window width and height

glBindTexture(GL_TEXTURE_2D, mFrameBufferTexturePointerArray[i]); // Bind the color texture

glTexParameterf(GL_TEXTURE_2D,// Minification filter

GL_TEXTURE_MIN_FILTER, GL_LINEAR);

glTexParameterf(GL_TEXTURE_2D,// Magnification filter

GL_TEXTURE_MAG_FILTER, GL_LINEAR);

glTexParameterf(GL_TEXTURE_2D,// Wrap mode on the S axis

GL_TEXTURE_WRAP_S, GL_CLAMP_TO_EDGE);

glTexParameterf(GL_TEXTURE_2D,// Wrap mode on the T axis

GL_TEXTURE_WRAP_T, GL_CLAMP_TO_EDGE);

glTexImage2D// Allocate storage for the color attachment texture

(

GL_TEXTURE_2D,

0, // level

GL_RGBA, // Internal format

mWindowW, // Width

mWindowH, // Height

0, // Boundary width

GL_RGBA, // Format

GL_UNSIGNED_BYTE,// Data format per pixel

nullptr

);

glFramebufferTexture2D // Set the color buffer attachment of custom frame buffer

(

GL_FRAMEBUFFER,

GL_COLOR_ATTACHMENT0, // Color buffer attachment

GL_TEXTURE_2D,

mFrameBufferTexturePointerArray[i], // texture id

0 // level

);

glFramebufferRenderbuffer // Set the depth buffer attachment of custom frame buffer

(

GL_FRAMEBUFFER,

GL_DEPTH_ATTACHMENT, // Depth buffer attachment

GL_RENDERBUFFER, // Render buffer

mRenderBufferPointerArray[i] // Render depth buffer id

);

}

// Bind back to the system default framebuffer, Otherwise, nothing will be displayed

glBindFramebuffer(GL_FRAMEBUFFER, 0);// Bind frame buffer id

}

void Layer::createLayerProgram() {

char vertShader[] = GL_SHADER_STRING(

##version 300 es\n

uniform mat4 uMVPMatrix; // Combined rotate, translate and scale matrix; object positions are multiplied by it

in vec3 objectPosition; // Object position; used in the computation but not passed on to the fragment shader

in vec4 objectColor; // Vertex color

in vec2 vTexCoord; // Texture coordinates

out vec4 fragObjectColor;// Color value passed to the fragment shader

out vec2 fragVTexCoord;// Texture coordinates passed to the fragment shader

void main() {

gl_Position = uMVPMatrix * vec4(objectPosition, 1.0); // Set the vertex position

fragVTexCoord = vTexCoord; // No processing by default; pass the texture coordinates through

fragObjectColor = objectColor; // No processing by default; pass the color through

}

);

char fragShader[] = GL_SHADER_STRING(

##version 300 es\n

precision highp float;

uniform sampler2D textureFBO;// Texture input

in vec4 fragObjectColor;// Color value received from the vertex shader

in vec2 fragVTexCoord;// Texture coordinates received from the vertex shader

out vec4 fragColor;// Output fragment color

void main() {

vec4 color = texture(textureFBO, fragVTexCoord);// Sample the texture at the interpolated coordinates

color.a = color.a * fragObjectColor.a;// Use the vertex alpha to control the texture transparency

fragColor = color;

}

);

float ratio = (float) mWindowH / mWindowW;

float tempTexCoord[] = // Texture sampling coordinates, similar to canvas coordinates. Known issue: with this ordering the picture is upside down when the two framebuffers sample each other's textures

{

1.0, 0.0,

0.0, 0.0,

1.0, 1.0,

0.0, 1.0

};

memcpy(mTexCoor, tempTexCoord, sizeof(tempTexCoord));

float tempColorBuf[] = {

1.0, 1.0, 1.0, 1.0,

1.0, 1.0, 1.0, 1.0,

1.0, 1.0, 1.0, 1.0,

1.0, 1.0, 1.0, 1.0

};

memcpy(mColorBuf, tempColorBuf, sizeof(tempColorBuf));

float vertxData[] = {

mX + 2, mY, mZ,

mX, mY, mZ,

mX + 2, mY + ratio * 2, mZ,

mX, mY + ratio * 2, mZ,

};

memcpy(mVertxData, vertxData, sizeof(vertxData));

mLayerProgram = createProgram(vertShader + 1, fragShader + 1); // + 1 skips the placeholder character in front of #version

// Get the location of the vertex position attribute in the program

mObjectPositionPointer = glGetAttribLocation(mLayerProgram.programHandle, "objectPosition");

// Texture sampling coordinates

mVTexCoordPointer = glGetAttribLocation(mLayerProgram.programHandle, "vTexCoord");

// Get the location of the vertex color attribute in the program

mObjectVertColorArrayPointer = glGetAttribLocation(mLayerProgram.programHandle, "objectColor");

// Get the location of the combined transformation matrix uniform in the program

muMVPMatrixPointer = glGetUniformLocation(mLayerProgram.programHandle, "uMVPMatrix");

// Create identity matrix

initObjMatrix();

}

void Layer::destroy() {

//todo

}

void Layer::addRenderProgram(RenderProgram *program) {

mRenderProgramList.push_back(program);

}

void Layer::removeRenderProgram(RenderProgram *program) {

mRenderProgramList.remove(program);

}

/** Pass in the source data to be rendered **/ //todo: only have the first renderer call loadData to refresh its texture when the data has actually been updated, to save CPU; add a needRefresh flag

void Layer::loadData(char *data, int width, int height, int pixelFormat, int offset) {

mRenderSrcData.data = data;

mRenderSrcData.width = width;

mRenderSrcData.height = height;

mRenderSrcData.pixelFormat = pixelFormat;

mRenderSrcData.offset = offset;

}

/** @param texturePointer A texture that is already bound for rendering, or an FBO texture, so that the result of a previous layer can be processed further, for example to overlay pictures or apply a frosted-glass effect. More renderers can also be stacked inside one layer, but separate layers make it easier to overlap pictures of different sizes, since all renderers in one layer share the same size **/

void Layer::loadTexture(GLuint texturePointer, int width, int height) {

mRenderSrcTexture.texturePointer = texturePointer;

mRenderSrcTexture.width = width;

mRenderSrcTexture.height = height;

}

void Layer::drawLayerToFrameBuffer(float *cameraMatrix, float *projMatrix, GLuint outputFBOPointer, DrawType drawType) {

glUseProgram(mLayerProgram.programHandle);

glBindFramebuffer(GL_FRAMEBUFFER, outputFBOPointer);

// Save the object matrix so the scaling applied below can be undone afterwards

float objMatrixClone[16];

memcpy(objMatrixClone, mObjectMatrix, sizeof(objMatrixClone));

// In principle the x, y and z axes have equal unit density, but viewports differ between devices, so x and y may be stretched relative to each other and this has to be corrected:

/** How the aspect ratio of the picture is preserved:

* 0. Compute how much of the viewport the texture currently covers

* When the texture is finally mapped, the vertices are multiplied by the adjusted object scale matrix, which applies the correction

* **/

if (drawType == DRAW_DATA) {

float ratio =

mWindowW > mWindowH ? ((float) mWindowH / (float) mWindowW) : ((float) mWindowW /

(float) mWindowH); // Ratio of the viewport's short side to its long side, i.e. how the normalized -1~1 lengths of the X and Y axes relate to actual lengths

// Decide which side of the picture covers more of its viewport axis and let that side fill the axis. The other side is first scaled by the viewport short side / long side ratio, at which point a picture of any aspect ratio is mapped to the same rectangle, and is then multiplied by the picture's own proportions, which restores the aspect ratio of the picture when the texture is rendered

float widthPercentage = (float) mRenderSrcData.width / (float) mWindowW;

float heightPercentage = (float) mRenderSrcData.height / (float) mWindowH;

if (widthPercentage > heightPercentage) { // The width covers more: stretch the width fully, rescale the height to uniform unit density using the viewport ratio, then scale the height by the picture's height / width ratio

scale(1.0, ratio * ((float) mRenderSrcData.height / mRenderSrcData.width), 1.0); // Y scale = picture height / width * viewport ratio

} else {

scale(ratio * ((float) mRenderSrcData.width / mRenderSrcData.height), 1.0, 1.0);

}

} else {

float ratio =

mWindowW > mWindowH ? ((float) mWindowH / (float) mWindowW) : ((float) mWindowW /

(float) mWindowH); // Ratio of the viewport's short side to its long side, i.e. how the normalized -1~1 lengths of the X and Y axes relate to actual lengths

// Decide which side of the picture covers more of its viewport axis and let that side fill the axis. The other side is first scaled by the viewport short side / long side ratio, at which point a picture of any aspect ratio is mapped to the same rectangle, and is then multiplied by the picture's own proportions, which restores the aspect ratio of the picture when the texture is rendered

float widthPercentage = (float) mRenderSrcTexture.width / (float) mWindowW;

float heightPercentage = (float) mRenderSrcTexture.height / (float) mWindowH;

if (widthPercentage > heightPercentage) {

scale(1.0, ratio * ((float) mRenderSrcTexture.height / mRenderSrcTexture.width), 1.0);

// Several other successful algorithms :

// scale(1.0, ((float) (mRenderSrcTexture.width * (mWindowH / mRenderSrcTexture.height)) / (float) mWindowW) * ratio, 1.0);

// scale(1.0, ((float) mWindowW / mWindowH) * ((float) mRenderSrcTexture.height / mRenderSrcTexture.width) * (1 / ratio), 1.0);

// scale(1.0, ((float) mRenderSrcTexture.height / mWindowH) * ((float) mWindowW / mRenderSrcTexture.width) * (ratio), 1.0);

} else {

scale(ratio * ((float) mRenderSrcTexture.width / mRenderSrcTexture.height), 1.0, 1.0); // Proportional : Containers w * texture

}

}

// Object coordinates * Scale translation rotation matrix -> Apply the effect of scaling the picture

locationTrans(cameraMatrix, projMatrix, muMVPMatrixPointer);

// Restore zoom scene

memcpy(mObjectMatrix, objMatrixClone, sizeof(mObjectMatrix));

// /** Realize two Framebuffer The picture is superimposed , Here's an explanation :

// * If it's an even number of renderers , So after alternate rendering , So the first 0 individual FBO The picture is the previous picture , The first 1 individual FBO For the latest picture , So first draw the second 0 individual FBO The content is superimposed with the first

// * Otherwise, it is after alternation , The first 1 The first renderer is the last picture , The first 0 individual FBO It's the last picture , The stacking order needs to be changed **/

// for(int i = 0; i < 2; i ++) {

// glActiveTexture(GL_TEXTURE0);

// if (mRenderProgramList.size() % 2 == 0) {

// setUserScale(0.5, 0.5, 0);

// setUserTransLate(-0.5, 0, 0);

// glBindTexture(GL_TEXTURE_2D, mFrameBufferTexturePointerArray[i]);

// } else {

// setUserScale(0.5, 0.5, 0);

// setUserTransLate(0.5, 0, 0);

// glBindTexture(GL_TEXTURE_2D, mFrameBufferTexturePointerArray[1 - i]);

// }

// glUniform1i(glGetUniformLocation(mLayerProgram.programHandle, "textureFBO"), 0); // Get the pointer of texture attribute

// // Feed vertex position data into rendering pipeline

// glVertexAttribPointer(mObjectPositionPointer, 3, GL_FLOAT, false, 0, mVertxData); // Three dimensional vector ,size by 2

// // Send vertex color data to the rendering pipeline

// glVertexAttribPointer(mObjectVertColorArrayPointer, 4, GL_FLOAT, false, 0, mColorBuf);

// // Transfer vertex texture coordinate data into the rendering pipeline

// glVertexAttribPointer(mVTexCoordPointer, 2, GL_FLOAT, false, 0, mTexCoor); // Two dimensional vector ,size by 2

// glEnableVertexAttribArray(mObjectPositionPointer); // Turn on vertex properties

// glEnableVertexAttribArray(mObjectVertColorArrayPointer); // Enable color properties

// glEnableVertexAttribArray(mVTexCoordPointer); // Enable texture sampling to locate coordinates

// glDrawArrays(GL_TRIANGLE_STRIP, 0, 4); // Draw lines , Added point Floating point numbers /3 Is the coordinate number ( Because a coordinate consists of x,y,z3 individual float constitute , Can't be used directly )

// glDisableVertexAttribArray(mObjectPositionPointer);

// glDisableVertexAttribArray(mObjectVertColorArrayPointer);

// glDisableVertexAttribArray(mVTexCoordPointer);

// }

/** With an even number of fragShaderPrograms the final picture ends up in FBO_1; with an odd number it ends up in FBO_0. So only that one FBO needs to be drawn, rather than drawing both FBOs in two passes **/

glActiveTexture(GL_TEXTURE0);

if (mRenderProgramList.size() % 2 == 0) {

//setUserScale(0.5, 0.5, 0); // Test code

//setUserTransLate(-0.5, 0, 0); // Test code

glBindTexture(GL_TEXTURE_2D, mFrameBufferTexturePointerArray[1]);

} else {

//setUserScale(0.5, 0.5, 0); // Test code

//setUserTransLate(0.5, 0, 0); // Test code

glBindTexture(GL_TEXTURE_2D, mFrameBufferTexturePointerArray[0]);

}

glUniform1i(glGetUniformLocation(mLayerProgram.programHandle, "textureFBO"), 0); // Look up the sampler uniform and point it at texture unit 0, the active texture unit

// Feed vertex position data into the rendering pipeline

glVertexAttribPointer(mObjectPositionPointer, 3, GL_FLOAT, false, 0, mVertxData); // Three-dimensional vectors, size is 3

// Send vertex color data to the rendering pipeline

glVertexAttribPointer(mObjectVertColorArrayPointer, 4, GL_FLOAT, false, 0, mColorBuf);

// Transfer vertex texture coordinate data into the rendering pipeline

glVertexAttribPointer(mVTexCoordPointer, 2, GL_FLOAT, false, 0, mTexCoor); // Two-dimensional vectors, size is 2

glEnableVertexAttribArray(mObjectPositionPointer); // Enable the vertex position attribute

glEnableVertexAttribArray(mObjectVertColorArrayPointer); // Enable the color attribute

glEnableVertexAttribArray(mVTexCoordPointer); // Enable the texture coordinate attribute

glDrawArrays(GL_TRIANGLE_STRIP, 0, 4); // Draw the quad as a triangle strip of 4 vertices. The count is in vertices, i.e. the number of floats / 3, because each position consists of 3 floats (x, y, z)

glDisableVertexAttribArray(mObjectPositionPointer);

glDisableVertexAttribArray(mObjectVertColorArrayPointer);

glDisableVertexAttribArray(mVTexCoordPointer);

}

/** Process step by step and draw

* @param cameraMatrix Camera matrix: positions the observer and sets the viewing rotation and direction

* @param projMatrix Projection matrix: determines how 3D objects are projected onto the screen **/ //todo: only have the first renderer call loadData to refresh its texture when the data has been updated, to save CPU

void

Layer::drawTo(float *cameraMatrix, float *projMatrix, GLuint outputFBOPointer, int fboW, int fboH, DrawType drawType) {

// Clear whatever is left over in the two framebuffers

for (int i = 0; i < 2; i++) {

glBindFramebuffer(GL_FRAMEBUFFER, mFrameBufferPointerArray[i]);

glClear(GL_DEPTH_BUFFER_BIT | GL_COLOR_BUFFER_BIT); // Clean the screen

}

int i = 0;

/** Renderer 0 takes the layer data as input and renders its result into FBO[0]. Renderer 1 takes FBO_texture[0] as texture input and renders into FBO[1].

* Renderer 2 takes FBO_texture[1] as texture input and renders into FBO[0]. Outputs and inputs keep alternating like this, so the effects stack up.

* Two FBOs are bound alternately because some shader algorithms misbehave when the FBO_texture they sample belongs to the FBO they are rendering into **/

for (auto item = mRenderProgramList.begin(); item != mRenderProgramList.end(); item++, i++) {

// The framebuffer that receives the drawing and the framebuffer used as texture input must not be the same

int layerFrameBuffer = i % 2 == 0 ? mFrameBufferPointerArray[0] : mFrameBufferPointerArray[1];

int fboTexture = i % 2 == 1 ? mFrameBufferTexturePointerArray[0] : mFrameBufferTexturePointerArray[1];

// The first renderer takes the layer's raw data (a byte array, or a texture such as an OES texture); every other renderer takes the previous rendering result as input

if (i == 0) {

switch (drawType) {

case DRAW_DATA: {

if (mRenderSrcData.data != nullptr) {

(*item)->loadData(mRenderSrcData.data, mRenderSrcData.width,

mRenderSrcData.height,

mRenderSrcData.pixelFormat, mRenderSrcData.offset);

// The renderer processes the results into layers FBO in

(*item)->drawTo(cameraMatrix, projMatrix, RenderProgram::DRAW_DATA,

layerFrameBuffer, mWindowW, mWindowH);

}

break;

}

case DRAW_TEXTURE: {

Textures texture;

texture.texturePointers = mRenderSrcTexture.texturePointer;

texture.width = mRenderSrcTexture.width;

texture.height = mRenderSrcTexture.height;

Textures textures[1];

textures[0] = texture;

(*item)->loadTexture(textures);

// The renderer processes the results into layers FBO in

(*item)->drawTo(cameraMatrix, projMatrix, RenderProgram::DRAW_TEXTURE,

layerFrameBuffer, mWindowW, mWindowH);

break;

}

}

} else { // With a single renderer this branch must not be taken; otherwise renderer 0 would wrongly take the layer's own FBO contents as its input

// Use the result the previous renderer saved to the FBO, i.e. FBO_texture, as texture input for further processing

Textures t[1];

t[0].texturePointers = fboTexture;

t[0].width = mWindowW;

t[0].height = mWindowH;

(*item)->loadTexture(t); // Use the rendering result of the previous renderer as the drawing input

// The renderer draws its result into the layer's FBO

(*item)->drawTo(cameraMatrix, projMatrix, RenderProgram::DRAW_TEXTURE,

layerFrameBuffer, mWindowW, mWindowH);

}

}

// Finally, render to the target framebuffer

drawLayerToFrameBuffer(cameraMatrix, projMatrix, outputFBOPointer, drawType);

// Rendering Statistics

mFrameCount++;

}

createFrameBuffer creates two FBO objects, borrowing the idea of double buffering. While the current fragShaderProgram is rendering, FBO_0 holds the result of the previous fragShaderProgram and serves as the texture input of the current one, and FBO_1 receives the current program's result. For the next fragShaderProgram, FBO_1 becomes the input and FBO_0 the output, and so on, swapping on every render pass. This is how multiple effects are applied within a single layer.
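The alternation can be written down as a small index calculation. This is a sketch that mirrors the `i % 2` logic in `Layer::drawTo` and the parity check in `drawLayerToFrameBuffer`; the names `PassTargets`, `targetsForPass` and `finalFboIndex` are hypothetical and do not exist in the actual code.

```cpp
#include <cassert>

struct PassTargets {
    int outputFbo;     // index of the FBO this pass renders into
    int inputTexture;  // index of the FBO texture it samples, or -1 for the layer's source data
};

// Pass i writes into FBO[i % 2]. Every pass after the first reads the
// texture of the other FBO, so a pass never samples its own render target.
PassTargets targetsForPass(int passIndex) {
    assert(passIndex >= 0);
    PassTargets t;
    t.outputFbo = passIndex % 2;
    t.inputTexture = (passIndex == 0) ? -1 : 1 - t.outputFbo;
    return t;
}

// Index of the FBO that holds the final picture after passCount passes.
int finalFboIndex(int passCount) {
    assert(passCount >= 1);
    return (passCount - 1) % 2;
}
```

With three passes, for instance, the outputs go to FBO 0, FBO 1, FBO 0, and the layer then draws the texture of FBO 0 to its target.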

The drawTo method accepts the FBO that should receive the layer's content. The default is FBO 0, the one that GLSurfaceView binds to the view when it creates the EGLContext, so that the content is presented on the screen. The more important part of the code is as follows:

for (auto item = mRenderProgramList.begin(); item != mRenderProgramList.end(); item++, i++) {

// The framebuffer that receives the drawing and the framebuffer used as texture input must not be the same

int layerFrameBuffer = i % 2 == 0 ? mFrameBufferPointerArray[0] : mFrameBufferPointerArray[1];

int fboTexture = i % 2 == 1 ? mFrameBufferTexturePointerArray[0] : mFrameBufferTexturePointerArray[1];

// The first renderer takes the layer's raw data (a byte array, or a texture such as an OES texture); every other renderer takes the previous rendering result as input

if (i == 0) {

switch (drawType) {

case DRAW_DATA: {

if (mRenderSrcData.data != nullptr) {

(*item)->loadData(mRenderSrcData.data, mRenderSrcData.width,

mRenderSrcData.height,

mRenderSrcData.pixelFormat, mRenderSrcData.offset);

// The renderer processes the results into layers FBO in

(*item)->drawTo(cameraMatrix, projMatrix, RenderProgram::DRAW_DATA,

layerFrameBuffer, mWindowW, mWindowH);

}

break;

}

case DRAW_TEXTURE: {

Textures texture;

texture.texturePointers = mRenderSrcTexture.texturePointer;

texture.width = mRenderSrcTexture.width;

texture.height = mRenderSrcTexture.height;

Textures textures[1];

textures[0] = texture;

(*item)->loadTexture(textures);

// The renderer processes the results into layers FBO in

(*item)->drawTo(cameraMatrix, projMatrix, RenderProgram::DRAW_TEXTURE,

layerFrameBuffer, mWindowW, mWindowH);

break;

}

}

} else { // With a single renderer this branch must not be taken; otherwise renderer 0 would wrongly take the layer's own FBO contents as its input

// Use the result the previous renderer saved to the FBO, i.e. FBO_texture, as texture input for further processing

Textures t[1];

t[0].texturePointers = fboTexture;

t[0].width = mWindowW;

t[0].height = mWindowH;

(*item)->loadTexture(t); // Use the rendering result of the previous renderer as the drawing input

// The renderer draws its result into the layer's FBO

(*item)->drawTo(cameraMatrix, projMatrix, RenderProgram::DRAW_TEXTURE,

layerFrameBuffer, mWindowW, mWindowH);

}

}

As mentioned when introducing createFrameBuffer, a double-buffer mechanism is used, so depending on whether the number of fragShaderPrograms is odd or even, the final picture rendered by the layer may end up in a different framebuffer. For example, if the layer's rendering pipeline has 3 fragShaderPrograms, the picture is first rendered to Framebuffer0; then Framebuffer0 is used as input and the result goes to Framebuffer1; then Framebuffer1 is used as input and the result goes to Framebuffer0. This rotation lets the rendering effects of the fragShaderPrograms stack up one after another.

The RenderProgram base class is implemented as follows:

//

// Created by jiezhuchen on 2021/6/21.

//

#include <GLES3/gl3.h>

#include <GLES3/gl3ext.h>

#include "RenderProgram.h"

#include "matrix.c"

#include "shaderUtil.c"

using namespace OPENGL_VIDEO_RENDERER;

void RenderProgram::initObjMatrix() {

// Create identity matrix

setIdentityM(mObjectMatrix, 0);

}

void RenderProgram::scale(float sx, float sy, float sz) {

scaleM(mObjectMatrix, 0, sx, sy, sz);

}

void RenderProgram::translate(float dx, float dy, float dz) {

translateM(mObjectMatrix, 0, dx, dy, dz);

}

void RenderProgram::rotate(int degree, float roundX, float roundY, float roundZ) {

rotateM(mObjectMatrix, 0, degree, roundX, roundY, roundZ);

}

float* RenderProgram::getObjectMatrix() {

return mObjectMatrix;

}

void RenderProgram::setObjectMatrix(float objMatrix[]) {

memcpy(mObjectMatrix, objMatrix, sizeof(mObjectMatrix));

}

void RenderProgram::locationTrans(float cameraMatrix[], float projMatrix[], int muMVPMatrixPointer) {

multiplyMM(mMVPMatrix, 0, cameraMatrix, 0, mObjectMatrix, 0); // Multiply the camera matrix by the object matrix

multiplyMM(mMVPMatrix, 0, projMatrix, 0, mMVPMatrix, 0); // Multiply the projection matrix by the result matrix of the previous step

glUniformMatrix4fv(muMVPMatrixPointer, 1, false, mMVPMatrix); // Pass the final transformation relationship into the rendering pipeline

}

You then inherit from it and implement your own rendering pipeline as needed. An example (a LUT filter renderer):

//

// Created by jiezhuchen on 2021/6/21.

//

#include <GLES3/gl3.h>

#include <GLES3/gl3ext.h>

#include <string.h>

#include <jni.h>

#include <cstdlib>

#include "RenderProgramFilter.h"

#include "android/log.h"

using namespace OPENGL_VIDEO_RENDERER;

static const char *TAG = "nativeGL";

#define LOGI(fmt, args...) __android_log_print(ANDROID_LOG_INFO, TAG, fmt, ##args)

#define LOGD(fmt, args...) __android_log_print(ANDROID_LOG_DEBUG, TAG, fmt, ##args)

#define LOGE(fmt, args...) __android_log_print(ANDROID_LOG_ERROR, TAG, fmt, ##args)

RenderProgramFilter::RenderProgramFilter() {

vertShader = GL_SHADER_STRING(

$#version 300 es\n

uniform mat4 uMVPMatrix; // Combined rotate, translate and scale matrix; object positions are multiplied by it

in vec3 objectPosition; // Object position; used in the computation but not passed on to the fragment shader

in vec4 objectColor; // Vertex color

in vec2 vTexCoord; // Texture coordinates

out vec4 fragObjectColor;// Color value passed to the fragment shader

out vec2 fragVTexCoord;// Texture coordinates passed to the fragment shader

void main() {

gl_Position = uMVPMatrix * vec4(objectPosition, 1.0); // Set the vertex position

fragVTexCoord = vTexCoord; // No processing by default; pass the texture coordinates through

fragObjectColor = objectColor; // No processing by default; pass the color through

}

);

fragShader = GL_SHADER_STRING(

##version 300 es\n

precision highp float;

precision highp sampler2DArray;

uniform sampler2D sTexture;// Image texture input

uniform sampler2DArray lutTexture;// Filter texture input

uniform float pageSize;

uniform float frame;// Frame number

uniform vec2 resolution;// Resolution

in vec4 fragObjectColor;// Color value received from the vertex shader

in vec2 fragVTexCoord;// Texture coordinates received from the vertex shader

out vec4 fragColor;// Output fragment color

void main() {

vec4 srcColor = texture(sTexture, fragVTexCoord);

srcColor.r = clamp(srcColor.r, 0.01, 0.99);

srcColor.g = clamp(srcColor.g, 0.01, 0.99);

srcColor.b = clamp(srcColor.b, 0.01, 0.99);

fragColor = texture(lutTexture, vec3(srcColor.b, srcColor.g, srcColor.r * (pageSize - 1.0)));

}

);

float tempTexCoord[] = // Texture sampling coordinates, similar to canvas coordinates. Known issue: with this ordering the picture is upside down when the two framebuffers sample each other's textures

{

1.0, 0.0,

0.0, 0.0,

1.0, 1.0,

0.0, 1.0

};

memcpy(mTexCoor, tempTexCoord, sizeof(tempTexCoord));

float tempColorBuf[] = {

1.0, 1.0, 1.0, 1.0,

1.0, 1.0, 1.0, 1.0,

1.0, 1.0, 1.0, 1.0,

1.0, 1.0, 1.0, 1.0

};

memcpy(mColorBuf, tempColorBuf, sizeof(tempColorBuf));

}
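The comment on the texture coordinates above notes that chained framebuffers come out upside down. A texture uploaded with glTexImage2D stores its first data row at t = 0, while a texture rendered through an FBO has the bottom of the rendered image at t = 0, so the two kinds of input need opposite vertical mappings. One possible workaround, sketched here as an assumption and not as the author's fix, is to keep a second coordinate set with t mirrored and pick the set according to the input type. The helper name flipTexCoordV is hypothetical.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical helper: copies `count` (s, t) pairs and mirrors t around 0.5,
// turning a bottom-up mapping into a top-down one (and vice versa).
inline void flipTexCoordV(const float *in, float *out, std::size_t count) {
    assert(in != nullptr && out != nullptr);
    for (std::size_t i = 0; i < count; ++i) {
        out[2 * i] = in[2 * i];                // s is unchanged
        out[2 * i + 1] = 1.0f - in[2 * i + 1]; // t is mirrored
    }
}
```

Applied to the four coordinate pairs of tempTexCoord, this yields the mirrored set that could be used for one of the two input types.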

RenderProgramFilter::~RenderProgramFilter() {

destroy();

}

void RenderProgramFilter::createRender(float x, float y, float z, float w, float h, int windowW,

int windowH) {

mWindowW = windowW;

mWindowH = windowH;

initObjMatrix(); // Initialize the object matrix to the identity matrix; otherwise the later matrix operations multiply by 0 and have no effect

float vertxData[] = {

x + w, y, z,

x, y, z,

x + w, y + h, z,

x, y + h, z,

};

memcpy(mVertxData, vertxData, sizeof(vertxData));

mImageProgram = createProgram(vertShader + 1, fragShader + 1); // + 1 skips the first '#' of "##version" in the shader string

// Get the location of the vertex position attribute in the program

mObjectPositionPointer = glGetAttribLocation(mImageProgram.programHandle, "objectPosition");

// Get the location of the texture sampling coordinate attribute

mVTexCoordPointer = glGetAttribLocation(mImageProgram.programHandle, "vTexCoord");

// Get the location of the vertex color attribute

mObjectVertColorArrayPointer = glGetAttribLocation(mImageProgram.programHandle, "objectColor");

// Get the location of the combined transformation matrix uniform

muMVPMatrixPointer = glGetUniformLocation(mImageProgram.programHandle, "uMVPMatrix");

// Render mode selector: 0 for lines, 1 for texture

mGLFunChoicePointer = glGetUniformLocation(mImageProgram.programHandle, "funChoice");

// Location of the rendered frame counter

mFrameCountPointer = glGetUniformLocation(mImageProgram.programHandle, "frame");

// Location of the resolution uniform, which tells the shader the current resolution

mResoulutionPointer = glGetUniformLocation(mImageProgram.programHandle, "resolution");

}

void RenderProgramFilter::setAlpha(float alpha) {

if (mColorBuf != nullptr) {

for (int i = 3; i < sizeof(mColorBuf) / sizeof(float); i += 4) {

mColorBuf[i] = alpha;

}

}

}
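setAlpha counts the floats with sizeof(mColorBuf) / sizeof(float), which only gives the element count if mColorBuf is declared as an array member; for a pointer, sizeof would return the pointer size instead. A minimal sketch that takes the length explicitly, assuming the RGBA float layout used by tempColorBuf (the function name setAlphaRGBA is hypothetical):

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical helper: writes `alpha` into every 4th float (the A of RGBA).
// `floatCount` is the total number of floats, passed explicitly so the helper
// works for arrays and heap buffers alike.
inline void setAlphaRGBA(float *colors, std::size_t floatCount, float alpha) {
    assert(floatCount % 4 == 0); // the buffer must hold whole RGBA quadruples
    if (colors == nullptr) {
        return;
    }
    for (std::size_t i = 3; i < floatCount; i += 4) {
        colors[i] = alpha;
    }
}
```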

//todo

void RenderProgramFilter::setBrightness(float brightness) {

}

//todo

void RenderProgramFilter::setContrast(float contrast) {

}

//todo

void RenderProgramFilter::setWhiteBalance(float redWeight, float greenWeight, float blueWeight) {

}

void RenderProgramFilter::loadData(char *data, int width, int height, int pixelFormat, int offset) {

if (!mIsTexutresInited) {

glUseProgram(mImageProgram.programHandle);

glGenTextures(1, mTexturePointers);

mGenTextureId = mTexturePointers[0];

mIsTexutresInited = true;

}

// Bind the texture, set its sampling parameters and upload the pixel data

glBindTexture(GL_TEXTURE_2D, mGenTextureId);

glTexParameterf(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_NEAREST);

glTexParameterf(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);

glTexParameterf(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_CLAMP_TO_EDGE);

glTexParameterf(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_CLAMP_TO_EDGE);

glTexImage2D(GL_TEXTURE_2D, 0, pixelFormat, width, height, 0, pixelFormat, GL_UNSIGNED_BYTE, (void*) (data + offset));

mDataWidth = width;

mDataHeight = height;

}

/** @param textures The textures to render; these can be the result of a previous pass, for example an FBO texture that has already been processed. **/

void RenderProgramFilter::loadTexture(Textures textures[]) {

mInputTexturesArrayPointer = textures[0].texturePointers;

mInputTextureWidth = textures[0].width;

mInputTextureHeight = textures[0].height;

}

/** Set the LUT filter **/

void RenderProgramFilter::loadLut(char* lutPixels, int lutWidth, int lutHeight, int unitLength) { // Textures can only be updated on the GL thread, so here we only store the data; drawTo uploads it later

mLutWidth = lutWidth;

mLutHeight = lutHeight;

mLutUnitLen = unitLength;

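free(mLutPixels); // Release LUT data that was stored earlier but never uploaded (free(nullptr) is a no-op)

mLutPixels = (char*) malloc(mLutWidth * mLutHeight * 4);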

memcpy(mLutPixels, lutPixels, mLutWidth * mLutHeight * 4);

}
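loadLut only stores the pixels because texture uploads have to happen on the thread that owns the GL context; drawTo performs the actual upload later. A minimal sketch of that hand-off pattern follows. The mutex is an added assumption for the case where loadLut is called from a different thread, and the class PendingPixels with its members is hypothetical and not part of the demo.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <mutex>
#include <utility>
#include <vector>

// Hypothetical holder for RGBA pixel data waiting to be uploaded on the GL thread.
class PendingPixels {
public:
    // Callable from any thread: replaces whatever was pending, so nothing leaks.
    void submit(const std::uint8_t *pixels, int width, int height) {
        assert(pixels != nullptr && width > 0 && height > 0);
        std::lock_guard<std::mutex> lock(mMutex);
        mPixels.assign(pixels, pixels + static_cast<std::size_t>(width) * height * 4);
        mWidth = width;
        mHeight = height;
        mHasData = true;
    }

    // Called on the GL thread: moves the pending pixels out exactly once.
    // Returns false when nothing new has been submitted.
    bool take(std::vector<std::uint8_t> &out, int &width, int &height) {
        std::lock_guard<std::mutex> lock(mMutex);
        if (!mHasData) {
            return false;
        }
        out = std::move(mPixels);
        mPixels.clear();
        width = mWidth;
        height = mHeight;
        mHasData = false;
        return true;
    }

private:
    std::mutex mMutex;
    std::vector<std::uint8_t> mPixels;
    int mWidth = 0;
    int mHeight = 0;
    bool mHasData = false;
};
```

On the GL thread, the draw function would call take() and, only when it returns true, pass out.data() to glTexImage3D.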

/** @param outputFBOPointer The framebuffer to draw into; the system default framebuffer is 0. **/

void RenderProgramFilter::drawTo(float *cameraMatrix, float *projMatrix, DrawType drawType, int outputFBOPointer, int fboW, int fboH) {

if (mIsDestroyed) {

return;

}

glUseProgram(mImageProgram.programHandle);

// Bind the target framebuffer and set the viewport size and position

glBindFramebuffer(GL_FRAMEBUFFER, outputFBOPointer);

glViewport(0, 0, mWindowW, mWindowH);

glUniform1i(mGLFunChoicePointer, 1);

// Pass in the combined transformation matrix

locationTrans(cameraMatrix, projMatrix, muMVPMatrixPointer);

// Start rendering :

if (mVertxData != nullptr && mColorBuf != nullptr) {

// Feed vertex position data into the rendering pipeline

glVertexAttribPointer(mObjectPositionPointer, 3, GL_FLOAT, false, 0, mVertxData); // 3D vector, so size is 3

// Feed vertex color data into the rendering pipeline

glVertexAttribPointer(mObjectVertColorArrayPointer, 4, GL_FLOAT, false, 0, mColorBuf);

// Feed vertex texture coordinate data into the rendering pipeline

glVertexAttribPointer(mVTexCoordPointer, 2, GL_FLOAT, false, 0, mTexCoor); // 2D vector, so size is 2

glEnableVertexAttribArray(mObjectPositionPointer); // Enable the position attribute

glEnableVertexAttribArray(mObjectVertColorArrayPointer); // Enable the color attribute

glEnableVertexAttribArray(mVTexCoordPointer); // Enable the texture coordinate attribute

float resolution[2];

switch (drawType) {

case OPENGL_VIDEO_RENDERER::RenderProgram::DRAW_DATA:

glActiveTexture(GL_TEXTURE0); // Activate texture unit 0

glBindTexture(GL_TEXTURE_2D, mGenTextureId); // Bind the uploaded data texture to unit 0

glUniform1i(glGetUniformLocation(mImageProgram.programHandle, "sTexture"), 0); // Point the sTexture sampler at texture unit 0

resolution[0] = (float) mDataWidth;

resolution[1] = (float) mDataHeight;

glUniform2fv(mResoulutionPointer, 1, resolution);

break;

case OPENGL_VIDEO_RENDERER::RenderProgram::DRAW_TEXTURE:

glActiveTexture(GL_TEXTURE0); // Activate texture unit 0

glBindTexture(GL_TEXTURE_2D, mInputTexturesArrayPointer); // Bind the input texture (for example an FBO texture) to unit 0

glUniform1i(glGetUniformLocation(mImageProgram.programHandle, "sTexture"), 0); // Point the sTexture sampler at texture unit 0

resolution[0] = (float) mInputTextureWidth;

resolution[1] = (float) mInputTextureHeight;

glUniform2fv(mResoulutionPointer, 1, resolution);

break;

}

int longLen = mLutWidth > mLutHeight ? mLutWidth : mLutHeight;

if (mLutPixels) {

if (mHadLoadLut) {

glBindTexture(GL_TEXTURE_2D_ARRAY, mLutTexutresPointers[0]);

glTexImage3D(GL_TEXTURE_2D_ARRAY, 0, GL_RGBA, 0, 0, 0, 0, GL_RGBA, GL_UNSIGNED_BYTE,

nullptr);

glDeleteTextures(1, mLutTexutresPointers);

}

glGenTextures(1, mLutTexutresPointers);

glBindTexture(GL_TEXTURE_2D_ARRAY, mLutTexutresPointers[0]);

glTexParameterf(GL_TEXTURE_2D_ARRAY, GL_TEXTURE_WRAP_S, GL_CLAMP_TO_EDGE);

glTexParameterf(GL_TEXTURE_2D_ARRAY, GL_TEXTURE_WRAP_T, GL_CLAMP_TO_EDGE);

glTexParameterf(GL_TEXTURE_2D_ARRAY, GL_TEXTURE_WRAP_R, GL_CLAMP_TO_EDGE);

glTexParameteri(GL_TEXTURE_2D_ARRAY, GL_TEXTURE_MIN_FILTER, GL_LINEAR);

glTexParameteri(GL_TEXTURE_2D_ARRAY, GL_TEXTURE_MAG_FILTER, GL_LINEAR);

glTexImage3D(GL_TEXTURE_2D_ARRAY, 0, GL_RGBA, mLutUnitLen, mLutUnitLen, longLen / mLutUnitLen, 0, GL_RGBA, GL_UNSIGNED_BYTE, mLutPixels);

// LUT data has been uploaded; free the CPU-side copy

free(mLutPixels);

mLutPixels = nullptr;

mHadLoadLut = true;

}

if (mHadLoadLut) {

glActiveTexture(GL_TEXTURE1); // Activate texture unit 1

glBindTexture(GL_TEXTURE_2D_ARRAY, mLutTexutresPointers[0]);

glUniform1i(glGetUniformLocation(mImageProgram.programHandle, "lutTexture"), 1); // Point the lutTexture sampler at texture unit 1

glUniform1f(glGetUniformLocation(mImageProgram.programHandle, "pageSize"), longLen / mLutUnitLen); // Number of LUT pages (layers of the array texture)

}

glDrawArrays(GL_TRIANGLE_STRIP, 0, /*mPointBufferPos / 3*/ 4); // Draw the quad as a triangle strip of 4 vertices (the commented-out expression divided the float count by 3 because each vertex consists of 3 floats: x, y, z)

glDisableVertexAttribArray(mObjectPositionPointer);

glDisableVertexAttribArray(mObjectVertColorArrayPointer);

glDisableVertexAttribArray(mVTexCoordPointer);

}

}
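The fragment shader selects the LUT page from the red channel and the position inside the page from blue and green, and drawTo derives the page count from the longer side of the LUT image. A CPU sketch of that addressing with nearest-texel sampling follows. It assumes the LUT pixels are stored as a vertical strip of square unitLen x unitLen RGBA pages; the struct and function names are hypothetical, and the GPU additionally filters linearly inside a page.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

struct Rgba {
    std::uint8_t r, g, b, a;
};

// Hypothetical CPU model of the LUT array texture.
struct LutPages {
    int unitLen = 0;          // side length of one square page, in texels
    int pages = 0;            // number of pages (array layers)
    std::vector<Rgba> texels; // pages * unitLen * unitLen entries, page by page
};

// Page count as computed in drawTo: the longer image side divided by unitLen.
inline int lutPageCount(int lutWidth, int lutHeight, int unitLen) {
    assert(unitLen > 0);
    return std::max(lutWidth, lutHeight) / unitLen;
}

// Same clamp as in the fragment shader: keeps a channel inside [0.01, 0.99].
inline float clampChannel(float v) {
    return std::min(0.99f, std::max(0.01f, v));
}

// Nearest-texel model of texture(lutTexture, vec3(b, g, r * (pageSize - 1.0))).
inline Rgba lutLookupNearest(const LutPages &lut, float r, float g, float b) {
    assert(lut.unitLen > 0 && lut.pages > 0);
    assert(lut.texels.size() ==
           static_cast<std::size_t>(lut.pages) * lut.unitLen * lut.unitLen);
    r = clampChannel(r);
    g = clampChannel(g);
    b = clampChannel(b);
    // Blue and green are normalized coordinates inside the page.
    const int x = std::min(lut.unitLen - 1, static_cast<int>(b * lut.unitLen));
    const int y = std::min(lut.unitLen - 1, static_cast<int>(g * lut.unitLen));
    // Red is scaled to a layer index and rounded to the nearest layer.
    const int layer = std::min(
        lut.pages - 1, static_cast<int>(std::floor(r * (lut.pages - 1) + 0.5f)));
    return lut.texels[(static_cast<std::size_t>(layer) * lut.unitLen + y) * lut.unitLen + x];
}
```

Because the layer index is rounded, neighbouring red values share a page; the number of pages therefore limits the precision of the red channel in the result.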

void RenderProgramFilter::destroy() {

if (!mIsDestroyed) {

// Release the video memory occupied by the texture

glBindTexture(GL_TEXTURE_2D, 0);

glTexImage2D(GL_TEXTURE_2D, 0, GL_LUMINANCE, 0, 0, 0, GL_LUMINANCE, GL_UNSIGNED_BYTE, nullptr);

glDeleteTextures(1, mTexturePointers);

glBindTexture(GL_TEXTURE_2D_ARRAY, mLutTexutresPointers[0]);

glTexImage3D(GL_TEXTURE_2D_ARRAY, 0, GL_RGBA, 0, 0, 0, 0, GL_RGBA, GL_UNSIGNED_BYTE,

nullptr);

glDeleteTextures(1, mLutTexutresPointers);

// Delete the unused shaderprogram

destroyProgram(mImageProgram);

}

mIsDestroyed = true;

}

Here is a brief look at how Layer is used together with the render programs I wrote:

1、 Create a Layer and pass in the texture or data to be processed:

Layer *layer = new Layer(-1, -mRatio, 0, 2, mRatio * 2, mWidth, mHeight); // Create a full-screen layer

// Load data:

LOGI("cjztest, Java_com_opengldecoder_jnibridge_JniBridge_addFullContainerLayer containerW:%d, containerH:%d, w:%d, h:%d", mWidth, mHeight, textureWidthAndHeightPointer[0], textureWidthAndHeightPointer[1]);

layer->loadTexture(texturePointer, textureWidthAndHeightPointer[0], textureWidthAndHeightPointer[1]);

layer->loadData((char *) dataPointer, dataWidthAndHeightPointer[0], dataWidthAndHeightPointer[1], dataPixelFormat, 0);

if (mLayerList) {

struct ListElement* cursor = mLayerList;

while (cursor->next) {

cursor = cursor->next;

}

cursor->next = (struct ListElement*) malloc(sizeof(struct ListElement));

cursor->next->layer = layer;

cursor->next->next = nullptr;

} else {

mLayerList = (struct ListElement*) malloc(sizeof(struct ListElement));

mLayerList->layer = layer;

mLayerList->next = nullptr;

}

2、 Add the desired fragShader render programs to the layer:

RenderProgramFilter *renderProgramFilter = new RenderProgramFilter();

renderProgramFilter->createRender(-1, -mRatio, 0, 2,

mRatio * 2,

mWidth,

mHeight);

resultProgram = renderProgramFilter;

layer->addRenderProgram(resultProgram);

3、 Pass in the index of the framebuffer used for on-screen presentation and render:

// Clear the previous frame so that no stale image remains:

glClearColor(0.0, 0.0, 0.0, 0.0); // Set the clear color before clearing

glClear(GL_DEPTH_BUFFER_BIT | GL_COLOR_BUFFER_BIT); // Clear the screen

// Traverse the layers and render each one

if (mLayerList) {

struct ListElement* cursor = mLayerList;

while (cursor) {

cursor->layer->drawTo(mCameraMatrix, mProjMatrix, fboPointer, fboWidth, fboHeight, Layer::DRAW_TEXTURE);

cursor = cursor->next;

}

}

Demo code address (the link is given at the end of this article):

The actual result: this demo enables two fragShader passes in turn, convolution and the LUT filter:

Video: rendering pipeline and LUT filter implemented with OpenGL ES on Android, effect demonstration

With that, a simple OpenGL framework with multiple layers and multiple rendering passes is in place. It can be extended into video editing or image processing software.
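The chaining idea behind this framework, where each pass renders into an FBO and the next pass samples that FBO's texture, can be sketched without any GL calls. The planner below alternates between two off-screen targets and sends the last pass to the on-screen framebuffer. All names (PassTarget, PassStep, planPasses) are hypothetical, and the demo itself may organise its FBOs differently.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// An off-screen target: a framebuffer object and the texture attached to it.
struct PassTarget {
    unsigned int fbo;
    unsigned int texture;
};

// What a single pass reads from and writes to.
struct PassStep {
    unsigned int inputTexture;
    unsigned int outputFbo;
};

// Plans `passCount` passes. Pass 0 samples `sourceTexture`; every later pass
// samples the texture written by the pass before it. Passes alternate between
// targets `a` and `b` (ping-pong) so that no pass reads the texture it is
// writing, and the final pass writes to `screenFbo` (0 = default framebuffer).
inline std::vector<PassStep> planPasses(std::size_t passCount,
                                        unsigned int sourceTexture,
                                        PassTarget a, PassTarget b,
                                        unsigned int screenFbo = 0) {
    assert(a.fbo != b.fbo && a.texture != b.texture);
    std::vector<PassStep> steps;
    steps.reserve(passCount);
    unsigned int input = sourceTexture;
    for (std::size_t i = 0; i < passCount; ++i) {
        const bool last = (i + 1 == passCount);
        const PassTarget &target = (i % 2 == 0) ? a : b;
        steps.push_back({input, last ? screenFbo : target.fbo});
        input = target.texture; // the next pass samples what this pass wrote
    }
    return steps;
}
```

In terms of the class shown earlier, inputTexture corresponds to what loadTexture receives and outputFbo to the outputFBOPointer argument of drawTo. Reordering the filters only changes the order in which the render programs consume these steps; the plan itself stays the same.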

Copyright notice

This article was written by [cjzcjl]. Please include a link to the original when reposting. Thank you.

https://yzsam.com/2022/04/202204210600587804.html

边栏推荐

- 坐标转换WGS-84 转 GCJ-02 和 GCJ-02转WGS-84

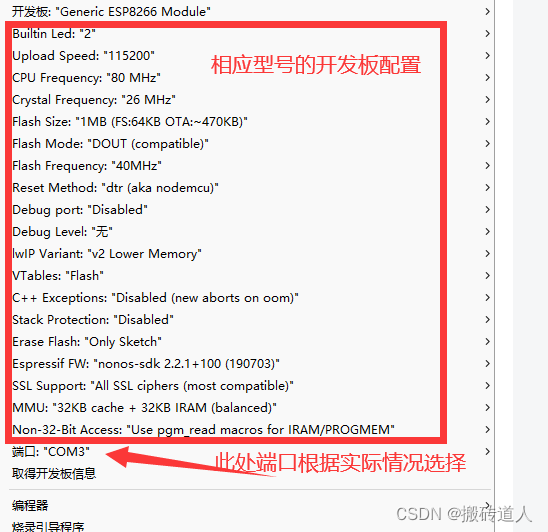

- Esp01s with Arduino development environment

- JVM的类加载过程

- Openlayers 5.0 loading ArcGIS Server slice service

- Simple use of viewbinding

- SQL server requires to query the information of all employees with surname 'Wang'

- arcgis js api dojoConfig配置

- I just want to leave a note for myself

- How about CICC wealth? Is it safe to open an account up there

- The flyer realizes page Jump through routing routes

猜你喜欢

Esp01s with Arduino development environment

![[record] typeerror: this getOptions is not a function](/img/c9/0d92891b6beec3d6085bd3da516f00.png)

[record] typeerror: this getOptions is not a function

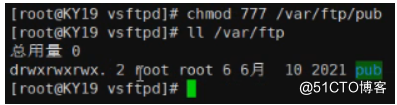

FTP、ssh远程访问及控制

【历史上的今天】4 月 23 日:YouTube 上传第一个视频;网易云音乐正式上线;数字音频播放器的发明者出生

12 examples to consolidate promise Foundation

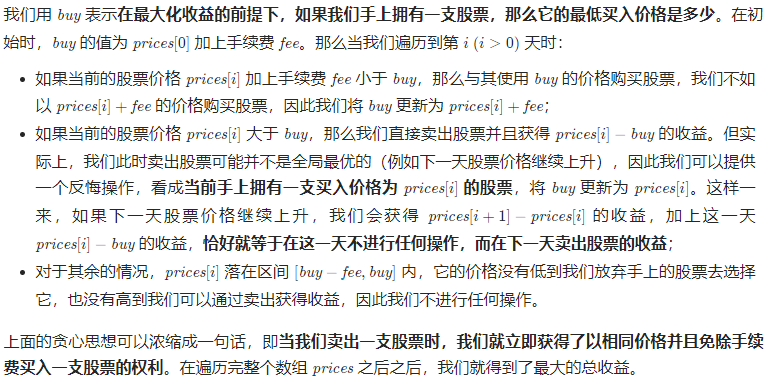

2022.04.23(LC_714_买卖股票的最佳时机含手续费)

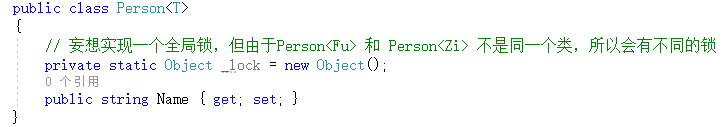

c#:泛型反射

Raspberry pie uses root operation, and the graphical interface uses its own file manager

简化路径(力扣71)

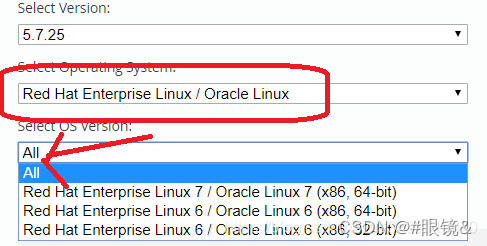

MySQL Téléchargement et installation de la version Linux

随机推荐

JS controls the file type and size when uploading files

arcgis js api dojoConfig配置

Use of fluent custom fonts and pictures

微搭低代码零基础入门课(第三课)

SSDB基础3

MySQL Téléchargement et installation de la version Linux

On the forced conversion of C language pointer

WebView opens H5 video and displays gray background or black triangle button. Problem solved

在渤海期货办理开户安全吗。

[report] Microsoft: application of deep learning methods in speech enhancement

PostgreSQL

std::stoi stol stoul stoll stof stod

White screen processing method of fulter startup page

Openlayers 5.0 reload the map when the map container size changes

Tencent cloud GPU best practices - remote development training using jupyter pycharm

Raspberry pie uses root operation, and the graphical interface uses its own file manager

Codeforces Round #784 (Div. 4)

C1000k TCP connection upper limit test 1

Using bafayun to control the computer

机器学习目录

https://gitee.com/cjzcjl/learnOpenGLDemo/tree/main/app/src/main/cpp/opengl_decoder
