当前位置:网站首页>Some experience in using MySQL / tidb database [slowly updating...]

Some experience in using MySQL / tidb database [slowly updating...]

2022-04-23 05:04:00 【canger_】

Use MySQL and Tidb As a summary of some experiences encountered in storing databases

Insert

Batch insert

- Splicing sql sentence , Send multiple messages at one time ( Pay attention to the of splicing sql size , Databases generally have limitations sql Statement size )

REPLACE INTO

The primary key will be updated , In essence, delete first and then add

INSERT INTO xxx ON DUPLICATE KEY

If there is no record, add , If yes, update the corresponding column on the original row

Insert duplicate data

package main

import (

"database/sql"

"github.com/sirupsen/logrus"

"gorm.io/driver/mysql"

"gorm.io/gorm"

"gorm.io/gorm/schema"

)

type Camera struct {

ID uint `gorm:"primaryKey"`

Name string `gorm:"not null;type:varchar(32)"`

}

func main() {

db, err := gorm.Open(mysql.Open("root:root@tcp(127.0.0.1:3306)/testDB?charset=utf8mb4&parseTime=true&loc=Local"), &gorm.Config{

NamingStrategy: schema.NamingStrategy{

SingularTable: false,

},

})

if err != nil {

return

}

db.AutoMigrate(&Camera{

})

ch := make(chan struct{

})

for i := 0; i < 5; i++ {

go func(index int) {

db.Debug().Transaction(func(tx *gorm.DB) error {

ca := []Camera{

}

if tx.Raw("SELECT * FROM cameras WHERE name='camera_TEST'").Scan(&ca).RowsAffected == 0 {

return tx.Create(&Camera{

Name: "camera_TEST",

}).Error

}

return nil

}, &sql.TxOptions{

Isolation: sql.LevelRepeatableRead})

ch <- struct{

}{

}

}(i)

}

for i := 0; i < 5; i++ {

<-ch

}

}

The inserted data does not unique Fields and primary keys , In high concurrency scenarios , Only by first select Query whether the record exists , Nonexistence insert Transaction insert , There is a high probability that duplicate data will be inserted . resolvent :

1、 Raise the database transaction isolation level to serial level ——( Basically not considered

2、 Create... For the inserted data unique constraint ( Need to deal with duplicate entry error

type Camera struct {

ID uint `gorm:"primaryKey"`

Name string `gorm:"not null;type:varchar(32);uniqueIndex"`

}

3、 Application layer to solve , For example, the insertion logic is unified as queue insertion ; Or lock it , The lock key by unique Field of , Here is a simple example of locking

type Camera struct {

ID uint `gorm:"primaryKey"`

Name string `gorm:"not null"`

}

func main() {

db, err := gorm.Open(mysql.Open("root:root@tcp(127.0.0.1:3306)/testDB?charset=utf8mb4&parseTime=true&loc=Local"), &gorm.Config{

NamingStrategy: schema.NamingStrategy{

SingularTable: false,

},

})

if err != nil {

return

}

rdb := redis.NewFailoverClient(&redis.FailoverOptions{

MasterName: "mymaster",

SentinelAddrs: []string{

"127.0.0.1:26379"},

})

if err := rdb.Ping().Err(); err != nil {

panic(err)

}

db.AutoMigrate(&Camera{

})

ch := make(chan struct{

})

for i := 0; i < 5; i++ {

go func(index int) {

defer func() {

ch <- struct{

}{

}

}()

if lock := rdb.SetNX("c1", "random", 5*time.Second).Val(); !lock {

return

}

db.Debug().Transaction(func(tx *gorm.DB) error {

ca := []Camera{

}

if tx.Raw("SELECT * FROM cameras WHERE name IN ('c1')").Scan(&ca).RowsAffected == 0 {

var cs []Camera

cs = append(cs, Camera{

Name: "c1"})

return tx.Create(&cs).Error

}

return nil

}, &sql.TxOptions{

Isolation: sql.LevelRepeatableRead})

rdb.Del("c1")

}(i)

}

for i := 0; i < 5; i++ {

<-ch

}

}

Delete

tidb Deletion of big data

When deleting a large amount of data , It is recommended to use Delete from t where xx limit 5000(xx It is recommended to meet the business filtering logic , Try to add strong filtering index columns or directly use the primary key selection range , Such as id >= 5000n+m and id <= 5000(n+1)+m Such a plan , Delete by looping , use Affected Rows == 0 As a condition for the end of the cycle , This avoids the limitation of transaction size ( otherwise Tidb Will be submitted to the Transaction too large). If the amount of data deleted at one time is very large , This cycle will be slower and slower , Because each deletion is traversed from front to back , After the previous deletion , A lot of deletion marks will remain in a short time ( It will be followed by GC fall ), Affect the latter Delete sentence . If possible , Make a proposal to Where Condition refinement . For example, delete the data of one day as follows :

for i from 0 to 23:

while affected_rows > 0:

delete * from t where insert_time >= i:00:00 and insert_time < (i+1):00:00 limit 5000;

affected_rows = select affected_rows()

Soft delete and hard delete

The current practice of soft deletion

- Add... To the table isDeleted bool

- Add... To the table deleted_at timestamp ( It can be for null) (gorm This kind of orm Framework support ,gorm It will be automatically indexed , This index can be deleted in combination with business considerations )

- Store the deleted data in another table ( I've seen some software implemented like this , I feel efficient , Development takes a little time )

Design the principle of soft deletion

- Consider whether soft deletion is necessary , Soft deletion is mainly for data recovery and subsequent statistics , It's not a real deletion ( For example, the product is off the shelf )

- Consider data traffic , The ratio of reading to writing

- The read condition is for where The conditions are complex And it is recommended not to use... If there is a large amount of business deleted_at, You can consider using 3

Inquire about

Indexes

MySQL The index of

mysql The index of , And this is basically innodb, Use b+ Tree as the underlying data structure , Primary key index b+ In the tree ,key For primary key value , All the data is stored in b+ The leaf node of the tree value in ; Other indexes that are not primary keys , Also called secondary index ( Secondary index ), Its data structure is also a b+ Trees , But the value stored in the leaf node is the primary key value , Therefore, the secondary index query data is divided into two steps , The first step is to find the primary key value , The second step is to find specific data through the primary key

Tidb The index of

tidb The underlying data structure of the index is LSM-Tree

Use of index

- Use index reasonably , Don't invalidate the index , And don't build too many indexes ( There are many cases of index failure , Don't go into details

- Reasonably cover the index for query , Reduce secondary addressing

- Tables must have primary keys , The query speed through the primary key is the fastest

- Too many indexes may affect the optimizer's choice of indexes , You can force the use of index queries when not necessary

force index(idx_name)

other

- Unnecessary columns do not need to be returned , Can't you

*No* - join Try to drive large tables from small tables for join queries

- can limit Just limit, Reduce unnecessary data return

to update

for update

For scenarios with high data consistency requirements , Use an exclusive lock , Try to match the primary key /unique Indexes / Create a conditional query for the field where the general index is located , In this way, the granularity of locks can be controlled at the row level .

Investigation and Analysis

Slow log

tidb Slow log

select query_time, query, user

from information_schema.slow_query

where is_internal = false -- exclude TiDB Internal slow query SQL

order by query_time desc;

Explain

See if the index is used

Optimize

in the light of mysql

- Partition table

- vertical / Horizontal sub table

版权声明

本文为[canger_]所创,转载请带上原文链接,感谢

https://yzsam.com/2022/04/202204220552063370.html

边栏推荐

- MySQL memo (for your own query)

- Learning Android from scratch -- Introduction

- JS determines whether the numeric string contains characters

- Innovation training (XI) airline ticket crawling company information

- MySQL views the SQL statement details executed by the optimizer

- [database] MySQL multi table query (I)

- A trinomial expression that causes a null pointer

- Acid of MySQL transaction

- Day. JS common methods

- Innovation training (VI) routing

猜你喜欢

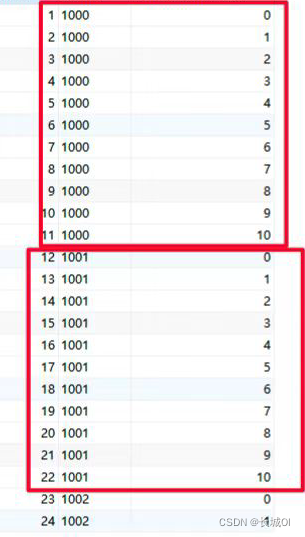

MySQL circularly adds sequence numbers according to the values of a column

Customize the navigation bar at the top of wechat applet (adaptive wechat capsule button, flex layout)

What are the redis data types

【数据库】MySQL基本操作(基操~)

![View, modify and delete [database] table](/img/a2/fcb38f2006772a1ec45cab520620ba.png)

View, modify and delete [database] table

Learning Android from scratch -- Introduction

Repair of self calibration SPC failure of Tektronix oscilloscope dpo3054

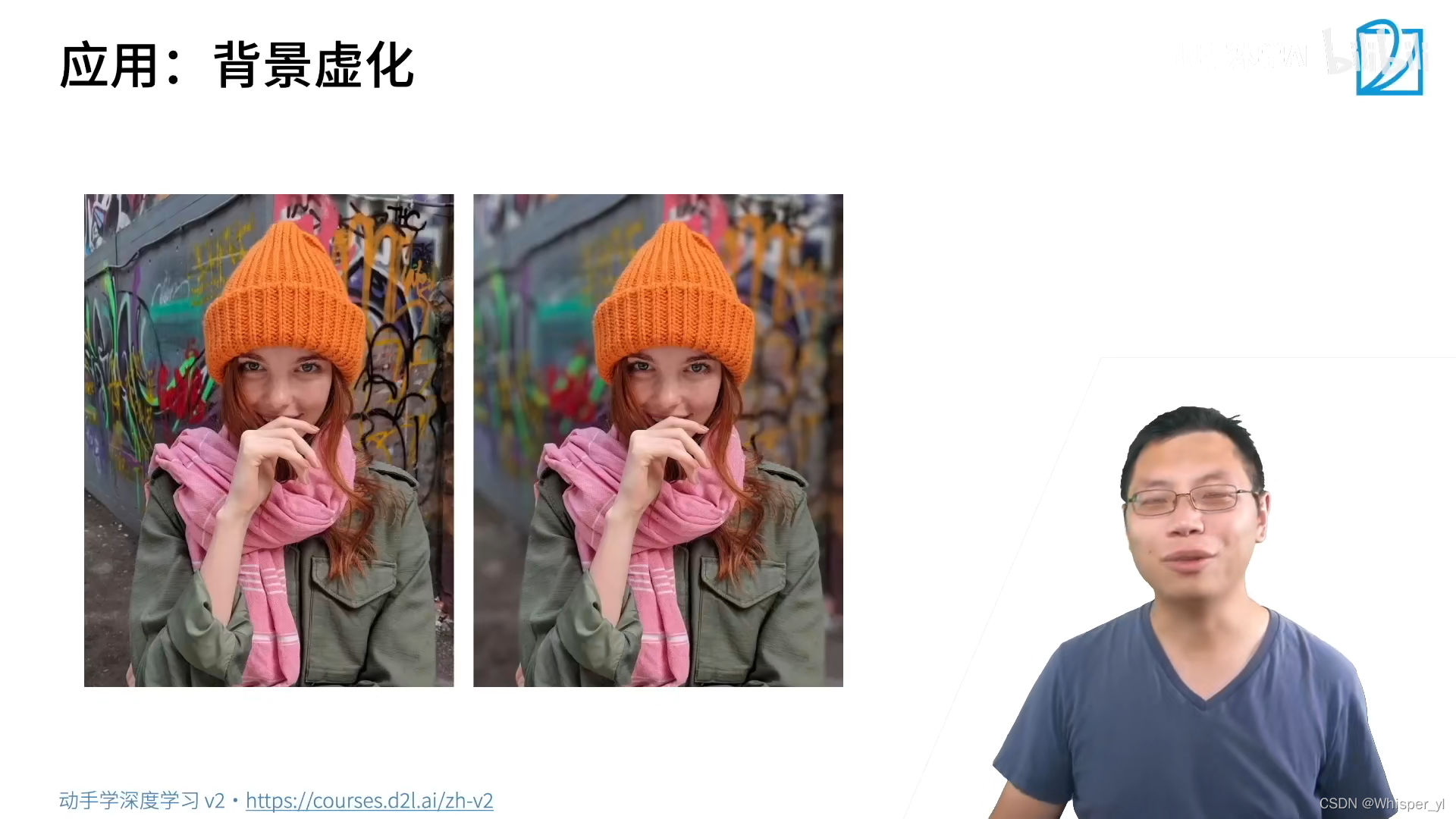

深度学习笔记 —— 语义分割和数据集

《2021多多阅读报告》发布,95后、00后图书消费潜力攀升

MySQL 慢查询

随机推荐

Pixel 5 5g unlocking tutorial (including unlocking BL, installing edxposed and root)

API slow interface analysis

MySQL 慢查询

MySQL slow query

C. Tree infection (simulation + greed)

Making message board with PHP + MySQL

跨境电商 | Facebook 和 Instagram:哪个社交媒体更适合你?

深度学习笔记 —— 微调

Basic concepts of multithreading (concurrency and parallelism, threads and processes) and entry cases

Graduation project

Sword finger offer: symmetric binary tree (recursive iteration leetcode 101)

HRegionServer的详解

《2021多多阅读报告》发布,95后、00后图书消费潜力攀升

直播带货表格模板-自动显示图片-自动关联系列商品

PHP counts the number of files in the specified folder

leetcode——启发式搜索

【数据库】MySQL单表查询

2022/4/22

【数据库】MySQL多表查询(一)

How to exit VIM